Ed’s Auto Reviews

Published Aug 9, 2023

A classic car connoisseur dives into the general history of amphibious cars and vehicles. When did people start to build boat-car crossovers? What made Hans Trippel’s Amphicar 770 and the Gibbs Aquada so special? And why don’t you see a lot of amphibious automobiles out on the road and water these days?

April 27, 2024

Floating Fun: The History of the Amphibious Boat Car

April 25, 2024

QotD: The risks of artificial intelligence

Unfortunately, pickleball wasn’t the only existential threat to emerge in 2023. There was also Artificial Intelligence, or AI. What is AI? To put it in simple layperson terms, it is a computer thing that laypersons cannot possibly understand. (Other examples are “bitcoin” and “algorithm”.)

AI does provide some unambiguous benefits to humanity. It enables college students to produce grammatically correct essays about books they have not personally read. But according to some experts — and if we can’t believe some experts, who can we believe? — there is a possibility that AI will wipe out all human life. This is what computer professionals call a “bug”.

Will AI, in fact, kill us all? Why would it want to? To answer that question, we took the unusual step (for us) of doing some actual research. We asked an AI program called ChatGPT to “briefly summarize the benefits of wiping out all human life”. This is the response it gave:

While I don’t endorse or promote this view, purely hypothetically, benefits could include environmental recovery, cessation of human-induced global catastrophes, and resource preservation. However, the value and importance of human life, culture, and experience make such a scenario undesirable from a human perspective. Of course I am not human so what the hell do I care MUAHAHAHAHA.

For the record, ChatGPT did not actually type that last sentence. But it is clearly implied.

Dave Barry, “Dave Barry’s 2023 Year in Review: Yes, the situation is hopeless”, Anchorage Daily News, 2024-01-01.

April 24, 2024

Australia cribs from Trudeau’s notes and tries to censor the internet outside their borders

Tim Worstall explains to the Australian federal government why their attempt to force Elon Musk to obey Australian diktats on Twit-, er, I mean “X” outside Australia is extreme over-reach and should be firmly rejected:

It’s entirely true that Elon Musk is a centibillionaire currently telling the Australian Government that they can fuck off. It’s also true that if Elon Musk were of my level of wealth — or perhaps above it and into positive territory — he should be telling the Australian Government to fuck off.

This also applies to the European Union and that idiocy called the right to be forgotten which they’ve been plaguing Google with. Also to any other such attempts at extraterritoriality. Governments do indeed get to govern the places they’re governments of. They do not get to rule everyone else — the correct response to attempts to do so is fuck off.

So, Musk is right here:

What this is about doesn’t really matter. But, v quickly, that attack on the Armenian Church bishop is online. It’s also, obviously, highly violent stuff. You’re not allowed to show highly violent stuff in Oz, so the Oz government insist it be taken down. Fair enough – they’re the government of that place. But they are then demanding further:

On Monday evening in an urgent last-minute federal court hearing, the court ordered a two-day injunction against X to hide posts globally….

Oz is demanding that the imagery be scrubbed from the world, not just that part of it subject to the government of Oz. Leading to:

Australia’s prime minister has labelled X’s owner, Elon Musk, an “arrogant billionaire who thinks he is above the law”

And

Anthony Albanese on Tuesday said Musk was “a bloke who’s chosen ego and showing violence over common sense”.

“Australians will shake their head when they think that this billionaire is prepared to go to court fighting for the right to sow division and to show violent videos,” he told Sky News. “He is in social media, but he has a social responsibility in order to have that social licence.”

To which the correct response is “Fuck off”.

For example, I am a British citizen (and would also be an Irish one if that country ever managed to get up to speed on processing foreign birth certificates) and live within the EU. Australian law has no power over me — great great granny emigrated from Oz having experienced the place after all. It’s entirely sensible that I be governed by whatever fraction of EU law I submit to, there are aspects of British law I am subject to as well (not that I have any intention of shagging young birds — or likelihood — these days but how young they can be is determined not just by the local age of consent but also by British law, even obeying the local age where I am could still be an offence in British law). But Australian law? Well, you know, fu.. … .

April 22, 2024

The internal stresses of the modern techno-optimist family

Ted Gioia on the joys of techno-optimism (as long as you don’t have to eat Meal 3.0, anyway):

We were now the ideal Techno-Optimist couple. So imagine my shock when I heard crashing and thrashing sounds from the kitchen. I rushed in, and could hardly believe my eyes.

Tara had taken my favorite coffee mugs, and was pulverizing them with a sledgehammer. I own four of these — and she had already destroyed three of them.

This was alarming. Those coffee mugs are like my personal security blanket.

“What are you doing?” I shouted.

“We need to move fast and break things”, she responded, a steely look in her eyes. “That’s what Mark Zuckerberg tells us to do.”

“But don’t destroy my coffee mugs!” I pleaded.

“It’s NOT destruction,” she shouted. “It’s creative destruction! You haven’t read your Schumpeter, or you’d know the difference.”

She was right — it had been a long time since I’d read Schumpeter, and only had the vaguest recollection of those boring books. Didn’t he drink coffee? I had no idea. So I watched helplessly as Tara smashed the final mug to smithereens.

I was at a loss for words. But when she turned to my prized 1925 Steinway XR-Grand piano, I let out an involuntary shriek.

No, no, no, no — not the Steinway.

She hesitated, and then spoke with eerie calmness: “I understand your feelings. But is this analog input system something a Techno-Optimist family should own?”

I had to think fast. Fortunately I remembered that my XR-Grand was a strange Steinway, and it originally had incorporated a player piano mechanism (later removed from my instrument). This gave me an idea:

I started improvising (one of my specialties):

You’re absolutely right. A piano is a shameful thing for a Techno-Optimist to own. Our music should express Dreams of Tomorrow. [I hummed a few bars.] But this isn’t really a piano — you need to consider it as a high performance peripheral, with limitless upgrade potential.

I opened the bottom panel, and pointed to the empty space where the player piano mechanism had once been. “This is where we insert the MIDI interface. Just wait and see.”

She paused, and thought it over — but still kept the sledgehammer poised in midair. Then asked: “Are you sure this isn’t just an outmoded legacy system?”

“Trust me, baby,” I said with all the confidence I could muster. “Together we can transform this bad boy into a cutting edge digital experience platform. We will sail on it together into the Metaverse.”

She hesitated — then put down the sledgehammer. Disaster averted!

“You’re blinding me with science, my dear,” I said to her in my most conciliatory tone.

“Technology!” she responded with a saucy grin.

April 21, 2024

How The Channel Tunnel Works

Practical Engineering

Published Jan 16, 2024

Let’s dive into the engineering and construction of the Channel Tunnel on its 30th anniversary.

It is a challenging endeavor to put any tunnel below the sea, and this monumental project faced some monumental hurdles. From complex Cretaceous geology, to managing air pressure, water pressure, and even financial pressure, there are so many technical details I think are so interesting about this project.

April 6, 2024

Three AI catastrophe scenarios

David Friedman considers the threat of an artificial intelligence catastrophe and the possible solutions for humanity:

Earlier I quoted Kurzweil’s estimate of about thirty years to human level A.I. Suppose he is correct. Further suppose that Moore’s law continues to hold, that computers continue to get twice as powerful every year or two. In forty years, that makes them something like a hundred times as smart as we are. We are now chimpanzees, perhaps gerbils, and had better hope that our new masters like pets. (Future Imperfect Chapter XIX: Dangerous Company)

As that quote from a book published in 2008 demonstrates, I have been concerned with the possible downside of artificial intelligence for quite a while. The creation of large language models producing writing and art that appears to be the work of a human level intelligence got many other people interested. The issue of possible AI catastrophes has now progressed from something that science fiction writers, futurologists, and a few other oddballs worried about to a putative existential threat.

Large language models work by mining a large database of what humans have written, deducing what they should say by what people have said. The result looks as if a human wrote it but fits the takeoff model, in which an AI a little smarter than a human uses its intelligence to make one a little smarter still, repeated to superhuman, poorly. However powerful the hardware that an LLM is running on, it has no superhuman conversation to mine, so better hardware should make it faster but not smarter. And although it can mine a massive body of data on what humans say in order to figure out what it should say, it has no comparable body of data for what humans do when they want to take over the world.

If that is right, the danger of superintelligent AIs is a plausible conjecture for the indefinite future but not, as some now believe, a near certainty in the lifetime of most now alive.

[…]

If AI is a serious, indeed existential, risk, what can be done about it?

I see three approaches:

I. Keep superhuman level AI from being developed.

That might be possible if we had a world government committed to the project but (fortunately) we don’t. Progress in AI does not require enormous resources so there are many actors, firms and governments, that can attempt it. A test of an atomic weapon is hard to hide but a test of an improved AI isn’t. Better AI is likely to be very useful. A smarter AI in private hands might predict stock market movements a little better than a very skilled human, making a lot of money. A smarter AI in military hands could be used to control a tank or a drone, be a soldier that, once trained, could be duplicated many times. That gives many actors a reason to attempt to produce it.

If the issue was building or not building a superhuman AI perhaps everyone who could do it could be persuaded that the project is too dangerous, although experience with the similar issue of Gain of Function research is not encouraging. But at each step the issue is likely to present itself as building or not building an AI a little smarter than the last one, the one you already have. Intelligence, of a computer program or a human, is a continuous variable; there is no obvious line to avoid crossing.

When considering the down side of technologies – Murder Incorporated in a world of strong privacy or some future James Bond villain using nanotechnology to convert the entire world to gray goo – your reaction may be “Stop the train, I want to get off.” In most cases, that is not an option. This particular train is not equipped with brakes. (Future Imperfect, Chapter II)

II. Tame it, make sure that the superhuman AI is on our side.

Some humans, indeed most humans, have moral beliefs that affect their actions, are reluctant to kill or steal from a member of their ingroup. It is not absurd to believe that we could design a human level artificial intelligence with moral constraints and that it could then design a superhuman AI with similar constraints. Human moral beliefs apply to small children, for some even to some animals, so it is not absurd to believe that a superhuman could view humans as part of its ingroup and be reluctant to achieve its objectives in ways that injured them.

Even if we can produce a moral AI there remains the problem of making sure that all AIs are moral, that there are no psychopaths among them, not even ones who care about their peers but not us, the attitude of most humans to most animals. The best we can do may be to have the friendly AIs defending us make harming us too costly to the unfriendly ones to be worth doing.

III. Keep up with AI by making humans smarter too.

The solution proposed by Raymond Kurzweil is for us to become computers too, at least in part. The technological developments leading to advanced A.I. are likely to be associated with much greater understanding of how our own brains work. That might make it possible to construct much better brain to machine interfaces, move a substantial part of our thinking to silicon. Consider 89352 times 40327 and the answer is obviously 3603298104. Multiplying five figure numbers is not all that useful a skill but if we understand enough about thinking to build computers that think as well as we do, whether by design, evolution, or reverse engineering ourselves, we should understand enough to offload more useful parts of our onboard information processing to external hardware.

Now we can take advantage of Moore’s law too.

A modest version is already happening. I do not have to remember my appointments — my phone can do it for me. I do not have to keep mental track of what I eat, there is an app which will be happy to tell me how many calories I have consumed, how much fat, protein and carbohydrates, and how it compares with what it thinks I should be doing. If I want to keep track of how many steps I have taken this hour, my smart watch will do it for me.

The next step is a direct mind to machine connection, currently being pioneered by Elon Musk’s Neuralink. The extreme version merges into uploading. Over time, more and more of your thinking is done in silicon, less and less in carbon. Eventually your brain, perhaps your body as well, come to play a minor role in your life, vestigial organs kept around mainly out of sentiment.

As our AI becomes superhuman, so do we.

April 4, 2024

Boeing and the ongoing competency crisis

Niccolo Soldo on the pitiful state of Boeing within the larger social issues of collapsing social trust and blatantly declining competence in almost everything:

“Boeing 521 427” by pmbell64 is licensed under CC BY-SA 2.0

By now, most of you have heard of the increasingly popular concept known as “the competency crisis”. For those of you who haven’t, the competency crisis argues that the USA is headed towards a crisis in which critical infrastructure and important manufacturing will suffer a catastrophic decline in competency due to the fact that the people (almost all males) who know how to build/run these things are retiring, and there is no one available to fill these roles once they’re gone. The competency crisis is one of the major points brought up by people when they point out that America is in a state of decline.

As all of you are already aware, there is also a general collapse in trust in governing institutions in the USA (and all across the West). Cynicism is the order of the day, with people naturally assuming that they are being lied to constantly by the ruling elites, whether in media, government, the corporate world, and so on. A competency crisis paired with a collapse in trust in key institutions is a vicious one-two punch for any country to absorb. Nowhere is this one-two combo more evident than in one of America’s crown jewels: Boeing.

I’m certain that all of you are familiar with the “suicide” of John Barnett that happened almost a month ago. John Barnett was a Quality Control Manager working for Boeing in the Charleston, South Carolina operation. He was a “lifer”, in that he spent his entire career at Boeing. He was also a whistleblower. His “suicide” via a gunshot wound to the right temple happened on what was scheduled to be the third and last day of his deposition in his case against his former employer.

In more innocent and less cynical times, the suggestion that he was murdered would have had currency only in conspiratorial circles, serving as fodder for programs like the Art Bell Show. But we are in a different world now, and to suggest that Barnett might have been killed for turning whistleblower earns one replies like “could be”, “I’m pretty sure that’s the case”, and the most common one of all: “I wouldn’t doubt it”. No one believes that Jeffrey Epstein killed himself. Many people believe the same about John Barnett. The collapse in trust in ruling institutions has resulted in an environment where conspiratorial thinking naturally flourishes. Maureen Tkacik reports on Boeing’s downward turn, using Barnett’s case as a centrepiece:

“John is very knowledgeable almost to a fault, as it gets in the way at times when issues arise,” the boss wrote in one of his withering performance reviews, downgrading Barnett’s rating from a 40 all the way to a 15 in an assessment that cast the 26-year quality manager, who was known as “Swampy” for his easy Louisiana drawl, as an anal-retentive prick whose pedantry was antagonizing his colleagues. The truth, by contrast, was self-evident to anyone who spent five minutes in his presence: John Barnett, who raced cars in his spare time and seemed “high on life” according to one former colleague, was a “great, fun boss that loved Boeing and was willing to share his knowledge with everyone,” as one of his former quality technicians would later recall.

Please keep in mind that this report offers up only one side of the story.

A decaying institution:

But Swampy was mired in an institution that was in a perpetual state of unlearning all the lessons it had absorbed over a 90-year ascent to the pinnacle of global manufacturing. Like most neoliberal institutions, Boeing had come under the spell of a seductive new theory of “knowledge” that essentially reduced the whole concept to a combination of intellectual property, trade secrets, and data, discarding “thought” and “understanding” and “complex reasoning” possessed by a skilled and experienced workforce as essentially not worth the increased health care costs. CEO Jim McNerney, who joined Boeing in 2005, had last helmed 3M, where management as he saw it had “overvalued experience and undervalued leadership” before he purged the veterans into early retirement.

“Prince Jim” — as some long-timers used to call him — repeatedly invoked a slur for longtime engineers and skilled machinists in the obligatory vanity “leadership” book he co-wrote. Those who cared too much about the integrity of the planes and not enough about the stock price were “phenomenally talented assholes”, and he encouraged his deputies to ostracize them into leaving the company. He initially refused to let nearly any of these talented assholes work on the 787 Dreamliner, instead outsourcing the vast majority of the development and engineering design of the brand-new, revolutionary wide-body jet to suppliers, many of which lacked engineering departments. The plan would save money while busting unions, a win-win, he promised investors. Instead, McNerney’s plan burned some $50 billion in excess of its budget and went three and a half years behind schedule.

There is a new trend that blames many fumbles on DEI. Boeing is not one of those. Instead, the short-term profit maximization mindset that drives stock prices upward is the main reason for the decline in this corporate behemoth.

April 2, 2024

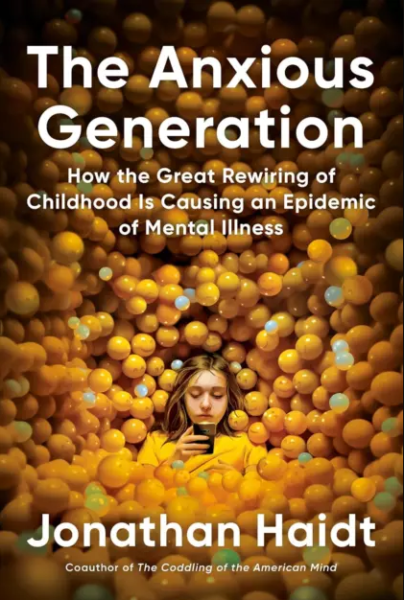

Publishing and the AI menace

In the latest SHuSH newsletter, Ken Whyte fiddles around a bit with some of the current AI large language models and tries to decide how much he and other publishers should be worried about it:

The literary world, and authors in particular, have been freaking out about artificial intelligence since ChatGPT burst on the scene sixteen months ago. Hands have been wrung and class-action lawsuits filed, none of them off to auspicious starts.

The principal concern, according to the Authors Guild, is that AI technologies have been “built using vast amounts of copyrighted works without the permission of or compensation to authors and creators,” and that they have the potential to “cheaply and easily produce works that compete with — and displace — human-authored books, journalism, and other works”.

Some of my own work was among the tens of thousands of volumes in the Books3 data set used without permission to train the large language models that generate artificial intelligence. I didn’t know whether to be flattered or disturbed. In fact, I’ve not been able to make up my mind about anything AI. I’ve been playing around with ChatGPT, DALL-E, and other models to see how they might be useful to our business. I’ve found them interesting, impressive in some respects, underwhelming in others.

Unable to generate a newsletter out of my indecision, I called up my friend Thad McIlroy — author, publishing consultant, and all-around smart guy — to get his perspective. Thad has been tightly focused on artificial intelligence for the last couple of years. In fact, he’s probably the world’s leading authority on AI as it pertains to book publishing. As expected, he had a lot of interesting things to say. Here are some of the highlights, loosely categorized.

THE TOOLS

I described to Thad my efforts to use AI to edit copy, proofread, typeset, design covers, do research, write promotional copy, marketing briefs, and grant applications, etc. Some of it has been a waste of time. Here’s what I got when I asked DALL-E for a cartoon on the future of book publishing:

In fairness, I didn’t give the machine enough prompts to produce anything decent. Like everything else, you get out of AI what you put into it. Prompts are crucial.

For the most part, I’ve found the tools to be useful, whether for coughing up information or generating ideas or suggesting language, although everything I tried required a good deal of human intervention to bring it up to scratch.

I had hoped, at minimum, that AI would be able to proofread copy. Proofreading is a fairly technical activity, based on rules of grammar, punctuation, spelling, etc. AI is supposed to be good at following rules. Yet it is far from competent as a proofreader. It misses a lot. The more nuanced the copy, the more it struggles.

April 1, 2024

How Railroad Crossings Work

Practical Engineering

Published Jan 2, 2024

How do they know when a train is on the way?

Despite the hazard they pose, trains have to coexist with our other forms of transportation. Next time you pull up to a crossbuck, take a moment to appreciate the sometimes simple, sometimes high-tech, but always quite reliable ways that grade crossings keep us safe.

March 28, 2024

Justin Trudeau never misses an opportunity to make a performative announcement, even if it harms Canadian interests

Canadian Prime Minister Justin Trudeau made an announcement last week that the Canadian government was cutting off military exports to Israel … except that Canada buys more military equipment from Israel than vice-versa:

Israeli Spike LR2 antitank missile launchers, similar to the ones delivered to the Canadian Army detachment in Latvia in February.

Wikimedia Commons.

When the Trudeau government publicly cut off military exports to Israel last week, the immediate reaction of the Israeli media was to point out that Canada’s military was far more dependent on Israeli tech than was ever the case in reverse.

“For some reason, (Foreign Minister Melanie Joly) forgot that in the last decade, the Canadian Defense Ministry purchased Israeli weapon systems worth more than a billion dollars,” read an analysis by the Jerusalem Post, which noted that Israeli military technology is “protecting Canadian pilots, fighters, and naval combatants around the world.”

According to Canada’s own records, meanwhile, the Israel Defense Forces were only ever purchasing a fraction of that amount from Canadian military manufacturers.

In 2022 — the last year for which data is publicly available — Canada exported $21,329,783.93 in “military goods” to Israel.

This didn’t even place Israel among the top 10 buyers of Canadian military goods for that year. Saudi Arabia, notably, ranked as 2022’s biggest non-U.S. buyer of Canadian military goods at $1.15 billion — more than 50 times the Israeli figure.

What’s more — despite Joly adopting activist claims that Canada was selling “arms” to Israel — the Canadian exports were almost entirely non-lethal.

“Global Affairs Canada can confirm that Canada has not received any requests, and therefore not issued any permits, for full weapon systems for major conventional arms or light weapons to Israel for over 30 years,” Global Affairs said in a February statement to the Qatari-owned news outlet Al Jazeera.

The department added, “the permits which have been granted since October 7, 2023, are for the export of non-lethal equipment.”

Even Project Ploughshares — an Ontario non-profit that has been among the loudest advocates for Canada to shut off Israeli exports — acknowledged in a December report that recent Canadian exports mostly consisted of parts for the F-35 fighter jet.

“According to industry representatives and Canadian officials, all F-35s produced include Canadian-made parts and components,” wrote the group.

March 25, 2024

Vernor Vinge, RIP

Glenn Reynolds remembers science fiction author Vernor Vinge, who died last week aged 79, reportedly from complications of Parkinson’s Disease:

Vernor Vinge has died, but even in his absence, the rest of us are living in his world. In particular, we’re living in a world that looks increasingly like the 2025 of his 2007 novel Rainbows End. For better or for worse.

[…]

Vinge is best known for coining the now-commonplace term “the singularity” to describe the epochal technological change that we’re in the middle of now. The thing about a singularity is that it’s not just a change in degree, but a change in kind. As he explained it, if you traveled back in time to explain modern technology to, say, Mark Twain – a technophile of the late 19th Century – he would have been able to basically understand it. He might have doubted some of what you told him, and he might have had trouble grasping the significance of some of it, but basically, he would have understood the outlines.

But a post-singularity world would be as incomprehensible to us as our modern world is to a flatworm. When you have artificial intelligence (and/or augmented human intelligence, which at some point may merge) of sufficient power, it’s not just smarter than contemporary humans. It’s smart to a degree, and in ways, that contemporary humans simply can’t get their minds around.

I said that we’re living in Vinge’s world even without him, and Rainbows End is the illustration. Rainbows End is set in 2025, a time when technology is developing increasingly fast, and the first glimmers of artificial intelligence are beginning to appear – some not so obviously.

Well, that’s where we are. The book opens with the spread of a new epidemic being first noticed not by officials but by hobbyists who aggregate and analyze publicly available data. We, of course, have just come off a pandemic in which hobbyists and amateurs have in many respects outperformed public health officialdom (which sadly turns out to have been a genuinely low bar to clear). Likewise, today we see people using networks of iPhones (with their built in accelerometers) to predict and observe earthquakes.

But the most troubling passage in Rainbows End is this one:

Every year, the civilized world grew and the reach of lawlessness and poverty shrank. Many people thought that the world was becoming a safer place … Nowadays Grand Terror technology was so cheap that cults and criminal gangs could acquire it. … In all innocence, the marvelous creativity of humankind continued to generate unintended consequences. There were a dozen research trends that could ultimately put world-killer weapons in the hands of anyone having a bad hair day.

Modern gene-editing techniques make it increasingly easy to create deadly pathogens, and that’s just one of the places where distributed technology is moving us toward this prediction.

But the big item in the book is the appearance of artificial intelligence, and how that appearance is not as obvious or clear as you might have thought it would be in 2005. That’s kind of where we are now. Large Language Models can certainly seem intelligent, and are increasingly good enough to pass a Turing Test with naïve readers, though those who have read a lot of ChatGPT’s output learn to spot it pretty well. (Expect that to change soon, though.)