German government control over what people can say online seems like something Justin Trudeau would love to have (and, in fact, is working toward) here in Canada:

Almost two years ago, on 26 July 2022, a German Twitter user known only as MicLiberal posted a thread that culminated in his criminal trial this week. His is but the latest in a long line of such prosecutions – the tactic our rulers increasingly favour to intimidate and harass those who use their freedom of expression in inconvenient ways.

MicLiberal committed his alleged offence as Germany was still awakening from months of hypervaccination insanity. Science authorities and politicians had spent the winter decrying the “tyranny of the unvaccinated”, demanding that “we have to take care of the unvaccinated, and … make vaccination compulsory”, firing people who protested institutional vaccine mandates on social media and denouncing the unvaccinated for ongoing virus restrictions and Covid deaths. Our neighbour, Austria, even went so far as to impose a specific lockdown on those who refused the Covid vaccines. Culturally and politically, those were the darkest months I have ever lived through; they changed my life forever and I will never forget them.

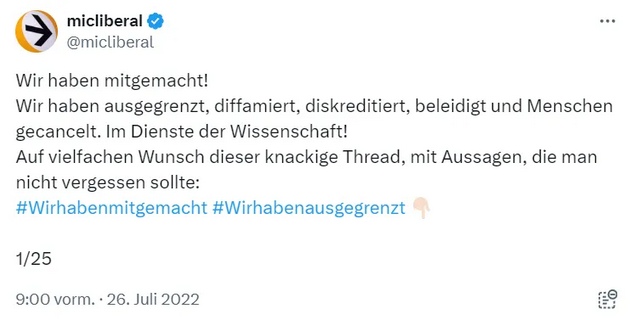

MicLiberal’s thread aimed only to memorialise some of the crazy things the vaccinators had said. It opened with this tweet:

> We were complicit!
>
> We marginalised, defamed, discredited, insulted and cancelled people. On behalf of science!
>
> By popular demand, this brief thread with statements that should not be forgotten:

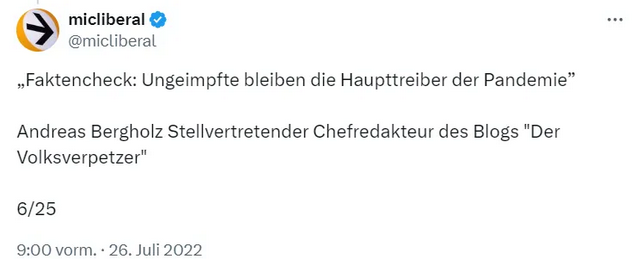

There ensued nothing but a series of citations, most of them wholly typical samples of vintage 2021/22 vaccinator rhetoric, much of it not even that remarkable. For example, MicLiberal included this statement from Andreas Berholz, deputy editor-in-chief of the widely read blog Der Volksverpetzer:

> Fact-check: The unvaccinated remain the main drivers of the pandemic.

[…]

You might be wondering what crime MicLiberal can possibly have committed by drawing attention to these already-public statements. The most honest answer is that his thread achieved millions of views in a matter of days, and at a very awkward moment – precisely when everyone was beginning to regret all the illiberal and wildly intemperate things they had said in the depths of the virus craze. He had embarrassed some very vain and powerful people with their own incredibly stupid words, and today many are of the opinion that that ought to be a crime in and of itself.

Alas, things have not yet deteriorated that far. Thus the police and prosecutors were left to scour our dense thicket of laws for a more plausible offence. They decided that their best chance lay with a novel provision of the German Criminal Code (Paragraph 126a). This provision makes it a crime to “disseminate the personal data of another person in a manner that is … intended to expose this person … to the risk of a criminal offence directed against them”. On 28 July, two days after MicLiberal posted his 25 tweets, Cologne police filed a criminal complaint against him, and afterwards the Cologne prosecutor’s office brought charges, arguing that MicLiberal had suggested that the people he cited were “perpetrators” and therefore associated them with “fascism”. The district court declined to approve the charges, but the prosecutors appealed to the regional court, where the judges saw things differently. They believed that a prosecution was warranted because of the “heated social debate” surrounding Covid measures, and because MicLiberal’s audience was composed of “homogeneous” like-minded people, who (in the summary of the Berliner Zeitung) “could either form groups or encourage individual members to commit acts of violence”. MicLiberal had furthermore assembled his citations from a website that the judges deemed guilty of an “anti-government orientation”.

We must take a moment to ponder this truly amazing argumentation, which would seem to criminalise such things as participating in the wrong discussions before the wrong kind of people and assembling one’s (wholly accurate) data from the wrong sources. In each of these cases, of course, it is the prosecutors and the judges eager to apply Paragraph 126a to their political opponents who get to decide what is “wrong”.

The good news in all of this is that the court acquitted him of these creative charges, but the prosecution has given notice that they intend to appeal.