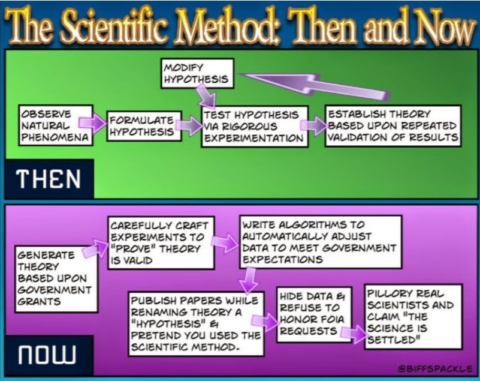

Tim Worstall on some of the more upsetting details of how much we've been able to depend on truth and testability in the scientific community, and how badly that has been undermined in recent years:

The Observer tells us that science itself is becoming polluted by paper mills. Fools (intellectual thieves, perhaps) are publishing nonsense in scientific journals, and this then pollutes the conclusions reached by people surveying the science to see what's what.

This is true and it is a problem. But the bigger problem here is what people publish as supposedly genuine science, not just the obvious cases they're complaining about:

The startling rise in the publication of sham science papers has its roots in China, where young doctors and scientists seeking promotion were required to have published scientific papers. Shadow organisations – known as “paper mills” – began to supply fabricated work for publication in journals there.

The practice has since spread to India, Iran, Russia, former Soviet Union states and eastern Europe, with paper mills supplying fabricated studies to more and more journals as increasing numbers of young scientists try to boost their careers by claiming false research experience. In some cases, journal editors have been bribed to accept articles, while paper mills have managed to establish their own agents as guest editors who then allow reams of falsified work to be published.

Indeed, an actual and real problem:

The products of paper mills often look like regular articles but are based on templates in which names of genes or diseases are slotted in at random among fictitious tables and figures. Worryingly, these articles can then get incorporated into large databases used by those working on drug discovery.

Others are more bizarre and include research unrelated to a journal's field, making it clear that no peer review has taken place in relation to that article. An example is a paper on Marxist ideology that appeared in the journal Computational and Mathematical Methods in Medicine. Others are distinctive because of the strange language they use, including references to “bosom peril” rather than breast cancer and “Parkinson's ailment” rather than Parkinson's disease.

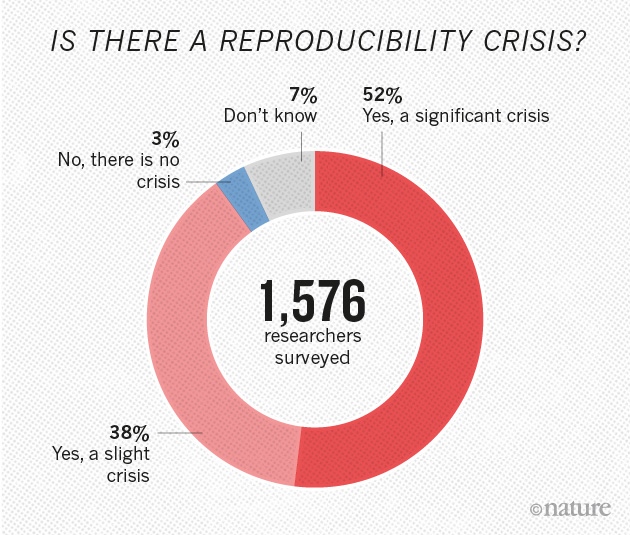

Quite. But the problem is worse, much, much worse.

Let us turn to something we can all agree is of some importance: those critical minerals. We all agree that we're going to be using more of them in the future, largely because the whole renewables thing is changing the minerals we use to power the world. We're moving (to some extent, perhaps enough, perhaps not enough) from using fossil fuels to power the world to using rare earths, silicon, copper and so on. So how much of those minerals there is, and how much of it is usable, matters. That is what we're doing: changing which minerals we use to power the world, from fossil fuels to metallic elements.

Those estimates of how much there is out there are therefore important. The European Union, for example, has innumerable reports and task forces working on the basis that there's not that much out there and therefore we've got to recycle everything. One of the foundational blocks of the circular economy is the claim that we've got to do it anyway, because there's simply not enough to power society without a circular economy.

This argument is nads*. The circular economy might be desirable for other reasons. At least in part it's very sensible too: if it's cheaper to recycle than to dig up new material, then of course we should recycle. But the claim that we must recycle, regardless of the cost, because otherwise supply will vanish, is nads*.

But, folk will and do say, if we look at the actual science we are short of these minerals and metals. Therefore etc. But it's the science itself that has become infected. Wrongly infected; infested, even.

Here's the Royal Society of Chemistry and their periodic table. You need to click around a bit to see it, but they list hafnium's supply risk as “unknown”. That's at least an advance on their previous insistence that it was at high supply risk. It isn't: there's more hafnium out there than we can shake a stick at. At current consumption rates (and assuming no recycling at all which, with hafnium, isn't all that odd an idea) we're going to run out sometime around the expected date for the heat death of the universe. No, not run out of the universe's hafnium; run out of what we've got in the lithosphere of our own Earth. To a reasonable and rough measure, the entirety of Cornwall is 0.01% hafnium. We happily mine for gold at 0.0001% concentrations, and we use less hafnium annually than we do gold.
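To make the concentration comparison concrete, here is a rough sketch that converts the two percentages quoted in the paragraph above into grams per tonne of rock and compares them. The figures are only the rough ones given in the text; the variable and function names are illustrative assumptions, and the sketch says nothing about extraction cost or annual demand.

```python
# Rough grade comparison using only the percentages quoted in the text.
# These are the author's rough measures, not survey data.
HAFNIUM_IN_CORNWALL_PCT = 0.01   # "the entirety of Cornwall is 0.01% hafnium"
GOLD_ORE_MINED_PCT = 0.0001      # "we happily mine for gold at 0.0001%"


def grams_per_tonne(percent: float) -> float:
    """Convert a mass percentage into grams of element per tonne of rock."""
    mass_fraction = percent / 100
    return mass_fraction * 1_000_000  # 1 tonne = 1,000,000 g


hf_gpt = grams_per_tonne(HAFNIUM_IN_CORNWALL_PCT)
au_gpt = grams_per_tonne(GOLD_ORE_MINED_PCT)
ratio = hf_gpt / au_gpt  # how many times richer the hafnium "ore" is by grade

print(f"Hafnium: {hf_gpt:.0f} g/t, gold: {au_gpt:.0f} g/t, ratio: {ratio:.0f}x")
```

On these figures the hafnium-bearing rock is roughly a hundred times richer by grade than gold ore we already mine, which is the point the paragraph is making.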

The RSC also says that gallium and germanium have a high supply risk. Can you guess which bodily part(s) such a claim should be associated with? For gallium, we already have a thousand-year supply booked to pass through the plants we normally use to extract our gallium for us. For germanium, I (although someone competent might be preferable) could build you a plant to supply 2 to 4% of global annual germanium demand/supply. It would take about 6 months and cost $10 million, even at government contracting rates. The raw material would be fly ash from coal burning, and there's no shortage of that: hundreds of such plants could be constructed.

The idea that humanity is, in anything like the likely timespan of our species, going to run short in absolute terms of Hf, Ga or Ge is just the utmost nads*.

But the American Chemical Society says the same thing:

* As ever, we are polite around here. Therefore we use the English euphemism “nads”, a shortening of “nadgers”, for the real meaning of “bollocks”.