… most bureaucrats aren’t evil, just ignorant … and as Scott shows, this ignorance isn’t really their fault. They don’t know what they don’t know, because they can’t know. Very few bureaucratic cock-ups are as blatant as Chandigarh, where all anyone has to do is look at pictures for five minutes to conclude “you couldn’t pay me enough to move there”. For instance, here’s the cover of Scott’s book:

That’s part of the state highway system in North Dakota or someplace, and though again my recall is fuzzy, the reason for this is something like: The planners back in Bismarck (or wherever) decreed that the roads should follow county lines … which, on a map, are perfectly flat. In reality, of course, the earth is a globe, which means that in order to comply with the law, the engineers had to put in those huge zigzags every couple of miles.

No evil schemes, just bureaucrats not mentally converting 2D to 3D, and if it happens to cost a shitload more and cause a whole bunch of other inconvenience to the taxpayers, well, these things happen … and besides, by the time the bureaucrat who wrote the regulation finds out about it — which, of course, he never will, but let’s suppose — he has long since moved on to a different part of the bureaucracy. He couldn’t fix it if he wanted to … which he doesn’t, because who wants to admit to that obvious (and costly!) a fuckup?

Add to this the fact that most bureaucrats have been bureaucrats all their lives — indeed, the whole “educational” system we have in place is designed explicitly to produce spreadsheet boys and powerpoint girls, kids who do nothing else, because they know nothing else. Oh, I’m sure the spreadsheet boys and powerpoint girls know, as a factual matter, that the earth is round — we haven’t yet declared it rayciss to know it. But they only “know” it as choice B on the standardized test. It means nothing to them in practical terms, so it would never occur to them that the map they’re looking at is an oversimplification — a necessary one, no doubt, but not real. As the Zen masters used to say, the finger pointing at the moon is not, itself, the moon.

Severian, “The Finger is Not the Moon”, Rotten Chestnuts, 2021-09-14.

January 26, 2025

QotD: The map is not the territory, state bureaucrat style

January 24, 2025

January 21, 2025

Claim – First Nations lived sustainably and harmoniously with their natural environment. Reality – “Head-Smashed-In Buffalo Jump”

Pim Wiebel contrasts what children are taught (that before contact with Europeans, First Nations lived fully sustainable lives in a kind of Garden of Eden until the white man arrived) with the rather less Edenic reality:

Among the many “proofs” offered in First Nations circles to support the claim of a pre-contact Eden imbued with an ethos of environmental harmony is the idea that before the Europeans arrived, the buffalo was considered sacred, treated with great respect, and killed only in numbers that would sustain it in perpetuity.

Each of these notions requires scrutiny.

For the Great Plains tribes, the buffalo was an essential source of food and of materials for tools, clothing and lodges. It is unsurprising that the buffalo featured prominently in tribal mythology. Among the Blackfoot, the animal was considered Nato’ye (of the Sun), sacred, and possessed of great power. Buffalo skulls were placed at the top of the medicine lodges and prominently featured at communal ceremonies.

It is ubiquitously asserted that the tribes only killed as many buffalo as they needed for their sustenance between hunts, and that every part of the animal was used. A Canadian history website suggests, “The buffalo hunt was a major community effort and every part of the slaughtered animal was used”. An American publication states, presumptuously: “It’s one of the cliches of the West; Native Americans used all the parts of the buffalo. It’s something that almost everyone knows, whether you are interested in history or not.” The Assembly of First Nations weighs in, teaching Canadian school children in their heavily promoted “Learning Modules”, that “Hunters took only what was necessary to survive. Every part of the animal was used.”

But was the Indigenous relationship with the buffalo in reality one of supreme reverence? Was every part of the animal used, and were the buffalo always killed only in numbers that would satisfy immediate needs while ensuring the sustainability of the herds?

The evidence suggests something quite different.

Archaeologists have studied ancient buffalo “jump sites”, places where Indigenous bands hunted buffalo herds by driving them over high cliffs. Investigations of sites from the Late Archaic period (1000 B.C. to 700 A.D.) reveal that many more buffalo than could be used were killed and that rotting heaps of only partially butchered bison carcasses were left behind.

Buffalo jumps continued to be used as a hunting method long after first contact with Europeans. Early Canadian fur trader and explorer Alexander Henry made the following entry on May 29th, 1805 in his diary of travels in the Missouri country: “Today we passed on the Stard. (starboard) side the remains of a vast many mangled carcasses of Buffaloe which had been driven over a precipice of 120 feet by the Indians and perished; the water appeared to have washed away a part of this immense pile of slaughter and still there remained the fragments of at least a hundred carcasses they created a most horrid stench. In this manner the Indians of the Missouri distroy vast herds of buffaloe at a stroke.”

Alexander Henry described how the buffalo jump unfolded. The hunters approached the herd from the rear and sides, and chased it toward a cliff. A particularly agile young man disguised in a buffalo head and robe positioned himself between the herd and the cliff edge, luring the animals forward. Henry was told on one occasion that the decoy sometimes met the same fate as the buffalo: “The part of the decoy I am informed is extremely dangerous if they are not very fleet runers the buffaloe tread them under foot and crush them to death, and sometimes drive them over the precipice also, where they perish in common with the buffaloe.”

The Blackfoot called their jump sites Pishkun, meaning “deep blood kettle”. It is not difficult to imagine the horrendous bawling of the animals that suffered physical trauma from the fall but did not immediately succumb. Did the hunters have the ability, or even make an attempt, to put them out of their misery with dispatch? We do not know.

January 16, 2025

QotD: “At promise” youth

A new law in California bans the use, in official documents, of the term “at risk” to describe youth identified by social workers, teachers, or the courts as likely to drop out of school, join a gang, or go to jail. Los Angeles assemblyman Reginald B. Jones-Sawyer, who sponsored the legislation, explained that “words matter”. By designating children as “at risk”, he says, “we automatically put them in the school-to-prison pipeline. Many of them, when labeled that, are not able to exceed above that.”

The idea that the term “at risk” assigns outcomes, rather than describes unfortunate possibilities, grants social workers deterministic authority most would be surprised to learn they possess. Contrary to Jones-Sawyer’s characterization of “at risk” as consigning kids to roles as outcasts or losers, the term originated in the 1980s as a less harsh and stigmatizing substitute for “juvenile delinquent”, to describe vulnerable children who seemed to be on the wrong path. The idea of young people at “risk” of social failure buttressed the idea that government services and support could ameliorate or hedge these risks.

Instead of calling vulnerable kids “at risk”, says Jones-Sawyer, “we’re going to call them ‘at-promise’ because they’re the promise of the future”. The replacement term — the only expression now legally permitted in California education and penal codes — has no independent meaning in English. Usually we call people about whom we’re hopeful “promising”. The language of the statute is contradictory and garbled, too. “For purposes of this article, ‘at-promise pupil’ means a pupil enrolled in high school who is at risk of dropping out of school, as indicated by at least three of the following criteria: Past record of irregular attendance … Past record of underachievement … Past record of low motivation or a disinterest in the regular school program.” In other words, “at-promise” kids are underachievers with little interest in school, who are “at risk of dropping out”. Without casting these kids as lost causes, in what sense are they “at promise”, and to what extent does designating them as “at risk” make them so?

This abuse of language is Orwellian in the truest sense, in that it seeks to alter words in order to bring about change that lies beyond the scope of nomenclature. Jones-Sawyer says that the term “at risk” is what places youth in the “school-to-prison pipeline”, as if deviance from norms and failure to thrive in school are contingent on social-service terminology. The logic is backward and obviously naive: if all it took to reform society were new names for things, then we would all be living in utopia.

Seth Barron, “Orwellian Word Games”, City Journal, 2020-02-19.

January 7, 2025

QotD: Most people hate their jobs

A Gallup poll found that 85 percent of people hate their jobs. Business schools would say that this is due to poor strategy, poor leadership, or poor innovation. Nothing that cannot be fixed with an MBA degree. The real explanation is much simpler, however: 85 percent of people hate their jobs because, given the choice, they would never do them in the first place. Twenty years ago, I applied to a business school. A good one. Actually, one of the best. When the acceptance letter arrived, I was convinced that I’d been admitted under some female quota, as my abilities are perfectly average. Then I started the course and realised that so were everyone else’s. No one in my class was especially bright. Or if they were, it was of the topical, tactical sort of intelligence — one that allows a person to see the different angles but somehow totally miss the point. The course itself was akin to vocational training: two months of accounting, two months of strategy, two months of marketing, and then off you go, ripe and ready for the office. Sorry, for leadership — which is telling other people in the office what to do.

We do, of course, have a choice. If you don’t like office work, you can become a PE teacher. If you are bad with authority, start your own business. The corporate sector is too greedy for you? Join an NGO. How glorious our life would be if things were so simple. Regrettably, they are not. Nicolai Berdyaev, a Russian religious philosopher during the first half of the twentieth century, argued — quite convincingly — that this choice to which we habitually refer is not really a choice at all. There is no freedom in it. It is a decision to adjust, adapt, and fit in. It is not a choice to create. At best, it is the choice of an animal looking for food and shelter, not of a human agent created in God’s image. He was right. As we leave childhood and the need to earn a living becomes increasingly urgent, our dreams start getting trimmed and trampled and squashed, until there comes a day when we no longer remember them. We begin by seeking the sublime. We end up resigned to the ordinary.

Elena Shalneva, “Work — the Tragedy of Our Age”, Quillette, 2020-01-29.

January 5, 2025

“Everything humans build starts with human and social capital. This includes everything economic.”

Lorenzo Warby explains why he has always disagreed with the “standard model” of economic growth, which leaves out cultural variables that matter enormously for economic development:

“Hyderabad bazaar” by ruffin_ready is licensed under CC BY 2.0.

The seminal theory of economic growth is the Solow Growth Model (technically, the Solow-Swan model). The model can be easily expressed mathematically.
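For reference, one common textbook statement of the model, assuming a Cobb-Douglas production function, is given here. The symbols are standard textbook notation rather than anything taken from Warby's post: K is capital, L labour, A labour-augmenting technology, s the saving rate, n labour-force growth, g technology growth, δ depreciation, and k = K/(AL) capital per effective worker.

```latex
\begin{aligned}
Y &= K^{\alpha}(AL)^{1-\alpha}, \qquad 0 < \alpha < 1 \\
y &= \frac{Y}{AL} = k^{\alpha}, \qquad k = \frac{K}{AL} \\
\dot{k} &= s\,k^{\alpha} - (n + g + \delta)\,k \\
k^{*} &= \left(\frac{s}{n + g + \delta}\right)^{\frac{1}{1-\alpha}}
\end{aligned}
```

The last line is the steady state, obtained by setting the growth of k to zero, so that saving per effective worker exactly offsets depreciation plus dilution from labour and technology growth.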

I have never liked the model, nor its later variations.[1] The intuition behind my dislike was that societies — and indeed different ethnic groups within societies — obviously vary enormously in their capacity to use, to “put together”, the factors of production. They also vary enormously in their capacity to generate factors of production: specifically capital, the produced means of production. The model implies a general convergence between economies that has not happened.
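A minimal numerical sketch of the convergence prediction, assuming the textbook Solow-Swan setup with a Cobb-Douglas production function, output per effective worker y = k^α, and capital per effective worker evolving as dk/dt = s·k^α − (n + g + δ)·k. The parameter values and the function names `steady_state_k` and `simulate_k` are hypothetical, chosen only for illustration, and the loop uses a simple one-period Euler step rather than any particular published discretization.

```python
# Hypothetical parameters, chosen only to illustrate the mechanics.
ALPHA = 0.33  # capital's exponent in the Cobb-Douglas production function
S = 0.20      # saving (investment) rate
N = 0.01      # labour-force growth rate
G = 0.02      # technology growth rate
DELTA = 0.05  # depreciation rate


def steady_state_k(alpha=ALPHA, s=S, n=N, g=G, delta=DELTA):
    """Capital per effective worker k* at which s * k**alpha == (n + g + delta) * k."""
    return (s / (n + g + delta)) ** (1.0 / (1.0 - alpha))


def simulate_k(k0, periods=500, alpha=ALPHA, s=S, n=N, g=G, delta=DELTA):
    """Euler approximation (one-period step) of dk/dt = s*k**alpha - (n+g+delta)*k."""
    k = k0
    path = [k]
    for _ in range(periods):
        investment = s * k ** alpha     # saving per effective worker
        dilution = (n + g + delta) * k  # depreciation plus dilution by labour and technology growth
        k = k + investment - dilution
        path.append(k)
    return path


if __name__ == "__main__":
    k_star = steady_state_k()
    poor = simulate_k(0.5)   # hypothetical economy starting with little capital
    rich = simulate_k(10.0)  # hypothetical economy starting with a lot of capital
    print(f"steady state k* = {k_star:.4f}")
    print(f"poor economy after 500 periods: {poor[-1]:.4f}")
    print(f"rich economy after 500 periods: {rich[-1]:.4f}")
```

Both paths settle at (numerically) the same k*, whatever the starting capital. This is only conditional convergence: it holds because the two hypothetical economies share identical parameters, and economies with different saving or growth rates head toward different steady states.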

Updating the model by including human capital was a gesture in that direction but did not fix the problem with the model, which is much more basic. The update attempted to grapple with the failure of investment to flow to poorer countries and, by implication, the long-term, systematic failures of foreign aid. The failures of foreign [aid] also supported my intuition.

The most recent (2024) Nobel memorial prize in economics was awarded for work that also directly supports my intuition — how much institutions matter for economic growth. The long-term economic growth literature — identifying culture as very much mattering for economic growth — also supports my intuition.

Skills and knowledge (human capital) are basic

To explain why the entire approach — basically, fiddling with some version of the Cobb-Douglas production function — is fundamentally mistaken, we need to go back to the origins of human economic growth. I mean, right back — all the way to foragers.

What are the original forms of capital? Well, there are tools, which are the original form of physical capital. But without the skills to make and use the tools, they either do not exist, or they are useless.

So, we start with skills and knowledge, with human capital. It takes almost 20 years to train a young human forager to be a subsistence adult — that is, to forage as much nutrition as they consume. The need to stuff the human brain with skills and knowledge — and the need to grow a brain that can be so stuffed — is why we have the most biologically expensive children in the biosphere. The need to impart skills to biologically expensive children is fundamental to the dynamics of all human societies.

Human capital — skills and knowledge — is not an “add on”. It is basic.

So are social connections (social capital)

Foragers do not live as atomistic individuals. They live in families and (fluid) foraging bands. Families and foraging bands are vehicles for our highly cooperative subsistence and reproduction strategies.

That we are the tool-making and tool-using species, lacking tearing teeth and claws, with the most biologically expensive offspring, is why we have highly cooperative subsistence and reproduction strategies. It is also why we are so much the normative species — enabling robust cooperation based on convergent expectations — and why we have prestige and propriety as forms of status.

Both these forms of status represent currencies of cooperation. Prestige grants people status for doing things which are risky, clever, hard, entertaining. It is status by conspicuous competence. It provides a way to reward people for engaging in activities which generate wider social benefits — what economists call positive externalities. It also encourages people to want to associate with you.[2]

The other form of status — propriety — grants status to those who uphold the norms of the group. In particular, it wields stigma against those deemed to have violated those norms. It provides a way to punish people for engaging in activities which generate wider social costs — what economists call negative externalities. It helps solve the free-rider problem regarding the effort to enforce norms.

Reversing (i.e. perverting) status patterns so that people get prestige from victimhood — extending to various forms of failures of competence or even wildly anti-social behaviour — while stigmatising people who are conspicuously successful (as oppressors or exploiters) is deeply destructive of human flourishing.[3] We can see this pattern currently operating in “progressive” US states, and especially cities, but murderous versions of it operated in various Communist states. These things affect economic activity, but cannot be discerned by a Cobb-Douglas production function.

It is not true that scientists have never discovered Homo economicus. Unfortunately, Homo economicus is not a member of genus Homo. It is Pan troglodytes (chimpanzees) playing strategy games in a lab. It is precisely because we Homo sapiens are more normative, allowing us to encapsulate the social conquest of the Earth, that there are billions of us and only a few thousand of them.

We — as a highly social, indeed ultra-social, species — engage in both individual and social calculations. Different cultures notoriously generate different patterns for, and balances between, such calculations.

1. The model has some utility for short-term calculations of growth.

2. As with any social benefit, the knock-on dynamics of prestige can be complex, but status from conspicuous competence is at the heart of it.

3. The November 2014 Shirtgate controversy — where a rocket scientist who had led the technically incredibly difficult task of landing a probe on a comet was publicly humiliated over the shirt he wore (a gift from a female friend, it turned out) — represented conspicuous achievement (prestige) being trumped by feminist stigmatisation (propriety).

December 22, 2024

QotD: “Sparta Is Terrible and You Are Terrible for Liking Sparta”

“This. Isn’t. Sparta.” is, by view count, my second most read series (after the Siege of Gondor series); WordPress counts the whole series with just over 415,000 page views as I write this, with the most popular part (outside of the first one; first posts in a series always have the most views) being the one on Spartan Equality followed by Spartan Ends (on Spartan strategic failure). The least popular is actually the fifth part on Spartan Government, which doesn’t bother me overmuch as that post was the one most narrowly focused on the spartiates (though I think it also may be the most Hodkinsonian post of the bunch, we’ll come back to that in a moment) and if one draws anything out of my approach it must be that I don’t think we should be narrowly focused on the spartiates.

In the immediate moment of August, 2019 I opted to write the series – as I note at the beginning – in response to two dueling articles in TNR and a subsequent (now lost to the ages and only imperfectly preserved by WordPress’ tweet embedding function) Twitter debate between Nick Burns (the author of the pro-Sparta side of that duel) and myself. In practice however the basic shape of this critique had been brewing for a lot longer; it formed out of my own frustrations with seeing how Sparta was frequently taught to undergraduates: students tended to be given Plutarch’s Life of Lycurgus (or had it described to them) with very little in the way of useful apparatus to either question his statements or – perhaps more importantly – extrapolate out the necessary conclusions if those statements were accepted. Students tended to walk away with a hazy, utopian feel about Sparta, rather than anything that resembled either of the two main scholarly “camps” about the polis (which we’ll return to in a moment).

That hazy vision in turn was continually reflected and reified in the popular image of Sparta – precisely the version of Sparta that Nick Burns was mobilizing in his essay. That’s no surprise, as the Sparta of the undergraduate material becomes what is taught when those undergrads become high school teachers, which in turn becomes the Sparta that shows up in the works of Frank Miller, Steven Pressfield and Zack Snyder. It is a reading of the sources that is at once both gullible and incomplete, accepting all of the praise without for a moment thinking about the implications; for the sake of simplicity I’m going to refer to this vision of Sparta subsequently as the “Pressfield camp”, after Steven Pressfield, the author of Gates of Fire (1998). It has always been striking to me that for everything we are told about Spartan values and society, the actual spartiates would have despised nearly all of their boosters with the sole exception of the praise they got from southern enslaver-planter aristocrats in the pre-Civil War United States. If there is one thing I wish I had emphasized more in “This. Isn’t. Sparta.” it would have been to tell the average “Sparta bro” that the Spartans would have held him in contempt.

And so for years I regularly joked with colleagues that I needed to make a syllabus for a course simply entitled, “Sparta Is Terrible and You Are Terrible for Liking Sparta”. Consequently the TNR essays galvanized an effort to lay out what in my head I had framed as “The Indictment Against Sparta”. The series was thus intended to be set against the general public hagiography of Sparta and its intended audience was what I’ve heard termed the “Sparta Bro” – the person for whom the Spartans represent a positive example (indeed, often the pinnacle) of masculine achievement, often explicitly connected to roles in law enforcement, military service and physical fitness (the regularity with which that last thing is included is striking and suggests to me the profound unseriousness of the argument). It was, of course, not intended to make a meaningful contribution to debates within the scholarship on Sparta; that’s been going on a long time, the questions by now are very technical and so all I was doing was selecting the answers I find most persuasive from the last several decades of it (evidently I am willing to draw somewhat further back than some). In that light, I think the series holds up fairly well, though there are some critiques I want to address.

One thing I will say, not that this critique has ever been made, but had I known that fellow UNC-alum Sarah E. Bond had written a very good essay for Eidolon entitled “This is Not Sparta: Why the Modern Romance With Sparta is a Bad One” (2018), I would have tried to come up with a different title for the series to avoid how uncomfortably close I think the two titles land to each other. I might have gone back to my first draft title of “The Indictment Against Sparta” though I suspect the gravitational pull that led to Bond’s title would have pulled in mine as well. In any case, Sarah’s essay takes a different route than mine (with more focus on reception) and is well worth reading.

Bret Devereaux, “Collections: This. Isn’t. Sparta. Retrospective”, A Collection of Unmitigated Pedantry, 2022-08-19.

December 7, 2024

QotD: Game of Thrones as PoMo “deconstructionism”

Finally, Game of Thrones. I think it’s the same deal here, the same faux world-weary cynicism. I’ve only seen one or two episodes of the show, but I read the first two or three books, up to the point where I realized two things: 1) he has no idea how he’s going to finish the story, and 2) it’s yet more tedious PoMo “deconstruction”.

Again, I guess I can forgive my colleagues, under-sexed little closet cases that they are, for being distracted by the boob cornucopia up on screen, but in the books, anyway, this comes through plain as day: Everyone in Westeros is either a psychopathic scumbag, or dead. In the very best PoMo style, the author is rubbing our faces in his belief that, since it’s extremely difficult to be heroic — or, all too often, merely decent — everyone who even thinks about trying is a fool, and deserves all the awful shit that happens to him. I’m told that back in the 18th century, a fun topic of debate at salons was whether a society of atheists could endure. Martin’s entire oeuvre seems dedicated to proving that life — mere, grubby, eating-shitting-sleeping existence — will continue in a society composed entirely of scumbags … but he has no idea why.

I have no idea why this idea (if that’s the right word) is so deeply appealing to academics, but evidently it is … and these are the people who are teaching your children.

Severian, “The One Pop Culture Thing”, Rotten Chestnuts, 2021-09-16.

December 2, 2024

QotD: Intersectionality on campus

… intersectionality’s intellectual flaws translate into moral shortcomings. Importantly, it is blind to forms of harm that occur within identity groups. For a black woman facing discrimination from a white man, intersectionality is great. But a gay woman sexually assaulted by another gay woman, or a black boy teased by another black boy for “acting white”, or a Muslim girl whose mother has forced her to wear the hijab will find that intersectionality has no space for their experiences. It certainly does not recognize instances in which the arrow of harm runs in the “wrong” direction — a black man committing an antisemitic hate crime, for instance. The more popular intersectionality becomes, the less we should expect to hear these sorts of issues discussed in public.

Perhaps the most pernicious consequence of intersectionality, however, is its effect on the culture of elite college campuses. Some claims about “campuses-gone-crazy” are surely overblown. For instance, judging from my experience at Columbia, nobody believes there are 63 genders, and hardly anyone loves Soviet-style communism. (That said, the few communists on campus tend to despise intersectionality with an unusual passion.) But one thing is certainly not exaggerated: intersectionality dominates the day-to-day culture. It operates as a master formula by which social status is doled out. Being black and queer is better than just being black or queer, being Muslim and gender non-binary is better than being either one on its own, and so forth. By “better”, I mean that people are more excited to meet you, you’re spoken of more highly behind your back, and your friends enjoy an elevated social status for being associated with you.

In this way, intersectionality creates a perverse social incentive structure. If you’re cis, straight, and white, you start at the bottom of the social hierarchy — especially if you’re a man, but also if you’re a woman. For such students, there is a strong incentive to create an identity that will help them attain a modicum of status. Some do this by becoming gender non-binary; others do it by experimenting with their sexuality under the catch-all label “queer”. In part, this is healthy college-aged exploration — finding oneself, as it were. But much of it amounts to needless confusion and pain imposed on hapless young people by the bizarre tenets of a new faith.

Coleman Hughes, “Reflections on Intersectionality”, Quillette, 2020-01-13.

November 27, 2024

Scolianormativity

At FEE, Michael Strong defines the neologism and provides evidence that it has been a long-term harm to children forced into the Prussian-originated school regimentation regime:

Scolianormative (adj.): The assumption that behaviors defined by institutionalized schooling are “normal”. An assumption that became pervasive in industrialized societies in which institutionalized schooling became the norm, and that resulted in marginalizing and harming millions of children. Once society began to question scolianormativity, gradually people began to realize that the norms set by institutionalized schooling were perfectly arbitrary. It turned out that it was not necessary to harm children. The institutions that led to such widespread harms were dismantled, and humanity transcended the terrible century of institutionalized schooling.

The conventional educational model, government-enforced and subsidized, is based on 13 years of schooling consisting of state-defined curriculum standards and exams leading to a high school diploma.

Young human beings are judged as either “normal” or not based on the extent to which they are “on track” with respect to grade level exams and test scores. Students who are not making the expected progress may be diagnosed with learning differences (formerly known as disabilities). Students who can’t sit still adequately may be diagnosed with ADD/ADHD. Students who find the experience soul-killing may be diagnosed with depression or anxiety. Students who can’t stand to be told what to do all day may be diagnosed with Oppositional Defiant Disorder (ODD). Students who score higher on certain tests are labeled “gifted”.

Massive amounts of research and institutional authority have been invested in these and other diagnoses. When a child is not progressing appropriately in the system, the child is often sent to specialists who then perform the diagnosis. When appropriate, the child is given some combination of medication and accommodations and/or sent to a special program for children with “special needs”.

Many well-intentioned people regard this system as life-saving for the children who might otherwise have “not had their needs met” in the absence of such a diagnosis and intervention. And this is no doubt often true, but our fixation on scolianormativity blinds us to the fact that an entirely different perspective might actually result in better lives for more children.

How could one possibly deny mountains of evidence on behalf of such a life-saving system?

Scolianormativity

The Prussian school model, a state-led model devoted to nationalism, is only about two hundred years old. For much of its first century it was limited to a few hours per day, for a few months per year, for a few years of schooling. It has only gradually grown to encompass most of a child’s waking hours for nine months a year from ages 5 to 18. Indeed, in the U.S., it was only in the 1950s that a majority of children graduated from high school (though laws requiring compulsory attendance through age 16 had been passed in the late 19th and early 20th centuries). In addition, for most of its first century, it was far more flexible than it has become in its second. The increasing standardization and bureaucratization of childhood is a remarkably recent phenomenon in historical terms.

In his book Seeing Like a State, the political scientist James C. Scott documents how governments work to create societies that are “legible”, that can be perceived and managed by the state to suit the needs of the state’s bureaucrats and political leaders. Public schools are one of the most pervasive of all state institutions. The structure of public schooling has grown to suit the needs of the state bureaucrats who monitor it.

October 27, 2024

Whittier College as a small-scale model of the decline of higher education

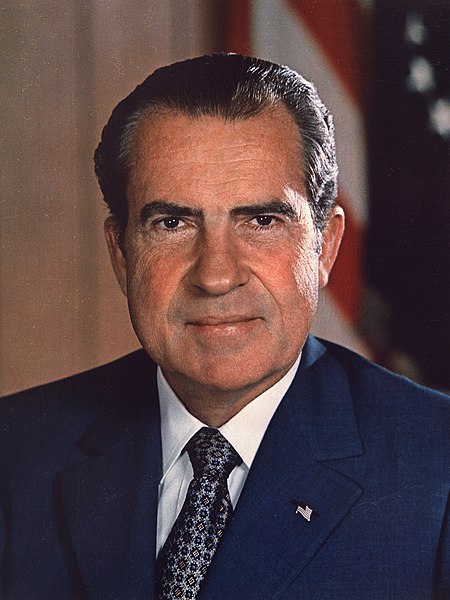

At Postcards From Barsoom, John Carter returns to the state of higher education in the west, this time looking at the plight of Whittier College, which appears to be well along in a death spiral:

Whittier College’s most famous graduate, Richard M. Nixon, 1 June 1972.

Official portrait via Wikimedia Commons.

While I like to jump around subject matter here, in order to keep myself – and you – from getting bored, one topic that I return to regularly (as a dog returns to his vomit, as a sow returns to her mire) is the ongoing polycrisis in higher ed. You may have noticed, as I just wrote about this a week ago. Academia Is Women’s Work created a bit of a buzz. It seems to have struck a nerve with a lot of people, both with those who have observed the same things that I’ve noticed, and who had the same “ah-hah!” moment that I did once the phenomenon of male flight was connected to the myriad symptoms of academic decay that we all know so well; and with those (mainly women, naturally) who reacted with sputtering outrage – misogynist! incel! – when my Xitter thread on the subject went viral and broke containment in the basedosphere. Despite quite a few hostile eyeballs on the thread, the only thing they could find to correct was a grammatical typo (*its!) in the opening tweet.

When writing about the DIEvory Tower I usually keep it very general, as the problems are systemic, affecting the entire sector, and the view from orbit avoids giving the impression that the issues are specific to any one institution. But a couple of stories recently came to my attention which are simply too perfect not to share with you. Each of them provides a sort of holographic totality of the academic polycrisis, illustrating all of the afflictions in specific, personalized detail.

[…]

The title of this article really says it all: “Plunging enrollment, financial woes, trustee exodus. Whittier College confronts crisis”. It’s a bit out of date now – it was published about a year and a half ago – but the subject matter remains timeless. It has everything: infrastructural decay, forced diversity, incompetent and corrupt administration, a terrified faculty, accusations of racism, collapsing enrolment, angry alumni, reduced donations, budgetary problems. It’s all there.

Whittier College is a small liberal arts school in California, founded in the 19th century by abolitionist Quakers, and known mainly for being President Richard Nixon’s alma mater. It has seen better days:

[T]he once-bustling quad is often all but empty these days, students say, and inside the Wanberg Hall dormitory, carpets smell musty, the Wi-Fi is spotty, and 25 students share two restrooms with toilets that frequently break down and take ages to fix. The eerie quiet outside and fetid bathrooms inside are signs of the turmoil roiling one of California’s oldest liberal arts colleges.

Imagine spending $49,000 a year to use fetid bathrooms.

Enrolment and revenue have both collapsed over a very short timespan:

Since 2018, enrollment has plummeted by about 35%, from 1,853 students to about 1,200, according to college figures. Annual revenue has plunged by 29% over roughly the same period, audited financial statements show. … This term, faculty report the number of undergraduates is just 1,027.

The athletics programs are being sacrificed:

Partly to save money, Whittier cut football and three other sports programs last year.

One of the other teams that got shut down was lacrosse, which is a very white sport. Sheer coincidence, probably. “Partly to save money,” though, huh.

The president of Whittier College is one Linda Oubré. Oubré has an MBA from Harvard Business School, previously served as dean of the College of Business at San Francisco State University, has worked as a consultant, was president of a teeth-whitening spa, and is also – and this is surely the most important line item on her curriculum vitae – professionally qualified as a black woman. Given these impeccable credentials, it will be no surprise to learn that Whittier’s problems commenced immediately upon Oubré taking the helm.

[…]

Oubré is very concerned about people doing racisms:

For a decade, more than half of Whittier’s undergraduates have been people of color. But in an hour-long talk at a South by Southwest education conference earlier this month, Oubré told attendees she encountered attitudes at Whittier such as, “‘We can’t have too many Hispanics,’ whatever, fill in the blank, ‘because the white kids won’t be comfortable’.”

It seems very unlikely that any of the faculty at a contemporary liberal arts college would have dared to suggest that the potential discomfort of white students was something to be avoided – yes, I’m saying that I think Oubré just made that up – but it’s revealing that she thinks accusing people of saying the white kids might be uncomfortable with too much diversity is an own. The white kids are supposed to be uncomfortable! Also: a greater-than-fifty-percent non-white student body, in a country that is (for now) majority white, is apparently an insufficient level of diversity. Sufficient diversity is zero white people. But we already knew that.

October 25, 2024

Diversity at all costs

In the National Post, Harry Rakowski explains why Toronto Generic University — sorry, I mean “Toronto Metropolitan University” — is reserving 75% of available enrolment in their new medical school for more diverse candidates, even if they wouldn’t normally qualify by their grades:

We have a critical shortage of doctors and nurses in Canada. Almost a quarter of our population can’t find a family doctor. Wait times for seeing a specialist, getting medical imaging and surgical dates continue to climb, with only Band-Aid solutions being proposed by the federal and provincial governments. We desperately need to expand medical schools and the licensing of highly qualified foreign medical graduates.

So it was welcome news to hear that 94 undergraduate medical students and 105 postgraduate students (residents) will be entering the newly established Toronto Metropolitan University School of Medicine when it opens in September 2025. The city of Brampton, Ont., donated a former civic centre along with $20 million in funding for renovations in order to make the school happen.

However, it was highly disturbing to learn that admissions to the new facility will be driven by a culture-war philosophy that will dilute the quality of medical practice.

There’s no doubt that diversity in medicine helps to optimize care and provide better outcomes for the differing needs of Canada’s highly diverse population, which includes many individuals disadvantaged by their geography as well as racial and economic inequality. But TMU is going about it the wrong way. Its admissions policy will focus on DEI (diversity, equity and inclusion) rather than quality.

TMU’s plan is to reserve 75 per cent of its enrolment slots for “equity-deserving” students — Black and Indigenous applicants and others who meet “equity-deserving” criteria including students who identify as members of the 2SLGBTQ+ community, those who are “racialized” and individuals “with lived experiences of poverty or low socio-economic status.” It will accept a minimum grade point average (GPA) of 3.3 (B+) — or even less for select Black and Indigenous applicants — and then use GPA only as an application criterion, not as a selection criterion. By comparison, the University of Toronto’s Temerty Faculty of Medicine requires a minimum GPA of 3.6 for undergraduate applicants (although the average GPA of accepted applicants is now 3.95).

October 24, 2024

October 19, 2024

Changing gender balance in occupations and in higher education

At Postcards from Barsoom, John Carter ruminates on the likely downward path of many institutions of higher learning as the gender balance continues to shift:

An occupation that flips from male to female dominance invariably suffers not only diminished prestige, but also a decline in wages … which, once again, makes sense in the context of sexual psychology. A man’s income is one element (and a big element) of a woman’s attraction to him, but the reverse is not true; if women are paid less, this does not really hurt their value in the sexual marketplace at all, and so they will push back against it much less than men would. This is probably what lies behind the tendency of women to be less forceful when negotiating salaries.

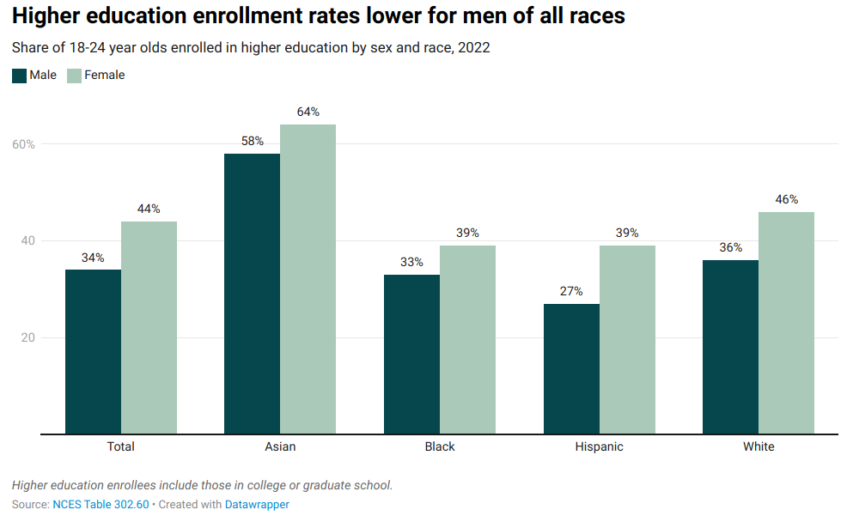

To the point: ever since the 1970s, women have overtaken and gradually eclipsed men within higher education. There is a gap in enrolment, consistent across racial groups:

[…]

Across all programs, at all academic levels, American universities recently reached the threshold of 60% of the student body being female.

This will be a disaster for academia.

Indeed, it’s already a disaster. About a year ago, I analyzed a Gallup poll which revealed that the confidence of the American public in the trustworthiness and overall value of the academic sector had declined precipitously over the course of the 2010s.

In that article I examined several factors contributing to this DIEing confidence in the academy: the explosive growth in tuition fees, even as continuous relaxation of academic standards dilutes the actual value of a degree; the deplorable state of scholarship, with endless revelations of fraud, a seemingly irresolvable replication crisis, and the torrent of psychotic nonsense that passes for ‘research’; the increasingly frigid social environment enforced by the armies of overpaid, sour-faced administrators. Almost all of these, however, are related in one way or another to the feminization of academia.

And it is probably going to get much worse before it gets better.

As discussed in this recent article by Celeste Davis of Matriarchal Blessing, research on male flight indicates that a 60% female composition represents the tipping point beyond which men perceive an environment as feminine, which then leads to a precipitous decline in male participation. Davis appears to be some sort of feminist, but I want you to look past that and give her article a read; it is very thorough, well-researched, and thought-provoking (and also the direct inspiration for this article).

[…]

Universities are belatedly starting to notice that male enrolment is dropping fast, particularly among white men (I wonder why…), and are starting to make noises about maybe thinking about perhaps looking into ways of trying to recruit and retain more men (albeit, not specifically white men).

This seems unlikely to succeed.

Even if universities are successful in setting up programs to increase male recruitment, they will be fighting an uphill battle against the sexual perception that has already set in. Once something is coded as being a feminine hobby, it is extremely difficult to change that code. While it’s very easy to list examples of professions that have switched from male to female dominance, off the top of my head I have a hard time coming up with examples of the reverse. This suggests that female dominance tends to be sticky. There’s no reason to expect this will be any different with academia, either within individual programs, or across the sector as a whole.

This is an entirely different problem from the one posed by female entryism. In the initial phases of female entry, the primary obstacle women face is that it is simply harder to compete with men – in the case of athletics, effectively impossible. Women must therefore either work extremely hard, or the work must be made easier for them. In practice, since the 1970s we’ve seen both of these, with “working twice as hard as the boys” predominating in the early years, and assistance from special programs predominating later on.

By contrast, the central obstacle faced by anyone trying to attract men to a female-dominated environment is that men are deeply reluctant to enter. As a third of young men told Pew when asked why they didn’t attend or complete university: they just didn’t want to. It isn’t because they can’t compete with women. They can, usually with ease, but competition is pointless because it will gain them nothing. Special programs to assist men are beside the point; if anything, they work against you, because the implicit message of any special program for men is that they need help to compete with women … thereby making competition even more pointless. “You beat a girl, but you needed help to do it” is going to impress the girls even less than beating a girl unaided.

October 7, 2024

The demographic impact of modern cities

Lorenzo Warby touches on some of the social and demographic issues that David Friedman discussed the other day:

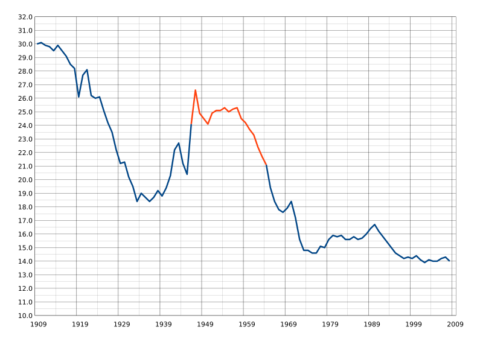

US Birth Rates from 1909 to 2008. The number of births per thousand people in the United States. The red segment is known as the Baby Boomer period. The drop in 1970 is due to excluding births to non-residents.

Graph by Saiarcot895 via Wikimedia Commons

Cities are demographic sinks. That is, cities have higher death rates than birth rates.

For much of human history, cities have been unhealthy places to live. This is no longer true: cities have higher average life expectancies than rural areas. But they are still demographic sinks, for cities collapse fertility rates.

The problem is not that more women have no children, or only one child, making it to adulthood. Such women have always existed, though their share of the population has gone up across recent decades.

The key problem is the collapse in the demographic “tail” of large families. Cities are profoundly antipathetic to large families, and have always been so. This is particularly true of apartment cities — suburbs are somewhat more amenable to large families, though not enough to make up for the urbanisation effect.

Modern cities do not have the slaves and household servants who were blocked from reproducing in ancient cities, but various aspects of modern technology have fertility-suppressing effects. Cars that presume a maximum of three children, for instance, an effect worsened by compulsory baby car-seats. Or ticketing and accommodation that presume two children or fewer. There are also the deep problems of modern online dating, plus the effects that endocrine disrupters and falling testosterone may be having.

These effects also extend to rural populations, though falling fertility in rural populations is far more of a mystery than falling fertility in urban populations. How large a role declining metabolic health plays in all this is unclear. Indeed, futurist Samo Burja is correct that we do not really understand the “social technology” of human breeding.

Be that as it may, cities acting as demographic sinks is a continuation of patterns that go back to the first cities.

Matters at the margin

There are factors at the margin known to make a difference. Religious folk breed more than secular folk, though that is in part because rural people are more religious and city folk more secular.

Educating women reduces fertility. This is, in part, an urbanisation effect, as more education is available in cities. It is also an opportunity cost effect — there is more to do in cities, both paid and unpaid.

Education increases the general opportunity cost of motherhood, by expanding women’s opportunities. This also makes moving to cities more attractive. Women having more career opportunities reduces the relative attractiveness of men as marriage partners, reducing the marriage rate.

Strong cultural barriers against children outside marriage can reduce the fertility rate by largely restricting motherhood to married women. This makes the fertility rate more dependent on the marriage rate.

Educating women makes children more expensive, as educated mothers have educated children. This is part of the pattern that economist Gary Becker analysed.