Thorstein Veblen’s famous “leisure class” has evolved into the “luxury belief class”. Veblen, an economist and sociologist, made his observations about social class in the late nineteenth century. He compiled his observations in his classic work, The Theory of the Leisure Class. A key idea is that because we can’t be certain of the financial standing of other people, a good way to size up their means is to see whether they can afford to waste money on goods and leisure. This explains why status symbols are so often difficult to obtain and costly to purchase. These include goods such as delicate and restrictive clothing, like tuxedos and evening gowns, or expensive and time-consuming hobbies like golf or beagling. Such goods and leisurely activities could only be purchased or performed by those who did not live the life of a manual laborer and could spend time learning something with no practical utility. Veblen even goes so far as to say, “The chief use of servants is the evidence they afford of the master’s ability to pay.” For Veblen, butlers are status symbols, too.

[…]

Veblen proposed that the wealthy flaunt status symbols not because they are useful, but because they are so pricey or wasteful that only the wealthy can afford them. A couple of winters ago it was common to see students at Yale and Harvard wearing Canada Goose jackets. Is it necessary to spend $900 to stay warm in New England? No. But kids weren’t spending their parents’ money just for the warmth. They were spending the equivalent of the typical American’s weekly income ($865) for the logo. Likewise, are students spending $250,000 at prestigious universities for the education? Maybe. But they are also spending it for the logo.

This is not to say that elite colleges don’t educate their students, or that Canada Goose jackets don’t keep their wearers warm. But top universities are also crucial for inculcation into the luxury belief class. Take vocabulary. Your typical working-class American could not tell you what “heteronormative” or “cisgender” means. But if you visit Harvard, you’ll find plenty of rich 19-year-olds who will eagerly explain them to you. When someone uses the phrase “cultural appropriation”, what they are really saying is “I was educated at a top college”. Consider the Veblen quote, “Refined tastes, manners, habits of life are a useful evidence of gentility, because good breeding requires time, application and expense, and can therefore not be compassed by those whose time and energy are taken up with work.” Only the affluent can afford to learn strange vocabulary because ordinary people have real problems to worry about.

The chief purpose of luxury beliefs is to indicate evidence of the believer’s social class and education. Only academics educated at elite institutions could have conjured up a coherent and reasonable-sounding argument for why parents should not be allowed to raise their kids, and that we should hold baby lotteries instead. Then there are, of course, certain beliefs. When an affluent person advocates for drug legalization, or defunding the police, or open borders, or loose sexual norms, or white privilege, they are engaging in a status display. They are trying to tell you, “I am a member of the upper class”.

Affluent people promote open borders or the decriminalization of drugs because it advances their social standing, and because they know that the adoption of those policies will cost them less than others. The logic is akin to conspicuous consumption. If you have $50 and I have $5, you can burn $10 and I can’t. In this example, you, as a member of the upper class, have wealth, social connections, and other advantageous attributes, and I don’t. So you are in a better position to afford open borders or drug experimentation than me.

Or take polyamory. I recently had a revealing conversation with a student at an elite university. He said that when he sets his Tinder radius to 5 miles, about half of the women, mostly other students, said they were “polyamorous” in their bios. Then, when he extended the radius to 15 miles to include the rest of the city and its outskirts, about half of the women were single mothers. The costs created by the luxury beliefs of the former are borne by the latter. Polyamory is the latest expression of sexual freedom championed by the affluent. They are in a better position to manage the complications of novel relationship arrangements. And even if it fails, they have more financial capability, social capital, and time to recover. The less fortunate suffer the damage of the beliefs of the upper class.

Rob Henderson, “Thorstein Veblen’s Theory of the Leisure Class – An Update”, Rob Henderson’s Newsletter, 2023-01-29.

May 6, 2023

QotD: The luxury beliefs of the leisure class

April 22, 2023

Epistemology, but not using the term “epistemology” because reasons

At Founding Questions, Severian responds to a question about the teaching profession in our ever-more-self-beclowning world:

Proposed coat of arms for Founding Questions by “urbando”.

The Latin motto translates to “We are so irrevocably fucked”.

I know a guy who teaches at a SPLAC where they have some “common core” curriculum — everyone in the incoming class, regardless of major, is required to take a few set courses, and all of them revolve around that question. How do we know what we know? So it’s not just a public school / grade school thing. And epistemology IS fascinating … almost as fascinating as the fact that the word “epistemology” never shows up on my buddy’s syllabi.

I think the problem is especially acute for “teachers”, because the Dogmas of the Church of Woke are so ludicrous that it takes real effort to not see the obvious absurdities. It takes real hermeneutical talent to reconcile the most obvious empirical facts with the Dogmas of the Faith, and very few kids have it. So they either completely check out, or just repeat the Dogmas by rote.

The other problem has to do with teacher training. I know a little bit about this (or, at least, a little bit about how it stood 15 years ago), because a) I was “dating” an Education Theory person, and b) I went through Flyover State’s online ~~indoctrination~~ education Certificate Program.

The former is just awful, even by ivory tower standards, so I’ll spare you the gory details. Just know that however bad you think “social science” is, it’s way way worse. The “certification program” was something I got my Department to sign off on — I was the guy who “knew computers” (which is just hilarious if you know me in real life; I’m all but tech-illiterate), so I got assigned all the online classes, which the Department fought against with all its might, and only grudgingly agreed to offer when the Big Cheese threatened to pull some $$$ if they didn’t. So even though I wouldn’t have been eligible for all the benefits and privileges thereunto appertaining, me being a lowly adjunct, none of the real eggheads could bring themselves to do it, so I was the guy.

The Education Department, being marginally smarter than the History Department, saw which way the wind was blowing, so they started offering big money incentives for professors to sign up for this “online teaching certification program” they’d dreamed up. No, really: It was something like $5000 for a two-week course, which really meant “two hours a day, four days a week,” because academia works like that (it’s a 24/7 job, remember — 24 hours a week, 7 months a year). By my math, $5000 by 16 hours is something like $300 an hour, so hell yeah I signed right up …

It was torture. Sheer torture. I knew it would be, but goddamn, man. Take the worst corporate struggle session you’ve ever been forced to attend, put it on steroids. Make the “facilitator” — not professor, by God, not in Education! — the kind of sadistic bastard that got kicked out of Viet Cong prison guard school for going overboard. Add to that the particularities of the Education Department, where all of academia’s worst pathologies are magnified. You know how egghead prose hews to the rule “Why use 5 words when 50 will do?” In the Ed Department, it’s “why use 50 when 500 will do.” The first two hours of the first two struggle sessions were devoted to Bloom’s Taxonomy of Success Words, and click that link if you dare.

I know y’all won’t believe this, but I’ll tell you anyway: I spent more than an hour rewriting the “objectives” section of my class syllabus to conform to that nonsense. Instead of just “Students will learn about the origins, events, and outcomes of the US Civil War,” I had to say shit like “Engaging with the primary sources” and “evaluating historical arguments,” and yes, the “taxonomy” buzzwords had to be both underlined and italicized, for reasons I no longer remember, but which were of course retarded.

Given that this is fairly typical Ed Major coursework, is it any surprise that they have no idea what learning is?

April 13, 2023

QotD: The real purpose of modern-day official commissions

After the riots were over, the government appointed a commission to enquire into their causes. The members of this commission were appointed by all three major political parties, and it required no great powers of prediction to know what they would find: lack of opportunity, dissatisfaction with the police, bla-bla-bla.

Official enquiries these days do not impress me, certainly not by comparison with those of our Victorian forefathers. No one who reads the Blue Books of Victorian Britain, for example, can fail to be impressed by the sheer intellectual honesty of them, their complete absence of any attempt to disguise an often appalling reality by means of euphemistic language, and their diligence in collecting the most disturbing information. (Marx himself paid tribute to the compilers of these reports.)

I was once asked to join an enquiry myself. It was into an unusual spate of disasters in a hospital. It was clear to me that, although they had all been caused differently, there was an underlying unity to them: they were all caused by the laziness or stupidity of the staff, or both. By the time the report was written, however (and not by me), my findings were so wrapped in opaque verbiage that they were quite invisible. You could have read the report without realising that the staff of the hospital had been lazy and stupid; in fact, the report would have left you none the wiser as to what had actually happened, and therefore what to do to ensure that it never happened again. The purpose of the report was not, as I had naively supposed, to find the truth and express it clearly, but to deflect curiosity and incisive criticism in which it might have resulted if translated into plain language.

Theodore Dalrymple, “It’s a riot”, New English Review, 2012-04.

April 8, 2023

The underlying philosophy of J.R.R. Tolkien’s work

David Friedman happened upon an article he wrote 45 years ago on the works of J.R.R. Tolkien:

The success of J.R.R. Tolkien is a puzzle, for it is difficult to imagine a less contemporary writer. He was a Catholic, a conservative, and a scholar in a field, philology, that many of his readers had never heard of. The Lord of the Rings fitted no familiar category; its success virtually created the field of “adult fantasy”. Yet it sold millions of copies and there are tens, perhaps hundreds, of thousands of readers who find Middle Earth a more important part of their internal landscape than any other creation of human art, who know the pages of The Lord of the Rings the way some Christians know the Bible.

Humphrey Carpenter’s recent Tolkien: A Biography, published by Houghton Mifflin, is a careful study of Tolkien’s life, including such parts of his internal life as are accessible to the biographer. His admirers will find it well worth reading. We learn details, for instance, of Tolkien’s intense, even sensual love for language; by the time he entered Oxford, he knew not only French, German, Latin, and Greek, but Anglo-Saxon, Gothic and Old Norse. He began inventing languages for the sheer pleasure of it and when he found that a language requires a history and a people to speak it he began inventing them too. The language was Quenya, the people were the elves. And we learn, too, some of the sources of his intense pessimism, of his feeling that the struggle against evil is desperate and almost hopeless and all victories at best temporary.

Carpenter makes no attempt to explain his subject’s popularity but he provides a few clues, the most interesting of which is Tolkien’s statement of regret that the English had no mythology of their own and that at one time he had hoped to create one for them, a sort of English Kalevala. That attempt became The Silmarillion, which was finally published three years after the author’s death; its enormous sales confirm Tolkien’s continuing popularity. One of the offshoots of The Silmarillion was The Lord of the Rings.

What is the hunger that Tolkien satisfies? George Orwell described the loss of religious belief as the amputation of the soul and suggested that the operation, while necessary, had turned out to be more than a simple surgical job. That comes close to the point, yet the hunger is not precisely for religion, although it is for something religion can provide. It is the hunger for a moral universe, a universe where, whether or not God exists, whether or not good triumphs over evil, good and evil are categories that make sense, that mean something. To the fundamental moral question “why should I do (or not do) something”, two sorts of answers can be given. One answer is “the reason you feel you should do this thing is because your society has trained you (or your genes compel you) to feel that way”. But that answers the wrong question. I do not want to know why I feel that I should do something; I want to know why (and whether) I should do it. Without an answer to that second question all action is meaningless. The intellectual synthesis in which most of us have been reared — liberalism, humanism, whatever one may call it — answers only the first question. It may perhaps give the right answer but it is the wrong question.

The Lord of the Rings is a work of art, not a philosophical treatise; it offers, not a moral argument, but a world in which good and evil have a place, a world whose pattern affirms the existence of answers to that second question, answers that readers, like the inhabitants of that world, understand and accept. It satisfies the hunger for a moral pattern so successfully that the created world seems to many more real, more right, than the world about them.

Does this mean, as Tolkien’s detractors have often said, that everything in his books is black and white? If so, then a great deal of literature, including all of Shakespeare, is black and white. Nobody in Hamlet doubts that poisoning your brother in order to steal his wife and throne is bad, not merely imprudent or antisocial. But the existence of black and white does not deny the existence of intermediate shades; gray can be created only if black and white exist to be mixed. Good and evil exist in Tolkien’s work but his characters are no more purely good or purely evil than are Shakespeare’s.

March 29, 2023

QotD: Sacrifice

As a terminology note: we typically call a living thing killed and given to the gods a sacrificial victim, while objects are votive offerings. All of these terms have useful Latin roots: the word “victim” – which now means anyone who suffers something – originally meant only the animal used in a sacrifice as the Latin victima; the assistant in a sacrifice who handled the animal was the victimarius. Sacrifice comes from the Latin sacrificium, with the literal meaning of “the thing made sacred”, since the sacrificed thing becomes sacer (sacred) as it now belongs to a god, a concept we’ll link back to later. A votivus in Latin is an object promised as part of a vow, often deposited in a temple or sanctuary; such an item, once handed over, belonged to the god and was also sacer.

There is some concern for the place and directionality of the gods in question. Sacrifices for gods that live above are often burnt so that the smoke wafts up to where the gods are (you see this in Greek and Roman practice, as well as in Mesopotamian religion, e.g. in Atrahasis, where the gods “gather like flies” about a sacrifice; it seems worth noting that in Temple Judaism, YHWH (generally thought to dwell “up”) gets burnt offerings too), while sacrifices to gods in the earth (often gods of death) often go down, through things like libations (a sacrifice of liquid poured out).

There is also concern for the right animals and the time of day. Most gods receive ritual during the day, but there are variations – Roman underworld and childbirth deities (oddly connected) seem to have received sacrifices by night. Different animals might be offered, in accordance with what the god preferred, the scale of the request, and the scale of the god. Big gods, like Jupiter, tend to demand prestige, high value animals (Jupiter’s normal sacrifice in Rome was a white ox). The color of the animal would also matter – in Roman practice, while the gods above typically received white colored victims, the gods below (the di inferi but also the di Manes) darkly colored animals. That knowledge we talked about was important in knowing what to sacrifice and how.

Now, why do the gods want these things? That differs, religion to religion. In some polytheistic systems, it is made clear that the gods require sacrifice and might be diminished, or even perish, without it. That seems to have been true of Aztec religion, particularly sacrifices to Quetzalcoatl; it is also suggested for Mesopotamian religion in the Atrahasis where the gods become hungry and diminished when they wipe out most of humanity and thus most of the sacrifices taking place. Unlike Mesopotamian gods, who can be killed, Greek and Roman gods are truly immortal – no more capable of dying than I am able to spontaneously become a potted plant – but the implication instead is that they enjoy sacrifices, possibly the taste or even simply the honor it brings them (e.g. Homeric Hymn to Demeter 310-315).

We’ll come back to this idea later, but I want to note it here: the thing being sacrificed becomes sacred. That means it doesn’t belong to people anymore, but to the god themselves. That can impose special rules for handling, depositing and storing, since the item in question doesn’t belong to you anymore – you have to be extra-special-careful with things that belong to a god. But I do want to note the basic idea here: gods can own property, including things and even land – the temple belongs not to the city but to the god, for instance. Interestingly, living things, including people can also belong to a god, but that is a topic for a later post. We’re still working on the basics here.

Bret Devereaux, “Collections: Practical Polytheism, Part II: Practice”, A Collection of Unmitigated Pedantry, 2019-11-01.

March 10, 2023

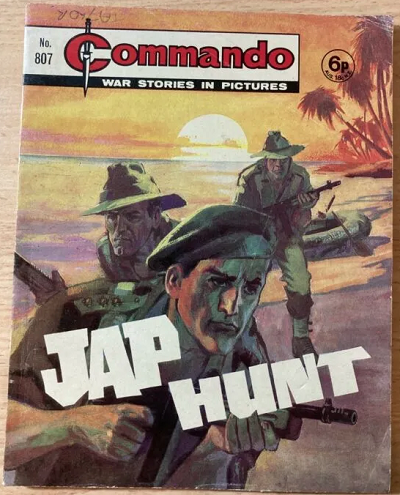

The evolution of a slur

Scott Alexander traces the reasons that we can comfortably call British people “Brits” but avoid using the similar contraction “Japs” for Japanese people:

Someone asks: why is “Jap” a slur? It’s the natural shortening of “Japanese person”, just as “Brit” is the natural shortening of “British person”. Nobody says “Brit” is a slur. Why should “Jap” be?

My understanding: originally it wasn’t a slur. Like any other word, you would use the long form (“Japanese person”) in dry formal language, and the short form (“Jap”) in informal or emotionally charged language. During World War II, there was a lot of informal emotionally charged language about Japanese people, mostly negative. The symmetry broke. Maybe “Japanese person” was used 60-40 positive vs. negative, and “Jap” was used 40-60. This isn’t enough to make a slur, but it’s enough to make a vague connotation. When people wanted to speak positively about the group, they used the slightly-more-positive-sounding “Japanese people”; when they wanted to speak negatively, they used the slightly-more-negative-sounding “Jap”.

At some point, someone must have commented on this explicitly: “Consider not using the word ‘Jap’, it makes you sound hostile”. Then anyone who didn’t want to sound hostile to the Japanese avoided it, and anyone who did want to sound hostile to the Japanese used it more. We started with perfect symmetry: both forms were 50-50 positive negative. Some chance events gave it slight asymmetry: maybe one form was 60-40 negative. Once someone said “That’s a slur, don’t use it”, the symmetry collapsed completely and it became 95-5 or something. Wikipedia gives the history of how the last few holdouts were mopped up. There was some road in Texas named “Jap Road” in 1905 after a beloved local Japanese community member: people protested that now the word was a slur, demanded it get changed, Texas resisted for a while, and eventually they gave in. Now it is surely 99-1, or 99.9-0.1, or something similar. Nobody ever uses the word “Jap” unless they are either extremely ignorant, or they are deliberately setting out to offend Japanese people.

This is a very stable situation. The original reason for concern — World War II — is long since over. Japanese people are well-represented in all areas of life. Perhaps if there were a Language Czar, he could declare that the reasons for forbidding the word “Jap” are long since over, and we can go back to having convenient short forms of things. But there is no such Czar. What actually happens is that three or four unrepentant racists still deliberately use the word “Jap” in their quest to offend people, and if anyone else uses it, everyone else takes it as a signal that they are an unrepentant racist. Any Japanese person who heard you say it would correctly feel unsafe. So nobody will say it, and they are correct not to do so. Like I said, a stable situation.

He also explains how and when (and how quickly) the use of the word “Negro” became extremely politically incorrect:

Slurs are like this too. Fifty years ago, “Negro” was the respectable, scholarly term for black people, used by everyone from white academics to Malcolm X to Martin Luther King. In 1966, Black Panther leader Stokely Carmichael said that white people had invented the term “Negro” as a descriptor, so people of African descent needed a new term they could be proud of, and he was choosing “black” because it sounded scary. All the pro-civil-rights white people loved this and used the new word to signal their support for civil rights. Soon, using “Negro” actively became a sign that you didn’t support civil rights, and now it’s a slur and society demands that politicians resign if they use it. Carmichael said — in a completely made up way that nobody had been thinking of before him — that “Negro” was a slur — and because people believed him it became true.

February 22, 2023

Toward a taxonomy of military bullshit

Bruce Gudmundsson linked to this essay from “Shady Maples”, a serving officer in the Canadian Army, on the general topic of bullshit in the military:

Armies, as a rule, are quagmires of bullshit. The Canadian Army is no exception. We accept that bullshit is an occupational hazard in the Army, but it’s a hazard that we struggle to manage and it’s one that threatens our effectiveness as a force.

In order to understand bullshit we need a taxonomy, that is, a map of bullshit and the common varieties found in the wild. Using our taxonomy, we can develop diagnostic tools to detect bullshit and expose its corrosive effects on our integrity and professional culture. I assume that bullshit is also a hazard in other environments and government departments, but since my experience is in the land force I will be focusing my attention on that.

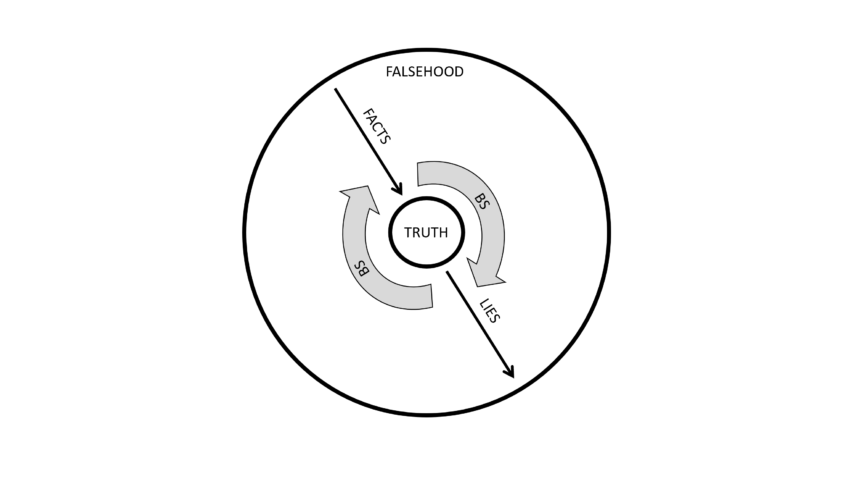

The subject of bullshit entered the mainstream in 2005 when Princeton University Press issued a hardcover edition of Harry G. Frankfurt’s 1988 essay “On Bullshit”. Frankfurt doesn’t mince words: “[one] of the most salient features of our culture is that there is so much bullshit. Everyone knows this. Each of us contributes his share.” He argues that even though we treat bullshit as a lesser moral offence than lying, it’s actually more harmful to a culture of truth. I believe this wholeheartedly, for reasons which will become clear.

In Frankfurt’s view, even though a liar and a bullshitter both aim to deceive their targets, bullshitting and lying require different mental states. In order to lie, someone must believe that they know the truth and intentionally make a false account of it. For example, if you believe that today is Monday but today is in fact Tuesday, and somebody asks you what day it is, you’re not a liar if you say “today is Monday”. You’re not lying because you really do believe that today is Monday, even though this belief is incorrect. In order to lie, you have to intentionally make a statement that is contrary to the truth as you understand it. In this example, you would be lying if you said “today is Tuesday” while believing that today is actually Monday. You would be accidentally correct, but you would still be lying because you are providing a false account of what you believe is true.

This means that lying actually requires a tacit respect for the truth on the part of the liar. The liar has to at least acknowledge the existence of truth in order to avoid it. To provide a false account of the truth, the liar must first believe in the truth.

The bullshitter, by contrast, does not operate under this constraint. Bullshit according to Frankfurt is speech without any regard for the truth whatsoever. To Frankfurt, “[the bullshitter] does not care whether the things he says describe reality correctly. He just picks them out, or makes them up, to suit his purpose.” Bullshit, Frankfurt argues, is speech that is disconnected from a concern for truth. The bullshitter will say anything if it helps them achieve their desired ends.

[…]

Let’s move on to the topic of “bull”, which is discussed at length by British psychologist Norman F. Dixon, author of On the Psychology of Military Incompetence. Dixon beat Frankfurt to the punch by publishing his own theory of bullshit in 1976, twelve years before “On Bullshit”. Dixon is a Freudian psychologist writing in the 1970s, so I am skeptical of the scientific merit of his book. Nonetheless, he provides an interesting outsider’s perspective of British military culture from the Crimean War up to the Second World War and how that culture enabled terrible officers while punishing competent ones (spoiler: aristocrats and nepotism are bad for meritocracy).

There is an entire chapter of the book about bullshit as a contributing factor to military incompetence. Dixon attributes the origin of the word “bullshit” to Australian soldiers, who in 1916 “were evidently so struck by the excessive spit and polish of the British Army that they felt moved to give it a label”. His theory of the Australian origin of bullshit is supported by Australian philosopher David Stove, who believed that it’s a signature national expression.

[…]

Canadian political philosopher G. A. Cohen added some depth to the discourse when he responded to Frankfurt in “Deeper into Bullshit”. Cohen wasn’t satisfied that Frankfurt had explored the full range of bullshit as a social phenomenon. Frankfurt anchors his definition of bullshit in the intention or mental state of the speaker (the bullshitter) whose lack of concern for truth is what makes their statements bullshit. Cohen responds to this by pointing out that there are many people who honestly profess their bullshit beliefs. Reading this brought me back to my undergrad days. I had a TA in a 300-level philosophy course who claimed that he doubted his own existence. Personally, I wondered whether he would still doubt his own existence if I punched him in the balls for spewing such performative bullshit in class. So I think that Cohen makes a good point: there are bullshitters who honestly believe their own bullshit.

Cohen’s argument is squarely aimed at a certain style of academic writing, which he calls “unclarifiable unclarity.” These are intentionally vague statements which cannot be clarified without distorting their apparent meaning or dropping the veil of profundity altogether. If you want an example, you can try reading Hegel or a postmodernist philosopher, or just save yourself the effort and click on this postmodern bullshit generator (trust me, it’s indistinguishable from the real thing). Pennycook et al. label statements of this type as “pseudo-profound bullshit”. Hilariously, they ran a series of experiments to see if test subjects would find the appearance of profound truth in both real and algorithmically-generated Deepak Chopra tweets. The result, disappointingly, was yes.

Cohen adds a fourth type of bullshit to our taxonomy: “irretrievably speculative comment”. Basically, when someone is arguing for a proposition which they have no way of knowing is true or false, or they put forward an argument that’s completely unsupported by readily available evidence, then that argument is bullshit.

[…]

Still with me? So far we’ve identified and defined four types of bullshit (BS):

BS1 (Frankfurt): propositions made without concern for truth.

BS2 (Dixon): ritualistic activity which doesn’t serve its stated aim or justification.

BS3 (Cohen/Pennycook): unclarifiable unclarity aka pseudo-profound bullshit.

BS4 (Cohen): speculation beyond what’s reasonably permitted by the evidence.

The common denominator of all four types of bullshit is their disconnection from reality. Bullshit statements don’t enhance our understanding and bullshit activity doesn’t get us any closer to achieving our goals in the real world. Bullshit doesn’t necessarily move us further away from truth, like lies do, but it certainly doesn’t get us any closer to it either. Essentially, bullshit lowers the signal-to-noise ratio of discourse.

February 13, 2023

QotD: Oaths in pre-modern cultures

First, some caveats. This is really a discussion of oath-taking as it existed (and exists) around the Mediterranean and Europe. My understanding is that the basic principles are broadly cross-cultural, but I can’t claim the expertise in practices south of the Sahara or East of the Indus to make that claim with full confidence. I am mostly going to stick to what I know best: Greece, Rome and the European Middle Ages. Oath-taking in the pre-Islamic Near East seems to follow the same set of rules (note Bachvarova’s and Connolly’s articles in Horkos), but that is beyond my expertise, as is the Middle East post-Hijra.

Second, I should note that I’m drawing my definition of an oath from Alan Sommerstein’s excellent introduction in Horkos: The Oath in Greek Society (2007), edited by A. Sommerstein and J. Fletcher – one of the real “go-to” works on oath-taking in the ancient Mediterranean world. As I go, I’ll also use some medieval examples to hopefully convince you that the same basic principles apply to medieval oaths, especially the all-important oaths of fealty and homage.

(Pedantry note: now you may be saying, “wait, an introduction? Why use that?” As of when I last checked, there is no monograph (single author, single topic) treatment of oaths. Rather, Alan Sommerstein has co-authored a set of edited collections – Horkos (2007, with J. Fletcher), Oath and State (2013, with A. Bayliss) and Oaths and Swearing (2014, with I. Torrance). This can make Greek oaths a difficult topic to get a basic overview of, as opposed to a laundry list of the 101 ancient works you must read for examples. Discussions of Roman oaths are, if anything, even less welcoming to the beginner, because they intersect with the study of Roman law. I think the expectation has always been that the serious student of the classics would have read so many oaths in the process of learning Latin and Greek to develop a sort of instinct for the cultural institution. Nevertheless, Sommerstein’s introduction in Horkos presents my preferred definition of the structure of an oath.)

Alright – all of the quibbling out of the way: onward!

So what is an Oath? Is it the same as a Vow?

Ok, let’s start with definitions. In modern English, we often use oath and vow interchangeably, but they are not (usually) the same thing. Divine beings figure in both kinds of promises, but in different ways. In a vow, the god or gods in question are the recipients of the promise: you vow something to God (or a god). By contrast, an oath is made typically to a person and the role of the divine being in the whole affair is a bit more complex.

(Etymology digression: the word “oath” comes to us by way of Old English āþ (pronounced “ath” with a long ‘a’) and has close cousins in Dutch “Eed” and German “Eid”. The word vow comes from Latin (via Middle English, via French), from the word votum. A votum is specifically a gift to a god in exchange for some favor – the gift can be in the present tense or something promised in the future. By contrast, the Latin word for oath is ius (it has a few meanings) and to swear an oath is the verb iuro (thus the legal phrase “ius iurandum” – literally “the oath to be sworn”). This Latin distinction is preserved into the English usage, where “vow” retains its Latin meaning, and the word “oath” usurps the place of Latin ius (along with other words for specific kinds of oaths in Latin, e.g. sacramentum)).

In a vow, the participant promises something – either in the present or the future – to a god, typically in exchange for something. This is why we talk of an oath of fealty or homage (promises made to a human), but a monk’s vows. When a monk promises obedience, chastity and poverty, he is offering these things to God in exchange for grace, rather than to any mortal person. Those vows are not to the community (though it may be present), but to God (e.g. Benedict in his Rule notes that the vow “is done in the presence of God and his saints to impress on the novice that if he ever acts otherwise, he will surely be condemned by the one he mocks” (RB 58.18)). Note that a physical thing given in a vow is called a votive (from that Latin root).

(More digressions: Why do we say “marriage vows” in English? Isn’t this a promise to another human being? I suspect this usage – functionally a “frozen” phrase – derives from the assumption that the vows are, in fact, not a promise to your better half, but to God to maintain. After all, the Latin Church held – and the Catholic Church still holds – that a marriage cannot be dissolved by the consent of both parties (unlike oaths, from which a person may be released with the consent of the recipient). The act of divine ratification makes God a party to the marriage, and thus the promise is to him. Thus a vow, and not an oath.)

So again, a vow is a promise to a divinity or other higher power (you can make vows to heroes and saints, for instance), whereas an oath is a promise to another human, which is somehow enforced, witnessed or guaranteed by that higher power.

An example of this important distinction being handled in a very awkward manner is the “oath” of the Night’s Watch in Game of Thrones (delivered in S1E7, but taken, short a few words, verbatim from the books). The recruits call out to … someone … (they never name who, which as we’ll see, is a problem) to “hear my words and bear witness to my vow”. Except it’s not clear to me that this is a vow, so much as an oath. The supernatural being you are vowing something to does not bear witness because they are the primary participant – they don’t witness the gift, they receive it.

I strongly suspect that what Martin is riffing off of here are the religious military orders of the Middle Ages (who did frequently take vows), but if this is a vow, it raises serious questions. It is absolutely possible to vow a certain future behavior – to essentially make yourself the gift – but who are they vowing to? The tree? It may well be “the Old Gods” who are supposed to be both nameless and numerous (this is, forgive me, not how ancient paganism worked – am I going to have to write that post too?) and who witness things (such as the Pact, itself definitely an oath, through the trees), but if so, surely you would want to specify that. Societies that do votives – especially when there are many gods – are often quite concerned that gifts might go awry. You want to be very specific as to who, exactly, you are vowing something to.

This is all the more important given that (as in the books) the Night’s Watch oath may be sworn in a sept as well as to a Weirwood tree. It wouldn’t do to vow yourself to the wrong gods! More importantly, the interchangeability of the gods in question points very strongly to this being an oath. Gods tend to be very particular about the votives they will receive; one can imagine saying “swear by whatever gods you have here” but not “vow yourself to whatever gods you have here”. Who is to say the local gods take such gifts?

Moreover, while they pledge their lives, they aren’t receiving anything in return. Here I think the problem may be that we are so used to the theologically obvious request of Christian vows (salvation and the life after death) that it doesn’t occur to us that you would need to specify what you get for a vow. But the Old Gods don’t seem to be in a position to offer salvation. Votives to gods in polytheistic systems almost always follow the do ut des system (lit. “I give, that you might give”). Things are not offered just for the heck of it – something is sought in return. And if you want that thing, you need to say it. Jupiter is not going to try to figure it out on his own. If you are asking the Old Gods to protect you, or the wall, or mankind, you need to ask.

(Pliny the Elder puts it neatly, declaring, “of course, either to sacrifice without prayer or to consult the gods without sacrifice is useless” (Nat. Hist. 28.3). Prayer here (Latin: precatio) really means “asking for something” – as in the sense of “I pray thee (or ‘prithee’) tell me what happened?” And to be clear, the connection of Christian religious practice to the do ut des formula of pre-Christian paganism is a complex theological question better addressed to a theologian or church historian.)

The scene makes more sense as an oath – the oath-takers are swearing to the rest of the Night’s Watch to keep these promises, with the Weirwood Trees (and through them, the Old Gods – although again, they should specify) acting as witnesses. As a vow, too much is up in the air and the idea that a military order would permit its members to vow themselves to this or that god at random is nonsense. For a vow, the recipient – the god – is paramount.

Bret Devereaux, “Collections: Oaths! How do they Work?”, A Collection of Unmitigated Pedantry, 2019-06-28.

December 30, 2022

The continued relevance of Orwell’s “Politics and the English Language”

In Quillette, George Case praises Orwell’s 1946 essay “Politics and the English Language” (which was one of the first essays that convinced me that Orwell was one of the greatest writers of the 20th century), and shows how it still has relevance today:

George Orwell’s “Politics and the English Language” is widely considered one of the greatest and most influential essays ever written. First published in Britain’s Horizon in 1946, it has since been widely anthologized and is always included in any collection of the writer’s essential nonfiction. In the decades since its appearance, the article has been quoted by many commentators who invoke Orwell’s literary and moral stature in support of its continued relevance. But perhaps the language of today’s politics warrants some fresh criticisms that even the author of Nineteen Eighty-Four and Animal Farm could not have conceived.

“Politics and the English Language” addressed the jargon, double-talk, and what we would now call “spin” that had already distorted the discourse of the mid-20th century. “In our time,” Orwell argued, “political speech and writing are largely the defence of the indefensible. … Thus political language has to consist largely of euphemism, question-begging and sheer cloudy vagueness. … Political language — and with variations this is true of all political parties, from Conservatives to Anarchists — is designed to make lies sound truthful and murder respectable, and to give an appearance of solidity to pure wind.” Those are the sentences most cited whenever a modern leader or talking head hides behind terms like “restructuring” (for layoffs), “visiting a site” (for bombing), or “alternative facts” (for falsehoods). In his essay, Orwell also cut through the careless, mechanical prose of academics and journalists who fall back on clichés — “all prefabricated phrases, needless repetitions, and humbug and vagueness generally”.

These objections still hold up almost 80 years later, but historic changes in taste and technology mean that they apply to a new set of unexamined truisms and slogans regularly invoked less in oratory or print than through televised soundbites, online memes, and social media: the errors of reason and rhetoric identified in “Politics and the English Language” can be seen in familiar examples of empty platitudes, stretched metaphors, and meaningless cant which few who post, share, like, and retweet have seriously parsed. Consider how the following lexicon from 2023 is distinguished by the same question-begging, humbug, and sheer cloudy vagueness exposed by George Orwell in 1946.

[…]

Climate, [mis- and dis-]information, popular knowledge, genocide, land claims, sexual assault, and racism are all serious topics, but politicizing them with hyperbole turns them into trite catchphrases. The language cited here is largely employed as a stylistic template by the outlets who relay it — in the same way that individual publications will adhere to uniform guidelines of punctuation and capitalization, so too must they now follow directives to always write rape culture, stolen land, misinformation, or climate emergency in place of anything more neutral or accurate. Sometimes, as with cultural genocide or systemic racism, the purpose appears to be in how the diction of a few extra syllables imparts gravity to the premise being conveyed, as if a gigantic whale is a bigger animal than a whale, or a horrific murder is a worse crime than a murder.

Elsewhere, the words strive to alter the parameters of an issue so that its actual or perceived significance is amplified a little longer. “Drunk driving” will always be a danger if the legal limits of motorists’ alcohol levels are periodically lowered; likewise, relations between the sexes and a chaotic range of public opinion will always be problematic if they can be recast as rape culture, hate, or disinformation. This lingo typifies the parroted lines and reflexive responses of political communication in the 21st century.

In “Politics and the English Language”, George Orwell’s concluding lesson was not just that parroted lines and reflexive responses were aesthetically bad, or that they revealed professional incompetence in whoever crafted them, but that they served to suppress thinking. “The invasion of one’s mind by ready-made phrases … can only be prevented if one is constantly on guard against them, and every such phrase anaesthetizes a portion of one’s brain”, he wrote. He is still right: glib, shallow expression reflects, and will only perpetuate, glib, shallow thought, achieving no more than to give an appearance of solidity to pure wind.

December 28, 2022

QotD: Collective guilt

As for the concept of collective guilt, I personally think that it is totally unjustified to hold one person responsible for the behaviour of another person or a collective of persons. Since the end of World War Two I have not become weary of publicly arguing against the collective guilt concept. Sometimes, however, it takes a lot of didactic tricks to detach people from their superstitions. An American woman once confronted me with the reproach, “How can you still write some of your books in German, Adolf Hitler’s language?” In response, I asked her if she had knives in her kitchen, and when she answered that she did, I acted dismayed and shocked, exclaiming, “How can you still use knives after so many killers have used them to stab and murder their victims?” She stopped objecting to my writing books in German.

Viktor Frankl, Man’s Search for Meaning, 1946.

December 27, 2022

Coming of the Sea Peoples: Part 5 – The Hittites

seangabb

Published 6 Jul 2021

[Unfortunately, parts 3 and 4 of this lecture series were not uploaded due to sound issues with the recording.]

The Late Bronze Age is a story of collapse. From New Kingdom Egypt to Hittite Anatolia, from the Assyrian Empire to Babylonia and Mycenaean Greece, the coming of the Sea Peoples is a terror that threatens the end of all things. Between April and July 2021, Sean Gabb explored this collapse with his students. Here is one of his lectures. All student contributions have been removed.

December 22, 2022

December 18, 2022

QotD: Citation systems and why they were developed

For this week’s musing I wanted to talk a bit about citation systems. In particular, you all have no doubt noticed that I generally cite modern works by the author’s name, their title and date of publication (e.g. G. Parker, The Army of Flanders and the Spanish Road (1972)), but ancient works get these strange almost code-like citations (Xen. Lac. 5.3; Hdt. 7.234.2; Thuc. 5.68; etc.). And you may ask, “What gives? Why two systems?” So let’s talk about that.

The first thing that needs to be noted here is that systems of citation are for the most part a modern invention. Pre-modern authors will, of course, allude to or reference other works (although ancient Greek and Roman writers have a tendency to flex on the reader by omitting the name of the author, often just alluding to a quote of “the poet” where “the poet” is usually, but not always, Homer), but they did not generally have systems of citation as we do.

Instead most modern citation systems in use for modern books go back at most to the 1800s, though these are often standardizations of systems which might go back a bit further still. Still, the Chicago Manual of Style – the standard style guide and citation system for historians working in the United States – was first published only in 1906. Consequently its citation system is built for the facts of how modern publishing works. In particular, we publish books in codices (that is, books with pages) with numbered pages which are typically kept constant in multiple printings (including being kept constant between soft-cover and hardback versions). Consequently if you can give the book, the edition (where necessary), the publisher and a page number, any reader seeing your citation can notionally go get that edition of the book and open to the very page you were looking at and see exactly what you saw.

Of course this breaks down a little with mass-market fiction books that are often printed in multiple editions with inconsistent pagination (thus the endless frustration with trying to cite anything in A Song of Ice and Fire; the fan-made chapter-based citation system for a work without numbered or uniquely named chapters is, I must say, painfully inadequate), but in a scholarly rather than wiki context, one can just pick a specific edition, specify it with the facts of publication and use those page numbers.

However the systems for citing ancient works or medieval manuscripts are actually older than consistent page numbers, though they do not reach back into antiquity or even really much into the Middle Ages. As originally published, ancient works couldn’t have static page numbers – had they existed yet, which they didn’t – for a multitude of reasons: for one, being copied by hand, the pagination was likely to always be inconsistent. But for ancient works the broader problem was that while they were written in books (libri) they were not written in books (codices). The book as a physical object – pages, bound together at a spine – is more technically called a codex. After all, that’s not the only way to organize a book. Think of a modern ebook for instance: it is a book, but it isn’t a codex! Well, prior to the codex becoming truly common in the third and fourth centuries AD, books were typically written on scrolls (the literal meaning of libri, which later came to mean any sort of book), which notably lack pages – it is one continuous scroll of text.

Of course those scrolls do not survive. Rather, ancient works were copied onto codices during Late Antiquity or the Middle Ages and those survive. When we are lucky, several different “families” of manuscripts for a given work survive (this is useful because it means we can compare those manuscripts to detect transcription errors; alas in many cases we have only one manuscript or one clearly related family of manuscripts which all share the same errors, though such errors are generally rare and small).

With the emergence of the printing press, it became possible to print lots of copies of these works, but that combined with the manuscript tradition created its own problems: which manuscript should be the authoritative text and how ought it be divided? On the first point, the response was the slow and painstaking work of creating critical editions that incorporate the different manuscript traditions: a main text on the page meant to represent the scholar’s best guess at the correct original text with notes (called an apparatus criticus) marking where other manuscripts differ. On the second point it became necessary to impose some kind of organizing structure on these works.

The good news is that most longer classical works already had a system of larger divisions: books (libri). A long work would be too long for a single scroll and so would need to be broken into several; it’s quite clear from an early point that authors were aware of this and took advantage of that system of divisions to divide their works into “books” that had thematic or chronological significance. Where such a standard division didn’t exist, ancient libraries, particularly in Alexandria, had imposed them and the influence of those libraries as the standard sources for originals from which to make subsequent copies made those divisions “canon”. Because those book divisions were thus structurally important, they were preserved through the transition from scrolls to codices (as generally clearly marked chapter breaks), so that the various “books” served as “super-chapters”.

But sub-divisions were clearly necessary – a single liber is pretty long! The earliest system I am aware of for this was the addition of chapter divisions into the Vulgate – the Latin-language version of the Bible – in the 13th century. Versification – breaking the chapters down into verses – in the New Testament followed in the early 16th century (though it seems necessary to note that there were much older systems of text divisions for the Tanakh though these were not always standardized).

The same work of dividing up ancient texts began around the same time as versification for the Bible. One started by preserving the divisions already present – book divisions, but also for poetry line divisions (which could be detected metrically even if they were not actually written out in individual lines). For most poetic works, that was actually sufficient, though for collections of shorter poems it became necessary to put them in a standard order and then number them. For prose works, chapter and section divisions were imposed by modern editors. Because these divisions needed to be understandable to everyone, over time each work developed its standard set of divisions that everyone uses, codified by critical texts like the Oxford Classical Texts or the Bibliotheca Teubneriana (or “Teubners”).

Thus one cited these works not by the page numbers in modern editions, but rather by these early-modern systems of divisions. In particular a citation moves from the larger divisions to the smaller ones, separating each with a period. Thus Hdt. 7.234.2 is Herodotus, Book 7, chapter 234, section 2. In an odd quirk, it is worth noting classical citations are separated by periods, but Biblical citations are separated by colons. Thus John 3:16 but Liv. 3.16. I will note that for readers who cannot access these texts in the original language, these divisions can be a bit frustrating because they are often not reproduced in modern translations for the public (and sometimes don’t translate well, where they may split the meaning of a sentence), but I’d argue that this is just a reason for publishers to be sure to include the citation divisions in their translations.
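For readers who think in code, the largest-to-smallest, period-separated scheme described above can be sketched as a small parser. This is only an illustration under stated assumptions: the names `ClassicalCitation` and `parse_citation` are hypothetical, the pattern only handles the simple "abbreviation(s) plus dotted numbers" shape of the examples in this post, and what each number means (book, chapter, section, line) depends on the work, so the sketch keeps the numbers in order without labelling them.

```python
import re
from typing import NamedTuple, Tuple


class ClassicalCitation(NamedTuple):
    """Hypothetical container for a classical citation."""
    head: str                    # abbreviated author (and work, if given), e.g. "Xen. Lac."
    divisions: Tuple[int, ...]   # numbers from the largest division to the smallest


# Assumed shape: one or more abbreviated words, then period-separated numbers.
_CITATION = re.compile(r"^(?P<head>(?:[A-Za-z]+\.?\s+)+)(?P<nums>\d+(?:\.\d+)*)$")


def parse_citation(text: str) -> ClassicalCitation:
    """Split a citation such as 'Hdt. 7.234.2' into its abbreviation and numbers."""
    match = _CITATION.match(text.strip())
    if match is None:
        # Colon-separated Biblical references (John 3:16) land here on purpose.
        raise ValueError(f"not a period-separated classical citation: {text!r}")
    head = match.group("head").strip()
    divisions = tuple(int(n) for n in match.group("nums").split("."))
    return ClassicalCitation(head, divisions)


if __name__ == "__main__":
    for example in ("Hdt. 7.234.2", "Xen. Lac. 5.3", "Thuc. 5.68", "Liv. 3.16"):
        print(parse_citation(example))
```

Resolving an abbreviation like "Hdt." to Herodotus would still need a lookup against a list such as the OCD's, which this sketch deliberately leaves out.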

That leaves the names of authors and their works. The classical corpus is a “closed” corpus – there is a limited number of works and new ones don’t enter very often (occasionally we find something on a papyrus or lost manuscript, but by “occasionally” I mean “about once in a lifetime”) so the full details of an author’s name are rarely necessary. I don’t need to say “Titus Livius of Patavium” because if I say Livy you know I mean Livy. And in citation as in all publishing, there is a desire for maximum brevity, so given a relatively small number of known authors it was perhaps inevitable that we’d end up abbreviating all of their names. Standard abbreviations are helpful here too, because the languages we use today grew up with these authors’ names and so many of them have different forms in different languages. For instance, in English we call Titus Livius “Livy” but in French they say Tite-Live, Spanish says Tito Livio (as does Italian) and the Germans say Livius. These days the most common standard abbreviation set used in English is the one settled on by the Oxford Classical Dictionary; I am dreadfully inconsistent here but I try to stick to it. The OCD says “Livy”, by the by, but “Liv.” is also a very common short-form of his name you’ll see in citations, particularly because it abbreviates all of the linguistic variations on his name.

And then there is one final complication: titles. Ancient written works rarely include big obvious titles on the front of them and often were known by informal rather than formal titles. Consequently when standardized titles for these works formed (often being systematized during the printing-press era just like the section divisions) they tended to be in Latin, even when the works were in Greek. Thus most works have common abbreviations for titles too (again the OCD is the standard list) which typically abbreviate their Latin titles, even for works not originally in Latin.

And now you know! And you can use the link above to the OCD to decode classical citations you see.

One final note here: manuscripts. Manuscripts themselves are cited by an entirely different system because providence made every part of paleography to punish paleographers for their sins. A manuscript codex consists of folia – individual leaves of parchment (so two “pages” in modern numbering on either side of the same physical page) – which are numbered. Then each folium is divided into recto and verso – front and back. Thus a manuscript is going to be cited by its catalog entry wherever it is kept (each one will have its own system, they are not standardized) followed by the folium (‘f.’) and either recto (r) or verso (v). Typically the abbreviation “MS” leads the catalog entry to indicate a manuscript. Thus this picture of two men fighting is MS Thott.290.2º f.87r (it’s in Det Kongelige Bibliotek in Copenhagen):

MS Thott.290.2º f.87r which can also be found on the inexplicably well maintained Wiktenauer; seriously every type of history should have as dedicated an enthusiast community as arms and armor history.
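The folio-and-side scheme can be sketched in code as well. Again this is a hedged illustration rather than any library's actual convention: `FolioCitation`, `parse_folio` and `modern_page` are hypothetical names, the pattern assumes the "MS shelfmark f.NNr" layout used above (shelfmarks are not standardized, so real catalog entries may well not fit), and the page arithmetic assumes foliation starts at 1 with no unnumbered leaves.

```python
import re
from typing import NamedTuple


class FolioCitation(NamedTuple):
    """Hypothetical container for a manuscript folio citation."""
    shelfmark: str  # catalog entry at the holding library, e.g. "Thott.290.2º"
    folio: int      # number of the physical leaf
    side: str       # "recto" (front) or "verso" (back)


# Assumed layout: "MS", the shelfmark, then "f." with a number and r or v.
_FOLIO = re.compile(r"^MS\s+(?P<shelfmark>.+?)\s+f\.\s*(?P<folio>\d+)(?P<side>[rv])$")


def parse_folio(text: str) -> FolioCitation:
    """Split a citation such as 'MS Thott.290.2º f.87r' into its parts."""
    match = _FOLIO.match(text.strip())
    if match is None:
        raise ValueError(f"not in the assumed 'MS <shelfmark> f.<n><r|v>' form: {text!r}")
    side = "recto" if match.group("side") == "r" else "verso"
    return FolioCitation(match.group("shelfmark"), int(match.group("folio")), side)


def modern_page(citation: FolioCitation) -> int:
    """Rough modern page number: each leaf counts as two pages (assumes foliation from 1)."""
    return 2 * citation.folio - (1 if citation.side == "recto" else 0)


if __name__ == "__main__":
    cited = parse_folio("MS Thott.290.2º f.87r")
    print(cited, modern_page(cited))
```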

And there you go.

Bret Devereaux, “Fireside Friday, June 10, 2022”, A Collection of Unmitigated Pedantry, 2022-06-10.