At Astral Codex Ten, Scott Alexander considers some “traditions” which were clearly invented much more recently than participants might believe:

Two NYC synagogues, one in Moorish Revival style and the other in some form of modernism (you can tell it’s not Brutalism because it’s not all decaying concrete). Like Scott, I vastly prefer the one on the left, even if it isn’t totally faithful to the Moroccan original design.

A: I like Indian food.

B: Oh, so you like a few bites of flavorless rice daily? Because India is a very poor country, and that’s a more realistic depiction of what the average Indian person eats. And India has poor food safety laws – do you like eating in unsanitary restaurants full of rats? And are you condoning Narendra Modi’s fascist policies?

A: I just like paneer tikka.

This is how most arguments about being “trad” sound to me. Someone points out that they like some feature of the past. Then other people object that this feature is idealized, the past wasn’t universally like that, and the past had many other bad things.

But “of the past” is just meant to be a pointer! “Indian food” is a good pointer to paneer tikka even if it’s an idealized view of how Indians actually eat, even if India has lots of other problems!

In the same way, when people say they like Moorish Revival architecture or the 1950s family structure or whatever, I think of these as pointers. It’s fine if the Moors also had some bad buildings, or not all 1950s families were really like that. Everyone knows what they mean!

But there’s another anti-tradition argument which goes deeper than this. It’s something like “ah, but you’re a hypocrite, because the people of the past weren’t trying to return to some idealized history. They just did what made sense in their present environment.”

There were hints of this in Sam Kriss’ otherwise-excellent article about a fertility festival in Hastings, England. A celebrant dressed up as a green agricultural deity figure, paraded through the street, and then got ritually murdered. Then everyone drank and partied and had a good time.

Most of the people involved assumed it derived from the Druids or something. It was popular not just as a good party, but because it felt like a connection to primeval days of magic and mystery. But actually, the Hastings festival dates from 1983. If you really stretch things, it’s loosely based on similar rituals from the 1790s. There’s no connection to anything older than that.

Kriss wrote:

I don’t think the Jack in the Green is worse because it’s not really an ancient fertility rite, but I do think it’s a little worse because it pretends to be … tradition pretends to be a respect for the past, but it refuses to let the past inhabit its own particular time: it turns the past into eternity. The opposite of tradition is invention.

Tradition is fake, and invention is real. Most of the human activity of the past consists of people just doing stuff … they didn’t need a reason. It didn’t need to be part of anything ancient. They were having fun.

I’ve been thinking a lot about [a seagull float in the Hastings parade] … in the procession, the shape of the seagull became totemic. It had the intensity of a symbol, without needing to symbolise anything in particular. Another word for a symbol that burns through any referent is a god. I wasn’t kidding when I said I felt the faint urge to worship it. I don’t think it would be any more meaningful if someone had dug up some thousand-year-old seagull fetishes from a nearby field. It’s powerful simply because of what it is. Invention, just doing stuff, is the nebula that nurses newborn gods.

I’m nervous to ever disagree with Sam Kriss about ancient history, but this strikes me as totally false.

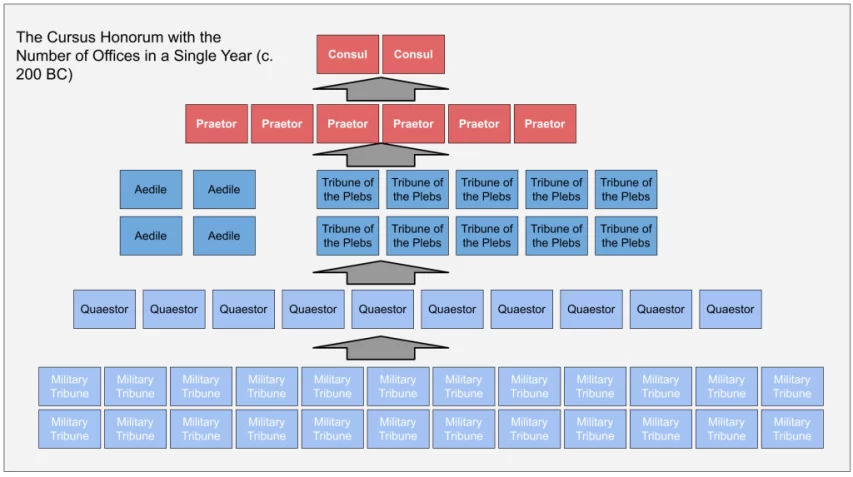

Modern traditionalists look back fondly on Victorian times. But the Victorians didn’t get their culture by just doing stuff without ever thinking of the past. They were writing pseudo-Arthurian poetry, building neo-Gothic palaces, and painting pre-Raphaelite art hearkening back to the early Renaissance. And the Renaissance itself was based on the idea of a re-naissance of Greco-Roman culture. And the Roman Empire at its peak spent half of its cultural energy obsessing over restoring the virtue of the ancient days of the Roman Republic:

Then none was for a party;

Then all were for the state;

Then the great man helped the poor,

And the poor man loved the great:

Then lands were fairly portioned;

Then spoils were fairly sold:

The Romans were like brothers

In the brave days of old.

Now Roman is to Roman

More hateful than a foe,

And the Tribunes beard the high,

And the Fathers grind the low.

As we wax hot in faction,

In battle we wax cold:

Wherefore men fight not as they fought

In the brave days of old.

(of course, this isn’t from a real Imperial Roman poem; it’s by a Victorian Brit pretending to be a later Roman yearning for the grand old days of Republican Rome. And it’s still better than any poem of the last fifty years, fight me.)

As for the ancient Roman Republic, they spoke fondly of a Golden Age when they were ruled by the god Saturn. As far as anyone knows, Saturn is a wholly mythical figure. But if he did exist, there are good odds he inspired his people (supposedly the fauns and nymphs) through stories of some even Goldener Age that came before.