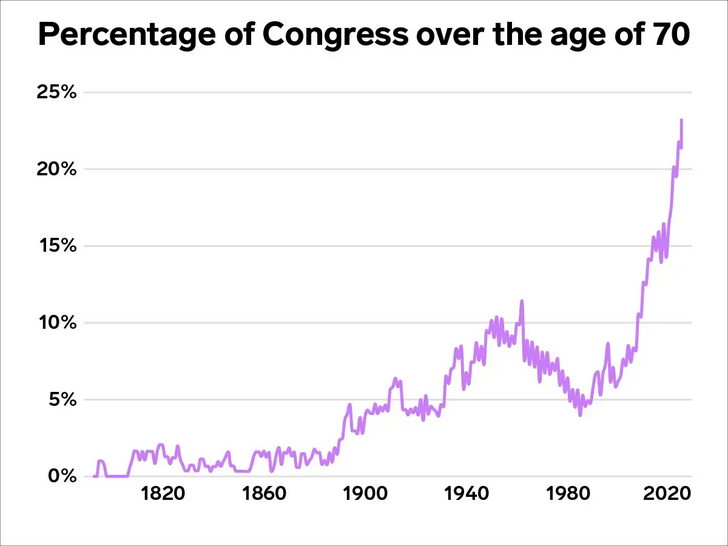

The cynic in me wonders if having AI judges would make the justice system any worse, given the ever-increasing pro-criminal bias on display in courtrooms across North America and Europe:

It’s the question rattling through chambers and law schools. Are we drifting toward a world where the solemn business of justice, liberty, livelihood, and who really owns the back fence is entrusted not to a human in robes but to a chirpy algorithm with a software bug and a 4,000-word disclaimer? Are we handing over judgment itself to machines, or simply giving them the photocopying and hoping they don’t start offering opinions?

Because, depending on whom you ask, AI in law is either (a) the long-delayed democratization of justice for ordinary people or (b) the first act of a constitutional farce in which courts drown beneath PDFs full of nonsense and fake footnotes.

The Machinery Arrives

Beneath the wood paneling and the reassuring thump of legal pomposity, something mildly heretical is afoot. Judges, clerks, and barristers — those high priests of precedent — are quietly feeding their briefs to generative AI, which now whirs away in the background, summarizing, drafting, and rummaging through case law while its human overlords wrestle with the biscuit tin and their consciences.

According to the Judicial Commission of New South Wales (NSW), the robots are already in the building. Its latest handbook cheerfully notes that AI is used for legal analytics, mass document review, “natural language” searching, and predictive modeling, all of which sound terribly sophisticated until you realize they’re essentially Excel spreadsheets with delusions of grandeur. A UNESCO survey adds the clincher: nearly half the world’s judges, prosecutors, and court staff have used generative AI for work, and only 9 percent have had what’s politely called safe-usage training. That is the training in which someone explains that you shouldn’t upload confidential evidence to a cloud-hosted chatbot, or take legal advice from a program that thinks Brown v. Board of Education was a musical.

The Law Society of NSW, in a rare fit of clairvoyance back in 2016, created something called the Future Committee, a name that already sounds like a sci-fi tribunal convened to ban fun. Its brief was to consider what might happen when clients demanded more for less, junior lawyers were burnt to a crisp, and artificial intelligence started politely asking, “Shall I draft that for you?” The conclusion was simple: adapt or be eaten.

Meanwhile, in London, the Law Society of England and Wales skipped the warm-up act and went straight to the apocalypse. Its 2021 report, Images of the Future Worlds Facing the Legal Profession 2020–2030, envisioned a legal world in which routine advice would be swallowed whole by AI portals, full-time lawyers would be reduced to an endangered species, and the survivors would work alongside AI and be mandated to take “performance-enhancing medication in order to optimise their own productivity and effectiveness.” The whole thing reads like 1984 rewritten by a management consultant — right down to the faint violin of self-pity playing somewhere in the distance.

Oh, but those were in Australia and the UK. Surely it’s not that bad in North America? Uh, well …

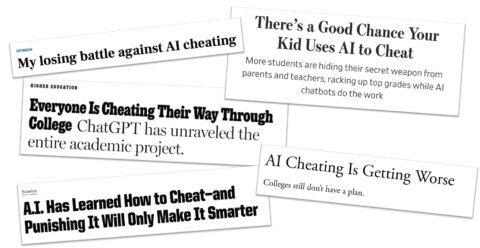

Across the Atlantic, the award for Legal Farce of the Century goes to Mata v. Avianca, Inc. (S.D.N.Y. 2023). In this modern masterpiece of professional self-immolation, a team of lawyers filed court papers quoting six magnificent precedents, among them Varghese v. China Southern Airlines, Martinez v. Delta, and Shaboon v. Egyptair. Unfortunately, none of them existed: not in Westlaw, not in Lexis, not even in the fever dreams of law students. When the judge asked, quite reasonably, to see the cases, counsel could only offer the look of people discovering gravity for the first time. Sanctions followed under Rule 11 for what the court delicately called “subjective bad faith”, which is American for “you made this up”. The ruling is now shown at continuing-education sessions under the optimistic title Let’s Not Do That Again.

The sequel writes itself:

- Massachusetts: A lawyer submitted memoranda stuffed with phantom cases, blamed “the office AI”, and was fined. The judge, channeling divine exasperation, warned that blind acceptance of AI-generated content is not a defense — it’s a lifestyle choice.

- Alabama: Attorneys for the state prison system filed citations to imaginary authorities and were sentenced to the most humiliating punishment known to the bar: writing apology letters to their law school deans and delivering public lectures on ethics.

- California: One overzealous litigator managed to produce a brief in which twenty-one of twenty-three authorities were pure fiction. The court fined him, the press dined out on it, and AI-compliance seminars across America gained a new slide.

Thus, the first commandment of the digital age is: the robot may write it, but the Submit button still belongs to a human — and the human still gets to explain it to the judge.