The central argument of Gelernter’s essay is that random chance is not good enough, even at geologic timescales, to produce the ratchet of escalating complexity we see when we look at living organisms and the fossil record. Most mutations are deleterious and degrade the functioning of the organism; few are useful enough to build on. There hasn’t been enough time for the results we see.

Before getting to that one I want to deal with a subsidiary argument in the essay, that Darwinism is somehow falsified because we don’t observe the slow and uniform evolution that Darwin posited. But we have actually observed evolution (all the way up to speciation) in bacteria and other organisms with rapid lifespans, and we know the answer to this one.

The rate of evolutionary change varies; it increases when environmental changes increase selective pressures on a species and decreases when their environment is stable. You can watch this happen in a Petri dish, even trigger episodes of rapid evolution in bacteria by introducing novel environmental stressors.

Rate of evolution can also increase when a species enters a new, unexploited environment and promptly radiates into subspecies all expressing slightly different modes of exploitation. Darwin himself spotted this happening among Galapagos finches. An excellent recent book, The 10,000 Year Explosion, observes the same acceleration in humans since the invention of agriculture.

Thus, when we observe punctuated equilibrium (long stretches of stable morphology in species punctuated by rapid changes that are hard to spot in the fossil record) we shouldn’t see this as the kind of ineffable mystery that Gelernter and other opponents of Darwinism want to make of it. Rather, it is a signal about the shape of variability in the adaptive environment – also punctuated.

Even huge punctuation marks like the Cambrian explosion, which Gelernter spends a lot of rhetorical energy trying to make into an insuperable puzzle, fall to this analysis. The fossil record is telling us that something happened at the dawn of the Cambrian that let loose a huge fan of possibilities; adaptive radiation, a period of rapid evolution, promptly followed just as it did for the Galapagos finches.

We don’t know what happened, exactly. It could have been something as simple as the oxygen level in seawater going up. Or maybe there was some key biological invention – better structural material for forming hard body parts with would be one obvious one. Both these things, or several other things, might have happened near enough together in time that the effects can’t be disentangled in the fossil record.

The real point here is that there is nothing special about the Cambrian explosion that demands mechanisms we haven’t observed (not just theorized about, but observed) on much faster timescales. It takes an ignotum per æque ignotum kind of mistake to erect a mystery here, and it’s difficult to imagine a thinker as bright as Dr. Gelernter falling into such a trap … unless he wants to.

But Dr. Gelernter makes an even more basic error when he says “The engine that powers Neo-Darwinian evolution is pure chance and lots of time.” That is wrong, or at any rate leaves out an important co-factor and leads to badly wrong intuitions about the scope of the problem and the timescale required to get the results we see. Down that road one ends up doing silly thought experiments like “How often would a hurricane assemble a 747 from a pile of parts?”

Eric S. Raymond, “Contra Gelernter on Darwin”, Armed and Dangerous, 2019-08-14.

August 10, 2023

QotD: The variable pace of evolution

August 9, 2023

America Plans to Incinerate Japan – War Against Humanity 107

World War Two

Published 8 Aug 2023

The Allied Strategic bombing campaign has claimed hundreds of thousands of civilian lives across Europe and has made little real impact on the Axis war machine. Even so, the United States is determined to extend the campaign to Japan. Until now, the vast distances of the Asia-Pacific theatre have protected the imperial enemy. That all changes when the USAAF unleashes the Superfortress.


“Soon, they say, white America will be over”

At Oxford Sour, Christopher Gage considers the prominent media narrative about the inevitability of a “majority-minority” America within the next twenty years:

According to both the Daily Stormer and the Washington Post‘s Jennifer Rubin, white people will be a minority sometime in the 2040s.

In 2008, the U.S. Census Bureau projected that “non-Hispanic” white Americans would fall to 49 percent by the year 2042.

Since then, breathless media-politicos have taken the coming “majority-minority” as gospel. Some on the left celebrate the forthcoming date as the first day of their permanent dominance. Some on the right employ the doomsday date to encourage lunatics to rub out innocent people in a synagogue.

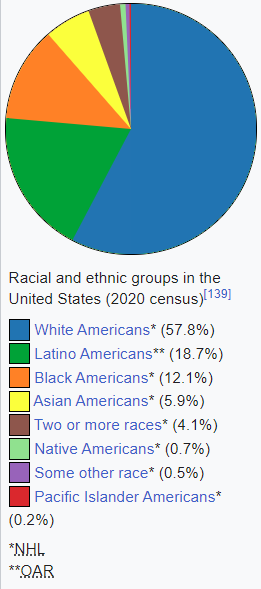

The 2020 census continued the theme. Breathless, lazy journalists took the clickbait. Ominously, they said the white population was “shrinking”. According to the Census Bureau, the white population shrank to just 57.8 percent. Michael Moore did a little dance.

On white nationalist forums, users with names like WhiteGenocide88 were traumatised, and tooling up for war. No doubt, there are a few Robert Bowers with monstrous campaigns lurking around their skulls.

The problem with this theory, or so my Jewish puppet masters instruct me to say, is that it’s nonsense.

Firstly, the white population didn’t “shrink”. Not unless one believes the Jim Crow “one-drop” rule meaning one non-white ancestor renders one non-white. Secondly, the census ignores millions of white Americans who check the “Hispanic” box. These Americans more than cover any white decline.

According to the census, only those of one hundred percent white European ancestry are white. The Ku Klux Klan tends to agree.

For the first time on the census form, white people could include their varied multi-racial ancestry. Over 31 million whites did so. When we count these white people as white, the white population climbs to 71 percent. So, rather than “shrinking”, the white population has since 2010 increased by four million.

With a sensible definition of white, even by 2060, America remains around 70 percent white.

Professor Richard Alba, a sociologist at the City University of New York, says that definition means America remains a majority-white nation indefinitely — no doomsday clock, no murderous rampages, no primitive bloodsport over a faulty horoscope.

I’m no demographer but counting all white people as white people means there are more white people. I’m no sociologist either but revealing the increase in white people means fewer Robert Bowers keen to pump shotgun rounds into human flesh.

At Not the Bee, Joel Abbott is concerned about the obvious glee displayed by legacy media folks when they push the “replacement” story line:

You can practically feel the excitement here from the quivering fingers of the journalist that wrote this.

Ah.

Hmm.

Well, this is awkward.

See, I’m told this isn’t happening. White people aren’t being “replaced,” they’re just simply being phased out with a percentage that isn’t white at the same time all our institutions, companies, and leaders are pushing to eradicate things like “whiteness” and “white rage” in the name of equity.

For the record, I really couldn’t care less about the skin color of who lives in America. I care an infinite amount more about the spiritual worldview and values of my neighbor than whether their physical DNA gave them dark skin, blue eyes, or a big nose.

This is why I find it weird that the media keeps reporting, with abject glee, that people who look like me will be in the minority soon.

Russian 1895 Nagant Revolver

Forgotten Weapons

Published 20 May 2013

One of the mechanically interesting guns that is really widely available in the US for a great price (or was until very recently, it seems) is the Russian M1895 Nagant revolver. It was adopted by the Imperial Russian government in 1895 (replacing the Smith & Wesson No.3 as service revolver), and would serve all the way through World War II in the hands of the Red Army.

As with its other standard-issue arms, the Russian government intended to manufacture the M1895 revolvers domestically. However, when the Nagant was officially adopted the major Russian arsenals were already working at capacity to make the relatively new M1891 rifle, so the first 20,000 revolvers were made by Nagant in Liege, Belgium. In 1898 space had been freed up to start production at the Tula arsenal, where they would be made until 1945 (Ishevsk put the Nagant revolver into production as well during WWII).

The common version available in the US today is a 7-shot, double action revolver chambered for 7.62x38mm. That cartridge is a very long case with the bullet sunk down well below the case mouth. The cylinder of the Nagant cams forward upon firing, allowing the case mouth to protrude into the barrel and seal the cylinder gap, thus increasing muzzle velocity slightly. This also allows the Nagant to be used effectively with a suppressor, unlike almost all other revolvers (in which gas leaking from the cylinder gap defeats the purpose of a suppressor).

The Nagant’s 7.62x38mm cartridge pushes a 108 grain jacketed flat-nose projectile at approximately 850 fps (I believe a lighter 85-grain load was also used by the military later, but I haven’t fired any of it), which puts it roughly between .32 ACP and .32-20 ballistics. Not a hand cannon by any stretch, but fairly typical for the era (the 8mm Nambu and 8mm French revolver cartridges were both pretty similar in performance to the 7.62mm Nagant).

As far as being a shooter, the Nagant is mediocre, but reliable. The grip and sights are acceptable (but not great), and the cylinder loads and unloads one round at a time. The low pressure round doesn’t stick in the cylinder, at least. The worst part for a recreational shooter is the trigger, which is very heavy in double action. Single action is also heavy, but very crisp. Recoil is mild, and not uncomfortable at all. The design was simple and effective, and really a good fit for the Red Army and WWII fighting conditions.

QotD: The “Merry Pranksters”

Ken Kesey, graduating college in Oregon with several wrestling championships and a creative writing degree, made a classic mistake: he moved to the San Francisco Bay Area to find himself. He rented a house in Palo Alto (this was the 1950s, when normal people could have houses in Palo Alto) and settled down to write the Great American Novel.

To make ends meet, he got a job as an orderly at the local psych hospital. He also ran across some nice people called “MKULTRA” who offered him extra money to test chemicals for them. As time went by, he found himself more and more disillusioned with the hospital job, finding his employers clueless and abusive. But the MKULTRA job was going great! In particular, one of the chemicals, “LSD”, really helped get his creative juices flowing. He leveraged all of this into his Great American Novel, One Flew Over The Cuckoo’s Nest, and became rich and famous overnight.

He got his hands on some extra LSD and started distributing it among his social scene – a mix of writers, Stanford graduate students, and aimless upper-class twenty-somethings. They all agreed: something interesting was going on here. Word spread. 1960 San Francisco was already heavily enriched for creative people who would go on to shape intellectual history; Kesey’s friend group attracted the creme of this creme. Allen Ginsberg, Hunter S. Thompson, and Wavy Gravy passed through; so did Neal Cassady (“Dean Moriarty”), Jack Kerouac’s muse from On The Road. Kesey hired a local kid and his garage band to play music at his acid parties; thus began the career of Jerry Garcia and the Grateful Dead.

Sometime in the early 1960s, too slow to notice right away, they transitioned from “social circle” to “cult”. Kesey bought a compound in the redwood forests of the Santa Cruz Mountains, an hour’s drive from SF. Beatniks, proto-hippies, and other seekers – especially really attractive women – found their way there and didn’t leave. Kesey and his band, now calling themselves “the Merry Pranksters”, accepted all comers. They passed the days making psychedelic art (realistically: spraypainting redwood trees Day-Glo yellow), and the nights taking LSD in massive group therapy sessions that melted away psychic trauma and the chains of society and revealed the true selves buried beneath (realistically: sitting around in a circle while people said how they felt about each other).

What were Kesey’s teachings? Wrong question – what are anyone’s teachings? What were Jesus’ teachings? If you really want, you can look in the Bible and find some of them, but they’re not important. Any religion’s teachings, enumerated bloodlessly, sound like a laundry list of how many gods there are and what prayers to say. The Merry Pranksters were about Kesey, just like the Apostles were about Jesus. Something about him attracted them, drew them in, passed into them like electricity. When he spoke, you might or might not remember his words, but you remembered that it was important, that Something had passed from him to you, that your life had meaning now. Would you expect a group of several dozen drug-addled intellectuals in a compound in a redwood forest to have some kind of divisions or uncertainty? They didn’t. Whenever something threatened to come up, Kesey would say — the exact right thing — and then everyone would realize they had been wrong to cause trouble.

Scott Alexander, “Book Review: The Electric Kool-Aid Acid Test”, Slate Star Codex, 2019-07-23.

August 8, 2023

How the Battle of Amiens Influenced the “Stab in the Back” Myth

OTD Military History

Published 7 Aug 2023

The Battle of Amiens started on August 8 1918. It started the process that caused the final defeat of the German Army on the battlefield during World War 1. Many people falsely claimed that the German Army was not defeated on the battlefield but at home by groups that wished to see Germany fall. One person who helped to create this myth was German General Erich Ludendorff. He called August 8 “the black day of the German Army”.

See how this statement connects the stab in the back myth to Amiens and the National Socialists in Germany.


Holding the BBC to account for their climate change alarmism

Paul Homewood‘s updated accounting of the BBC’s coverage — and blatant falsehoods — of climate change news over the last twelve months:

The BBC’s coverage of climate change and related policy issues, such as energy policy, has long been of serious and widespread concern. There have been numerous instances of factual errors, bias and omission of alternative views to the BBC’s narrative. Our 2022 paper, Institutional Alarmism, provided many examples. Some led to formal complaints, later upheld by the BBC’s Executive Complaints Unit. However, many programmes and articles escaped such attention, though they were equally biased and misleading.

The purpose of this paper is to update that previous analysis with further instances of factual errors, misinformation, half truths, omissions and sheer bias. These either post-date the original report or were not included previously. However, the list is still by no means complete.

The case for the prosecution

The third most active hurricane season

In December 2021, BBC News reported that “The 2021 Atlantic hurricane season has now officially ended, and it’s been the third most active on record”. It was nothing of the sort. There were seven Atlantic hurricanes in 2021, and since 1851 there have been 32 years with a higher count. The article also made great play of the fact that all of the pre-determined names had been used up, implying that hurricanes are becoming more common. They failed to explain, however, that with satellite technology we are now able to spot hurricanes in mid-ocean that would have been missed before.

Hurricanes: are they getting more violent?

Shortly after Hurricane Ian in September 2022, a BBC “Reality Check” claimed that “Hurricanes are among the most violent storms on Earth and there’s evidence they’re getting more powerful”. The video offered absolutely no data or evidence to back up this claim, which contradicted the official agencies. For instance, the US National Oceanic and Atmospheric Administration (NOAA) state in their latest review:

There is no strong evidence of century-scale increasing trends in U.S. landfalling hurricanes or major hurricanes. Similarly for Atlantic basin-wide hurricane frequency (after adjusting for observing capabilities), there is not strong evidence for an increase since the late 1800s in hurricanes, major hurricanes, or the proportion of hurricanes that reach major hurricane intensity.

The IPCC came to a similar conclusion about hurricanes globally in their latest Assessment Review. However, the BBC article failed to mention any of this.

Up close with Royal Marines landing craft

Forces News

Published 12 Jul 2022

Specialists in small craft operations and amphibious warfare, 47 Commando (Raiding Group) Royal Marines are preparing for overseas training in the Netherlands.

Briohny Williams met up with the marines and found out more about their landing craft.


QotD: The British imperial educational “system”

The history of “education”, of the university system, whatever you want to call it, is long and complicated and fascinating, but not really germane. Like all human institutions, “educational” ones grew organically around what were originally very different foundations, the way coral reefs form around shipwrecks. Oversimplifying for clarity: back in the day, “schools” were supposed to handle education […] while universities were for training. That being the case, very few who attended universities emerged with degrees — a man got what training he needed for his future career, and unless that future career was “senior churchman”, the full Bachelor of Arts route was pretty much pointless.

(At the risk of straying too far afield, let’s briefly note that “senior churchman” was a common, indeed almost traditional, career path for the spare sons of the aristocracy. Well into the 18th century, every titled parent’s goal was “an heir and a spare”, with the heir destined for the title and castle and the spare earmarked for the church … but not, of course, as some humble parish priest. It was pretty common for bishops or abbots, and sometimes even cardinals, to be ordained on the day they took over their bishoprics. See, for example, Cesare Borgia. Meanwhile the illiterate, superstitious, brutish parish priest was a figure of satire throughout the Middle Ages and Renaissance. A guy like Thomas Wolsey was hated, in no small part, precisely because he was a commoner who leveraged his formal education into a senior church gig, taking a bunch of plum positions away from the aristocracy’s spare sons in the process).

That being the case — that schools were for education, universities for training — the fascinating spectacle of some 18 year old fop fresh out of Eton being sent to govern the Punjab makes a lot more sense. His character, formed by his education (in our sense), was considered sufficient; he’d pick up such technical training as he needed on the job … or employ trained technicians to do it for him. So too, of course, with the army, and the more you know about the British Army before the 20th century, the more you’re amazed that they managed to win anything, much less an empire — the heir’s spare’s spare traditionally went into the army, buying his commission outright, which meant that quite senior commands could, and often did, go to snotnosed teenagers who didn’t know their left flank from their right.

Alas, governments back in the days were severely under-bureaucratized, meaning that the aristocracy lacked sufficient spares to fill all the technician roles the heirs required in a rapidly urbanizing, globalizing world… which meant that talented commoners had to be employed to fill the gaps. See e.g. Wolsey, above. The problem with that, though, is that you can’t have some dirty-arsed commoner, however skilled, wiping his nose on his sleeve while in the presence of His Lordship, so universities took on a socializing function. And so (again, grossly oversimplifying for clarity) the “bachelor of arts” was born, meaning “a technician with the social savvy to work closely with his betters”. A good example is Thomas Hobbes, whose official job title in the Earl of Devonshire’s household was “tutor”, but whose function was basically “intellectual technician” — he was a kind of man-of-all-work for anything white collar …

At that point, if there had been a “system” of any kind, what the system’s designers should’ve done is set up finishing schools. The “universities” of Oxford, Cambridge, etc. are made up of various “colleges” anyway, each with their own rules and traditions and house colors and all that Harry Potter shit. Their Lordships should’ve gotten together and endowed another college for the sole purpose of knocking manners into ambitious commoners on the make (Wolsey might actually have had something like this in mind with Cardinal College … alas).

But they didn’t, and so the professors at the traditional colleges were forced into a role for which they were not designed, and unqualified. That tends to happen a lot — have you noticed? It actually happened to them twice, once when the need for technicians-with-manners became apparent, and then again when the realization dawned — as it did by the 1700s, if not earlier — that some subjects, like chemistry, require not just technicians and technician-trainers, but researchers. Hard to blame the “system” for this, since of course there is no “system”, but also because such a thing would be ruinously expensive.

Hence by the time an actual system came into being — in Prussia, around 1800 — the professors awkwardly inhabited the three roles we started with. The Professor of Chemistry, say, was supposed to conduct research while training technicians-with-manners. As with the pre-machinegun British army, the astounding thing is that they managed to pull it off at all, much less to such consistently high quality. They were real men back then …

Severian, “Education Reform”, Rotten Chestnuts, 2020-11-17.

August 7, 2023

Legacy media puzzled at falling levels of public trust in the scientific community

Given the way “the science” has been politicized over recent years and especially through the pandemic, it’s almost a surprise that there’s any residual public trust left for the scientific community:

Sagan’s warning was eerily prophetic. For the last three-plus years, we’ve witnessed a troubling rise of authoritarianism masquerading as science, which has resulted in a collapse in trust of public health.

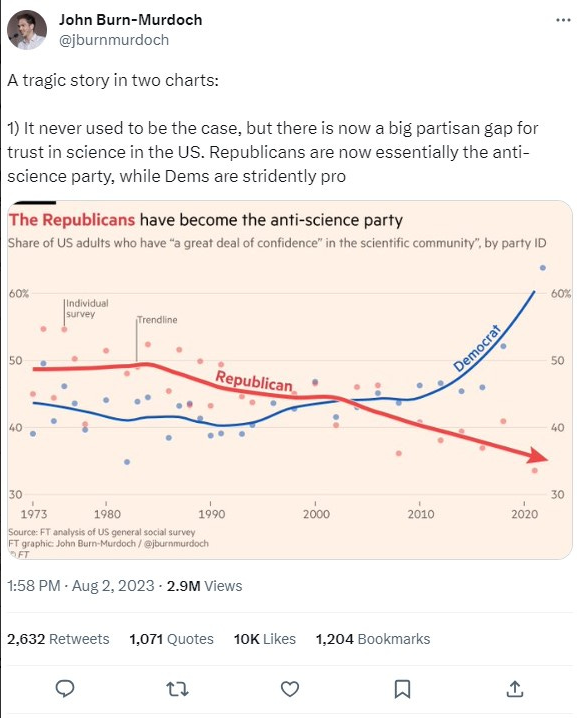

This collapse has been part of a broader and more partisan shift in Americans who say they have “a high degree of confidence in the scientific community”. Democrats, who had long had less confidence in the scientific community, are now far less skeptical. Republicans, who historically had much higher levels of trust in the scientific community, have experienced a collapse in trust in the scientific community.

John Burn-Murdoch, a data reporter at The Financial Times who shared the data in question on Twitter, said Republicans are now “essentially the anti-science party”.

First, this is a sloppy inference from a journalist. Burn-Murdoch’s poll isn’t asking respondents if they trust science. It’s asking if they trust the scientific community. There’s an enormous difference between the two, and the fact that a journalist doesn’t understand the difference between “confidence in science” and “confidence in the scientific community” is a little frightening.

Second, as Dr. Vinay Prasad pointed out, no party has a monopoly on science; but it’s clear that many of the policies the “pro science” party were advocating the last three years were not rooted in science.

“The ‘pro science’ party was pro school closure, masking a 26 month old child with a cloth mask, and mandating an mrna booster in a healthy college man who had COVID already,” tweeted Prasad, a physician at the University of California, San Francisco.

Today we can admit such policies were flawed, non-sensical or both, as were so many of the mitigations that were taken and mandated during the Covid-19 pandemic. But many forget that during the pandemic it was verboten to even question such policies.

People were banned, suspended, and censored by social media platforms at the behest of federal agencies. “The Science” had become a set of dogmas that could not be questioned. No less an authority than Dr. Fauci said that criticizing his policies was akin to “criticizing science, because I represent science”.

This could not be more wrong. Science can help us understand the natural world, but there are no “oughts” in science, the economist Ludwig von Mises pointed out, echoing the argument of philosopher David Hume.

“Science is competent to establish what is,” Mises wrote. “[Science] can never dictate what ought to be and what ends people should aim at.”

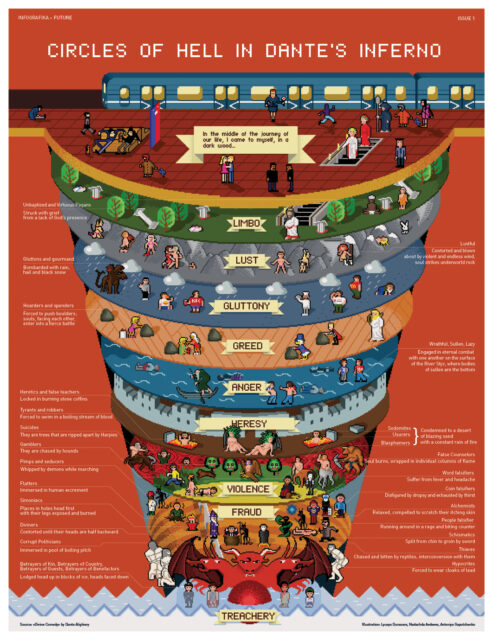

UN Secretary General updates Dante’s Inferno

Sean Walsh on the differences between the lowest level of Hell as described by Dante and the UN Secretary General’s modern characterization:

Source: Jerome, Dante’s Nine Circles of Hell, Daily Infographic, August 27, 2017, http://www.dailyinfographic.com/dantes-nine-circles-of-hell

“In the tide of time there have only been four absolutely fundamental physical theories: Newtonian mechanics; Clerk Maxwell’s theory of electromagnetism; Einstein’s theory of relativity, and quantum mechanics” – David Berlinski, The Deniable Darwin

In Dante’s Inferno, Hell is, counter-intuitively perhaps, freezing cold. In the 9th Circle the Devil is entrapped in a lake of ice. An imaginative inversion of what we normally take Hell to be.

Clearly the 14th century Italian poet didn’t get the memo from UN secretary general Antonio Guterres, for whom the Hell we currently suffer is boiling hot. Or if he did get it, perhaps he binned it. I wouldn’t blame him.

There is, of course, another difference between the two Hells: in Dante’s conception you know you’re in it; in Guterres’s diagnosis you need to be told you are. Some “Hell” that is.

I jest of course. Guterres’s claim is not that we are currently in Hell, more that we are on the road to it. And that the diesel-fuelled vehicle we are travelling in is called “complacency”: a stubborn and bewildering refusal on the part of you and me to recalibrate, or rather abandon, our lives in accordance with the instructions of “settled science”. An inexplicable refusal to genuflect at the altar of the Climate Change Sanhedrin.

You’ll notice that we have been here before. Restrictions imposed during the “Covid pandemic” were also justified on the grounds of an alleged scientific consensus. It’s tempting, perhaps even irresistible, to think that lockdown was the rehearsal and that incoming climate-related restrictions (and they are incoming) the main event. An amplification of the tyranny. A bit like when a thug tries his hand at assault before graduating to murder.

It’s easy to establish a consensus when the grown-ups are excluded from the discussion. And such a consensus is not really worth the candle. In fact, it is normally injurious to a genuine search for truth.

I refer you to the quotation at the top of the piece. For context: David Berlinski is a polymath who has taught philosophy, mathematics and English at universities including Columbia and Princeton. He’s the real deal and a genuine maverick whose genius is confirmed by the fact that he’s been sacked from every academic position he’s ever held.

The four foundational theories he references were in the main constructed by geniuses whose creativity was enabled precisely by their cultivated indifference to the “settled science” of the day.

The Longest Year in Human History (46 B.C.E.)

Historia Civilis

Published 24 Apr 2019


QotD: How do we determine Roman dates like “46 BC”?

So this is actually a really interesting question that we need to break into two parts: what do historians do with dates that are at least premised on the Roman calendar and then what do we do with dates that aren’t.

Now the Roman calendar is itself kind of a moving target, so we can start with a brief history of that. At some very early point the Romans seem to have had a calendar with ten months, with December as the last month, March as the first month and no January or February. That said while you will hear a lot of folk history crediting Julius Caesar with the creation of two extra months (July and August) that’s not right; those months (called Quintilis and Sextilis) were already on the calendar. By the time we can see the Roman calendar, it has twelve months of variable lengths (355 days total) with an “intercalary month” inserted every other year to “reset” the calendar to the seasons. That calendar, which still started in March (sitting where it does, seasonally, as it does for us), the Romans attributed to the legendary-probably-not-a-real-person King Numa, which means in any case even by the Middle Republic it was so old no one knew when it started (Plut. Numa 18; Liv 1.19.6-7). The shift from March to January as the first month in turn happens in 153 (Liv. Per. 47.13), probably for political reasons.

We still use this calendar (more or less) and that introduces some significant oddities in the reckoning of dates that are recorded by the Roman calendar. See, because the length of the year (355 days) did not match the length of a solar year (famously 365 days and change), the months “drifted” over the calendar a little bit; during the first century BC when things were so chaotic that intercalary months were missed, the days might drift a lot. This problem is what Julius Caesar fixed, creating a 365 day calendar in 46; to “reset” the year for his new calendar he then extended the year 46 to 445 days. And you might think, “my goodness, that means we’d have to convert every pre-45 BC date to figure out what it actually is, how do we do that?”

And the answer is: we don’t. Instead, all of the oddities of the Roman calendar remain baked into our calendar and the year 46 BC is still reckoned as being 445 days long and thus the longest ever year. Consequently earlier Roman dates are directly convertible into our calendar system, though if you care what season a day happened, you might need to do some calculating (but not usually because the drift isn’t usually extreme). But in expressing the date as a day, the fact that the Gregorian calendar does not retroactively change the days of the Julian calendar, which also did not retroactively change the days of the older Roman calendar means that no change is necessary.

Ok, but then what year is it? Well, the Romans counted years two ways. The more common way was to refer to consular years, “In the year of the consulship of X and Y.” Thus the Battle of Cannae happened, “in the year of the consulship of Varro and Paullus,” 216 BC. In the empire, you sometimes also see events referenced by the year of a given emperor. Conveniently for us, we can reconstruct a complete list of all of the consular years and we know all of the emperors, so back-converting a date rendered like this is fairly easy. More rarely, the Romans might date with an absolute chronology, ab urbe condita (AUC) – “from the founding of the city”, which they imagined to have happened in 753 BC. Since we know that date, this also is a fairly easy conversion.
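The AUC conversion mentioned above can be sketched in a few lines. This is only an illustration: the function name `auc_to_bc_ad` is made up for the example, it assumes the traditional founding year 753 BC is counted as AUC 1 and that there is no year zero, and it ignores where in the year the Roman new year fell.

```python
def auc_to_bc_ad(auc_year: int) -> str:
    """Convert an ab urbe condita year to a BC/AD label.

    Assumption: the traditional founding year, 753 BC, is AUC 1,
    and the count runs straight from 1 BC to AD 1 with no year zero.
    """
    if auc_year < 1:
        raise ValueError("AUC years start at 1")
    if auc_year <= 753:
        # AUC 1 -> 753 BC, AUC 753 -> 1 BC
        return f"{754 - auc_year} BC"
    # AUC 754 -> AD 1
    return f"AD {auc_year - 753}"


print(auc_to_bc_ad(1))    # 753 BC, the founding year
print(auc_to_bc_ad(538))  # 216 BC, the year of Cannae
print(auc_to_bc_ad(708))  # 46 BC, Caesar's 445-day year
```

The subtraction from 754 rather than 753 is the only subtle point: because the founding year itself is year 1, the offset is one larger than the founding date.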

Non-Roman dates get harder. The Greeks tend to date things either by serving magistrates (especially the Athenian “eponymous archon”, because we have so many Athenian authors) or by Olympiads. Olympiad dates are not too bad; it’s a four-year cycle starting in 776 BC, so we are now in the 700th Olympiad. Archon dates are tougher for two reasons. First, unlike Roman consuls, we have only a mostly complete list of Athenian archons, with some significant gaps. Second, both dates suffer from the complication that they do not line up neatly with the start of the Roman year. Olympiads begin and end in midsummer and archon years ran from July to June. If we have a day, or even a month attached to one of these dates, converting to a modern Gregorian calendar date isn’t too bad. But if, as is often the case, all you have is a year, it gets tricky; an event taking place “in the Archonship of Cleocritus” (with no further elaboration) could have happened in 413 or 412. Consequently, you’ll see the date (if there is no month or season indicator that lets us narrow it down), written as 413/2 – that doesn’t mean “in the year two-hundred and six and a half” but rather “413 OR 412”.
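The Olympiad arithmetic and the split-year notation can be sketched the same way. The following is a rough illustration, not a scholarly tool: all three function names are invented for the example, it takes the traditional 776 BC as the start of the first Olympiad, it treats every Olympiad year as running from one midsummer to the next, and the `413/2` formatting rule (append the last digit of the following year) is an assumption generalised from the single example in the text.

```python
FIRST_OLYMPIAD_BC = 776  # traditional start of the first Olympiad (assumed)


def olympiad_year_to_bc(olympiad: int, year_in_cycle: int) -> tuple[int, int]:
    """Return the two BC years that one midsummer-to-midsummer Olympiad year spans."""
    if olympiad < 1 or not 1 <= year_in_cycle <= 4:
        raise ValueError("need olympiad >= 1 and year_in_cycle in 1..4")
    start_bc = FIRST_OLYMPIAD_BC - 4 * (olympiad - 1) - (year_in_cycle - 1)
    if start_bc < 2:
        raise ValueError("this sketch only handles years wholly in BC")
    # The year begins in midsummer of start_bc and ends in midsummer of the next year.
    return start_bc, start_bc - 1


def split_year(start_bc: int) -> str:
    """Format a year that straddles two BC years, e.g. 413 -> '413/2'."""
    return f"{start_bc}/{(start_bc - 1) % 10}"


def olympiad_for_ce_summer(ce_year: int) -> int:
    """Olympiad number for the Olympiad year beginning in midsummer of a CE year."""
    # No year zero: 776 BC to AD 1 is 776 years, so AD y is y + 775 years on.
    elapsed = ce_year + FIRST_OLYMPIAD_BC - 1
    return elapsed // 4 + 1


print(olympiad_year_to_bc(91, 4))    # (413, 412)
print(split_year(413))               # 413/2
print(olympiad_for_ce_summer(2023))  # 700
```

An event dated only to the fourth year of the 91st Olympiad therefore lands in either 413 or 412 BC, which is exactly the ambiguity the slash notation records.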

That said, with a complete list of emperors, consuls and Olympiads, along with a nearly complete list of archons, keeping the system together is relatively easy. Things get sticky fast when moving to societies using regnal years for which we do not have complete or reliable king’s lists. So for instance there are a range of potential chronologies for the Middle Bronze Age in Mesopotamia. I have no great expertise in how these chronologies are calculated; I was taught with the “Middle” chronology as the consensus position and so I use that and aim just to be consistent. Bronze Age Egyptian chronology has similar disputes, but with a lot less variation in potential dates. Unfortunately while obviously I have to be aware of these chronology disputes, I don’t really have the expertise to explain them – we’d have to get an Egyptologist or Assyriologist (for odd path-dependent reasons, scholars that study ancient Mesopotamia, including places and cultures that were not Assyria-proper are still called Assyriologists, although to be fair the whole region (including Egypt!) was all Assyria at one point) to write a guest post to untangle all of that.

That said in most cases all of this work has largely been done and so it is a relatively rare occurrence that I need to actually back convert a date myself. It does happen sometimes, mostly when I’m moving through Livy and have lost track of what year it is and need to get a date, in which case I generally page back to find the last set of consular elections and then check the list of consuls to determine the date.

Bret Devereaux, “Referenda ad Senatum: January 13, 2023: Roman Traditionalism, Ancient Dates and Imperial Spies”, A Collection of Unmitigated Pedantry, 2023-01-13.

August 6, 2023

The Warsaw Uprising Begins! – WW2 – Week 258 – August 5, 1944

World War Two

Published 5 Aug 2023

As the Red Army closes in on Warsaw, the Polish Home Army in the city rises up against the German forces. Up in the north the Red Army takes Kaunas. The Allies take Florence in Italy this week, well, half of it, and in France break out of Normandy and into Brittany. The Allies also finally take Myitkyina in Burma after many weeks of siege, and in the Marianas take Tinian and nearly finish taking Guam. And in Finland the President resigns, which could have serious implications for Finland remaining in the war.
