A lunar eclipse occurs when the Earth gets between the Moon and the Sun.

A solar eclipse occurs when the Moon gets between the Earth and the Sun.

A terrestrial eclipse occurs when the Earth gets between you and the Sun. Happens once per 24 hours.

An atmospheric eclipse occurs when an asteroid gets between you and the sky. Generally fatal.

A reverse solar eclipse occurs when the Sun gets between the Moon and the Earth. Extremely fatal.

A motivational eclipse occurs when the Moon gets between you and your goals. You can’t let it stop you! Destroy it! Destroy the Moon!

A marital eclipse occurs when the Moon gets between you and your spouse. You’re going to need to practice good communication about the new celestial body in your life if you want your relationship to survive.

A capillary eclipse occurs when your hair gets between your eyes and the Sun. Get a haircut.

A lexicographic eclipse occurs when “Moon” gets between “Earth” and “Sun” in the dictionary. All Anglophone countries are in perpetual lexicographic eclipse.

A filioque eclipse occurs when the Holy Spirit gets between the Father and the Son.

An apoc eclipse occurs when the Great Beast 666, with seven heads and ten horns, and upon the horns ten crowns, and upon its heads the name of blasphemy, gets between the Earth and the Sun. Extremely fatal.

Scott Alexander, “Little Known Types of Eclipse”, Slate Star Codex, 2019-05-02.

December 16, 2022

QotD: Little-known types of eclipse

November 16, 2022

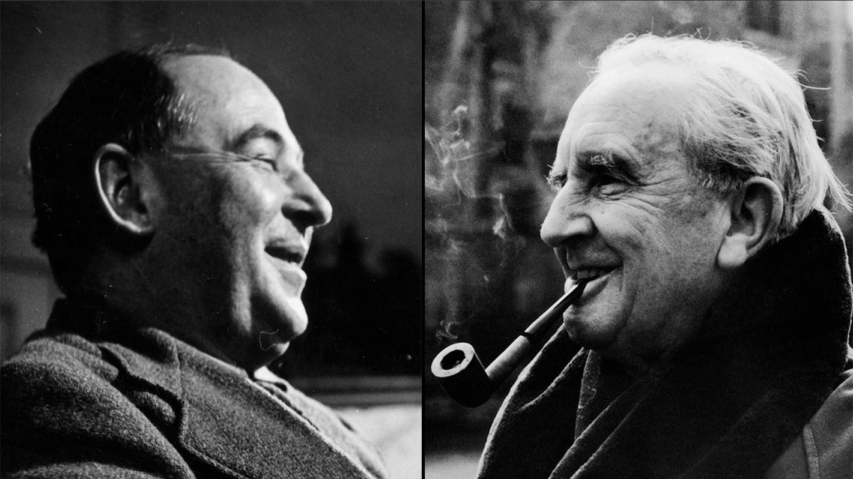

C.S. Lewis and J.R.R. Tolkien … arch-dystopians?

In The Upheaval, N.S. Lyons considers the literary warnings of well-known dystopian writers like Aldous Huxley and George Orwell, but makes the strong case that C.S. Lewis and J.R.R. Tolkien were even more prescient in the warnings their works contain:

Which dystopian writer saw it all coming? Of all the famous authors of the 20th century who crafted worlds meant as warnings, who has proved most prophetic about the afflictions of the 21st? George Orwell? Aldous Huxley? Kurt Vonnegut? Ray Bradbury? Each of these, among others, have proved far too disturbingly prescient about many aspects of our present, as far as I’m concerned. But it could be that none of them were quite as far-sighted as the fairytale spinners.

C.S. Lewis and J.R.R. Tolkien, fast friends and fellow members of the Inklings – the famous club of pioneering fantasy writers at Oxford in the 1930s and 40s – are not typically thought of as “dystopian” authors. They certainly never claimed the title. After all, they wrote tales of fantastical adventure, heroism, and mythology that have delighted children and adults ever since, not prophecies of boots stamping on human faces forever. And yet, their stories and non-fiction essays contain warnings that might have struck more surely to the heart of our emerging 21st century dystopia than any other.

The disenchantment and demoralization of a world produced by the foolishly blinkered “debunkers” of the intelligentsia; the catastrophic corruption of genuine education; the inevitable collapse of dominating ideologies of pure materialist rationalism and progress into pure subjectivity and nihilism; the inherent connection between the loss of any objective value and the emergence of a perverse techno-state obsessively seeking first total control over humanity and then in the end the final abolition of humanity itself … Tolkien and Lewis foresaw all of the darkest winds that now gather in growing intensity today.

But ultimately the shared strength of both authors may have also been something even more straightforward: a willingness to speak plainly and openly about the existence and nature of evil. Mankind, they saw, could not resist opening the door to the dark, even with the best of intentions. And so they offered up a way to resist it.

Subjectivism’s Insidious Seeds

The practical result of education in the spirit of The Green Book must be the destruction of the society which accepts it.

When Lewis delivered this line in a series of February 1943 lectures that would later be published as his short book The Abolition of Man, it must have sounded rather ridiculous. Britain was literally in a war for its survival, its cities being bombed and its soldiers killed in a great struggle with Hitler’s Germany, and Lewis was trying to sound the air-raid siren over an education textbook.

But Lewis was urgent about the danger coming down the road, a menace he saw as just as threatening as Nazism, and in fact deeply intertwined with it, given that:

The process which, if not checked, will abolish Man goes on apace among Communists and Democrats no less than among Fascists. The methods may (at first) differ in brutality. But many a mild-eyed scientist in pince-nez, many a popular dramatist, many an amateur philosopher in our midst, means in the long run just the same as the Nazi rulers of Germany. Traditional values are to be “debunked” and mankind to be cut into some fresh shape at the will (which must, by hypothesis, be an arbitrary will) of some few lucky people …

Unfortunately, as Lewis would later lament, Abolition was “almost totally ignored by the public” at the time. But now that our society seems to be truly well along in the process of self-destruction kicked off by “education in the spirit of The Green Book”, it might be about time we all grasped what he was trying to warn us about.

This “Green Book” that Lewis viewed as such a symbol of menace was his polite pseudonym for a fashionable contemporary English textbook actually titled The Control of Language. This textbook was itself a popularization for children of the trendy new post-modern philosophy of Logical Positivism, as advanced in another book, I.A. Richards’ Principles of Literary Criticism. Logical Positivism saw itself as championing purely objective scientific knowledge, and was determined to prove that all metaphysical priors were not only false but wholly meaningless. In truth, however, it was as Lewis quickly realized actually a philosophy of pure subjectivism – and thus, as we shall see, a sure path straight out into “the complete void”.

In Abolition, Lewis zeros in on one seemingly innocuous passage in The Control of Language to begin illustrating this point. It relates a story told by the English poet Samuel Taylor Coleridge, in which two tourists visit a majestic waterfall. Gazing upon it, one calls it “sublime”. The other says, “Yes, it is pretty.” Coleridge is disgusted by the latter. But, as Lewis recounts, of this story the authors of the textbook merely conclude:

When the man said This is sublime, he appeared to be making a remark about the waterfall … Actually … he was not making a remark about the waterfall, but a remark about his own feelings. What he was saying was really I have feelings associated in my mind with the word “sublime”, or shortly, I have sublime feelings … This confusion is continually present in language as we use it. We appear to be saying something very important about something: and actually we are only saying something about our own feelings.

For Lewis, this “momentous little paragraph” contains all the seeds necessary for the destruction of humanity.

November 3, 2022

QotD: Why reading the news became less informative and more didactic

One of the small, pervasive changes that makes news stories seem both patronizing and politicized is the increasingly common practice of inserting judgmental adjectives into otherwise descriptive sentences. Telling readers that a statement is “false” while repeating it may be justified, if intrusive, but in other cases it’s an unnecessary tic.

Gone is the assumption that readers are intelligent people who can draw their own conclusions from a compelling presentation of the facts. Journalists now seem to live in fear that their readers won’t think correctly. Take this sentence from an interesting article on the evolution of American Sign Language: “For a portion of the 20th century, many schools for the deaf were more inclined to try to teach their students spoken English, rather than ASL, based on harmful beliefs that signing was inferior to spoken language.” (Emphasis added.)

If you read the article, you are highly unlikely to come to the conclusion that signing is anything less than a full-blown language, not inferior to spoken English. But the article never gives evidence that this incorrect 20th-century belief was harmful. It doesn’t discuss the pluses and minuses of signing, or why one belief was succeeded by another. That’s a different story. In the context of this story, the adjective is unnecessary, distracting, and insulting to the reader’s intelligence.

Virginia Postrel, “Shrinkflation, Disqualiflation, and Depression and more”, Virginia’s Newsletter, 2022-07-28.

October 11, 2022

Quebec politics explained (in Quebec!)

J.J. McCullough

Published 9 Oct 2022

Politics in Canada’s French province. Thanks to Bespoke Post for sponsoring this video! New subscribers get 20% off their first box — go to https://www.bespokepost.com/jj20 and enter code JJ20 at checkout.

My election watching buddy Sisyphus55: https://www.youtube.com/c/Sisyphus55

September 15, 2022

“Presentism is … a disease, a contagion here in America as infectious as the Wuhan flu”

Jeff Minick on the mental attitude that animates so many progressives:

My online dictionary defines presentism as “uncritical adherence to present-day attitudes, especially the tendency to interpret past events in terms of modern values and concepts”. To my surprise, the 40-year-old dictionary on my shelf also contains this eyesore of a word and definition.

To be present, of course, is generally considered a virtue. It can mean everything from giving ourselves to the job at hand — no one wants a surgeon dreaming of his upcoming vacation to St. Croix while he’s cracking open your chest — to consoling a grieving friend.

But presentism is altogether different. It’s a disease, a contagion here in America as infectious as the Wuhan flu. The latter spreads by way of a virus, the former through ignorance and puffed-up pride.

Presentism is what inspires the afflicted to tear down the statues of such Americans as Washington, Jefferson, and Robert E. Lee for owning slaves without ever once asking why this was so or seeking to discover what these men thought of slavery. Presentism is why the “Little House Books” and some of the early stories by Dr. Seuss are attacked or banned entirely.

Presentism is the reason so many young people can name the Kardashians but can’t tell you the importance of Abraham Lincoln or why we fought in World War II.

Presentism accounts in large measure for our Mount Everest of debt and inflation. Those overseeing our nation’s finances have refused to listen to warnings from the past, even the recent past, about the clear dangers of a government creating trillions of dollars out of the air.

Presentism has led America into overseas adventures that have invariably come to a bad end. Afghanistan, for example, has long been known as the graveyard of empires, a cemetery which includes the tombstones of British and Russian ambitions. By our refusal to heed the lessons of that history and our botched withdrawal from Kabul, we dug our own grave alongside them.

H/T to Kim du Toit for the link.

September 6, 2022

Fixing the American education system (other than burning it to the ground and starting over)

At First Things, M.D. Aeschliman reviews The Knowledge Gap: The Hidden Cause of America’s Broken Education System — and How to Fix It by Natalie Wexler:

E.D. Hirsch Jr., distinguished scholar of comparative literature, is the most important advocate for K–12 education reform of the past seventy-five years. Natalie Wexler’s recent book The Knowledge Gap is a helpful examination of Hirsch’s critical analyses and intellectual framework, as well as the elementary school curriculum that he designed — Core Knowledge.

One of Hirsch’s key focal points is the vapid, supposedly “developmentally appropriate” fictions that dominate language arts curricula in elementary schools — mind-numbingly banal stories with single-syllable vocabularies and large pictures. These silly literary fictions and fantasies have helped “dumb down” a hundred years of American students by eliminating or forbidding any substantial reading of expository prose about history and science in the first eight grades. A poignant narrative well worth reading is Harold Henderson’s Let’s Kill Dick and Jane, which details a noble but ultimately losing fight waged by a family firm from 1962 to 1996 against the big textbook publishers.

After a teaching career of fifty years, I agree with Hirsch that the primary problem in American public education is not the high schools, but the poorly organized, ineffective elementary school curricula, including the idiotic books of childish fiction. As Wexler writes, the governing “approach to reading instruction … leaves … many students unprepared to tackle high-school-level work”. Pity the poor high school teachers.

A hundred years ago John Dewey and his lieutenants from Columbia Teachers College, especially William H. Kilpatrick, started dismantling academic “subjects” in favor of “the project method”. They also worked to redefine history as “social studies”, a degenerative development that has continued without cease in our K–12 schools, leading to ludicrous presentism. Dewey and the progressives also attacked traditional language classes — especially phonics but also Latin — opening the way for “naturalistic” literacy instruction that has proved to be ineffective. Yet it should be obvious that students must “learn to read” well early on so as to “read to learn” for high school and college and the rest of their lives. And what they read early on is important.

The “progressive” educational assault on traditional American education had another source, which might be called “soft utopianism”. Twenty-five years ago Hirsch was already writing powerfully — in The Schools We Need and Why We Don’t Have Them — about this romantic-progressive “soft utopianism” and how it conflicted with what is wisest and best in the thinking, writings, and achievements of the founding fathers and their early republic. Yet he also knew — as himself a repentant progressive “mugged by reality” — that in the nineteenth century the republican educational legacy was already under intellectual assault by Rousseau’s American disciples Emerson, Thoreau, and Walt Whitman. Whitman’s egalitarian naturalism was one of Dewey’s greatest inspirations by the early twentieth century.

The progressives particularly dislike history, and our current “Great Awakening” indicates this. A few years ago, the former superintendent of the school system of one of our most “liberal” states said to me in private conversation that “the progressives hate history and won’t tolerate it in the curriculum”. They hate it because any thinking about history requires ethical assumptions and qualitative judgments: What in the past is worth studying? How do we structure our narratives? How do we fairly evaluate historical personalities and events? Which ones were virtuous and beneficial? These and related questions require some standard of justice and the idea that most individuals — in the past and present — have some degree of free will and some disposition to ethics: the “self-evident truths” of our founding document, and of civilization itself.

August 30, 2022

The plasticity of language, slippery definitions, and the ongoing gender wars

In The Line, Allan Stratton considers some of the reasons for misunderstanding, argument, and anger in the suddenly huge gender wars in western culture:

Two people at EuroPride 2019 in Vienna holding an LGBTQ+ pride rainbow flag featuring a design by Daniel Quasar; this variation of the rainbow flag was initially promoted as “Progress” a PRIDE Flag Reboot.

Photo by Bojan Cvetanović via Wikimedia Commons.

From my perspective, much of the controversy stems from academic redefinitions of language and concepts over the past 60 years. As these changes affected a small subculture, mainstream society paid them no mind. But language has consequences.

I’m a gay man in his early seventies, who’s paid close attention to the decades of linguistic manipulations that have turned sense into nonsense. Once, words and concepts had clear understandings that helped to create widespread support for LGBT rights. More recently, they have been conflated and inverted, and threaten to negatively affect the rights of women, the safety of gender-nonconforming children, and the lives of gays, lesbians, and transexuals.

A quick primer on the change in key terms may help to clarify our current mess and suggest a way forward:

Today the trans umbrella is understood to be a single movement within the Alphabet alliance, but in 1960s North America, it referred to three specific groups: self-identified transsexuals, transvestites and transgenders. There was some overlap, but none of the three were specifically attached to the fight for gay rights at all.

Transsexuals gained public prominence thanks to American Christine Jorgensen. After serving in the United States Army, Jorgensen had a sex change operation in Denmark before returning to America in 1953. She never identified as homosexual, but, rather, said she had been born in the wrong body. Jorgensen was extraordinarily popular. I urge you to watch these two interviews, one from the ’60s and the other from the ’80s. Her wit, charm, self-assurance and intelligence demonstrate the power of persuasion, especially notable at a time far less tolerant than our own.

Transvestites (a term now considered derogatory) dressed and used the pronouns of the opposite sex, but fully acknowledged the material reality of their biology. Some were gay like the legendary Sylvia Rivera and Marsha P. Johnson, who co-founded Street Transvestite Action Revolutionaries. Most, however, were straight men like Virginia Prince, who published Transvestia Magazine, founded the Society for the Second Self, and published the classic How to Be a Woman Though Male. They distanced themselves from the gay community, fearing the association hurt their image. “True transvestites,” Prince assured, “are exclusively heterosexual … The transvestite values his male organs, enjoys using them and does not desire them removed.”

The term transgender, coined by psychiatrist John Oliven in 1965, was designed to distinguish transsexuals, who wanted to surgically change sex, from transvestites, whose inclinations were limited to gendered feelings and presentation. But its definition soon morphed to ungainly proportions. By the ’90s, trans academic Susan Stryker had re-re-re-defined it as (deep breath) “all identities or practices that cross over, cut across, move between, or otherwise queer socially constructed sex/gender boundaries (including, but not limited to) transsexuality, heterosexual transvestism, gay drag, butch lesbianism, and such non-European identities as the Native American berdache (now 2 Spirit) or the Indian Hijra.”

It’s key to remember that, at this time, trans people typically considered themselves the opposite sex spiritually and socially, but not literally: To repeat, trans women like Virginia Prince insisted they were straight male heterosexuals, and would have been outraged at the suggestion that they were lesbians. As a result, women’s rights were never infringed. No one insisted that “sexual attraction” and “biologically sexed bodies” be defined out of existence. Nor were “tomboys” and “sissies” expected to seek gender clinics or consider puberty blockers, cross-sex hormones and surgery.

Under those circumstances, trans people gradually gained public support for human and civil rights protections. It’s easy to empathize with the distress of feeling trapped in the wrong body, and the horror of wanting to claw one’s way out. And how can a live-and-let-live world justify discrimination against people for simply wanting to imagine and present themselves as they wish? Progress, though imperfect and incomplete, was real.

But, as we have seen practically every day in the last few years, for true Progressives, mere “progress” isn’t enough and there are no waypoints on the road to Utopia…

August 27, 2022

The hallmark of modern government is the institutionalization of corruption

In the New English Review, Theodore Dalrymple identifies one of the unifying trends of governments throughout the western world:

One of the most remarkable developments of recent years has been the legalization — dare I say, the institutionalization? — of corruption. This is not a matter of money passing under the table, or of bribery, though this no doubt goes on as it always has. It is far, far worse than that. Where corruption is illegal, there is at least some hope of controlling or limiting it, though of course there is no final victory over it; not, at least, until human nature changes.

The corruption of which I speak has a financial aspect, but only indirectly. It is principally moral and intellectual in nature. It is the means by which an apparatchik class and its nomenklatura of mediocrities achieve prominence and even control in society. I confess that I do not see a ready means of reversing the trend.

I happened to read the other day an article in the Times Higher Educational Supplement titled “Can army of new managers help HE [Higher Education] tackle big social challenges?” The article is subtitled “Spate of new senior roles created as universities seek answers on addressing sustainability, diversity and social responsibility.” One’s heart sinks: The old Pravda must have made for better reading than this.

As the article makes clear, though perhaps without intending to, the key to success in this brave new world of commissars, whose job is to draw a fat salary while enforcing a fatuous ideology, is mastery of a certain kind of verbiage couched in generalities that it would be too generous to call abstractions. This language nevertheless manages to convey menace. It is difficult, of course, to dissent from what is so imprecisely asserted, but one knows instinctively that any expressed reservations will be treated as a manifestation of something much worse than mere disease, something in fact akin to membership in the Ku Klux Klan.

It is obvious that the desiderata of the new class are not faith, hope, and charity, but power, salary, and pension; and of these, the greatest is the last. It is not unprecedented, of course, that the desire for personal advancement should be hidden behind a smoke screen of supposed public benefit, but rarely has it been so brazen. The human mind, however, is a complex instrument, and sometimes smoke screens remain hidden even from those who raise them. People who have been fed a mental diet of psychology, sociology, and so forth are peculiarly inapt for self-examination, and hence are especially liable to self-deception. It must be admitted, therefore, that it is perfectly possible that the apparatchik-commissar-nomenklatura class genuinely believes itself to be doing, if not God’s work exactly, at least that of progress, in the sense employed in self-congratulatory fashion by those who call themselves progressives. For it, however, there is certainly one sense in which the direction of progress has a tangible meaning: up the career ladder.

August 19, 2022

Why Quebec rejected the American Revolution

Conrad Black outlines the journey of the French colony of New France through the British conquest to the (amazing to the Americans) decision to stay under British control rather than join the breakaway American colonies in 1776:

Civil rights were not a burning issue when Canada was primarily the French colony of New France. The purpose of New France was entirely commercial and essentially based upon the fur trade until Jean Talon created industries that made New France self-sufficient. And to raise the population he imported 1,000 nubile young French women, and today approximately seven million French Canadians and Franco-Americans are descended from them. Only at this point, about 75 years after it was founded, did New France develop a rudimentary legal and judicial framework.

Eighty years later, when the British captured Québec City and Montréal in the Seven Years’ War, a gentle form of British military rule ensued. A small English-speaking population arose, chiefly composed of commercial sharpers from the American colonies claiming to be performing a useful service but, in fact, exploiting the French Canadians. Colonel James Murray became the first English civil governor of Québec in 1764. A Royal proclamation had foreseen an assembly to govern Québec, but this was complicated by the fact that at the time British law excluded any Roman Catholic from voting for or being a member of any such assembly, and accordingly the approximately 500 English-speaking merchants in Québec demanded an assembly since they would be the sole members of it. Murray liked the French Canadians and despised the American interlopers as scoundrels. He wrote: “In general they are the most immoral collection of men I ever knew.” He described the French of Québec as: “a frugal, industrious, moral race of men who (greatly appreciate) the mild treatment they have received from the King’s officers”. Instead of facilitating creation of an assembly that would just be a group of émigré New England hustlers and plunderers, Murray created a governor’s council which functioned as a sort of legislature and packed it with his supporters, and sympathizers of the French Canadians.

The greedy American merchants of Montréal and Québec had enough influence with the board of trade in London, a cabinet office, to have Murray recalled in 1766 for his pro-French attitudes. He was a victim of his support for the civil rights of his subjects, but was replaced by a like-minded governor, the very talented Sir Guy Carleton, [later he became] Lord Dorchester. Murray and Carleton had both been close comrades of General Wolfe. […]

The British had doubled their national debt in the Seven Years’ War and the largest expenses were incurred in expelling the French from Canada at the urgent request of the principal American agent in London, Benjamin Franklin. As the Americans were the most prosperous of all British citizens, the British naturally thought it appropriate that the Americans should pay the Stamp Tax that their British cousins were already paying. The French Canadians had no objection to the Stamp Tax, even though it paid for the expulsion of France from Canada.

As Murray and Carleton foresaw, the British were not able to collect that tax from the Americans; British soldiers would be little motivated to fight their American kinfolk, and now that the Americans didn’t have a neighboring French presence to worry them, they could certainly be tempted to revolt and would be very hard to suppress. As Murray and Carleton also foresaw, the only chance the British would have of retaining Canada and preventing the French Canadians from rallying to the Americans would be if the British crown became symbolic in the mind of French Canada with the survival of the French language and culture and religion. Carleton concluded that to retain Québec’s loyalty, Britain would have to make itself the protector of the culture, the religion, and also the civil law of the French Canadians. From what little they had seen of it, the French Canadians much preferred the British to the French criminal law. In pre-revolutionary France there was no doctrine of habeas corpus and the authorities routinely tortured suspects.

In a historically very significant act, Carleton effectively wrote up the assurances that he thought would be necessary to retain the loyalty of the colony. He wanted to recruit French-speaking officials from among the colonists to give them as much self-government as possible while judiciously feeding the population a worrisome specter of assimilation at the hands of a tidal wave of American officials and commercial hustlers in the event of an American takeover of Canada.

After four years of lobbying non-stop in London, Carleton gained adoption of the Québec Act, which contained the guaranties he thought necessary to satisfy French Canada. He returned to a grateful Québec in 1774. The knotty issue of an assembly, which Québec had never had and was not clamoring for, was ducked, and authority was vested in a governor with an executive and legislative Council of 17 to 23 members chosen by the governor.

Conveniently, the liberality accorded the Roman Catholic Church was furiously attacked by the Americans who in their revolutionary Continental Congress reviled it as “a bloodthirsty, idolatrous, and hypocritical creed … a religion which flooded England with blood, and spread hypocrisy, murder, persecution, and revolt into all parts of the world”. The American revolutionaries produced a bombastic summary of what the French-Canadians ought to do and told them that Americans were grievously moved by their degradation, but warned them that if they did not rally to the American colours they would be henceforth regarded as “inveterate enemies”. This incendiary polemic was translated, printed, and posted throughout the former New France, by the Catholic Church and the British government, acting together. The clergy of the province almost unanimously condemned the American agitation as xenophobic and sectarian incitements to hate and needless bloodshed.

Carleton astounded the French-Canadians, who were accustomed to the graft and embezzlement of French governors, by not taking any payment for his service as governor. It was entirely because of the enlightened policy of Murray and Carleton and Carleton’s skill and persistence as a lobbyist in the corridors of Westminster, that the civil and cultural rights of the great majority of Canadians 250 years ago were conserved. The Americans when they did proclaim the revolution in 1775 and officially in the Declaration of Independence on July 4, 1776, made the British position in Canada somewhat easier by their virulent hostility to Catholicism, and to the French generally.

August 10, 2022

“Every nation is divided, and thrives on division. But France illustrates the rule rather too well.”

Ed West on the historical divisions of the many regions in what we now know as “France”:

France is gigantic, like a continent in itself, and the most visited country on earth. It is four times the size of England and until the 17th century had a population four to five times as big (today it is 67 million v 56 million). Yet “France” until relatively recently extended not much further than Paris, in the area under the king’s direct control called the Île-de-France — beyond that, regional identity was distinctive and dialect predominated.

As Robb writes, at the time of the Revolution just 11 per cent of the population, or 3 million people, spoke “French”; by 1880 still only one in five could communicate in the national language. Even with decades of centralisation, today there are still 55 distinct dialects in France; most are Romance, but the country is also home to Flemish, Alsatian, Breton and Basque-speaking communities. (Tintin has been translated into at least a dozen French dialects.) No regional identity in England, except the north-east, is as distinctive.

Many famous French historical figures wouldn’t have even understood “French”, among them St Bernadette, living in the then obscure village of Lourdes. She described the figure she saw as un petito damisela (or in French une petite demoiselle), the name for the local forest fairies in the Pyrenees. The Demoiselles dressed in white, lived in caves and grottos and were associated with water. They were also seen as being on the side of the poor, Robb points out, because here as is often the case there was a political underside to this folk belief. Indeed, a peasant conflict with the authorities from the 1830s to the 1870s had been called The War of the Demoiselles. But then conflict with the authorities — with Paris — almost defines French history.

If you like Robb’s work, you’ll also enjoy Fernand Braudel’s The Identity of France, published in 1986 and supposed to be part one of a series by the great 20th century historian. Unfortunately, Braudel was already dead by the time part one was published, and so the series was never finished.

Braudel loved his country and believed in a deep and abiding Frenchness, yet he was also fascinated by its divisions, the various different pays — from Gallo-Roman pagus — which translates as land, although it can mean either country or region. Within this, dialects can be very varied: Gascon is “quite distinct” to Languedocien and Provençal, he wrote, but in Gascony “two completely different patois” were spoken. Near to Salins the language spoken in each village “varies to the point of being unrecognisable” and “what is more extraordinary” the town “being almost half a league in length, is divided by language and even customs, into two distinct halves”.

France’s regional identity is defined by language, food — the division between butter and olive, wine and cider — and even roof tiles. Braudel was essentially a geographic determinist and, citing Sartre’s line that France was “non-unifiable”, the author lamented that: “Every nation is divided, and thrives on division. But France illustrates the rule rather too well.”

To illustrate the rivalry, compare the words of two 19th-century historians: Jean-Bernard Mary-Lafon, who contrasted the “refined and freedom-loving” southerners with “brute barbarism” of “knights from across the Loire”, violent, fanatical and pillaging. And Ernest Renan, who wrote in 1872: “I may be mistaken, but there is a view derived from historical ethnography which seems more and more convincing to my mind. The similarity between England and northern France appears increasingly clear to me every day. Our foolishness comes from the south, and if France had not drawn Languedoc and Provence into her sphere of activity, we should be a serious, active, Protestant and parliamentary people.”

He was surely mistaken, for it’s the south which is more Protestant and the north more Catholic. Just like in England, where regional and religious identity are intertwined.

Yet it is true that France’s great bounty was also its curse; this western European isthmus forms a natural unit within which the most powerful warlord could dominate, and that man was bound to be based somewhere on the Seine or Loire, close to the continent’s richest wheat-growing area. Yet in the early modern era this unit was far too big to govern effectively — 22 days’ ride from north to south — compared to England or the Netherlands. The author quotes an essayist who suggested that: “France is not a synchronised country: it is like a horse whose four legs move in a different time.”

August 9, 2022

QotD: Fusty old literary archaism

For as long as I can remember, readers have been trained to associate literary archaism with the stuffy and Victorian. Shielded thus, they may not realize that they are learning to avoid a whole dimension of poetry and play in language. Poets, and all other imaginative writers, have been consciously employing archaisms in English, and I should think all other languages, going back at least to Hesiod and Homer. The King James Version was loaded with archaisms, even for its day; Shakespeare uses them not only evocatively in his Histories, but everywhere for colour, and in juxtaposition with his neologisms to increase the shock.

In fairy tales, this “once upon a time” has always delighted children. Novelists, and especially historical novelists, need archaic means to apprise readers of location, in their passage-making through time. Archaisms may paradoxically subvert anachronism, by constantly yet subtly reminding the reader that he is a long way from home.

Get over this adolescent prejudice against archaism, and an ocean of literary experience opens to you. Among other things, you will learn to distinguish one kind of archaism from another, as one kind of sea from another should be recognized by a yachtsman.

But more: a particular style of language is among the means by which an accomplished novelist breaks the reader in. There are many other ways: for instance by showering us with proper nouns through the opening pages, to slow us down, and make us work on the family trees, or mentally squint over local geography. I would almost say that the first thirty pages of any good novel will be devoted to shaking off unwanted readers; or if they continue, beating them into shape. We are on a voyage, and the sooner the passengers get their sea legs, the better life will be all round.

David Warren, “Kristin Lavransdatter”, Essays in Idleness, 2019-03-21.

July 27, 2022

July 2, 2022

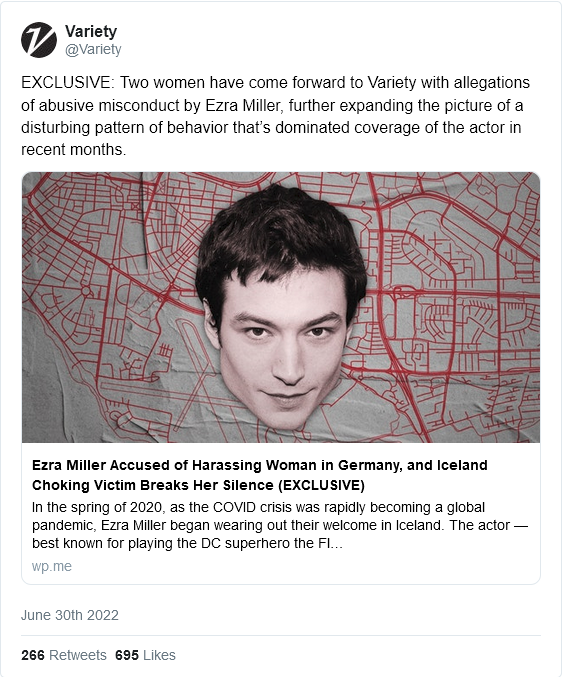

The “preferred pronoun” problem

Jim Treacher is having pronoun problems … not choosing the ones he wants to use or for others to use for him, but the whole “preferred pronoun” imposition on the rest of society to learn, remember, and use properly the bespoke pronoun choices for all the extra, extra-special snowflakes:

Remember when you could just use the pronouns that self-evidently described a person? If you were talking about a fella, you’d say stuff like, “He did this” and “Here’s what I said to him.” Or if you were talking about a lady, you’d say things like, “The 19th Amendment says she can vote now, good for her.” It was a simpler time.

Now pronouns are a frickin’ minefield. You put one little tippy-toe on the wrong pronoun and … BOOM!! A heedless “misgendering” can get you in big trouble. You can get banned from the internet and/or lose your job. For some reason, you’re expected to enable the delusions of any person with trendy mental health issues. It’s not enough for a trans person to call him-, her-, or themselves whatever he, she, or they want. The rest of us are all obligated to go along.

Even if he’s a scumbag criminal like Ezra Miller.

And what’s even worse, this bizarre phenomenon renders news stories about “nonbinary” people almost indecipherable. Just look at this latest story about the ex-Flash actor going around the world being a violent lunatic:

The actor — best known for playing the DC superhero the Flash in several films for Warner Bros. — was set to start filming the studio’s latest entry in the “Harry Potter” franchise, Fantastic Beasts: The Secrets of Dumbledore, in London when the shoot was halted on March 15, 2020, due to COVID. In the weeks after, Miller, who identifies as nonbinary and uses “they/them” pronouns, became a regular at bars in Iceland’s capital, Reykjavík, where locals came to know and even befriend them. Many recognized Miller from their earliest breakout movies, 2012’s The Perks of Being a Wallflower and 2011’s We Need to Talk About Kevin, where they played a troubled teen who brought a bow and arrow to school and murdered his classmates.

It’s a grammatical nightmare. “Many recognized Miller from their earliest breakout movies.” Oh, so those Icelanders worked with Miller on those movies? No, you see, “their” is supposed to refer to him.

And this part is just madness: “They played a troubled teen who brought a bow and arrow to school and murdered his classmates.” So it’s not “They murdered their classmates,” because the character he was playing wasn’t nonbinary? What is this gibberish?

Tom Hanks recently said he regrets playing a gay man in Philadelphia because he’s not gay. I always thought that was just called “acting”, but what do I know. If that’s the case, though, why should a nonbinary person be allowed to play a normal person?

June 14, 2022

The Early Roman Emperors – Part 2: An Empire of Peoples

seangabb

Published 4 Oct 2021

The Roman Empire was the last and the greatest of the ancient empires. It is the origin from which springs the history of Western Europe and those nations that descend from the Western Roman Empire. It is the political entity within which the Christian faith was born, and the growth of the Church within the Empire, and its eventual establishment as the sole faith of the Empire, have left an indelible impression on all modern denominations. Its history, together with that of the ancient Greeks and the Jews, is our history. To understand how the Empire emerged from a great though finally dysfunctional republic, and how it was consolidated by its early rulers, is partly how we understand ourselves.

Here is a series of lectures given by Sean Gabb in late 2021, in which he discusses and tries to explain the achievement of the early Emperors. For reasons of politeness and data protection, all student contributions have been removed.

More by Sean Gabb on the Ancient World: https://www.classicstuition.co.uk/

Learn Latin or Greek or both with him: https://www.udemy.com/user/sean-gabb/

His historical novels (under the pen name “Richard Blake”): https://www.amazon.co.uk/Richard-Blake/e/B005I2B5PO

May 16, 2022

QotD: The difference between surface meaning and actual intent

A basic truism is that languages don’t map exactly over each other and that’s the most likely explanation for this database from China detailing “BreedReady” women. That languages don’t map exactly should be obvious even to the most monolingual of English speakers. We all know that “Let’s have lunch sometime” when said by an American means “Hope to see you never and definitely not while eating”. Similarly, “That’s lovely” when said by a Brit does not necessarily mean it is lovely and “How quaint” isn’t praise for the cuteness of the thing. A Californian invocation to meet Tuesday is in fact a rumination on the possible non-existence of Tuesday.

Tim Worstall, “That Chinese ‘BreedReady’ Database – Check The Translation”, Continental Telegraph, 2019-03-11.