Feral Historian

Published 28 Feb 2025

Star Trek has been the “new frontier” story for so long that it’s become more retro than futurist. But that doesn’t mean the frontier story itself is dead, only that there’s a disconnect between the future we want and the visions of it that we have.

00:00 Intro

02:19 Time and Space

06:06 Inhabited Spaces

09:44 A story of the Past

July 20, 2025

Star Trek and the New Frontier Story

June 25, 2025

H.G. Wells’ Things To Come: Through The Eyes of its Time

Feral Historian

Published 10 Jan 2025

H.G. Wells’ Things To Come played much differently in 1936 than it does today. So much so that it offers us an insight into the politics of the period if we can step back from our post-WWII understanding and look at it on its own terms.

Link to the Coupland essay.

http://digamoo.free.fr/coupland2000.pdf

00:00 Intro

02:08 Revolution Envy

05:15 The Gulf of Time

06:32 Wells and the BUF

08:02 Empire and Establishment

12:11 The World State

15:18 To Understand the Past …

October 23, 2024

The Last Surviving Giant Passenger Hovercraft

The Tim Traveller

Published Jul 2, 2024

In early 1968, Britain launched a revolutionary new form of sea transport: the giant SRN4-class hovercraft. They are the largest passenger hovercraft ever built, and they could fly between England and France in just 30 minutes — three times faster than anything else on the water. So why don’t we have anything like them any more?

MORE INFO

The Hovercraft Museum: https://www.hovercraft-museum.org/

The museum’s “Support Us” page, if you’d like to help them out: https://www.hovercraft-museum.org/sup…

September 16, 2024

Stephen Fry on artificial intelligence

On his Substack, Stephen Fry has posted the text of remarks he made last week in a speech for King’s College London’s Digital Futures Institute:

So many questions. The first and perhaps the most urgent is … by what right do I stand before you and presume to lecture an already distinguished and knowledgeable crowd on the subject of Ai and its meaning, its bright promise and/or/exclusiveOR its dark threat? Well, perhaps by no greater right than anyone else, but no lesser. We’ll come to whose voices are the most worthy of attention later.

I have been interested in the subject of Artificial Intelligence since around the mid-80s when I was fortunate enough to encounter the so-called father of Ai, Marvin Minsky and to read his book The Society of Mind. Intrigued, I devoured as much as I could on the subject, learning about the expert systems and “bundles of agency” that were the vogue then, and I have followed the subject with enthusiasm and gaping wonder ever since. But, I promise you, that makes me neither expert, sage nor oracle. For if you are preparing yourselves to hear wisdom, to witness and receive insight this evening, to bask and bathe in the light of prophecy, clarity and truth, then it grieves me to tell you that you have come to the wrong shop. You will find little of that here, for you must know that you are being addressed this evening by nothing more than an ingenuous simpleton, a naive fool, a ninny-hammer, an addle-pated oaf, a dunce, a dullard and a double-dyed dolt. But before you streak for the exit, bear in mind that so are we all, all of us bird-brained half-wits when it comes to this subject, no matter what our degrees, doctorates and decades of experience. I can perhaps congratulate myself, or at least console myself, with the fact that I am at least aware of my idiocy. This is not fake modesty designed to make me come across as a Socrates. But that great Athenian did teach us that our first step to wisdom is to realise and confront our folly.

I’ll come to the proof of how and why I am so boneheaded in a moment, but before I go any further I’d like to paint some pictures. Think of them as tableaux vivants played onto a screen at the back of your mind. We’ll return to them from time to time. Of course I could have generated these images from Midjourney or Dall-E or similar and projected them behind me, but the small window of time in which it was amusing and instructive for speakers to use Ai as an entertaining trick for talks concerning Ai has thankfully closed. You’re actually going to have to use your brain’s own generative latent diffusion skills to summon these images.

[…]

An important and relevant point is this: it wasn’t so much the genius of Benz that created the internal combustion engine, as that of Vladimir Shukhov. In 1892, the Russian chemical engineer found a way of cracking and refining the spectrum of crude oil from methane to tar yielding amongst other useful products, gasoline. It was just three years after that that Benz’s contraption spluttered into life. Germans, in a bow to this, still call petrol Benzin. John D. Rockefeller built his refineries and surprisingly quickly there was plentiful fuel and an infrastructure to rival the stables and coaching inns; the grateful horse meanwhile could be happily retired to gymkhanas, polo and royal processions.

Benz’s contemporary Alexander Graham Bell once said of his invention, the telephone, “I don’t think I am being overconfident when I say that I truly believe that one day there will be a telephone in every town in America”. And I expect you all heard that Thomas Watson, the founding father of IBM, predicted that there might in the future be a world market for perhaps five digital computers.

Well, that story of Thomas Watson ever saying such a thing is almost certainly apocryphal. There’s no reliable record of it. Ditto the Alexander Graham Bell remark. But they circulate for a reason. The Italians have a phrase for that: se non e vero, e ben trovato. “If it’s not true, it’s well founded.” Those stories, like my scenario of that group of early investors and journalists clustering about the first motorcar, illustrate an important truth: that we are decidedly hopeless at guessing where technology is going to take us and what it’ll do to us.

You might adduce as a counterargument Gordon Moore of Intel expounding in 1965 his prediction that semiconductor design and manufacture would develop in such a way that every eighteen months or so they would be able to double the number of transistors that could fit in the same space on a microchip. “He got that right,” you might say, “Moore’s Law came true. He saw the future.” Yes … but. Where and when did Gordon Moore foresee Facebook, TikTok, YouTube, Bit Coin, OnlyFans and the Dark Web? It’s one thing to predict how technology changes, but quite another to predict how it changes us.

Technology is a verb, not a noun. It is a constant process, not a settled entity. It is what the philosopher-poet T. E. Hulme called a concrete flux of interpenetrating intensities; like a river it is ever cutting new banks, isolating new oxbow lakes, flooding new fields. And as far as the Thames of Artificial Intelligence is concerned, we are still in Gloucestershire, still a rivulet not yet a river. Very soon we will be asking round the dinner table, “Who remembers ChatGPT?” and everyone will laugh. Older people will add memories of dot matrix printers and SMS texting on the Nokia 3310. We’ll shake our heads in patronising wonder at the past and its primitive clunkiness. “How advanced it all seemed at the time …”

Those of us who can kindly be designated early adopters and less kindly called suckers remember those pioneering days with affection. The young internet was the All-Gifted, which in Greek is Pandora. Pandora in myth was sent down to earth having been given by the gods all the talents. Likewise the Pandora internet: a glorious compendium of public museum, library, gallery, theatre, concert hall, park, playground, sports field, post office and meeting hall.

July 4, 2024

“In other words, God is a deliverable for the R&D team”

Ted Gioia isn’t impressed with the changes we’ve seen over the years among the Silicon Valley leadership:

Yes, I should have been alarmed when this cult-ish ideology took off in Silicon Valley — where the goal had previously been incremental progress (Moore’s law and all that) and not being evil.

When I first came to Silicon Valley at age 17, the two leading technologists in the region were named William Hewlett and David Packard. They used their extra cash to fund schools, museums, and hospitals — both my children were born at the Lucile Packard Children’s Hospital — not immortality machines, or rockets to Mars, or a dystopian Internet of brains, or worshipping at the Church of the Singularity.

Tech leaders were built differently back then. When famous historian Arnold Toynbee visited Stanford in 1963, he had a chance encounter with William Hewlett. Afterwards Toynbee marveled over his new acquaintance, declaring: “What an amazing fellow. He has more knowledge of history than many historians.”

In other words, Bill Hewlett had more wisdom than ego. He invested in the community where he lived — not the Red Planet. Instead of promulgating social engineering schemes, Hewlett and Packard built a new engineering school at their alma mater, and named it after their favorite teacher.

They wouldn’t recognize Silicon Valley today. The FM-2030s are now in charge.

Another warning sign came when Google hired cult-ish tech guru Ray Kurzweil — a man who had once created a reasonable music keyboard that even Stevie Wonder used.

But Kurzweil went on to write starry-eyed books of utopian tech worship which come straight out of the weird religion playbook (The Age of Spiritual Machines, The Singularity is Near, etc.)

What does tech look like when it gets turned into a religion? Kurzweil summed it up when asked if there is a God. His response: “Not yet.”

In other words, God is a deliverable for the R&D team.

I note that, when Forbes revisited Ray Kurzweil’s predictions, they found that almost every one went wrong.

So what does he do?

Kurzweil follows up his book The Singularity is Near with a new book entitled The Singularity is Nearer. Give the man credit for hubris. This is exactly what religious cults do when their predicted Rapture doesn’t occur.

They just change the date on the calendar — Utopia has been delayed for another 12 months.

But, of course, Utopia is always delayed another 12 months. Meanwhile the cult leaders can do a lot of damage while preparing for the Rapture.

And despite the techno-elite’s apparent endless quest for perfection in their own lives, the enshittification of the technology they deliver to us proles continues relentlessly:

Here’s a curious fact. The more they brag about their utopias, the worse their products and services get.

Even the word upgrade is now a joke — whenever a tech company promises it, you can bet it will be a downgrade in your experience. That’s not just my view, but overwhelmingly supported by survey respondents.

For the first time since the dawn of the Renaissance, innovation is now feared by the vast majority of people. And the tech leaders, once admired and emulated, now rank among the least trustworthy people in the world.

It was different when Linus Pauling was peddling his horse pills — he eventually set up shop in Big Sur, far south of the tech industry, in order to find a hospitable home for his wackiest ideas.

Nowadays, Big Sur thinking has come to the Valley.

And when you set up cults inside the largest corporations in the history of the world, we are all endangered.

Just imagine if Linus Pauling had enjoyed the power to force everybody to take his huge vitamin doses. Just imagine if Bill Shockley had possessed the authority to impose his racist eugenics theories on the populace.

It’s scary to think of. But they couldn’t do it, because they didn’t have billions of dollars, and run trillion-dollar companies with politicians at their beck and call.

But the current cultists include the wealthiest people in the world, and they are absolutely using their immense power to set rules for the rest of us. If you rely on Apple or Google or some other huge web behemoth — and who doesn’t? — you can’t avoid this constant, bullying manipulation.

The cult is in charge. And it’s like we’re all locked into an EST training session — nobody gets to leave even for bathroom breaks.

There’s now overwhelming evidence of how destructive the new tech can be. Just look at the metrics. The more people are plugged in, the higher are their rates of depression, suicidal tendencies, self-harm, mental illness, and other alarming indicators.

If this is what the tech cults have already delivered, do we really want to give them another 12 months? Do you really want to wait until they deliver the Rapture?

April 22, 2024

The internal stresses of the modern techno-optimist family

Ted Gioia on the joys of techno-optimism (as long as you don’t have to eat Meal 3.0, anyway):

We were now the ideal Techno-Optimist couple. So imagine my shock when I heard crashing and thrashing sounds from the kitchen. I rushed in, and could hardly believe my eyes.

Tara had taken my favorite coffee mugs, and was pulverizing them with a sledgehammer. I own four of these — and she had already destroyed three of them.

This was alarming. Those coffee mugs are like my personal security blanket.

“What are you doing?” I shouted.

“We need to move fast and break things”, she responded, a steely look in her eyes. “That’s what Mark Zuckerberg tells us to do.”

“But don’t destroy my coffee mugs!” I pleaded.

“It’s NOT destruction,” she shouted. “It’s creative destruction! You haven’t read your Schumpeter, or you’d know the difference.”

She was right — it had been a long time since I’d read Schumpeter, and only had the vaguest recollection of those boring books. Didn’t he drink coffee? I had no idea. So I watched helplessly as Tara smashed the final mug to smithereens.

I was at a loss for words. But when she turned to my prized 1925 Steinway XR-Grand piano, I let out an involuntary shriek.

No, no, no, no — not the Steinway.

She hesitated, and then spoke with eerie calmness: “I understand your feelings. But is this analog input system something a Techno-Optimist family should own?”

I had to think fast. Fortunately I remembered that my XR-Grand was a strange Steinway, and it originally had incorporated a player piano mechanism (later removed from my instrument). This gave me an idea:

I started improvising (one of my specialties):

You’re absolutely right. A piano is a shameful thing for a Techno-Optimist to own. Our music should express Dreams of Tomorrow. [I hummed a few bars.] But this isn’t really a piano — you need to consider it as a high performance peripheral, with limitless upgrade potential.

I opened the bottom panel, and pointed to the empty space where the player piano mechanism had once been. “This is where we insert the MIDI interface. Just wait and see.”

She paused, and thought it over — but still kept the sledgehammer poised in midair. Then asked: “Are you sure this isn’t just an outmoded legacy system?”

“Trust me, baby,” I said with all the confidence I could muster. “Together we can transform this bad boy into a cutting edge digital experience platform. We will sail on it together into the Metaverse.”

She hesitated — then put down the sledgehammer. Disaster averted!

“You’re blinding me with science, my dear,” I said to her in my most conciliatory tone.

“Technology!” she responded with a saucy grin.

April 6, 2024

Three AI catastrophe scenarios

David Friedman considers the threat of an artificial intelligence catastrophe and the possible solutions for humanity:

Earlier I quoted Kurzweil’s estimate of about thirty years to human level A.I. Suppose he is correct. Further suppose that Moore’s law continues to hold, that computers continue to get twice as powerful every year or two. In forty years, that makes them something like a hundred times as smart as we are. We are now chimpanzees, perhaps gerbils, and had better hope that our new masters like pets. (Future Imperfect Chapter XIX: Dangerous Company)

As that quote from a book published in 2008 demonstrates, I have been concerned with the possible downside of artificial intelligence for quite a while. The creation of large language models producing writing and art that appears to be the work of a human level intelligence got many other people interested. The issue of possible AI catastrophes has now progressed from something that science fiction writers, futurologists, and a few other oddballs worried about to a putative existential threat.

Large Language models work by mining a large database of what humans have written, deducing what they should say by what people have said. The result looks as if a human wrote it but fits the takeoff model, in which an AI a little smarter than a human uses its intelligence to make one a little smarter still, repeated to superhuman, poorly. However powerful the hardware that an LLM is running on, it has no superhuman conversation to mine, so better hardware should make it faster but not smarter. And although it can mine a massive body of data on what humans say in order to figure out what it should say, it has no comparable body of data for what humans do when they want to take over the world.

If that is right, the danger of superintelligent AIs is a plausible conjecture for the indefinite future but not, as some now believe, a near certainty in the lifetime of most now alive.

[…]

If AI is a serious, indeed existential, risk, what can be done about it?

I see three approaches:

I. Keep superhuman level AI from being developed.

That might be possible if we had a world government committed to the project but (fortunately) we don’t. Progress in AI does not require enormous resources so there are many actors, firms and governments, that can attempt it. A test of an atomic weapon is hard to hide but a test of an improved AI isn’t. Better AI is likely to be very useful. A smarter AI in private hands might predict stock market movements a little better than a very skilled human, making a lot of money. A smarter AI in military hands could be used to control a tank or a drone, be a soldier that, once trained, could be duplicated many times. That gives many actors a reason to attempt to produce it.

If the issue was building or not building a superhuman AI perhaps everyone who could do it could be persuaded that the project is too dangerous, although experience with the similar issue of Gain of Function research is not encouraging. But at each step the issue is likely to present itself as building or not building an AI a little smarter than the last one, the one you already have. Intelligence, of a computer program or a human, is a continuous variable; there is no obvious line to avoid crossing.

When considering the down side of technologies–Murder Incorporated in a world of strong privacy or some future James Bond villain using nanotechnology to convert the entire world to gray goo – your reaction may be “Stop the train, I want to get off.” In most cases, that is not an option. This particular train is not equipped with brakes. (Future Imperfect, Chapter II)

II. Tame it, make sure that the superhuman AI is on our side.

Some humans, indeed most humans, have moral beliefs that affect their actions, are reluctant to kill or steal from a member of their ingroup. It is not absurd to believe that we could design a human level artificial intelligence with moral constraints and that it could then design a superhuman AI with similar constraints. Human moral beliefs apply to small children, for some even to some animals, so it is not absurd to believe that a superhuman could view humans as part of its ingroup and be reluctant to achieve its objectives in ways that injured them.

Even if we can produce a moral AI there remains the problem of making sure that all AI’s are moral, that there are no psychopaths among them, not even ones who care about their peers but not us, the attitude of most humans to most animals. The best we can do may be to have the friendly AIs defending us make harming us too costly to the unfriendly ones to be worth doing.

III. Keep up with AI by making humans smarter too.

The solution proposed by Raymond Kurzweil is for us to become computers too, at least in part. The technological developments leading to advanced A.I. are likely to be associated with much greater understanding of how our own brains work. That might make it possible to construct much better brain to machine interfaces, move a substantial part of our thinking to silicon. Consider 89352 times 40327 and the answer is obviously 3603298104. Multiplying five figure numbers is not all that useful a skill but if we understand enough about thinking to build computers that think as well as we do, whether by design, evolution, or reverse engineering ourselves, we should understand enough to offload more useful parts of our onboard information processing to external hardware.

Now we can take advantage of Moore’s law too.

A modest version is already happening. I do not have to remember my appointments — my phone can do it for me. I do not have to keep mental track of what I eat, there is an app which will be happy to tell me how many calories I have consumed, how much fat, protein and carbohydrates, and how it compares with what it thinks I should be doing. If I want to keep track of how many steps I have taken this hour my smart watch will do it for me.

The next step is a direct mind to machine connection, currently being pioneered by Elon Musk’s Neuralink. The extreme version merges into uploading. Over time, more and more of your thinking is done in silicon, less and less in carbon. Eventually your brain, perhaps your body as well, come to play a minor role in your life, vestigial organs kept around mainly out of sentiment.

As our AI becomes superhuman, so do we.

March 25, 2024

Vernor Vinge, RIP

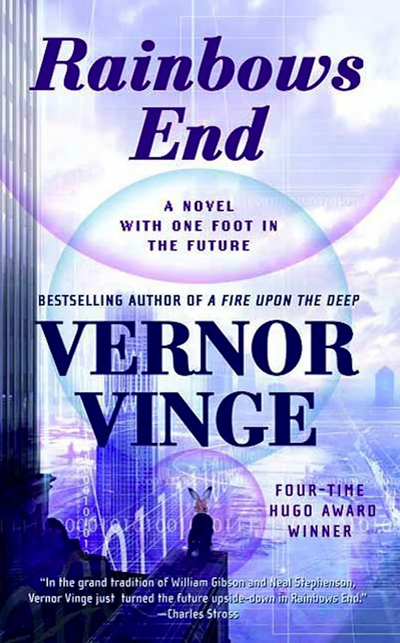

Glenn Reynolds remembers science fiction author Vernor Vinge, who died last week aged 79, reportedly from complications of Parkinson’s Disease:

Vernor Vinge has died, but even in his absence, the rest of us are living in his world. In particular, we’re living in a world that looks increasingly like the 2025 of his 2007 novel Rainbows End. For better or for worse.

[…]

Vinge is best known for coining the now-commonplace term “the singularity” to describe the epochal technological change that we’re in the middle of now. The thing about a singularity is that it’s not just a change in degree, but a change in kind. As he explained it, if you traveled back in time to explain modern technology to, say, Mark Twain – a technophile of the late 19th Century – he would have been able to basically understand it. He might have doubted some of what you told him, and he might have had trouble grasping the significance of some of it, but basically, he would have understood the outlines.

But a post-singularity world would be as incomprehensible to us as our modern world is to a flatworm. When you have artificial intelligence (and/or augmented human intelligence, which at some point may merge) of sufficient power, it’s not just smarter than contemporary humans. It’s smart to a degree, and in ways, that contemporary humans simply can’t get their minds around.

I said that we’re living in Vinge’s world even without him, and Rainbows End is the illustration. Rainbows End is set in 2025, a time when technology is developing increasingly fast, and the first glimmers of artificial intelligence are beginning to appear – some not so obviously.

Well, that’s where we are. The book opens with the spread of a new epidemic being first noticed not by officials but by hobbyists who aggregate and analyze publicly available data. We, of course, have just come off a pandemic in which hobbyists and amateurs have in many respects outperformed public health officialdom (which sadly turns out to have been a genuinely low bar to clear). Likewise, today we see people using networks of iPhones (with their built in accelerometers) to predict and observe earthquakes.

But the most troubling passage in Rainbows End is this one:

Every year, the civilized world grew and the reach of lawlessness and poverty shrank. Many people thought that the world was becoming a safer place … Nowadays Grand Terror technology was so cheap that cults and criminal gangs could acquire it. … In all innocence, the marvelous creativity of humankind continued to generate unintended consequences. There were a dozen research trends that could ultimately put world-killer weapons in the hands of anyone having a bad hair day.

Modern gene-editing techniques make it increasingly easy to create deadly pathogens, and that’s just one of the places where distributed technology is moving us toward this prediction.

But the big item in the book is the appearance of artificial intelligence, and how that appearance is not as obvious or clear as you might have thought it would be in 2005. That’s kind of where we are now. Large Language Models can certainly seem intelligent, and are increasingly good enough to pass a Turing Test with naïve readers, though those who have read a lot of Chat GPT’s output learn to spot it pretty well. (Expect that to change soon, though).

December 31, 2023

QotD: Orwell as a “failed prophet”

Some critics do not fault [Nineteen Eighty-Four] on artistic grounds, but rather judge its vision of the future as wildly off-base. For them, Orwell is a naïve prophet. Treating Orwell as a failed forecaster of futuristic trends, some professional “futurologists” have catalogued no fewer than 160 “predictions” that they claim are identifiable in Orwell’s allegedly poorly imagined novel, pertaining to the technical gadgetry, the geopolitical alignments, and the historical timetable.

Admittedly, if Orwell was aiming to prophesy, he misfired. Oceania is a world in which the ruling elite espouses no ideology except the brutal insistence that “might makes right”. Tyrannical regimes today still promote ideological orthodoxy — and punish public protest, organized dissidence, and conspicuous deviation. (Just ask broad swaths of the citizenry in places such as North Korea, Venezuela, Cuba, and mainland China.) Moreover, the Party in Oceania mostly ignores “the proles”. Barely able to subsist, they are regarded by the regime as harmless. The Party does not bother to monitor or indoctrinate them, which is not at all the case with the “Little Brothers” that have succeeded Hitler and Stalin on the world stage.

Rather than promulgate ideological doctrines and dogmas, the Party of Oceania exalts power, promotes leader worship, and builds cults of personality. In Room 101, O’Brien douses Winston’s vestigial hope to resist the brainwashing or at least to leave some scrap of a legacy that might give other rebels hope. “Imagine,” declares O’Brien, “a boot stamping on a human face — forever.” That is the future, he says, and nothing else. Hatred in Oceania is fomented by periodic “Hate Week” rallies where the Outer Party members bleat “Two Minutes Hate” chants, threatening death to the ever-changing enemy. (Critics of the Trump rallies during and since the presidential campaign compare the chants of his supporters — such as “Lock Her Up” about “Crooked Hillary” Clinton and her alleged crimes — to the Hate Week rallies in Nineteen Eighty-Four.)

Yet all of these complaints about the purported shortcomings of Nineteen Eighty-Four miss the central point. If Orwell “erred” in his predictions about the future, that was predictable — because he wasn’t aiming to “predict” or “forecast” the future. His book was not a prophecy; it was — and remains — a warning. Furthermore, the warning expressed by Orwell was so potent that this work of fiction helped prevent such a dire future from being realized. So effective were the sirens of the sentinel that the predictions of the “prophet” never were fulfilled.

Nineteen Eighty-Four voices Orwell’s still-relevant warning of what might have happened if certain global trends of the early postwar era had continued. And these trends — privacy invasion, corruption of language, cultural drivel and mental debris (prolefeed), bowdlerization (or “rectification”) of history, vanquishing of objective truth — persist in our own time. Orwell was right to warn his readers in the immediate aftermath of the defeat of Hitler and the still regnant Stalin in 1949. And his alarms still resound in the 21st century. Setting aside arguments about forecasting, it is indisputable that surveillance in certain locales, including in the “free” world of the West, resembles Big Brother’s “telescreens” everywhere in Oceania, which undermine all possibility of personal privacy. For instance, in 2013, it was estimated that England had 5.9 million CCTV cameras in operation. The case is comparable in many European and American places, especially in urban centers. (Ironically, it was revealed not long ago that the George Orwell Square in downtown Barcelona — christened to honor him for his fighting against the fascists in the Spanish Civil War — boasts several hidden security cameras.)

Cameras are just one, almost old-fashioned technology that violates our privacy, and our freedoms of speech and association. The power of Amazon, Google, Facebook, and other web systems to track our everyday activities is far beyond anything that Orwell imagined. What would he think of present-day mobile phones?

John Rodden and John Rossi, “George Orwell Warned Us, But Was Anyone Listening?”, The American Conservative, 2019-10-02.

August 27, 2023

When the techno-utopians proclaimed the end of the book

In the latest SHuSH newsletter, Ken Whyte harks back to a time when brash young tech evangelists were lining up to bury the ancient codex because everything would be online, accessible, indexed, and (presumably) free to access. That … didn’t happen the way they foresaw:

By the time I picked up Is This a Book?, a slim new volume from Angus Phillips and Miha Kovač, I’d forgotten the giddy digital evangelism of the mid-Aughts.

In 2006, for instance, a New York Times piece by Kevin Kelly, the self-styled “senior maverick” at Wired, proclaimed the end of the book.

It was already happening, Kelly wrote. Corporations and libraries around the world were scanning millions of books. Some operations were using robotics that could digitize 1,000 pages an hour, others assembly lines of poorly paid Chinese labourers. When they finished their work, all the books from all the libraries and archives in the world would be compressed onto a 50 petabyte hard disk which, said Kelly, would be as big as a house. But within a few years, it would fit in your iPod (the iPhone was still a year away; the iPad three years).

“When that happens,” wrote Kelly, “the library of all libraries will ride in your purse or wallet — if it doesn’t plug directly into your brain with thin white cords.”

But that wasn’t what really excited Kelly. “The chief revolution birthed by scanning books”, he ventured, would be the creation of a universal library in which all books would be merged into “one very, very, very large single text”, “the world’s only book”, “the universal library.”

The One Big Text.

In the One Big Text, every word from every book ever written would be “cross-linked, clustered, cited, extracted, indexed, analyzed, annotated, remixed, reassembled and woven deeper into the culture than ever before”.

“Once text is digital”, Kelly continued, “books seep out of their bindings and weave themselves together. The collective intelligence of a library allows us to see things we can’t see in a single, isolated book.”

Readers, liberated from their single isolated books, would sit in front of their virtual fireplaces following threads in the One Big Text, pulling out snippets to be remixed and reordered and stored, ultimately, on virtual bookshelves.

The universal book would be a great step forward, insisted Kelly, because it would bring to bear not only the books available in bookstores today but all the forgotten books of the past, no matter how esoteric. It would deepen our knowledge, our grasp of history, and cultivate a new sense of authority because the One Big Text would indisputably be the sum total of all we know as a species. “The white spaces of our collective ignorance are highlighted, while the golden peaks of our knowledge are drawn with completeness. This degree of authority is only rarely achieved in scholarship today, but it will become routine.”

And it was going to happen in a blink, wrote Kelly, if the copyright clowns would get out of the way and let the future unfold. He recognized that his vision would face opposition from authors and publishers and other friends of the book. He saw the clash as East Coast (literary) v. West Coast (tech), and mistook it for a dispute over business models. To his mind, authors and publishers were eager to protect their livelihoods, which depended on selling one copyright-protected physical book at a time, and too self-interested to realize that digital technology had rendered their business models obsolete. Silicon Valley, he said, had made copyright a dead letter. Knowledge would be free and plentiful — nothing was going to stop its indiscriminate distribution. Any efforts to do so would be “rejected by consumers and ignored by pirates”. Books, music, video — all of it would be free.

Kelly wasn’t altogether wrong. He’d just taken a narrow view of the book. He was seeing it as a container of information, an individual reference work packed with data, facts, and useful knowledge that needed to be agglomerated in his grander project. That has largely happened with books designed simply to convey information — manuals, guides, dissertations, and actual reference books. You can’t buy a good printed encyclopedia today and most scientific papers are now in databases rather than between covers.

What Kelly missed was that most people see the book as more than a container of information. They read for many reasons besides the accumulation of knowledge. They read for style and story. They read to feel, to connect, to stimulate their imaginations, to escape. They appreciate the isolated book as an immersive journey in the company of a compelling human voice.

June 19, 2023

1963: Mockumentary Predicts The Future of 1988 | Time On Our Hands | Past Predictions | BBC

BBC Archive

Published 17 Jun 2023

Russian moon landings, week long traffic jams, a workforce replaced by automation and above all, too much leisure time!

These are just some of the bold predictions made in Don Haworth’s 1963 BBC “mockumentary” Time on Our Hands – a remarkable film which projects the viewer a quarter of a century into the future.

Imagine how the futuristic inhabitants of 1988 — a society freed from the shackles of endless hard work — might reflect on the way people live and work in 1963. Its aim is to look back at the extraordinary, almost unbelievable, events of the intervening 25 years — referred to as “the years of the transformation”.

“This Buoyant programme could be repeated a dozen times and still intrigue, delight and disturb me”

Dennis Potter, Daily Herald TV critic, 1963

This footage is compiled of excerpts from Time On Our Hands, a faux-documentary film by Don Haworth.

Originally broadcast 19 March, 1963.

May 31, 2023

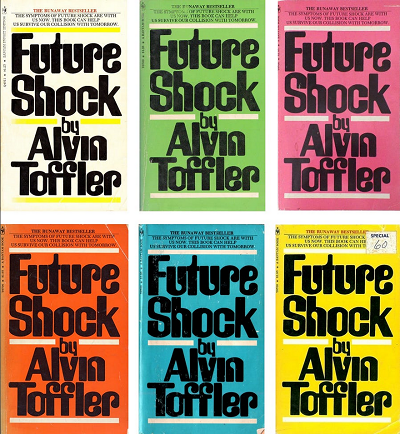

Alvin Toffler may have been utterly wrong in Future Shock, but I suspect his huge royalty cheques helped soften the pain

Ted Gioia on the huge bestseller by Alvin Toffler that got its predictions backwards:

Back in 1970, Alvin Toffler predicted the future. It was a disturbing forecast, and everybody paid attention.

People saw his book Future Shock everywhere. I was just a freshman in high school, but even I bought a copy (the purple version). And clearly I wasn’t alone — Clark Drugstore in my hometown had them piled high in the front of the store.

The book sold at least six million copies and maybe a lot more (Toffler’s website claims 15 million). It was reviewed, translated, and discussed endlessly. Future Shock turned Toffler — previously a freelance writer with an English degree from NYU — into a tech guru applauded by a devoted global audience.

Toffler showed up on the couch next to Johnny Carson on The Tonight Show. Other talk show hosts (Dick Cavett, Mike Douglas, etc.) invited him to their couches too. CBS featured Toffler alongside Arthur C. Clarke and Buckminster Fuller as trusted guides to the future. Playboy magazine gave him a thousand dollar award just for being so smart.

Toffler parlayed this pop culture stardom into a wide range of follow-up projects and businesses, from consulting to professorships. When he died in 2016, at age 87, obituaries praised Alvin Toffler as “the most influential futurist of the 20th century”.

But did he deserve this notoriety and praise?

Future Shock is a 500 page book, but the premise is simple: Things are changing too damn fast.

Toffler opens an early chapter by telling the story of Ricky Gallant, a youngster in Eastern Canada who died of old age at just eleven. He was only a kid, but already suffered from “senility, hardened arteries, baldness, slack, and wrinkled skin. In effect, Ricky was an old man when he died.”

Toffler didn’t actually say that this was going to happen to all of us. But I’m sure more than a few readers of Future Shock ran to the mirror, trying to assess the tech-driven damage in their own faces.

“The future invades our lives”, he claims on page one. Our bodies and minds can’t cope with this. Future shock is a “real sickness”, he insists. “It is the disease of change.”

As if to prove this, Toffler’s publisher released the paperback edition of Future Shock with six different covers — each one a different color. The concept was brilliant. Not only did Future Shock say that things were constantly changing, but every time you saw somebody reading it, the book itself had changed.

Of course, if you really believed Future Shock was a disease, why would you aggravate it with a stunt like this? But nobody asked questions like that. Maybe they were too busy looking in the mirror for “baldness, slack, and wrinkled skin”.

Toffler worried about all kinds of change, but technological change was the main focus of his musings. When the New York Times reviewed his book, it announced in the opening sentence that “Technology is both hero and villain of Future Shock”.

During his brief stint at Fortune magazine, Toffler often wrote about tech, and warned about “information overload”. The implication was that human beings are a kind of data storage medium — and they’re running out of disk space.

March 31, 2023

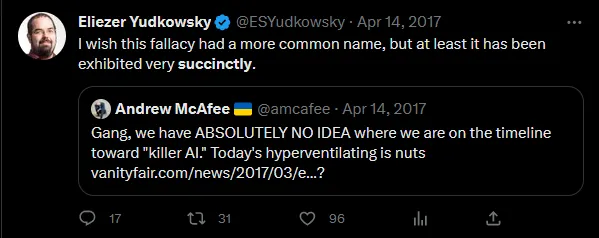

“We have absolutely no idea how AI will go, it’s radically uncertain”… “Therefore, it’ll be fine” (?)

Scott Alexander on the Safe Uncertainty Fallacy, which is particularly apt in artificial intelligence research these days:

The Safe Uncertainty Fallacy goes:

- The situation is completely uncertain. We can’t predict anything about it. We have literally no idea how it could go.

- Therefore, it’ll be fine.

You’re not missing anything. It’s not supposed to make sense; that’s why it’s a fallacy.

For years, people used the Safe Uncertainty Fallacy on AI timelines:

Since 2017, AI has moved faster than most people expected; GPT-4 sort of qualifies as an AGI, the kind of AI most people were saying was decades away. When you have ABSOLUTELY NO IDEA when something will happen, sometimes the answer turns out to be “soon”.

Now Tyler Cowen of Marginal Revolution tries his hand at this argument. We have absolutely no idea how AI will go, it’s radically uncertain:

No matter how positive or negative the overall calculus of cost and benefit, AI is very likely to overturn most of our apple carts, most of all for the so-called chattering classes.

The reality is that no one at the beginning of the printing press had any real idea of the changes it would bring. No one at the beginning of the fossil fuel era had much of an idea of the changes it would bring. No one is good at predicting the longer-term or even medium-term outcomes of these radical technological changes (we can do the short term, albeit imperfectly). No one. Not you, not Eliezer, not Sam Altman, and not your next door neighbor.

How well did people predict the final impacts of the printing press? How well did people predict the final impacts of fire? We even have an expression “playing with fire.” Yet it is, on net, a good thing we proceeded with the deployment of fire (“Fire? You can’t do that! Everything will burn! You can kill people with fire! All of them! What if someone yells “fire” in a crowded theater!?”).

Therefore, it’ll be fine:

I am a bit distressed each time I read an account of a person “arguing himself” or “arguing herself” into existential risk from AI being a major concern. No one can foresee those futures! Once you keep up the arguing, you also are talking yourself into an illusion of predictability. Since it is easier to destroy than create, once you start considering the future in a tabula rasa way, the longer you talk about it, the more pessimistic you will become. It will be harder and harder to see how everything hangs together, whereas the argument that destruction is imminent is easy by comparison. The case for destruction is so much more readily articulable — “boom!” Yet at some point your inner Hayekian (Popperian?) has to take over and pull you away from those concerns. (Especially when you hear a nine-part argument based upon eight new conceptual categories that were first discussed on LessWrong eleven years ago.) Existential risk from AI is indeed a distant possibility, just like every other future you might be trying to imagine. All the possibilities are distant, I cannot stress that enough. The mere fact that AGI risk can be put on a par with those other also distant possibilities simply should not impress you very much.

So we should take the plunge. If someone is obsessively arguing about the details of AI technology today, and the arguments on LessWrong from eleven years ago, they won’t see this. Don’t be suckered into taking their bait.

Look. It may well be fine. I said before my chance of existential risk from AI is 33%; that means I think there’s a 66% chance it won’t happen. In most futures, we get through okay, and Tyler gently ribs me for being silly.

Don’t let him. Even if AI is the best thing that ever happens and never does anything wrong and from this point forward never even shows racial bias or hallucinates another citation ever again, I will stick to my position that the Safe Uncertainty Fallacy is a bad argument.

March 16, 2023

Once it was possible to be a fully fledged techno-optimist … but things have changed for the worse

Glenn Reynolds on how he “lost his religion” about the bright, shiny techno-future so many of us looked forward to:

Listening to that song reminded me of how much more overtly optimistic I was about technology and the future at the turn of the millennium. I realized that I’m somewhat less so now. But why? In truth, I think my more negative attitude has to do with people more than with the machines that Embrace the Machine characterizes as “children of our minds”. (I stole that line from Hans Moravec. Er, I mean it’s a “homage”.) But maybe there’s a connection there, between creators and creations.

It was easy to be optimistic in the 90s and at the turn of the millennium. The Soviet Union lost the Cold War, the Berlin Wall fell, and freedom and democracy and prosperity were on the march almost everywhere. Personal technology was booming, and its dark sides were not yet very apparent. (And the darker sides, like social media and smartphones, basically didn’t exist.)

And the tech companies, then, were run by people who looked very different from the people who run them now – even when, as in the case of Bill Gates, they were the same people. It’s easy to forget that Gates was once a rather libertarian figure, who boasted that Microsoft didn’t even have an office in Washington, DC. The Justice Department, via its Antitrust Division, punished him for that, and he has long since lost any libertarian inclinations, to put it mildly.

It’s a different world now. In the 1990s it seemed plausible that the work force of tech companies would rise up in revolt if their products were used for repression. In the 2020s, they rise up in revolt if they aren’t. Commercial tech products spy on you, censor you, and even stop you from doing things they disapprove of. Apple nowadays looks more like Big Brother than like a tool to smash Big Brother as presented in its famous 1984 commercial.

Silicon Valley itself is now a bastion of privilege, full of second- and third-generation tech people, rich Stanford alumni, and VC scions. It’s not a place that strives to open up society, but a place that wants to lock in the hierarchy, with itself on top. They’re pulling up the ladders just as fast as they can.

February 15, 2023

Refuting The End of History and the Last Man

Freddie deBoer responds to a recent commentary defending the thesis of Francis Fukuyama’s The End of History and the Last Man:

… Ned Resnikoff critiques a recent podcast by Hobbes and defends Francis Fukuyama’s concept of “the end of history”. In another case of strange bedfellows, the liberal Resnikoff echoes conservative Richard Hanania in his defense of Fukuyama — echoes not merely in the fact that he defends Fukuyama too, but in many of the specific terms and arguments of Hanania’s defense. And both make the same essential mistake, failing to understand the merciless advance of history and how it ceaselessly grinds up humanity’s feeble attempts at macrohistoric understanding. And, yes, to answer Resnikoff’s complaint, I’ve read the book, though it’s been a long time.

The big problem with The End of History and the Last Man is that history is long, and changes to the human condition are so extreme that the terms we come up with to define that condition are inevitably too contextual and limited to survive the passage of time. We’re forever foolishly deciding that our current condition is the way things will always be. For 300,000 years human beings existed as hunter-gatherers, a vastly longer period of time than we’ve had agriculture and civilization. Indeed, if aliens were to take stock of the basic truth of the human condition, they would likely define us as much by that hunter-gatherer past as our technological present; after all, that was our reality for far longer. Either way – those hunter-gatherers would have assumed that their system wasn’t going to change, couldn’t comprehend it changing, didn’t see it as a system at all, and for 3000 centuries, they would have been right. But things changed.

And for thousands of years, people living at the height of human civilization thought that there was no such thing as an economy without slavery; it’s not just that they had a moral defense of slavery, it’s that they literally could not conceive of the daily functioning of society without slavery. But things changed. For most humans for most of modern history, the idea of dynastic rule and hereditary aristocracy was so intrinsic and universal that few could imagine an alternative. But things changed. And for hundreds of years, people living under feudalism could not conceive of an economy that was not fundamentally based on the division between lord and serf, and in fact typically talked about that arrangement as being literally ordained by God. But things changed. For most of human history, almost no one questioned the inherent and unalterable second-class status of women. Civilization is maybe 12,000 years old; while there’s proto-feminist ideas to be found throughout history, the first wave of organized feminism is generally defined as only a couple hundred years old. It took so long because most saw the subordination of women as a reflection of inherent biological reality. But women lead countries now. You see, things change.

And what Fukuyama and Resnikoff and Hanania etc are telling you is that they’re so wise that they know that “but then things changed” can never happen again. Not at the level of the abstract social system. They have pierced the veil and see a real permanence where humans of the past only ever saw a false one. I find this … unlikely. Resnikoff writes “Maybe you think post-liberalism is coming; it just has yet to be born. I guess that’s possible.” Possible? The entire sweep of human experience tells us that change isn’t just possible, it’s inevitable; not just change at the level of details, but changes to the basic fabric of the system.

The fact of the matter is that, at some point in the future, human life will be so different from what it’s like now, terms like liberal democracy will have no meaning. In 200 years, human beings might be fitted with cybernetic implants in utero by robots and jacked into a virtual reality that we live in permanently, while artificial intelligence takes care of managing the material world. In that virtual reality we experience only a variety of pleasures that are produced through direct stimulation of the nervous system. There is no interaction with other human beings as traditionally conceived. What sense would the term “liberal democracy” even make under those conditions? There are scientifically-plausible futures that completely undermine our basic sense of what it means to operate as human beings. Is one of those worlds going to emerge? I don’t know! But then, Fukuyama doesn’t know either, and yet one of us is making claims of immense certainty about the future of humanity. And for the record, after the future that we can’t imagine comes an even more distant future we can’t conceive of.

People tend to say, but the future you describe is so fanciful, so far off. To which I say, first, human technological change over the last two hundred years dwarfs that of the previous two thousand, so maybe it’s not so far off, and second, this is what you invite when you discuss the teleological endpoint of human progress! You started the conversation! If you define your project as concerning the final evolution of human social systems, you necessarily include the far future and its immense possibilities. Resnikoff says, “the label ‘post-liberalism’ is something of an intellectual IOU” and offers similar complaints that no one’s yet defined what a post-liberal order would look like. But from the standpoint of history, this is a strange criticism. An 11th-century Andalusian shepherd had no conception of liberal democracy, and yet here we are in the 21st century, talking about liberal democracy as “the object of history”. How could his limited understanding of the future constrain the enormous breadth of human possibility? How could ours? To buy “the end of history”, you have to believe that we are now at a place where we can accurately predict the future where millennia of human thinkers could not. And it’s hard to see that as anything other than a kind of chauvinism, arrogance.

Fukuyama and “the end of history” are contingent products of a moment, blips in history, just like me. That’s all any of us gets to be, blips. The challenge is to have humility enough to recognize ourselves as blips. The alternative is acts of historical chauvinism like The End of History.