Looking at our peasant household, what we generally have are large families on small farms. The households on these farms were not generally nuclear households, but extended ones. Pre-Han Chinese documents assume a household to include three generations: two elderly parents, their son, his wife, and their four children (eight individuals total). Ptolemaic and Roman census data reveal a bewildering array of composite families, including multi-generational homes, but also households composed of multiple nuclear families of siblings (so a man, his wife, his brother, and then his brother’s wife and their children, for instance), and so on. Normal family units tended to be around eight individuals, but with wide variation (for comparison, the average household size in the United States for a family is 3.14).

At the same time that households were large (by modern standards), the farms they tilled were, by modern standards, very small. The normal size of a Roman household small farm is generally estimated at between 5 and 8 iugera (a Roman measurement of land, roughly 3 to 5 acres); in pre-Han Northern China (where wheat and millet, not rice, were the staple crops), the figure was “one hundred mu (4.764 acres)” – essentially the same. In Languedoc, a study of Saint-Thibery in 1460 showed 118 households (out of 189) on farms of less than 20 setérée (12 acres or so; the setérée appears to be an inexact unit of measurement); 96 of them were on less than 10 setérée (about 6 acres). So while there is a lot of variation, by and large it seems like the largest cluster of household farms tends to be around 3 to 8 acres or so; 5-acre farms are a good “average” small farm.

This coincidence of normal farm size and family size is not an accident, but essentially represents multi-generational family units occupying the smallest possible farms which could support them. The pressures that produce this result are not hard to grasp: families with multiple children and a farm large enough to split between them might do so, while families without enough land to split are likely to cluster around the farm they have. Pre-modern societies typically have only limited opportunities for wage labor (which are often lower status and worse in conditions than peasant farming!), so if the extended family unit can cluster on a single farm too small to split up, it will (with the exception of the occasional adventurous type who sets off for high-risk occupations like soldier or bandit).

Now, to be clear, that doesn’t mean the farm sizes are uniform, because they aren’t. There is tremendous variation and obviously the difference between a 10-acre small farm and a 5-acre small farm is half of the farm. Moreover, in most of the communities you will have significant gaps between the poor peasants (whose farms are often very small, even by these measures), the average peasant farmer, and “rich peasants” who might have a somewhat (but often not massively so) larger farm and access to more farming capital (particularly draft animals). […] Nevertheless, what I want to stress is that these fairly small – 3-8 acres or so – farms with an extended family unit on them make up the vast majority of farming households and most of the rural population, even if they do not control most of the land (for instance in that Languedoc village, more than half of the land was held by households with more than 20 setérée apiece, so a handful of those “rich peasants” with larger accumulations effectively dominated the village’s landholding […]).

This is our workforce and we’re going to spend this entire essay talking about them. Why? Because these folks – these farmers – make up the majority of the population of basically all agrarian societies in the pre-modern period. And when I say “the majority” I mean the vast majority, on the order of 80-90% in many cases.

Bret Devereaux, “Collections: Bread, How Did They Make It? Part I: Farmers!”, A collection of Unmitigated Pedantry, 2020-07-24.

April 2, 2022

QotD: The pre-modern farming household

March 30, 2022

March 17, 2022

Irish Stew From 1900 & The Irish Potato Famine

Tasting History with Max Miller

Published 16 Mar 2021

LINKS TO SOURCES

Tamales Video: https://www.youtube.com/watch?v=s2JyN…

Quesadilla Video: https://www.youtube.com/watch?v=NPxjQ…

The History of the Great Irish Famine of 1847 by John O’Rourke: https://amzn.to/3qCM8fD

Great Irish Potato Famine: https://amzn.to/3kZg58j

Some of the links and other products that appear on this video are from companies which Tasting History will earn an affiliate commission or referral bonus. Each purchase made from these links will help to support this channel with no additional cost to you. The content in this video is accurate as of the posting date. Some of the offers mentioned may no longer be available.

Subtitles: Jose Mendoza

PHOTO CREDITS

Saint Patrick Catholic Church: By Nheyob – Own work, CC BY-SA 4.0, https://commons.wikimedia.org/w/index…

Kindred Spirits: By Gavin Sheridan – Own work, CC BY-SA 4.0, https://commons.wikimedia.org/w/index…

MUSIC CREDITS

“Fiddles McGinty” by Kevin MacLeod is licensed under a Creative Commons Attribution 4.0 license. https://creativecommons.org/licenses/…

Source: http://incompetech.com/music/royalty-…

Artist: http://incompetech.com/

“Achaidh Cheide – Celtic” by Kevin MacLeod is licensed under a Creative Commons Attribution 4.0 license. https://creativecommons.org/licenses/…

Source: http://incompetech.com/music/royalty-…

Artist: http://incompetech.com/

January 16, 2022

QotD: Once “discovered”, colonization of the Americas was inevitable

Another major structural issue is this: what precisely would our pious anthropology professors have had Europeans do with the New World once they found it?

This is not a joke. Political reality has a way of crashing in on the pipe dreams of liberal academics. The reality is, if the English had not colonised, then the French or the Dutch would have. If the Spanish had not colonised, the Portuguese would have. This would have shifted the balance of power at home, and any European country which had not colonised would have been relegated to secondary status. And it is easy to overestimate the amount of control that European governments actually had. As soon as the New World was discovered, many fishermen and traders sailed across the Atlantic on their own, in hopes of circumventing tax authorities and scoring a fortune. Long before colonies were established in most regions, the New World was crawling with Europeans whose superior technology gave them an edge in combat. Nonetheless, it was extremely dangerous for Europeans to provoke fights with Native Americans, and most of them tried to avoid this when possible. In retrospect, one could in theory be impressed that so many European governments showed a genuine concern to rein in the worst excesses of their subjects, with an express eye to protecting the Indians from depredation. The logic was simple: they attempted to protect their subjects at home, in order to secure good order and a better tax base. So they would do the same to their subjects in the New World. For a long time, few Europeans harboured any master plan of pushing the Native Americans out of their own lands. In more densely populated regions such as Mexico, such an idea must have seemed an absurdity. Reality tends to occur ad hoc. Boundaries often took generations to move, and would have seemed fixed at the time. For several centuries, many Europeans assumed that they would long be a minority on the North American continent. In Mexico and Peru, they always have been.

Population density mattered, a lot, when it came to pre-modern global migrations. China and India were “safe” from excessive European colonisation because they had the densest populations in the world, and they were likewise largely immune to any diseases brought by Europeans. Sub-Saharan Africa had a lower population density depleted by slave raiding, but its peoples still outnumbered European colonists by a large margin throughout the colonial era, again because European contact did not decimate their numbers through disease the way it did in the Americas. It is worth noting that no one claims that Europeans committed genocide in India, Asia or even Africa, although their technological advantages gave them every opportunity had they actually been of a genocidal mindset (as, for example, the Mongols were). In fact, the European track record shows them to be almost shockingly un-genocidal, given their clear technological advantages over the rest of the world for a period of several centuries. Few other civilisations, given similar power over so much of the world’s people, would have behaved in a less reprehensible manner. This is not to give Europeans a pat on the back. Rather it is to point out that Europeans are regularly painted as the very worst society on Earth, when in fact they had the power to do far, far more evil than they actually did. Let us at least acknowledge this fact.

Jeff Fynn-Paul, “The myth of the ‘stolen century’”, Spectator Magazine, 2020-09-26.

October 27, 2021

August 5, 2021

QotD: September 1939 was pretty much the optimal moment for Germany to go to war

The German economy was already in poor condition, and it was the looting of Austrian gold and Czech armaments that gave it a temporary boost in what was effectively still peacetime. (The later looting of the Polish and French economies never made up for the costs of a full world war being in progress.)

Demographically, German military manpower was at a peak in 1940/41, giving Germany an advantage over the allies (and potentially the Russians) that would quickly evaporate within a few years. (Demographics was an important science between the wars, and many leaders – like Hitler and Stalin – made frequent references to it. The Russians in particular would start having more manpower available starting in 1942 … perhaps not a coincidence that Germany invaded in 1941?)

The Nazi air forces had a temporary superiority over the Allies in 1939 that was already being rapidly undercut as both the British and the French finally started mass production of newer aircraft. (By mid-1940 British aircraft production had overtaken Germany’s, even without the French. If the war had not started in 1939, by 1941 the Luftwaffe would have been numerically quite inferior to the combined British and French air forces, even without the surprisingly effective new fighters being brought on line by the Dutch and others.)

German ground forces, while not really ready for war in September 1939 (half of their divisions were still pretty much immobile, and they had only 120,000 vehicles all up compared to 300,000 for the French army alone), were nonetheless at a peak of efficiency, considering that the Czechs and Poles had been knocked out and the British and French were struggling to get new equipment into service. The Soviet short-term decision to ally with the Germans to carve up Eastern Europe (Stalin knew this was only a temporary delay to inevitable conflict) also allowed the Germans an easy victory and much greater freedom of action. Again, by 1941 British conscription and production, and French (and Belgian, and Dutch, etc.) upgrades and increases in fortifications, would have come a lot closer to making the German task next to impossible. (Even then, what really defeated France was not vastly inferior divisions or equipment, but the collapse of French morale after the losses of Finland, which brought down the French government, and Norway.)

A byproduct of an Allied ramp-up might also have seen Belgium rejoin the allied camp in 1941, or at least make significant planning preparations to properly add its 22 divisions and strong border fortifications to allied defences if Germany attacked. (Rather than the hopeless mess that happened in 1940 when the allies rushed to rescue the temporary non-ally that had undermined the whole interwar defensive project …) Again, the Germans managed to find a sweet spot in 1939-40 that temporarily undermined long-standing interwar co-operation, and one that was not likely to last very long.

Similarly, a delay in the war would have allowed allied negotiations with the Balkan states to advance. The same guarantee that was given to Poland had been given to Yugoslavia, Rumania and Greece. (It is usually forgotten that Greece – attacked by Italy – and Yugoslavia – voluntarily – joined the British side at the worst possible moment in 1941, only to be crushed by the Germans … but with the interesting by-product of effectively undermining Germany’s chances of defeating the Soviets and occupying Moscow in the same year.)

Nigel Davies, “If the War hadn’t started until December 1941, would it?”, rethinking history, 2021-05-01.

July 2, 2021

Britain’s “agricultural revolution”

In the latest Age of Invention newsletter, Anton Howes wonders about the almost-forgotten revolution that pre-dated the much better known Industrial Revolution:

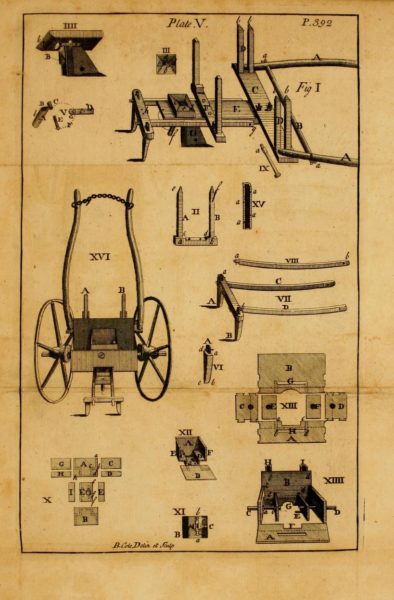

Illustration of a seed drill from Horse-hoeing husbandry, 4th edition by Jethro Tull, 1762 (original work 1731).

Wikimedia Commons.

Whatever happened to “the Agricultural Revolution” of seventeenth and eighteenth-century Britain? In recent years I’ve hardly seen the term used at all, and the last major book on the subject was seemingly published twenty-five years ago. It has become almost totally eclipsed by its more famous sibling “the Industrial Revolution”, with its vivid associations of cotton, coal, and exponential hockey-stick graphs.

Yet for all that popularity, nearly every book investigating the causes of modern economic growth complains about the use of The Industrial Revolution. Even one of the pioneers of economic history, T. S. Ashton, who actually wrote the book The Industrial Revolution, complained on the very second page about the term’s inaccuracy. Much like “Holy Roman Empire”, there’s an error in every word. It involved too many series of changes to really be a The, was about so much more than just industry, and was too gradual a process to properly call a revolution. Yet Ashton had to concede that the term had “become so firmly embedded in common speech that it would be pedantic to offer a substitute.” And this was in 1948. In the intervening three quarters of a century, the term has become all the more difficult to dislodge.

I am, like everyone else, guilty of perpetuating the term Industrial Revolution. It’s a useful shorthand for people to at least get a rough idea of what I’m talking about, for me to then refine. Best to start with what people know, or at least what they think they know, and go from there. You may think of the Industrial Revolution as being about cotton, coal, and steam, but the period also saw major developments in every other industry, from agriculture to watch-making, and everything in between. And so on. My preferred terms, like “acceleration of innovation”, always require at least a paragraph or two of explanation first.

With the term Agricultural Revolution, however, there’s just no need to reference it. Nobody really talks about it, or has anything more than a very vague conception of what it may mean. At best, people recall a few things from decades-old textbooks: names like “Turnip” Townshend or Jethro Tull, and perhaps a smattering of jargon like selective breeding, crop rotation, or enclosures. Even these are widely misunderstood. See last week’s post, for patrons, on how we get almost everything about the enclosure movement wrong. As for the Agricultural Revolution’s timing, who knows? When, over the course of the sixteenth, seventeenth, eighteenth, and maybe even nineteenth centuries is it supposed to have occurred? With the Industrial Revolution, there’s at least a “classic” period of 1760-1830, with a few decades of leeway. That is of course up for debate, and I’m especially keen on pushing it back much earlier, but it’s at least a half-decent starting point. With the Agricultural Revolution, there’s just no baseline at all. The experts themselves can’t agree.

For all that the term Agricultural Revolution has lost its salience, however, early modern changes to the productivity of agriculture were perhaps the most important of all. The ability to support a much larger population is itself a major economic achievement. For all that we obsess over historical measures of GDP per person, we often forget the much earlier and extraordinary increase in just the sheer number of people. In the early seventeenth century England’s population not only recovered to its pre-Black Death peak of about 5 million, but then from 1700 onwards it began to exceed it. By 1800, after just another century, the population of Britain had doubled to 10 million. And this in a period throughout which the country was a net exporter of grain.

April 17, 2021

Considering the costs and benefits of extreme specialization

In the latest post on Matt Gurney’s Code 47 Substack blog, he considers the trade-off between population density and the range of specialization that can be supported at various densities:

“Model A Ford in front of Gilmore’s historic Shell gas station” by Corvair Owner is licensed under CC BY-SA 2.0

I wish I remembered where I read this. It was a book I was blowing through for a university paper; only one chapter was really of interest to me when I was trawling for footnotes but I stumbled upon an interesting section that talked about services and specialization in a modern economy. The author offered a simple explanation of service specialization that I’ve never forgotten. Imagine a village with 100 people, the author said. Now imagine what services are available there. There’s probably a gas station, and maybe you can get a few services done to your car there, too. Basic repairs. Tire rotations. Oil changes. Things like that. There’s probably also a convenience store, and the store might also have a place to send or receive mail, or maybe even to rent a movie. (Back when that was a thing we did.) You might have a coffee shop of some kind, maybe a diner. But that’s marginal. You almost certainly don’t have a school, full post office, bank branch or medical centre of any kind. Not in a village of 100 people.

When you itemize out all the services you can get, it’s probably about five or maybe 10 — gas pumped, tires changed, oil changed, basic engine repairs, store clerk, movie rental, mail sent and received. Maybe someone to pour you a cup of coffee and get you a sandwich — but only maybe. The point isn’t to be precise in our list or count, but just to contemplate the relationship between the population and the number and type of available services.

Now scale that village up tenfold, the author said. Now it’s a town of 1,000. The number of services explodes. You still have everything you did before. But now you’ve also got specialized shops, restaurants, a bank or two (and all the services they provide), probably a house of worship, medical services of various kinds (including eye care, dentistry, etc), personal-care services, better access to home and lawn care, various repair and maintenance services, technical services, a post office … the list goes on. You also start to see competition and the efficiency that brings: our village of 100 would have a gas station and a convenience store (quite possibly at the same location!). But our town of 1,000 would have a few of each. You’d get more services, and start to see prices dropping for the commonly available offerings.

You get the idea — the more you scale up a population, the more specialized services that are available and the more accessible they become. And this includes not just categories of service, but also increasing degrees of specialization. Our village of 100 probably has no full-time doctor. Our town of 1,000 probably has a family physician. But after we bump things up to 10,000, 100,000 and then a million people, we’re getting not just doctors, but highly trained, specialized physicians, surgeons and diagnosticians. Our town of 1,000 has a dentist, but our city of a million has dental surgeons who’ve specialized in repairing specific kinds of trauma and injury.

Anyway. I don’t remember what book this was from. But I do remember this short section. I think about it a lot. We Canadians of 2021 are, for the most part, hyper-specialized. I’ve written columns about this before, including this one from 2019, which I’m going to quote liberally below:

Human history is, in one simplified viewing, the story of specialization. As our technology advanced, a smaller and smaller share of the labour pool was required just to keep everyone alive. Perhaps the easiest way to summarize this is to note that 150 years ago, even in the most advanced industrial countries, something close to 50 per cent of the population was directly engaged in agriculture — half the people tilled fields so the other half could eat. Today, in both Canada and the United States, it’s closer to two per cent — one person’s efforts feed 49 others. Those 49 can pursue any of the thousands of specialized jobs that allow our technological civilization to exist. … Those 49 people are our artists and doctors and scientists and teachers. Human advancement depends on this — a civilization that’s scrambling to feed itself doesn’t build particle colliders or invent new neonatal surgeries and cancer-stopping wonderdrugs.

I stand by those remarks. But I’ve been pondering them of late with a different perspective. I’ve spent much of this week talking with doctors and medical experts in Ontario, where the third wave of COVID-19 is threatening to overwhelm the health-care system, with tragic results. And one recurring theme that comes up in these conversations is how this disaster is going to take place almost entirely out of public view. There won’t be any general mobilizations or widespread damage. People are going to die, behind closed doors or tent flaps, and other people will be forever scarred by their inability to save those people. But for most of us — those who aren’t sick, or highly specialized medical professionals — life is going to be something reasonably close to normal.

February 25, 2021

Malthusian cheerleaders

Barry Brownstein looks at some of the claims from Malthus onward about the imminent demise of humanity due to overpopulation and how that same concern keeps popping up again and again:

… James Lovelock advanced the Gaia hypothesis that Earth is one “self-regulating organism.” Lovelock forecasts the population of the Earth will fall to one billion from its current total of over seven billion people. Given Lovelock’s cheerfulness about such carnage, it is easy to see why Alan Hall, a senior analyst at The Socionomist, wonders whether “today’s drives to limit consumption and population” are ideologically related to the eugenics movement from the past century. In his essay “A Socionomic Study of Eugenics,” Hall writes in The Socionomist:

Circa 1900, influential intellectuals in Europe and the U.S. voiced concerns about uncontrolled procreation causing a supposed decline in the quality of human beings. Today, similar groups voice concerns about uncontrolled population growth and resource consumption causing a decline in the quality of the environment … Today’s green advocates brandish images of an overrun, dying planet.

Today, the Bill and Melinda Gates Foundation is working to aid the lives of children living “in extreme poverty.” In his book, Factfulness, the late professor of international health Hans Rosling reports on critics of the Gates Foundation who reject such efforts. “The argument goes like this,” Rosling writes. “If you keep saving poor children, you’ll kill the planet by causing overpopulation.”

In the face of advocates for such beliefs, no wonder Hall asks us to reflect on whether we “will make the cut” if those seeking to cull humanity are successful.

Malthusian Doom

We’ve all heard the SparkNotes version of Malthusian predictions of doom caused by overpopulation. Malthus thought food production could not keep pace with population growth. In his 1798 “Essay on the Principle of Population,” Malthus anticipated the suffering that awaited humanity.

The power of population is so superior to the power in the earth to produce subsistence for man, that premature death must in some shape or other visit the human race. The vices of mankind are active and able ministers of depopulation. They are the precursors in the great army of destruction; and often finish the dreadful work themselves. But should they fail in this war of extermination, sickly seasons, epidemics, pestilence, and plague, advance in terrific array, and sweep off their thousands and ten thousands. Should success be still incomplete, gigantic inevitable famine stalks in the rear, and with one mighty blow levels the population with the food of the world.

Unlike Ehrlich and others, Malthus had reason to be a pessimist in his lifetime. If Malthus had been writing history or predicting the near future, he would not have been far from the mark.

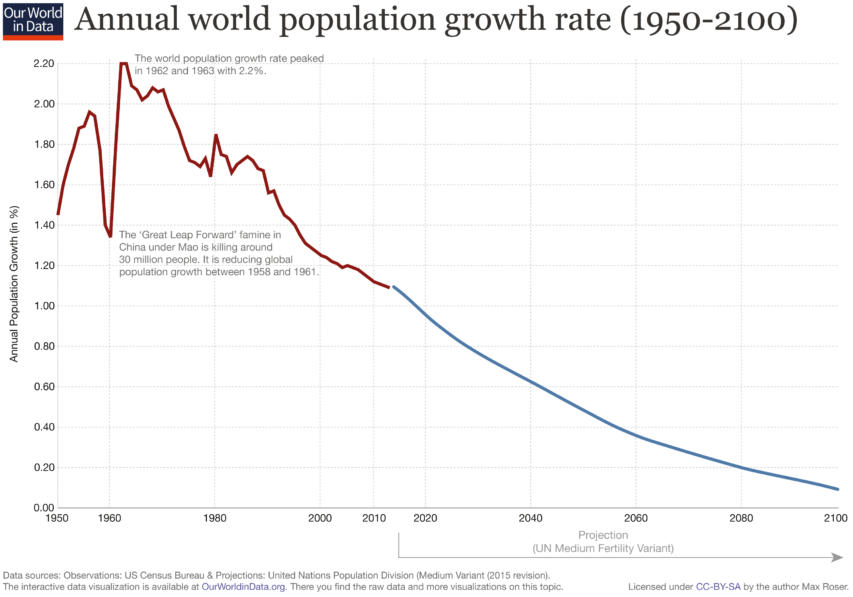

Many of the predictions of overpopulation were based on estimates of population growth (especially in sub-Saharan Africa) that were far from accurate. And in every case we know of, rising economic well-being slows population growth: as a country begins to get richer, its population growth slows significantly (most first-world nations are already at or below population-replacement birthrates).

In their book, Empty Planet: The Shock of Global Population Decline, Darrell Bricker and John Ibbitson have startling facts for those who believe the population will continue to explode.

No, we are not going to keep adding bodies until the world is groaning at the weight of eleven billion of us and more; nine billion is probably closer to the truth, before the population starts to decline. No, fertility rates are not astronomically high in developing countries; many of them are at or below replacement rate. No, Africa is not a chronically impoverished continent doomed to forever grow its population while lacking the resources to sustain it; the continent is dynamic, its economies are in flux, and birth rates are falling rapidly. No, African Americans and Latino Americans are not overwhelming white America with their higher fertility rates. The fertility rates of all three groups have essentially converged.

Looking at current trends and expecting them to continue is what Hans Rosling calls “the straight line instinct.” That instinct often leads to false conclusions.

February 15, 2021

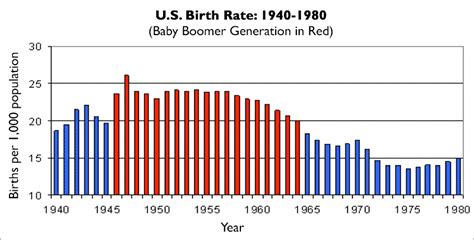

QotD: Tail-end boomers aren’t really Baby Boomers at all

I was born in late ’62. I never considered myself a boomer. And before you scream that boomers go to ’64, let me explain: I swear to you they didn’t use to. My brother, born in early ’54 was considered one of the youngest boomers. And if you look at the ethos of the generation and what formed it, and how its public image was created and also when they came of age, you’ll understand that makes a ton more sense.

The boomers were the baby boom after WWII. By the time I hit school, the classrooms were half empty, the trailers that they’d added the decade before were being used for craft classes or gym or something that required tons of space.

It would take a long time to come home if you were still being born in ’62. (And I’d been due in ’63.)

This is important simply because I want to make it clear when I came of age it wasn’t with the boomer ethos of “each generation is going to be bigger than the last and we’re going to remake the world in our image.” That expectation is still obvious in books of the fifties and sixties, as well as the attached Malthusian panic.

The boomers, like now the millennials, are a much maligned generation. The public image is almost not at all that of the people in the generation I actually know, with a very few exceptions.

The people the media chose to highlight were the ones they wanted the boomers to be, not who they were.

But something about the boomers is true — ironically the reason that caused them to hate my generation before they decided to aggregate us, because it gave them more power to still be considered young and marketable-to — and that is that they were raised in the expectation they would make the world a better place, and that they could because of sheer numbers, and because they’d been brought up to be better than their parents.

Sarah Hoyt, “Business From The Wrong End”, According to Hoyt, 2018-09-27.

January 22, 2021

QotD: The enclosure movement, in historical fact and in Marxist imagining

Consider, for example, that the tenfold increase [in the population of London] was in the period before the expropriative parliamentary enclosures of Marxist legend, when state fiat was used to deny smaller farmers their ancient, customary rights to use the land near their villages. While the very first of these enclosure acts appeared as early as 1604, parliamentary enclosure only really got going from the mid-eighteenth century. Instead, for the period in question, enclosure happened in a piecemeal way, with the open fields gradually dissolving as farmers exchanged or sold their tiny strips of land, over time amalgamating them into larger, privately controlled plots. With ownership concentrated in fewer and fewer hands, it became relatively easy to gain the unanimity needed to suspend common rights. The process played out in myriad ways all over the country, sometimes with amicable agreement and voluntary exchange, sometimes with ruthless monopolising of the land, with the already-large owners systematically buying out their neighbours. In some cases it involved the consolidation of existing arable land, in others it meant the conversion of forest, heath, marsh, or fen — the traditional “wastes”, to which the poorer villagers might have had various customary rights to gather firewood for fuel, or to graze their cattle, or to hunt for small game — into land that could be used for farming or pasture.

How this process played out all depended on extremely specific, local conditions. But on the whole it was slow — piecemeal enclosure had been happening to varying degrees since at least the fourteenth century. It’s hard to see how such a sporadic and piecemeal process could have led to such consistently and increasingly massive numbers flocking specifically to London. Indeed, the fact that they singled out London as their target suggests that this narrative might have it back-to-front. Some economic historians argue that it was the prospect of higher wages in the ever-growing metropolis that induced farmers to leave the countryside in the first place, selling up or abandoning their plots to those they left behind. Rather than enclosure pushing peasants off the land and into the city, London’s specific pull may instead have thus created the vacuum that allowed the remaining farmers to bring about enclosure. Otherwise, why didn’t the peasants simply flock to any old urban centre? The second-tier cities like Norwich or Bristol or Exeter or Coventry or York would all have been far less dangerous.

Anton Howes, “London the Great”, Age of Invention, 2020-10-20.

January 10, 2021

QotD: Sexual equality and the risk of demographic collapse

I like living in a society where women are, generally speaking, as free to choose their own path in life as I am. I like strong women, women who are confident and look me in the eye and see themselves as my equals. But I wonder, sometimes, if sexual equality isn’t doomed by biology. The relevant facts are (a) men and women have different optimal reproductive strategies because of the asymmetry in energy investment – being pregnant and giving birth is a lot more costly and risky than ejaculating, and (b) a woman’s fertile period is a relatively short portion of her lifetime. Following the logic out, it may be that the consequence of sexual equality is demographic collapse — nasty cultures which treat women like brood mares are the future simply because the nice cultures that don’t do that stop breeding at replacement rates.

Eric S. Raymond, “Fearing what might be true”, Armed and Dangerous, 2009-10-23.

November 9, 2020

QotD: The Children of Men becomes disturbingly real in Japan

To western eyes, contemporary Japan has a kind of earnest childlike wackiness, all karaoke machines and manga cartoons and nuttily sadistic game shows. But, to us demography bores, it’s a sad place that seems to be turning into a theme park of P.D. James’ great dystopian novel The Children Of Men. Baroness James’ tale is set in Britain in the near future, in a world that is infertile: The last newborn babe emerged from the womb in 1995, and since then nothing. It was an unusual subject for the queen of the police procedural, and, indeed, she is the first baroness to write a book about barrenness. The Hollywood director Alfonso Cuarón took the broad theme and made a rather ordinary little film out of it. But the Japanese seem determined to live up to the book’s every telling detail.

In Lady James’ speculative fiction, pets are doted on as child-substitutes, and churches hold christening ceremonies for cats. In contemporary Japanese reality, Tokyo has some 40 “cat cafés” where lonely solitary citizens can while away an afternoon by renting a feline to touch and pet for a couple of companiable hours.

In Lady James’ speculative fiction, all the unneeded toys are burned, except for the dolls, which childless women seize on as the nearest thing to a baby and wheel through the streets. In contemporary Japanese reality, toy makers, their children’s market dwindling, have instead developed dolls for seniors to be the grandchildren they’ll never have: You can dress them up, and put them in a baby carriage, and the computer chip in the back has several dozen phrases of the kind a real grandchild might use to enable them to engage in rudimentary social pleasantries.

P.D. James’ most audacious fancy is that in a barren land sex itself becomes a bit of a chore. The authorities frantically sponsor state porn emporia promoting ever more recherché forms of erotic activity in an effort to reverse the populace’s flagging sexual desire just in case man’s seed should recover its potency. Alas, to no avail. As Lady James writes, “Women complain increasingly of what they describe as painful orgasms: the spasm achieved but not the pleasure. Pages are devoted to this common phenomenon in the women’s magazines.”

As I said, a bold conceit, at least to those who believe that shorn of all those boring procreation hang-ups we can finally be free to indulge our sexual appetites to the full. But it seems the Japanese have embraced the no-sex-please-we’re-dystopian-Brits plot angle, too. In October, Abigail Haworth of The Observer in London filed a story headlined “Why Have Young People in Japan Stopped Having Sex?” Not all young people but a whopping percentage: A survey by the Japan Family Planning Association reported that over a quarter of men aged 16–24 “were not interested in or despised sexual contact.” For women, it was 45 per cent.

Mark Steyn, The [Un]documented Mark Steyn, 2014.

October 22, 2020

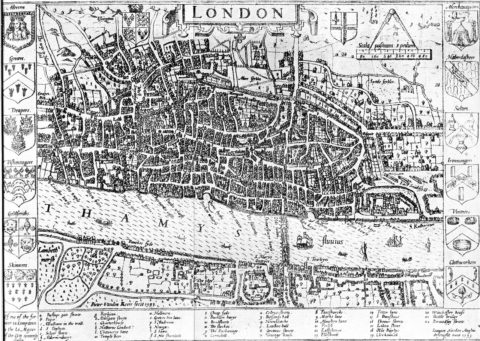

When England “Londonized”

In the latest Age of Invention newsletter, Anton Howes looks at changes in urbanization in England from the Middle Ages onward and the astonishing growth of London in particular:

John Norden’s map of London in 1593. There is only one bridge across the Thames, but parts of Southwark on the south bank of the river have been developed.

Wikimedia Commons.

We must thus imagine pre-modern England as a land of tens of thousands of teeny tiny villages, each having no more than a couple of hundred people, which were in turn served by hundreds of slightly larger market towns of no more than a few hundred inhabitants, and with only a handful of regional centres of more than a few thousand people. By the 1550s, the country’s population had still not recovered to its pre-Black Death peak, and still only about 4% of the population lived in cities. London alone accounted for about half of that, with approximately 50-70,000 people (about five times the size of its closest rival, Norwich). So after a couple of centuries of recovery, London was only a little past its medieval peak.

But over the following century and a half, things began to change. At first glance, England’s continued population growth was unremarkable. By 1700, its overall population had finally reached and even surpassed the medieval 5 million barrier, despite the ravages of civil war. This was, perhaps, to be expected, with a little additional agricultural productivity allowing it to surpass the previous record. But the composition of that population had changed radically, largely thanks to the extraordinary growth of London. England’s overall population had not only recovered, but now 16% of them lived in cities of over 5,000 inhabitants — over two thirds of whom lived in London alone. Rather than simply urbanise, England londonised. By 1700, the city was nineteen times the size of second-place Norwich — even though Norwich’s population had more or less tripled.

London had, by 1700, thus risen from obscurity to become one of the largest cities in Europe. At an estimated 575,000 people, it was rivalled in Europe only by Paris and Constantinople, both of which had been massive for centuries. And although by modern standards it was still rather small, it could at least now be comfortably called a city — more or less on par with the populations of modern-day Glasgow or Baltimore or Milwaukee.

During that crucial century and a half then, London almost single-handedly began to urbanise the country. Its eighteenth-century growth was to consolidate its international position, such that by 1800 the city was approaching a million inhabitants, and from the 1820s through to the 1910s was the largest city in the world. In the mid-nineteenth century England also finally overtook Holland in terms of urbanisation rates, as various other cities also came into their own. But this was all just the continuation of the trend. London’s growth from 1550 to 1700 is the phenomenon that I think needs explaining — an achievement made all the more impressive considering how many of its inhabitants were dropping dead.

Throughout that period, urban death rates were so high that it required waves upon waves of newcomers from the countryside to simply keep the population level, let alone increase it. London was ridden with disease, crime, and filth. Not to mention the occasional mass death event. The city lost over 30,000 souls — almost a fifth of its population — in the plague of 1603 (which was apparently exacerbated by many thousands of people failing to social distance for the coronation of James I), followed by the loss of a fifth again — 41,000 deaths — in the plague of 1625, and another 100,000 deaths — by now almost a quarter of the city’s population — in 1665. And yet, between 1550 and 1700 its population still managed to increase roughly tenfold.

I’ve been hard-pressed to find an earlier, similarly rapid rise to the half-a-million mark that was not just a recovery to a pre-disaster population or simply the result of an empire’s seat of government being moved. Chang’an, Constantinople, Ctesiphon, Agra, Edo, for example — all owed their initial, massive populations to an administrative change (often accompanied by a degree of forcible relocation), and all then grew fairly gradually up to or beyond half a million. As for a very long-term capital like Rome, it seems to have taken about three or four centuries to achieve the increases that London managed in just one and a half (though bear in mind just how rough and ready our estimates of ancient city populations are — our growth guesstimate for Rome is almost entirely based on the fact that the water supply system roughly doubled every century before its supposed peak). The rapidity of London’s rise from obscurity may thus have been unprecedented in human history — and was certainly up there with the fastest growers — though we’ll likely never know for sure.

But how? I can think of a multitude of factors that may have helped it along, but I find that each of them — even when considered altogether — aren’t quite satisfactory.

September 23, 2020

QotD: Don’t blame the Boomers for the “Summer of Love” … most of ’em were too young to participate

I’ve written a lot here about how the most dangerous types in peacetime are the ones who juuuuust missed participating in some vast social upheaval. The Nazis are an obvious example. The Nazi-est Nazis of them all — Himmler, Heydrich, Eichmann, etc. — were old enough to have seen and understood the great national cataclysm that was World War I, but weren’t quite old enough to participate in it directly. Thus, when their turn came, they had to go double-or-nothing to prove to their older kin and classmates that they had what it takes. In America, guys like Teddy Roosevelt don’t make much sense until you realize that they grew up hearing their fathers and older brothers reminisce about the Civil War. And so on.

Now, I’m all for bashing the goddamn Boomers, but let’s be fair (since it matters for historical analysis). There’s a common misconception about the Baby Boom. Here, see if you can spot it:

Did you see it? Look closer, and you’ll see that while 1947(-ish) appears to be the peak year in terms of total births, the vast majority of what we call “Boomers” were born after 1950. Let’s do some simple math. The very oldest Boomers were born in 1946. The Summer of Love was 1967. Even if we assume the Summer of Love came out of nowhere — which is impossible, of course, any movement that large had antecedents going back years, probably decades, but let’s assume — that means that any “Boomers” participating were, at most, barely 22 years old. They were just barely 24 when Woodstock came around. Granted that the youngest are the dumbest, and thus can have outsize influence, they still can’t have been largely, let alone solely, responsible for the idiocy of the hippies.

That’s all on the older crowd, the so-called “Silent Generation” — the ones who were old enough to be aware of World War II, but unable to participate directly.

It’s easy to verify. The Port Huron Statement, the founding document of the New Left, was penned by coddled college kids in 1962 — meaning, by kids born, at latest, in about 1942 (its principal author, Tom Hayden, was born in 1939). Here are the Chicago Seven and their dates of birth: Abbie Hoffman (1936), Jerry Rubin (1938), David Dellinger (1915!), Hayden, Rennie Davis (1941), John Froines (1939), and Lee Weiner (1939).

Hoffman, especially, bears scrutiny. Though he’s best remembered as a Yippie — that is, the founder of an ostentatiously youth-oriented movement — he was 31 at its founding. Don’t trust anyone over thirty, right?

1936 to 1946 is only a decade, but it’s crucial. A kid born in 1936 would have vivid memories of World War II and its immediate aftermath — fathers, uncles, and older brothers (and, in more than a few cases, aunts and older sisters) coming home from the service. A kid born in 1946 would have a completely different experience — ask any combat veteran about the first year or two back in the world, versus being home for a decade. Those guys — the kids who saw firsthand the angry young strangers they were supposed to call “Dad” — were the ones who did the real damage in The Sixties(TM), just as it was the almost-but-not-quite frontsoldaten who did the real damage in the Third Reich.

With me? Now hang on to your hats, because here’s where it gets pretty meta: It was the “Silent Generation,” not the Boomers, who did the real damage in The Sixties(TM). That is, the guys who juuuust missed the giant social upheaval that was World War II. The Boomers have done all the damage since The Sixties(TM).

That — The Sixties(TM), which is why I’m using that obnoxious (TM) — is the great social upheaval they juuuust missed. [These people] aren’t old fossils from the flower power years, though many of those fossils are still alive and kicking (including four of the Chicago Seven: Hayden, Davis, Froines, and Weiner). Has anyone heard from Billy Ayers lately? How about Noam Chomsky (born 1928)? I’m sure they have plenty to say … but nobody cares.

It’s not retreads from The Sixties(TM) out there doing this stuff. It’s the people who wish they’d been around for the Summer of Love that are doing it. It’s the people who just know they would’ve ended the Vietnam War, if only they hadn’t been in junior high at the time. This is their Woodstock, not least because they only heard about the original when they arrived for freshman orientation in 1976.

Severian, “Talkin’ ’bout My Generation!”, Rotten Chestnuts, 2020-06-11.