The problem with all “models of the world”, as the video puts it, is that they ignore two vitally important factors. First, models can only go so deep in terms of the scale of analysis to attempt. You can always add layers — and it is never clear when a layer that is completely unseen at one scale becomes vitally important at another. Predicting higher-order effects from lower scales is often impossible, and it is rarely clear when one can be discarded for another.

Second, the video ignores the fact that human behavior changes in response to circumstance, sometimes in radically unpredictable ways. I might predict that we will hit peak oil (or be extremely wealthy) if I extrapolate various trends. However, as oil becomes scarce, people discover new ways to obtain it or do without it. As people become wealthier, they become less interested in the pursuit of wealth and therefore become poorer. Both of those scenarios, however, assume that humanity will adopt a moral and optimistic stance. If humans become decadent and pessimistic, they might just start wars and end up feeding off the scraps.

So, interestingly, what the future looks like might be as much a function of the music we listen to, the books we read, and the movies we watch when we are young as of the resources that are available.

Note that the solution they propose to our problems is internationalization. The problem with internationalizing everything is that people have no one to appeal to. We are governed by a number of international laws, but when was the last time you voted in an international election? How do you effect change when international policies are not working out correctly? Who do you appeal to?

The importance of nationalism is that there are well-known and generally-accepted procedures for addressing grievances with the ruling class. These international clubs are generally impervious to the appeals (and common sense) of ordinary people and tend to promote virtue-signaling among the wealthy class over actual virtue or solutions to problems.

Jonathan Bartlett, quoted in “1973 Computer Program: The World Will End In 2040”, Mind Matters News, 2019-05-31.

December 9, 2022

QotD: Computer models of “the future”

October 8, 2022

Faint glimmers of hope for Canadians’ “right to repair”?

Michael Geist on the state of play in modifying Canada’s digital lock rules to allow consumers a tiny bit more flexibility in how they can get their electronic devices repaired:

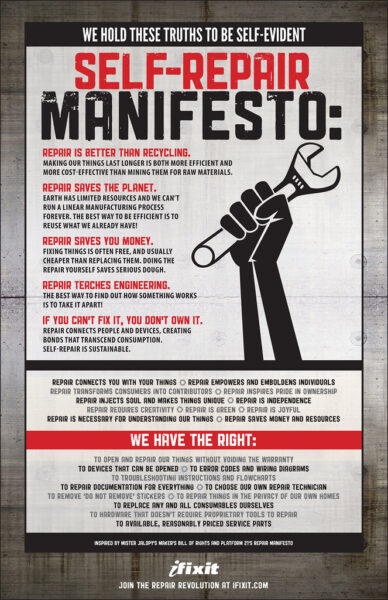

“The Self-Repair Manifesto from ifixit.com ‘If you can’t fix it, you don’t own it’. Hear, hear!” by dullhunk is licensed under CC BY 2.0.

Canadian anti-circumvention laws (also known as digital lock rules) are among the strictest in the world, creating unnecessary barriers to innovation and consumer rights. The rules are required under the World Intellectual Property Organization’s Internet Treaties, but those treaties leave considerable flexibility in how they should be implemented. This is reflected in the countless examples around the world of countries adopting flexible anti-circumvention rules that seek to maintain the copyright balance. Canada was pressured into following the restrictive U.S. approach in 2012, establishing a framework that is not only more restrictive than required under the WIPO treaties, but even more restrictive than the U.S. system.

One of the biggest differences between Canada and the U.S. is that the U.S. conducts a review every three years to determine whether new exceptions to a general prohibition on circumventing digital locks are needed. This has led to the adoption of several exceptions to TPMs for innovative activities such as automotive security research, repairs and maintenance, archiving and preserving video games, and for remixing from DVDs and Blu-Ray sources. Canada has no such system as the government instead provided assurances that it could address new exceptions through a regulation-making power. In the decade since the law has been in effect, successive Canadian governments have never done so. This is particularly problematic where the rules restrict basic property rights by limiting the ability to repair products or ensure full interoperability between systems.

The best policy would be to clarify that the anti-circumvention rules do not apply to non-infringing uses. This would enable the anti-circumvention rules to work alongside the user rights in the Copyright Act (also known as limitations and exceptions) without restricting their lawful exercise. This approach was endorsed by the 2019 Canadian copyright review, which unanimously concluded:

it agrees that the circumvention of TPMs should be allowed for non-infringing purposes, especially given the fact that the Nintendo case provided such a broad interpretation of TPMs. In other words, while anti-circumvention rules should support the use of TPMs to enable the remuneration of rights-holders and prevent copyright infringement, they should generally not prevent someone from committing an act otherwise authorized under the Act.

The government has not acted on this recommendation, but two private members’ bills are working their way through the House of Commons that provide some hope of change. First, Bill C-244 on the right of repair. Introduced by Liberal MP Wilson Miao in February, the bill this week passed second reading unanimously and has been referred to the Industry committee for further study. The lack of a right of repair exception in Canadian digital lock rules has hindered both consumers and Canadian innovation significantly, leaving consumers unable to repair their electronic devices and farmers often locked out of their farm equipment. After farmers protested against similar copyright restrictions, the U.S. established specific exceptions permitting digital locks to be circumvented to allow repair of software-enabled devices.

Given the impact on consumers, the agricultural sector, and the environment, a provision that explicitly permits circumvention for purposes of the right of repair in Canada is long overdue. Indeed, such an approach is consistent with the 2019 copyright review recommendation:

Recommendation 19

That the Government of Canada examine measures to modernize copyright policy with digital technologies affecting Canadians and Canadian institutions, including the relevance of technological protection measures within copyright law, notably to facilitate the maintenance, repair or adaptation of a lawfully-acquired device for non-infringing purposes.

August 12, 2022

Apple, afterwards

In Quillette, Jonathan Kay looks at Apple after the death of Steve Jobs:

In 2004, Apple co-founder Steve Jobs asked famed author Walter Isaacson to write his biography. It’s a mark of Jobs’s hallowed place in the pantheon of American corporate titans that Isaacson, whose other subjects included Henry Kissinger, Benjamin Franklin, and Albert Einstein, would eventually say yes. While best-selling books about successful business leaders represent a popular niche, most specimens are fawning airport reads that combine hagiography with self-help advice for aspiring entrepreneurs. Isaacson’s Steve Jobs (2011), by contrast, was a serious work of literary non-fiction that exalted its subject as a once-in-a-generation technological savant, while also showing him to be a callous parent and scathing boss, not to mention a proponent of loopy “fruitarian” medical theories. (Much has been made of Jobs’s use of fringe therapies to treat the pancreatic cancer that killed him in 2011, but he also entertained the bizarre belief that his vegan diet allowed him to avoid bathing for days on end without developing body odour, a proposition vigorously disputed by co-workers.)

Tripp Mickle, a Wall Street Journal technology journalist who covered the Apple beat for five years, isn’t Walter Isaacson (few of us are); and, to his credit, doesn’t try to be. Nor does he seek to present his primary subjects — former lead Apple designer Jony Ive and incumbent chief executive Tim Cook — as world-changing visionaries on par with their departed boss. Indeed, the very title of his book — After Steve: How Apple Became a Trillion-Dollar Company and Lost Its Soul — presents Apple as existing in a state of creative denouement since Jobs’s death — a bloated (if massively profitable) corporate bureaucracy that increasingly feeds shareholders’ demands for quarterly earnings by milking subscription services such as Apple Music and iCloud instead of developing new products.

The first five chapters of After Steve are structured as a twinned biography, following the lives of Ive and Cook from their precocious childhoods (in England and Alabama, respectively), and on through the 2010s, when the pair jointly ran Apple (in function, if not in title) following Jobs’s death.

Timothy Donald Cook grew up in Robertsdale, a farming community located roughly halfway between Mobile, Alabama and Pensacola, Florida, the middle child of a Korean War veteran and a pharmacist’s assistant. In high school, Cook was named “most studious”, and served as the business manager for the school yearbook. “In three years of math, he had never missed a homework assignment”, reports Mickle, also noting that one teacher remembers him as “efficient and dependable”. Cook also happens to be gay, a subject that caused some awkwardness for his Methodist parents, even though Cook wouldn’t come out publicly till later in life. As a means to deflect questions, Mickle reports, Cook’s mother told drug-store coworkers that her son was dating a girl in Foley, a nearby town.

Following high-school graduation, Cook went on to study industrial engineering at Auburn University and business administration at Duke. He then gravitated to the then-burgeoning field of personal computing, quickly carving out a niche within its production and supply-management back office. At IBM and Compaq, Cook turned himself into a sort of human abacus, ruthlessly bringing reduced costs, increased efficiencies, and smaller inventories to every assembly line he set eyes on. By the time he’d arrived at Apple in 1998, Mickle reports, Cook was completely neurotic about keeping any stocked materials off the books, calling inventory “fundamentally evil”. In time, he pioneered a process by which yellow lines were painted down the floor of Apple’s production plants, with materials on the storage side of the line remaining on suppliers’ books until the very moment they were brought to the other side for assembly.

Like Ive, Cook declined to be interviewed for After Steve. And so it is entirely possible that the man has a rich inner life that remains opaque to Mickle and the outside world more generally. But the portrait that emerges in this book is one of a fanatically dedicated workaholic who rises before 4am to begin examining spreadsheets, and thinks about little else except the fortunes of Apple Inc. during the waking hours that follow. Mickle reports a sad scene in which Cook is spotted by sympathetic strangers at a fancy Utah resort, dining alone during what appears to be a solitary vacation. We also learn that Cook’s Friday-night meetings with Apple’s operations and finance staff were sometimes called “date night with Tim” by attendees, “because it would stretch for hours into the evening, when Cook seemed to have nowhere else to be.”

July 18, 2022

John von Neumann, The Man From The Future

One of the readers of Scott Alexander’s Astral Codex Ten has contributed a review of The Man From The Future: The Visionary Life of John von Neumann by Ananyo Bhattacharya. This is one of perhaps a dozen or so anonymous reviews that Scott publishes every year with the readers voting for the best review and the names of the contributors withheld until after the voting is finished:

John von Neumann invented the digital computer. The fields of game theory and cellular automata. Important pieces of modern economics, set theory, and particle physics. A substantial part of the technology behind the atom and hydrogen bombs. Several whole fields of mathematics I hadn’t previously heard of, like “operator algebras”, “continuous geometry”, and “ergodic theory”.

The Man From The Future, by Ananyo Bhattacharya, touches on all these things. But you don’t read a von Neumann biography to learn more about the invention of ergodic theory. You read it to gawk at an extreme human specimen, maybe the smartest man who ever lived.

By age 6, he could multiply eight-digit numbers in his head. At the same age, he spoke conversational ancient Greek; later, he would add Latin, French, German, English, and Yiddish (he sometimes joked about also speaking Spanish, but he would just put “el” before English words and add -o to the end). Rumor had it he memorized everything he ever read. A fellow mathematician once tried to test this by asking him to recite Tale Of Two Cities, and reported that “he immediately began to recite the first chapter and continued until asked to stop after about ten or fifteen minutes”.

A group of scientists encountered a problem that the computers of the day couldn’t handle, and asked von Neumann for advice on designing a new generation of computers that was up to the task. But:

When the presentation was completed, he scribbled on a pad, stared so blankly that a RAND scientist later said he looked as if “his mind had slipped his face out of gear”, then said “Gentlemen, you do not need the computer. I have the answer.” While the scientists sat in stunned silence, Von Neumann reeled off the various steps which would provide the solution to the problem.

Do these sound a little too much like urban legends? The Tale Of Two Cities story comes straight from the mathematician involved — von Neumann’s friend Herman Goldstine, writing about his experience in The Computer From Pascal to von Neumann. The computer anecdote is of less certain provenance, quoted without attribution in a 1957 obituary in Life. But this is part of the fun of reading von Neumann biographies: figuring out what one can or can’t believe about a figure of such mythic proportions.

This is not really what Bhattacharya is here for. He does not entirely resist gawking. But he is at least as interested in giving us a tour of early 20th century mathematics, framed by the life of its most brilliant practitioner. The book devotes more pages to set theory than to von Neumann’s childhood, and spends more time on von Neumann’s formalization of quantum mechanics than on his first marriage (to be fair, so did von Neumann — hence the divorce).

Still, for those of us who never made their high school math tutors cry with joy at ever having met them (another von Neumann story, this one well-attested), the man himself is more of a draw than his ergodic theory. And there’s enough in The Man From The Future — and in some of the few hundred references it cites — to start to get a coherent picture.

April 11, 2022

QotD: Programmers as craftsmen

The people most likely to grasp that wealth can be created are the ones who are good at making things, the craftsmen. Their hand-made objects become store-bought ones. But with the rise of industrialization there are fewer and fewer craftsmen. One of the biggest remaining groups is computer programmers.

A programmer can sit down in front of a computer and create wealth. A good piece of software is, in itself, a valuable thing. There is no manufacturing to confuse the issue. Those characters you type are a complete, finished product. If someone sat down and wrote a web browser that didn’t suck (a fine idea, by the way), the world would be that much richer.*

Everyone in a company works together to create wealth, in the sense of making more things people want. Many of the employees (e.g. the people in the mailroom or the personnel department) work at one remove from the actual making of stuff. Not the programmers. They literally think the product, one line at a time. And so it’s clearer to programmers that wealth is something that’s made, rather than being distributed, like slices of a pie, by some imaginary Daddy.

It’s also obvious to programmers that there are huge variations in the rate at which wealth is created. At Viaweb we had one programmer who was a sort of monster of productivity. I remember watching what he did one long day and estimating that he had added several hundred thousand dollars to the market value of the company. A great programmer, on a roll, could create a million dollars worth of wealth in a couple weeks. A mediocre programmer over the same period will generate zero or even negative wealth (e.g. by introducing bugs).

This is why so many of the best programmers are libertarians. In our world, you sink or swim, and there are no excuses. When those far removed from the creation of wealth — undergraduates, reporters, politicians — hear that the richest 5% of the people have half the total wealth, they tend to think injustice! An experienced programmer would be more likely to think is that all? The top 5% of programmers probably write 99% of the good software.

Wealth can be created without being sold. Scientists, till recently at least, effectively donated the wealth they created. We are all richer for knowing about penicillin, because we’re less likely to die from infections. Wealth is whatever people want, and not dying is certainly something we want. Hackers often donate their work by writing open source software that anyone can use for free. I am much the richer for the operating system FreeBSD, which I’m running on the computer I’m using now, and so is Yahoo, which runs it on all their servers.

* This essay was written before Firefox.

Paul Graham, “How to Make Wealth”, Paul Graham, 2004-04.

January 7, 2022

The Most Important Invention of the 20th Century: Transistors

The History Guy: History Deserves to Be Remembered

Published 23 Dec 2019

On December 23, 1947, three researchers at Bell Labs demonstrated a new device to colleagues. The device, a solid-state replacement for the audion tube, represented the pinnacle of the quest to provide amplification of electronic communication. The History Guy recalls the path that brought us what one engineer describes as “The world’s most important thing.”

This is original content based on research by The History Guy. Images in the Public Domain are carefully selected and provide illustration. As images of actual events are sometimes not available, images of similar objects and events are used for illustration.

All events are portrayed in historical context and for educational purposes. No images or content are primarily intended to shock and disgust. Those who do not learn from history are doomed to repeat it. Non censuram.

Find The History Guy at:

Patreon: https://www.patreon.com/TheHistoryGuy

The History Guy: History Deserves to Be Remembered is the place to find short snippets of forgotten history from five to fifteen minutes long. If you like history too, this is the channel for you.

Awesome The History Guy merchandise is available at:

teespring.com/stores/the-history-guy

Script by THG

#ushistory #thehistoryguy #invention

November 12, 2021

QotD: The looming quantum computing apocalypse

We’re reaching peak quantum computing hyperbole. According to a dimwit at the Atlantic, quantum computing will end free will. According to another one at Forbes, “the quantum computing apocalypse is imminent.” Rachel Gutman and Schlomo Dolev know about as much about quantum computing as I do about 12th century Talmudic studies, which is to say, absolutely nothing. They, however, think they know smart people who tell them that this is important: they’ve achieved the perfect human informational centipede. This is unquestionably the right time to go short.

Even the National Academy of Sciences has taken note that there might be a problem here. They put together 13 actual quantum computing experts who poured cold water on all the hype. They wrote a 200-page review article on the topic, pointing out that even with the most optimistic projections, RSA is safe for another couple of decades, and that there are huge gaps in our knowledge of how to build anything usefully quantum computing. And of course, they also pointed out if QC doesn’t start solving some problems which are interesting to … somebody, the funding is very likely to dry up. Ha, ha; yes, I’ll have some pepper on that steak.

Scott Locklin, “Quantum computing as a field is obvious bullshit”, Locklin on Science, 2019-01-15.

November 4, 2021

You think software is expensive now? You wouldn’t believe how expensive 1980s software was

A couple of years ago, Rob Griffiths looked at some computer hobbyist magazines from the 1980s and had both nostalgia for the period and sticker shock from the prices asked for computer games and business software:

A friend recently sent me a link to a large collection of 1980s computing magazines — there’s some great stuff there, well worth browsing. Perusing the list, I noticed Softline, which I remember reading in our home while growing up. (I was in high school in the early 1980s.)

We were fortunate enough to have an Apple ][ in our home, and I remember reading Softline for their game reviews and ads for currently-released games.

It was those ads that caught my eye as I browsed a few issues. Consider Missile Defense, a fun semi-clone of the arcade game Missile Command. To give you a sense of what games were like at the time, here are a few screenshots from the game. (All game images in this article are courtesy of MobyGames, who graciously allow use of up to 20 images without prior permission.)

Stunning graphics, aren’t they?

Not quite state of the art, but impressive for a home computer of the day. My first computer was a PC clone, and the IBM PC software market was much more heavily oriented to business applications compared to the Apple, Atari, Commodore, or other “home computers” of the day. I think the first game I got was Broderbund’s The Ancient Art of War, which I remembered at the time as being very expensive. The Wikipedia entry says:

In 1985 Computer Gaming World praised The Ancient Art of War as a great war game, especially the ability to create custom scenarios, stating that for pre-gunpowder warfare it “should allow you to recreate most engagements”. In 1990 the magazine gave the game three out of five stars, and in 1993 two stars. Jerry Pournelle of BYTE named The Ancient Art of War his game of the month for February 1986, reporting that his sons “say (and I confirm from my own experience) is about the best strategic computer war game they’ve encountered … Highly recommended.” PC Magazine in 1988 called the game “educational and entertaining”. […] The Ancient Art of War is generally recognized as one of the first real-time strategy or real-time tactics games, a genre which became hugely popular a decade later with Dune II and Warcraft. Those later games added an element of economic management, with mining or gathering, as well as construction and base management, to the purely military.

The Ancient Art of War is cited as a classic example of a video game that uses a rock-paper-scissors design with its three combat units, archer, knight, and barbarian, as a way to balance gameplay strategies.

Back to Rob Griffiths and the sticker shock moment:

What stood out to me as I re-read this first issue wasn’t the very basic nature of the ad layout (after all, Apple hadn’t yet revolutionized page layout with the Mac and LaserWriter). No, what really stood out was the price: $29.95. While that may not sound all that high, consider that’s the cost roughly 38 years ago.

Using the Bureau of Labor Statistics’ CPI Inflation Calculator, that $29.95 in September of 1981 is equivalent to $82.45 in today’s money (i.e. an inflation factor of 2.753). Even by today’s standards, where top-tier games will spend tens of millions on development and marketing, $82.45 would be considered a very high priced game — many top-tier Xbox, PlayStation, and Mac/PC games are priced in the $50 to $60 range.
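The arithmetic in that paragraph is easy to check. Here is a minimal sketch in Python, using only the two figures quoted above (the $29.95 price and the 2.753 inflation factor); the function name `adjust_for_inflation` is made up for illustration and is not anything the BLS calculator exposes.

```python
from decimal import Decimal, ROUND_HALF_UP


def adjust_for_inflation(price: str, factor: str) -> Decimal:
    """Scale a historical price by a cumulative inflation factor.

    Both arguments are decimal strings so the result is rounded to the
    cent without binary floating-point surprises.
    """
    adjusted = Decimal(price) * Decimal(factor)
    return adjusted.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


# Figures quoted in the article: 1981 sticker price and the CPI factor.
price_1981 = "29.95"
cpi_factor = "2.753"

print(adjust_for_inflation(price_1981, cpi_factor))  # 82.45
```

Swapping in a different factor (for a later "today", say) only needs the second argument changed; the factor itself still has to come from a CPI source.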

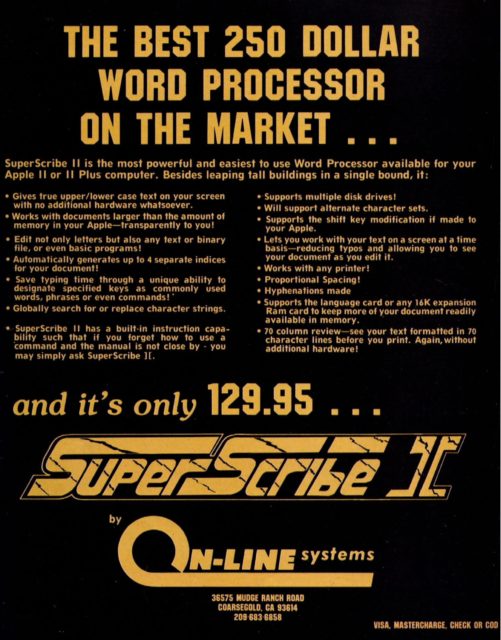

Business software — what there was of it available to the home computer market — was also proportionally much more expensive, but I found the feature list for this word processor to be more amusing: “Gives true upper/lower case text on your screen with no additional hardware support whatsoever.” Gosh!

H/T to BoingBoing for the link.

August 16, 2021

HMS Glamorgan – Computer Ship Of The Future (1967)

British Pathé

Published 13 Apr 2014

English Channel.

Top shots of the guided missile destroyer battleship HMS Glamorgan at sea. In the control/computer room a man pulls out old-fashioned computer records [circuit packs, I believe] from a large control cupboard; officers on the bridge look at dials and through binoculars. Various shots in steering, control and engine rooms as the ship moves along. On the flight deck sailors play hockey while others watch and cheer them on. A navigator on deck looks through a sextant while another officer makes notes. A helicopter is moved from a hangar out onto the deck and prepared for takeoff; firemen in asbestos suits and helmets stand by with a hose; the chopper takes off.

Three young men play guitars and banjos before a television camera in a tiny studio; we hear that the ship has its own closed circuit TV station; good shots of the filming; tape recording machines going round; picture being adjusted. Men in a mess room watch the performance on the television while they have a pint. Several shots of the sailors getting their rum rations. Various shots in the kitchens show food being prepared and served into the sailors’ mess tins; good canteen and catering shots (most of the food looks pretty unappetising) [the person writing the description clearly has no idea just how unappetizing British food could be in the 1960s … this looks well above average for the time]. In the sick bay a sailor gets a shot in the arm from the Medical Officer.

Several shots of the radar antennae on the ship and the men looking at the radar scanner screens in the control room. Men in protective gloves and hoods load shells in the gun turret; M/Ss of the main guns on the ship being fired. Several shots show the countdown for Seaslug missiles to be fired; men at control panels look at dials and radar screens; an officer counts down into a microphone (mute). Complicated boards of buttons show the location of the missiles, intercut with shots of the missiles moving into position by electronic instruction. The missiles move into the launchers on deck and are fired; men watch their progress on a radar screen. Another launcher moves into position; L/S of the ship as the missiles are fired.

Note: on file is correspondence and information about HMS Glamorgan. Cuts exist – see separate record. FILM ID:419.04

A VIDEO FROM BRITISH PATHÉ. EXPLORE OUR ONLINE CHANNEL, BRITISH PATHÉ TV. IT’S FULL OF GREAT DOCUMENTARIES, FASCINATING INTERVIEWS, AND CLASSIC MOVIES. http://www.britishpathe.tv/

FOR LICENSING ENQUIRIES VISIT http://www.britishpathe.com/

British Pathé also represents the Reuters historical collection, which includes more than 136,000 items from the news agencies Gaumont Graphic (1910-1932), Empire News Bulletin (1926-1930), British Paramount (1931-1957), and Gaumont British (1934-1959), as well as Visnews content from 1957 to the end of 1984. All footage can be viewed on the British Pathé website. https://www.britishpathe.com/

July 26, 2021

When printers malfunction – Printer

Viva La Dirt League

Published 26 Apr 2021

Adam experiences the utter frustration of when printers malfunction.

WATCH MORE SKITS HERE: http://bit.ly/VLDLvideos

SUPPORT ON PATREON – http://bit.ly/VLDLpatreon

DISCORD – http://bit.ly/VLDLdiscord

TWITTER: http://bit.ly/VLDLtwitter

INSTAGRAM: http://bit.ly/VLDLinstagram

MERCH

Merchandise: bit.ly/VLDLmerch

Songs: http://bit.ly/VLDLmusic

June 25, 2021

QotD: Moore’s Law

Moore’s Law is a violation of Murphy’s Law. Everything gets better and better.

Gordon Moore, quoted in “Happy Birthday: Moore’s Law at 40”, The Economist, 2005-03-26

May 14, 2021

Recycling when it makes economic sense? Good. Recycling just because? Not good at all.

Tim Worstall explains why a new push to mandate recycling rare earths from consumer electronic devices will be a really, really bad idea … so bad that it’ll waste more resources than are recovered by the recycling effort:

[Indium is] the thing that makes touchscreens work. Lovely stuff. Normally extracted as a byproduct of getting zinc from sphalerite. Usual concentrations in the original mineral are 45 to 500 parts per million.

Now, note something important about a byproduct material like this. If we recycle indium we don’t in fact save any indium from sphalerite. Because we mine sphalerite for the zinc, the indium is just a bonus when we do. So, we recycle the indium we’re already using. We don’t process out the indium in our sphalerite. We just take the same amount of zinc we always did and dump what we don’t want into the gangue, the waste.

So, note what’s happened. We recycle indium and yet we dig up exactly the same amount of indium we always did. We just don’t use what we’ve dug up – we’re not in fact saving that vital resource of indium at all.

[…]

The number of waste fluorescent lamps arising has been declining since 2013. In 2025, it is estimated there will be 92 tonnes of CRMs in waste fluorescent lamps (Ce: 10 tonnes, Eu: 4 tonnes, La: 13 tonnes, Tb: 4 tonnes and Y: 61 tonnes).

That would be the recovery from all fluorescent lamps in Europe being recycled. In a few – there’s not that much material so therefore only a few plants are needed, meaning considerable geographic spread – plants dotted around.

That’s $50k of cerium, about $100k of europium, $65k of lanthanum, $2.8 million of terbium and $2.2 million of yttrium. To all intents and purposes this is $5 million of material. For which we must have a Europe-wide collection system?
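For anyone who wants to check the "$5 million" figure, here is a quick sketch that adds up the numbers exactly as quoted above. The dictionaries simply restate the quoted tonnages and dollar values; nothing here is an independent price estimate.

```python
# Tonnes of each element expected in waste fluorescent lamps in 2025,
# as quoted from the report above.
tonnes = {"Ce": 10, "Eu": 4, "La": 13, "Tb": 4, "Y": 61}

# Dollar values Worstall assigns to those quantities.
value_usd = {
    "Ce": 50_000,
    "Eu": 100_000,
    "La": 65_000,
    "Tb": 2_800_000,
    "Y": 2_200_000,
}

total_tonnes = sum(tonnes.values())
total_value = sum(value_usd.values())

print(total_tonnes)          # 92
print(f"${total_value:,}")   # $5,215,000
```

The tonnages do sum to the 92 tonnes in the report, and the values come to a little over $5.2 million, which is the "$5 million" round number in the text.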

They do realise this is insane which is why they insist that this must be made law. Can’t have people not doing stupid things now, can we?

Just to give another example – not one they mention. As some will know I used to supply rare earths to the global lighting industry. One particular type uses scandium. In a quarter milligram quantity per bulb. Meaning that even with perfect recycling you need to collect 4 million bulbs to gain a kilo of scandium – worth $800.
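The scandium example works out the same way. A minimal sketch, assuming only the two figures given in the quote (a quarter milligram per bulb, and $800 for a kilo); the per-bulb value is derived from them and is not stated in the original.

```python
MG_PER_KG = 1_000_000

scandium_per_bulb_mg = 0.25   # quoted: a quarter milligram per bulb
price_per_kg_usd = 800        # quoted: a kilo is worth $800

# Bulbs needed for one kilogram, assuming perfect (100%) recovery.
bulbs_per_kg = MG_PER_KG / scandium_per_bulb_mg

# Implied value of the scandium in a single bulb.
value_per_bulb_usd = price_per_kg_usd / bulbs_per_kg

print(f"{bulbs_per_kg:,.0f} bulbs per kilo")
print(f"${value_per_bulb_usd:.4f} of scandium per bulb")
```

That is four million bulbs collected, sorted, and processed for two hundredths of a cent of scandium each, before any collection cost is counted.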

January 23, 2021

QotD: “Genetics is interesting as an example of a science that overcame a diseased paradigm”

This side of the veil, instead of looking for the “gene for intelligence”, we try to find “polygenic scores”. Given a person’s entire genome, what function best predicts their intelligence? The most recent such effort uses over a thousand genes and is able to predict 10% of variability in educational attainment. This isn’t much, but it’s a heck of a lot better than anyone was able to do under the old “dozen genes” model, and it’s getting better every year in the way healthy paradigms are supposed to.

Genetics is interesting as an example of a science that overcame a diseased paradigm. For years, basically all candidate gene studies were fake. “How come we can’t find genes for anything?” was never as popular as “where’s my flying car?” as a symbol of how science never advances in the way we optimistically feel like it should. But it could have been.

And now it works. What lessons can we draw from this, for domains that still seem disappointing and intractable?

Turn-of-the-millennium behavioral genetics was intractable because it was more polycausal than anyone expected. Everything interesting was an excruciating interaction of a thousand different things. You had to know all those things to predict anything at all, so nobody predicted anything and all apparent predictions were fake.

Modern genetics is healthy and functional because it turns out that although genetics isn’t easy, it is simple. Yes, there are three billion base pairs in the human genome. But each of those base pairs is a nice, clean, discrete unit with one of four values. In a way, saying “everything has three billion possible causes” is a mercy; it’s placing an upper bound on how terrible genetics can be. The “secret” of genetics was that there was no “secret”. You just had to drop the optimistic assumption that there was any shortcut other than measuring all three billion different things, and get busy doing the measuring. The field was maximally perverse, but with enough advances in sequencing and computing, even the maximum possible level of perversity turned out to be within the limits of modern computing.

(This is an oversimplification: if it were really maximally perverse, chaos theory would be involved somehow. Maybe a better claim is that it hits the maximum perversity bound in one specific dimension)

Scott Alexander, “The Omnigenic Model As Metaphor For Life”, Slate Star Codex, 2018-09-13.