October 8, 2018

QotD: The closed-source software dystopia we barely avoided

Thought experiment: imagine a future in which everybody takes for granted that all software outside a few toy projects in academia will be closed source controlled by managerial elites, computers are unhackable sealed boxes, communications protocols are opaque and locked down, and any use of computer-assisted technology requires layers of permissions that (in effect) mean digital information flow is utterly controlled by those with political and legal master keys. What kind of society do you suppose eventually issues from that?

Remember Trusted Computing and Palladium and crypto-export restrictions? RMS and Linus Torvalds and John Gilmore and I and a few score other hackers aborted that future before it was born, by using our leverage as engineers and mentors of engineers to change the ground of debate. The entire hacker culture at the time was certainly less than 5% of the population, by orders of magnitude.

And we may have mainstreamed open source just in time. In an attempt to defend their failing business model, the MPAA/RIAA axis of evil spent years pushing for digital “rights” management so pervasively baked into personal-computer hardware by regulatory fiat that those would have become unhackable. Large closed-source software producers had no problem with this, as it would have scratched their backs too. In retrospect, I think it was only the creation of a pro-open-source constituency with lots of money and political clout that prevented this.

Did we bend the trajectory of society? Yes. Yes, I think we did. It wasn’t a given that we’d get a future in which any random person could have a website and a blog, you know. It wasn’t even given that we’d have an Internet that anyone could hook up to without permission. And I’m pretty sure that if the political class had understood the implications of what we were actually doing, they’d have insisted on more centralized control. ~For the public good and the children, don’t you know.~

So, yes, sometimes very tiny groups can change society in visibly large ways on a short timescale. I’ve been there when it was done; once or twice I’ve been the instrument of change myself.

Eric S. Raymond, “Engineering history”, Armed and Dangerous, 2010-09-12.

September 24, 2018

Verity Stob on early GUI experiences

“Verity Stob” began writing about technology issues three decades back. She reminisces about some things that have changed and others that are still irritatingly the same:

It’s 30 years since .EXE Magazine carried the first Stob column; this is its ~pearl~ Perl anniversary. Rereading article #1, a spoof self-tester in the Cosmo style, I was struck by how distant the world it invoked seemed. For example:

Your program requires a disk to have been put in the floppy drive, but it hasn’t. What happens next?

The original answers, such as:

e) the program crashes out into DOS, leaving dozens of files open

would now need to be supplemented by

f) what’s ‘the floppy drive’ already, Grandma? And while you’re at it, what is DOS? Part of some sort of DOS and DON’TS list?

I say: sufficient excuse to present some Then and Now comparisons with those primordial days of programming, to show how much things have changed – or not.

1988: Drag-and-drop was a showy-offy but not-quite-satisfactory technology.

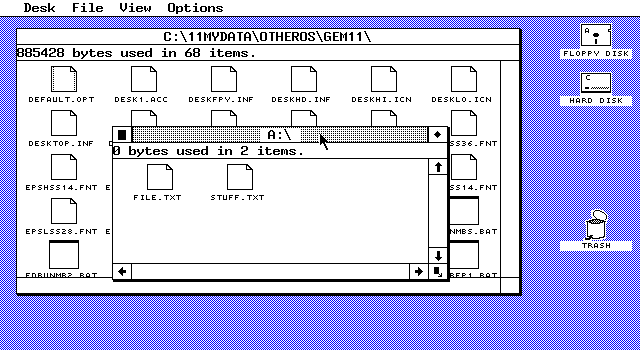

My first DnD encounter was by proxy. In about 1985 my then boss, a wise and cynical old Brummie engineer, attended a promotional demo, free wine and nibbles, of an exciting new WIMP technology called GEM. Part of the demo was to demonstrate the use of the on-screen trash icon for deleting files.

According to Graham’s gleeful report, he stuck up his hand at this point. “What happens if you drag the clock into the wastepaper basket?”

The answer turned out to be: the machine crashed hard on its arse, and it needed about 10 minutes’ embarrassed fiddling to coax it back onto its feet. At which point Graham’s arm went up again. “What happens if you drop the wastepaper basket into the clock?”

Drag-ons ‘n’ drag-offs

GEM may have been primitive, but it was at least consistent.

The point became moot a few months later, when Apple won a look-and-feel lawsuit and banned the GEM trashcan outright.

2018: Not that much has changed. Windows Explorer users: how often has your mouse finger proved insufficiently strong to grasp the file? And you have accidentally dropped the document you wanted into a deep thicket of nested server directories?

Or how about touch interface DnD on a phone, where your skimming pinkie exactly masks from view the dragged thing?

Well then.

However, I do confess admiration for this JavaScript library that aims to make dragging and dropping accessible to the blind. Can’t fault its ambition.

August 24, 2018

From Software-as-a-Service to emerging Techno-Feudalism

The shift from selling software to selling software subscriptions was a major sea-change in the technology world. Here’s a bit of historical perspective from The Z Man to explain why this could be a very bad thing indeed for the average user:

Feudalism only works when a small elite controls the source of wealth. Then they can control the exploitation of it. In Europe, as Christianity spread, the Church acquired lands, becoming one of the most powerful forces in society. The warrior elite was exclusively Catholic, thus they had a loyalty to the Pope, as God’s representative on earth. Therefore, the system of controlling wealth not only had a direct financial benefit to the people at the top, it had the blessing of God’s representative, who sat atop the whole system.

That’s something to keep in mind as we see technology evolve into a feudal system, where a small elite controls the resources and grants permission to users. The software oligopolies are now shifting all of their licensing to a subscription model. It’s not just the mobile platforms. Developers of enterprise software for business are adopting the same model. The users have no ownership rights. Instead they are renters, subject to terms and conditions imposed by the developer or platform holder. The user is literally a tenant.

The main reason developers are shifting to this model is that they cannot charge high fees for their software, due to the mass of software on the market. Competition has driven down prices. Further, customers are not inclined to pay high maintenance fees, when they can buy new systems at competitive rates. The solution is to stop selling the stuff and start renting it. This fits the oligopoly scheme as it ultimately puts them in control of the developers. Apple and Google are now running protection rackets for developers.

It also means the end of any useful development. Take a look at the situation Stefan Molyneux faces. A band of religious fanatics has declared him a heretic and wants him burned. The Great Church of Technology is now in the process of having him expelled from the internet. As he wrote in a post, he invests 12 years building his business on-line, only to find out he owns none of it. He was always just a tenant farmer, who foolishly invested millions in YouTube. Like a peasant, he is now about to be evicted.

How long before someone like this monster discovers that Google and Apple will no longer allow him to use any apps on his phone? Or maybe he is denied access to his accounting system? How long before his insurer cuts off his business insurance, claiming the threat from homosexual terrorists poses too high of a risk? Federal law prevents the electric company from shutting off his power due to politics, but Federal law used to prevent secret courts and secret warrants. Things change as the people in charge change.

June 20, 2018

Do You Have a Right To Repair Your Phone? The Fight Between Big Tech and Consumers

ReasonTV

Published on 18 Jun 2018

Eric Lundgren got 15 months in prison for selling pirated Microsoft software that the tech giant gives away for free. His case cuts to the heart of a major battle going on in the tech industry today: Companies are trying to preserve aspects of U.S. copyright law that give them enormous power over the products we own.

Reason is the planet’s leading source of news, politics, and culture from a libertarian perspective. Go to reason.com for a point of view you won’t get from legacy media and old left-right opinion magazines.

February 16, 2018

Games Should Not Cost $60 Anymore – Inflation, Microtransactions, and Publishing – Extra Credits

Extra Credits

Published on 24 Jan 2018

You would think that paying $60 for a game would be enough, but so many games these days ask for money with DLC, microtransactions, and yes, lootboxes. There’s a reason for that.

January 31, 2018

QotD: “Enhancing the user experience”

Once upon a time, computers weren’t all constantly connected to the intertubes. What we call “air-gapped” these days was the normal state of machines back in the desktop beige box days.

Back then, when you bought a program it came in a cardboard box on physical media. You would install it on your computer and it would work the same way from the day you installed it to the day you stopped using it. Nobody could rearrange the menus on WordPerfect or change the buttons in Secret Weapons of the Luftwaffe … Good times.

Nowadays, half the software you interact with doesn’t even reside on your PC. Further, there are whole departments at, say, Facebook or Blizzard or Google whose entire job is to “enhance the user experience”. If they’re not constantly dicking around, adding and removing features, changing what buttons do, moving things around … then they’re not doing their jobs.

We have incentivized instability.

Tamara Keel, “Tinkering for tinkering’s sake…”, View From The Porch, 2018-01-10.

December 12, 2017

“Well sir, there’s nothing on Earth like a genuine, bona-fide, electrified, six-car blockchain!”

The way blockchain technology is being hyped these days, you’d think it was being pushed by the monorail salesman on The Simpsons. At Catallaxy Files, this guest post by Peter Van Valkenburgh is another of their informative series on what blockchain tech can do:

“Blockchain” has become a buzzword in the technology and financial industries. It is often cited as a panacea for all manner of business and governance problems. “Blockchain’s” popularity may be an encouraging sign for innovation, but it has also resulted in the word coming to mean too many things to too many people, and, ultimately, almost nothing at all.

The word “blockchain” is like the word “vehicle” in that they both describe a broad class of technology. But unlike the word “blockchain” no one ever asks you, “Hey, how do you feel about vehicle?” or excitedly exclaims, “I’ve got it! We can solve this problem with vehicle.” And while you and I might talk about “vehicle technology,” even that would be a strangely abstract conversation. We should probably talk about cars, trains, boats, or rocket ships, depending on what it is about vehicles that we are interested in. And “blockchain” is the same. There is no “The Blockchain” any more than there is “The Vehicle,” and the category “blockchain technology” is almost hopelessly broad.

There’s one thing that we definitely know is blockchain technology, and that’s Bitcoin. We know this for sure because the word was originally invented to name and describe the distributed ledger of bitcoin transactions that is created by the Bitcoin network. But since the invention of Bitcoin in 2008, there have been several individuals, companies, consortia, and nonprofits who have created new networks or software tools that borrow something from Bitcoin—maybe directly borrowing code from Bitcoin’s reference client or maybe just building on technological or game-theoretical ideas that Bitcoin’s emergence uncovered. You’ve probably heard about some of these technologies and companies or seen their logos.

Aside from being in some way inspired by Bitcoin what do all of these technologies have in common? Is there anything we can say is always true about a blockchain technology? Yes.

All Blockchains Have…

All blockchain technologies should have three constituent parts: peer-to-peer networking, consensus mechanisms, and (yes) blockchains, A.K.A. hash-linked data structures. You might be wondering why we call them blockchain technologies if the blockchain is just one of three essential parts. It probably just comes down to good branding. Ever since Napster and BitTorrent, the general public has unfortunately come to associate peer-to-peer networks with piracy and copyright infringement. “Consensus mechanism” sounds very academic, a little too hard to explain, and a little too much of a mouthful to be a good brand. But “blockchain,” well that sounds interesting and new. It almost rolls off the tongue; at least compared to, say, “cryptography” which sounds like it happens in the basement of a church.

But understanding each of those three constituent parts makes blockchain technology suddenly easier to understand. And that’s because we can write a simple one sentence explanation about how the three parts achieve a useful result:

Connected computers reach agreement over shared data.

That’s what a blockchain technology should do; it should allow connected computers to reach agreement over shared data. And each part of that sentence corresponds to our three constituent technologies.

Connected Computers. The computers are connected in a peer-to-peer network. If your computer is a part of a blockchain network it is talking directly to other computers on that network, not through a central server owned by a corporation or other central party.

Reach Agreement. Agreement between all of the connected computers is facilitated by using a consensus mechanism. That means that there are rules written in software that the connected computers run, and those rules help ensure that all the computers on the network stay in sync and agree with each other.

Shared Data. And the thing they all agree on is this shared data called a blockchain. “Blockchain” just means the data is in a specific format (just like you can imagine data in the form of a word document or data in the form of an image file). The blockchain format simply makes it easy for machines to verify the consistency of a long and growing log of data. Later data entries must always reference earlier entries, creating a linked chain of data. Any attempt to alter an early entry will necessitate altering every subsequent entry; otherwise, digital signatures embedded in the data will reveal a mismatch. Specifically how that all works is beyond the scope of this backgrounder, but it mostly has to do with the science of cryptography and digital signatures. Some people might tell you that this makes blockchains “immutable;” that’s not really accurate. The blockchain data structure will make alterations evident, but if the people running the connected computers choose to accept or ignore the alterations then they will remain.
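To make the "hash-linked data structure" idea a little more concrete, here is a minimal sketch in Python. It is an illustration only, not how Bitcoin or any particular network stores its data: it uses plain SHA-256 hashes rather than the digital signatures the backgrounder mentions, and the names `entry_hash`, `append_entry` and `first_mismatch`, along with the sample entries, are made up for this example.

```python
import hashlib
import json


def entry_hash(index, data, prev_hash):
    """Hash one log entry together with the hash of the entry before it."""
    payload = json.dumps(
        {"index": index, "data": data, "prev_hash": prev_hash},
        sort_keys=True,
    ).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_entry(chain, data):
    """Append an entry that references the hash of the current last entry."""
    prev_hash = chain[-1]["hash"] if chain else "0" * 64
    index = len(chain)
    chain.append({
        "index": index,
        "data": data,
        "prev_hash": prev_hash,
        "hash": entry_hash(index, data, prev_hash),
    })


def first_mismatch(chain):
    """Return the index of the first entry that fails verification, or None."""
    prev_hash = "0" * 64
    for entry in chain:
        # The entry must point at the hash of the entry before it...
        if entry["prev_hash"] != prev_hash:
            return entry["index"]
        # ...and its own hash must match its contents.
        expected = entry_hash(entry["index"], entry["data"], entry["prev_hash"])
        if entry["hash"] != expected:
            return entry["index"]
        prev_hash = entry["hash"]
    return None


# Hypothetical sample data, purely for illustration.
chain = []
for item in ["alice pays bob 5", "bob pays carol 2", "carol pays dave 1"]:
    append_entry(chain, item)
print(first_mismatch(chain))  # None: the untouched log is consistent

# Altering an early entry is evident immediately...
chain[0]["data"] = "alice pays bob 500"
print(first_mismatch(chain))  # 0

# ...and re-hashing only that entry just moves the mismatch to the next one.
chain[0]["hash"] = entry_hash(0, chain[0]["data"], chain[0]["prev_hash"])
print(first_mismatch(chain))  # 1
```

Note that nothing here stops someone from rewriting every later entry as well. The structure only makes alterations evident; whether they are accepted is up to the people running the connected computers, which is the point of the "immutable" caveat above.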

September 13, 2017

Tesla’s experiment in price discrimination

Alex Tabarrok links to a story about Tesla using an over-the-air software update to help Tesla owners in hurricane-threatened areas get more range from their lower-battery capacity cars … but he says this may eventually come back and bite the company:

Tesla knows that some of its customers are willing to pay more for a Tesla than others. But Tesla can’t just ask its customers their willingness to pay and price accordingly. High willing-to-pay customers would simply lie to get a lower price. Thus, Tesla must find some characteristic of buyers that is correlated with high willingness-to-pay and charge more to customers with that characteristic. Airlines, for example, price more for the same seat if you book at the last minute on the theory that last minute buyers are probably business-people with high willingness-to-pay as opposed to vacationers who have more options and a lower willingness-to-pay. Tesla uses a slightly different strategy; it offers two versions of the same good, the low and high mileage versions, and it prices the high-mileage version considerably higher on the theory that buyers willing to pay for more mileage are also more likely to be high willingness-to-pay buyers in general. Thus, the high-mileage group pay a higher price-to-cost margin than the low-mileage group. A familiar example is software companies that offer a discounted or “student” version of the product with fewer features. Since the software firm’s costs are mostly sunk R&D costs, the firm can make money selling a low-price version so long as doing so doesn’t cannibalize its high willingness-to-pay customers–and the firm can avoid cannibalization by carefully choosing to disable the features most valuable to high willingness-to-pay customers.
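The versioning argument can be checked with a toy calculation. The sketch below is only an illustration under invented assumptions: one "high" and one "low" buyer, willingness-to-pay figures in arbitrary units that I made up, zero marginal cost, and indifferent buyers choosing the pricier version. None of the numbers come from Tesla or from Tabarrok's post.

```python
# Hypothetical willingness-to-pay for each version (arbitrary units).
BUYERS = {
    "high": {"short": 60, "long": 100},
    "low": {"short": 50, "long": 55},
}


def choice(values, prices):
    """Version a buyer picks: largest non-negative surplus, ties go to the pricier one."""
    options = [
        (values[version] - price, price, version)
        for version, price in prices.items()
        if values[version] - price >= 0
    ]
    return max(options)[2] if options else None


def revenue(prices):
    """Total revenue from all buyers at the given menu of prices."""
    total = 0
    for values in BUYERS.values():
        picked = choice(values, prices)
        if picked is not None:
            total += prices[picked]
    return total


# Best the seller can do offering only the long-range version at one price.
best_single = max(revenue({"long": p}) for p in range(0, 101))

# Best the seller can do offering both versions at separate prices.
best_menu = max(
    revenue({"short": s, "long": l})
    for s in range(0, 101)
    for l in range(0, 101)
)

print(best_single)  # 110: sell the long-range version to both buyers at 55
print(best_menu)    # 140: short-range at 50, long-range at 90
```

With these made-up figures the two-version menu earns more than any single price, because the cheaper version picks up the low buyer without forcing a price cut for the high buyer.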

The kind gesture to Tesla owners in Florida is probably deeply appreciated right now, but…

Unfortunately, I fear that Tesla may have made a marketing faux-pas. When it turns off the extra mileage boost are Tesla customers going to say “thanks for temporarily making my car better!” Or are they going to complain, “why are you making MY car worse than it has to be?”

Human nature being what it is, the smart money is betting on the “Thanks for the temporary upgrade, but what have you done for me lately?” attitude setting in quickly.

July 20, 2017

ESR on the early history of distributed software

Eric S. Raymond is asking for additional input to his current historical outline of the development of distributed software collaboration:

Nowadays we take for granted a public infrastructure of distributed version control and a lot of practices for distributed teamwork that go with it – including development teams that never physically have to meet. But these tools, and awareness of how to use them, were a long time developing. They replace whole layers of earlier practices that were once general but are now half- or entirely forgotten.

The earliest practice I can identify that was directly ancestral was the DECUS tapes. DECUS was the Digital Equipment Corporation User Group, chartered in 1961. One of its principal activities was circulating magnetic tapes of public-domain software shared by DEC users. The early history of these tapes is not well-documented, but the habit was well in place by 1976.

One trace of the DECUS tapes seems to be the README convention. While it entered the Unix world through USENET in the early 1980s, it seems to have spread there from DECUS tapes. The DECUS tapes begat the USENET source-code groups, which were the incubator of the practices that later became “open source”. Unix hackers used to watch for interesting new stuff on comp.sources.unix as automatically as they drank their morning coffee.

The DECUS tapes and the USENET sources groups were more of a publishing channel than a collaboration medium, though. Three pieces were missing to fully support that: version control, patching, and forges.

Version control was born in 1972, though SCCS (Source Code Control System) didn’t escape Bell Labs until 1977. The proprietary licensing of SCCS slowed its uptake; one response was the freely reusable RCS (Revision Control System) in 1982.

[…]

The first dedicated software forge was not spun up until 1999. That was SourceForge, still extant today. At first it supported only CVS, but it sped up the adoption of the (greatly superior) Subversion, launched in 2000 by a group of former CVS developers.

Between 2000 and 2005 Subversion became ubiquitous common knowledge. But in 2005 Linus Torvalds invented git, which would fairly rapidly obsolesce all previous version-control systems and is a thing every hacker now knows.

Questions for reviewers:

(1) Can anyone identify a conscious attempt to organize a distributed development team before nethack (1987)?

(2) Can anyone tell me more about the early history of the DECUS tapes?

(3) What other questions should I be asking?

May 24, 2017

ESR presents Open Adventure

Eric S. Raymond recently was entrusted with the original code for ADVENT, and he’s put it up on gitlab for anyone to access:

Colossal Cave Adventure was the origin of many things; the text adventure game, the dungeon-crawling D&D (computer) game, the MOO, the roguelike genre. Computer gaming as we know it would not exist without ADVENT (as it was known in its original PDP-10 incarnation).

Long ago, you might have played this game. Or maybe you’ve just heard stories about it, or vaguely know that “xyzzy” is a magic word, or have heard people say “You are in a maze of twisty little passages, all alike”.

Though there’s a C port of the original 1977 game in the BSD game package, and the original FORTRAN sources could be found if you knew where to dig, Crowther & Woods’s final version – Adventure 2.5 from 1995 – has never been packaged for modern systems and distributed under an open-source license.

Until now, that is.

With the approval of its authors, I bring you Open Adventure. And with it some thoughts about what it means to be respectful of an important historical artifact when it happens to be software.

This is code that fully deserves to be in any museum of the great artifacts of hacker history. But there’s a very basic question about an artifact like this: should a museum preserve it in a static form as close to the original as possible, or is it more in the right spirit to encourage the folk process to continue improving the code?

Modern version control makes this question easier; you can have it both ways, keeping a pristine archival version in the history and improving it. Anyway, I think the answer to the general question is clear; if heritage code like this is relevant at all, it’s as a living and functional artifact. We respect our history and the hackers of the past best by carrying on their work and their playfulness.

May 23, 2017

QotD: The dangers of career “dualization”

This concept [of dualization] applies much more broadly than just drugs and colleges. I sometimes compare my own career path, medicine, to that of my friends in computer programming. Medicine is very clearly dual – of the millions of pre-med students, some become doctors and at that moment have an almost-guaranteed good career, others can’t make it to that MD and have no relevance whatsoever in the industry. Computer science is very clearly non-dual; if you’re a crappy programmer, you’ll get a crappy job at a crappy company; if you’re a slightly better programmer, you’ll get a slightly better job at a slightly better company; if you’re a great programmer, you’ll get a great job at a great company (ideally). There’s no single bottleneck in computer programming where if you pass you’re set for life but if you fail you might as well find some other career path.

My first instinct is to think of non-dualized fields as healthy and dualized fields as messed up, for a couple of reasons.

First, in the dualized fields, you’re putting in a lot more risk. Sometimes this risk is handled well. For example, in medicine, most pre-med students don’t make it to doctor, but the bottleneck is early – acceptance to medical school. That means they fail fast and can start making alternate career plans. All they’ve lost is whatever time they put into taking pre-med classes in college. In Britain and Ireland, the system’s even better – you apply to med school right out of high school, so if you don’t get in you’ve got your whole college career to pivot to a focus on English or Engineering or whatever. But other fields handle this risk less well. For example, as I understand Law, you go to law school, and if all goes well a big firm offers to hire you around the time you graduate. If no big firm offers to hire you, your options are more limited. Problem is, you’ve sunk three years of your life and a lot of debt into learning that you’re not wanted. So the cost of dualization is littering the streets with the corpses of people who invested a lot of their resources into trying for the higher tier but never made it.

Second, dualized fields offer an inherent opportunity for oppression. We all know the stories of the adjunct professors shuttling between two or three colleges and barely making it on food stamps despite being very intelligent people who ought to be making it into high-paying industries. Likewise, medical residents can be worked 80 hour weeks, and I’ve heard that beginning lawyers have it little better. Because your entire career is concentrated on the hope of making it into the higher-tier, and the idea of not making it into the higher tier is too horrible to contemplate, and your superiors control whether you will make it into the higher tier or not, you will do whatever the heck your superiors say. A computer programmer who was asked to work 80 hour weeks could just say “thanks but no thanks” and find another company with saner policies.

(except in startups, but those bear a lot of the hallmarks of a dualized field with binary outcomes, including the promise of massive wealth for success)

Third, dualized fields are a lot more likely to become politicized. The limited high-tier positions are seen as spoils to be distributed, in contrast to the non-dual fields where good jobs are seen as opportunities to attract the most useful and skilled people.

Scott Alexander, “Non-Dual Awareness”, Slate Star Codex, 2015-07-28.

April 13, 2017

Microsoft buries the (security) lede with this month’s patch

In The Register, Shaun Nichols discusses the way Microsoft has effectively hidden the extent and severity of security changes in this month’s Windows 10 patch:

Microsoft today buried among minor bug fixes patches for critical security flaws that can be exploited by attackers to hijack vulnerable computers.

In a massive shakeup of its monthly Patch Tuesday updates, the Windows giant has done away with its easy-to-understand lists of security fixes published on TechNet – and instead scattered details of changes across a new portal: Microsoft’s Security Update Guide.

Billed by Redmond as “the authoritative source of information on our security updates,” the portal merely obfuscates discovered vulnerabilities and the fixes available for them. Rather than neatly split patches into bulletins as in previous months, Microsoft has dumped the lot into an unwieldy, buggy and confusing table that links out to a sprawl of advisories and patch installation instructions.

Punters and sysadmins unable to handle the overload of info are left with a fact-light summary of April’s patches – or a single bullet point buried at the end of a list of tweaks to, for instance, Windows 10.

Now, ordinary folk are probably happy with installing these changes as soon as possible, silently and automatically, without worrying about the nitty-gritty details of the fixed flaws. However, IT pros, and anyone else curious or who wants to test patches before deploying them, will have to fish through the portal’s table for details of individual updates.

[…]

Crucially, none of these programming blunders are mentioned in the PR-friendly summary put out today by Microsoft – a multibillion-dollar corporation that appears to care more about its image as a secure software vendor than coming clean on where its well-paid engineers cocked up. The summary lists “security updates” for “Microsoft Windows,” “Microsoft Office,” and “Internet Explorer” without version numbers or details.

January 29, 2017

Once upon a time, in hacker culture…

ESR performs a useful service in pulling together a document on what all hackers used to need to know, regardless of the particular technical interest they followed. I was never technical enough to be a hacker, but I worked with many of them, so I had to know (or know where to find) much of this information, too.

One fine day in January 2017 I was reminded of something I had half-noticed a few times over the previous decade. That is, younger hackers don’t know the bit structure of ASCII and the meaning of the odder control characters in it.

This is knowledge every fledgling hacker used to absorb through their pores. It’s nobody’s fault this changed; the obsolescence of hardware terminals and the RS-232 protocol is what did it. Tools generate culture; sometimes, when a tool becomes obsolete, a bit of cultural commonality quietly evaporates. It can be difficult to notice that this has happened.

This document is a collection of facts about ASCII and related technologies, notably hardware terminals and RS-232 and modems. This is lore that was at one time near-universal and is no longer. It’s not likely to be directly useful today – until you trip over some piece of still-functioning technology where it’s relevant (like a GPS puck), or it makes sense of some old-fart war story. Even so, it’s good to know anyway, for cultural-literacy reasons.

One thing this collection has that tends to be indefinite in the minds of older hackers is calendar dates. Those of us who lived through all this tend to have remembered order and dependencies but not exact timing; here, I did the research to pin a lot of that down. I’ve noticed that people have a tendency to retrospectively back-date the technologies that interest them, so even if you did live through the era it describes you might get a few surprises from reading.

There are references to Unix in here because I am mainly attempting to educate younger open-source hackers working on Unix-derived systems such as Linux and the BSDs. If those terms mean nothing to you, the rest of this document probably won’t either.
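For anyone wondering what "the bit structure of ASCII" means in practice, here is a small Python sketch of three widely known properties of 7-bit ASCII. The helper names `ctrl`, `swap_case_bit` and `digit_value` are mine, chosen for illustration; they are not taken from ESR's document.

```python
def ctrl(ch):
    """Control character produced by Ctrl+<ch>: keep only the low five bits."""
    return chr(ord(ch) & 0x1F)


def swap_case_bit(ch):
    """Flip bit 0x20, the only bit that differs between 'A'-'Z' and 'a'-'z'."""
    return chr(ord(ch) ^ 0x20)


def digit_value(ch):
    """The low four bits of '0'-'9' are the digit's numeric value."""
    return ord(ch) & 0x0F


# Ctrl-G rings the bell, Ctrl-H is backspace, Ctrl-I is tab,
# Ctrl-J is line feed, Ctrl-M is carriage return, Ctrl-[ is escape.
for key in "GHIJM[":
    print(f"Ctrl-{key} -> 0x{ord(ctrl(key)):02X}")

print(f"{ord('A'):07b}")  # 1000001
print(f"{ord('a'):07b}")  # 1100001 (same letter with the 0x20 bit set)
print(swap_case_bit("a"), swap_case_bit("Z"), digit_value("7"))  # A z 7
```

This is why Ctrl-M and Enter, or Ctrl-[ and Esc, behave identically on a terminal: the Ctrl key simply clears the upper bits of the character being typed.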

January 25, 2017

The “right to repair” gets a boost in three states

Cory Doctorow reports on a hopeful sign that we might be able to get rid of one of the more pernicious aspects of the DMCA rules:

Section 1201 of the 1998 Digital Millennium Copyright Act makes it both a crime and a civil offense to tamper with software locks that control access to copyrighted works — more commonly known as “Digital Rights Management” or DRM. As the number of products with software in them has exploded, the manufacturers of these products have figured out that they can force their customers to use their own property in ways that benefit the company’s shareholders, not the products’ owners — all they have to do is design those products so that using them in other ways requires breaking some DRM.

The conversion of companies’ commercial preferences into legally enforceable rights has been especially devastating to the repair sector, a huge slice of the US economy, as much as 4% of GDP, composed mostly of small mom-n-pop storefront operations that create jobs right in local communities, because repair is a local business. No one wants to send their car, or even their phone, to China or India for servicing.

[…]

Three states are considering “Right to Repair” bills that would override the DMCA’s provisions, making it legal to break DRM to effect repairs, ending the bizarre situation where cat litter boxes are given the same copyright protection as the DVD of Sleeping Beauty. Grassroots campaigns in Nebraska, Minnesota, and New York prompted the introduction of these bills and there’s more on the way. EFF and the Right to Repair coalition are pushing for national legislation too, in the form of the Unlocking Technology Act.

November 13, 2016

QotD: Don’t call it software engineering

The #gotofail episode will become a textbook example of not just poor attention to detail, but moreover, the importance of disciplined logic, rigor, elegance, and fundamental coding theory.

A still deeper lesson in all this is the fragility of software. Prof Arie van Deursen nicely describes the iOS7 routine as “brittle”. I want to suggest that all software is tragically fragile. It takes just one line of silly code to bring security to its knees. The sheer non-linearity of software (the ability for one line of software anywhere in a hundred million lines to have unbounded impact on the rest of the system) is what separates development from conventional engineering practice. Software doesn’t obey the laws of physics. No non-trivial software can ever be fully tested, and we have gone too far for the software we live with to be comprehensively proofread. We have yet to build the sorts of software tools and best practice and habits that would merit the title “engineering”.

I’d like to close with a philosophical musing that might have appealed to my old mentors at Telectronics. Post-modernists today can rejoice that the real world has come to pivot precariously on pure text. It is weird and wonderful that technicians are arguing about the layout of source code — as if they are poetry critics.

We have come to depend daily on great obscure texts, drafted not by people we can truthfully call “engineers” but by a largely anarchic community we would be better off calling playwrights.

Stephen Wilson, “gotofail and a defence of purists”, Lockstep, 2014-02-26.