Some people are claiming that the Heartbleed bug proves that open source software is a failure. ESR quickly addresses that idiotic claim:

I actually chuckled when I read rumor that the few anti-open-source advocates still standing were crowing about the Heartbleed bug, because I’ve seen this movie before after every serious security flap in an open-source tool. The script, which includes a bunch of people indignantly exclaiming that many-eyeballs is useless because bug X lurked in a dusty corner for Y months, is so predictable that I can anticipate a lot of the lines.

The mistake being made here is a classic example of Frederic Bastiat’s “things seen versus things unseen”. Critics of Linus’s Law overweight the bug they can see and underweight the high probability that equivalently positioned closed-source security flaws they can’t see are actually far worse, just so far undiscovered.

That’s how it seems to go whenever we get a hint of the defect rate inside closed-source blobs, anyway. As a very pertinent example, in the last couple months I’ve learned some things about the security-defect density in proprietary firmware on residential and small business Internet routers that would absolutely curl your hair. It’s far, far worse than most people understand out there.

[…]

Ironically enough this will happen precisely because the open-source process is working … while, elsewhere, bugs that are far worse lurk in closed-source router firmware. Things seen vs. things unseen…

Returning to Heartbleed, one thing conspicuously missing from the downshouting against OpenSSL is any pointer to an implementation that is known to have a lower defect rate over time. This is for the very good reason that no such empirically-better implementation exists. What is the defect history on proprietary SSL/TLS blobs out there? We don’t know; the vendors aren’t saying. And we can’t even estimate the quality of their code, because we can’t audit it.

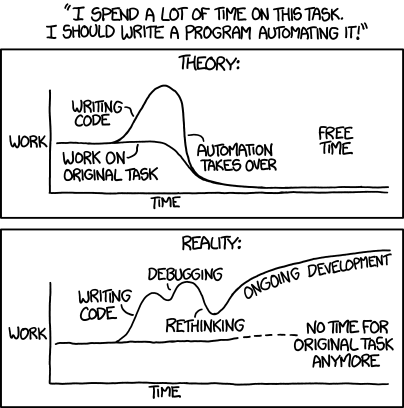

The response to the Heartbleed bug illustrates another huge advantage of open source: how rapidly we can push fixes. The repair for my Linux systems was a push-one-button fix less than two days after the bug hit the news. Proprietary-software customers will be lucky to see a fix within two months, and all too many of them will never see a fix at all.

Update: There are lots of sites offering tools to test whether a given site is vulnerable to the Heartbleed bug, but you need to step carefully, as there’s a thin line between what’s legal in some countries and what counts as an illegal break-in attempt:

Websites and tools that have sprung up to check whether servers are vulnerable to OpenSSL’s mega-vulnerability Heartbleed have thrown up anomalies in computer crime law on both sides of the Atlantic.

Both the US Computer Fraud and Abuse Act and its UK equivalent the Computer Misuse Act make it an offence to test the security of third-party websites without permission.

Testing to see what version of OpenSSL a site is running, and whether it also supports the vulnerable Heartbeat protocol, would be legal. But doing anything more active, without permission from website owners, would take security researchers onto the wrong side of the law.

And you shouldn’t just rush out and change all your passwords right now (you’ll probably need to do it, but the timing matters):

Heartbleed is a catastrophic bug in widely used OpenSSL that creates a means for attackers to lift passwords, crypto-keys and other sensitive data from the memory of secure server software, 64KB at a time. The mega-vulnerability was patched earlier this week, and software should be updated to use the new version, 1.0.1g. But to fully clean up the problem, admins of at-risk servers should generate new public-private key pairs, destroy their session cookies, and update their SSL certificates before telling users to change every potentially compromised password on the vulnerable systems.
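For admins who want a quick first look at their own machines, the version boundary mentioned above can be checked locally without probing anyone else's server. The following is a minimal sketch, not a definitive test: the function names `is_heartbleed_version` and `local_openssl_version` are made up for illustration, and the check assumes the affected upstream releases are 1.0.1 through 1.0.1f (it ignores the 1.0.2 betas). Distributions often backport the fix without changing the version string, and builds compiled without heartbeat support are not affected, so a match here means "investigate further", not "confirmed vulnerable".

```python
import re
import ssl

# Assumption: upstream releases 1.0.1 through 1.0.1f carry the bug;
# 1.0.1g is the fixed release named in the quoted article.
_VULNERABLE = re.compile(r"^1\.0\.1[a-f]?$")


def is_heartbleed_version(version: str) -> bool:
    """Return True if a bare OpenSSL version string is in 1.0.1 .. 1.0.1f."""
    return bool(_VULNERABLE.match(version.strip()))


def local_openssl_version() -> str:
    """Return the bare version of the OpenSSL that Python is linked against.

    ssl.OPENSSL_VERSION looks like 'OpenSSL 1.0.1f 6 Jan 2014', so the
    second whitespace-separated field is the version itself.
    """
    parts = ssl.OPENSSL_VERSION.split()
    return parts[1] if len(parts) > 1 else ""
```

Calling `is_heartbleed_version(local_openssl_version())` only reports on the library the Python interpreter itself uses; a web server on the same host may be linked against a different copy, so the package manager's changelog remains the better source for whether the fix has been applied.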