April 20, 2024

QotD: Cyber-addiction

Researchers at the University of London Institute of Psychiatry say the distractions of email and such exact a toll on intellectual performance similar to that of marijuana. The study of 1,100 volunteers found that attention and concentration could be so frazzled by answering and managing calls and messages that IQ temporarily dropped by 10 points. The resulting loss of focus due to “Crackberry”, in fact, was judged to be worse than that experienced by pot smokers.

This, of course, cannot really be a surprise. It is a great hallmark of modern life that over-indulgence in practically anything can be turned into pathology given enough time and clinical studies.

Jeff A. Taylor, Reason Express, 2005-04-26.

April 17, 2024

QotD: The mid-life crisis, male and female versions

Most men get over the strippers-and-sports-cars overreaction pretty quickly, generally to be replaced by a new outlook on life. The guys who have come through the midlife crisis are generally a lot better people — more focused, more outgoing, far less materialistic — because they’ve taken up, however briefly, the perspective of Eternity. If you’re religious, you wonder if you’ll merit heaven. If you’re not, you wonder how you’ll be remembered. Either way, you start thinking about the kind of world you want to leave behind you, and what you’re going to do to achieve it with whatever time is left to you.

Which is why I’ve found the COVID overreaction so bizarre. Realizing your own mortality changes things. You can always tell, for instance, when it has happened to a younger person — when they come home, combat vets often act like middle-aged men going through a midlife crisis. Readjustment to civilian life is hard. Read the great war narratives, and it’s clear that none of them ever really “got over it”. Robert Graves and Ernst Junger, for instance, both lived to ripe old ages (90 and 103, respectively), and were titans in fields far removed from battle … and yet, the war WAS their lives, in some way we who haven’t been through it will never understand, and it comes through in every line they wrote.

If the Covidians were really freaking out about COVID, then, I’d expect one of two broad types of reaction: Either party-hearty midlife crisis mode, or a new determination to get on with whatever’s left of life. Obviously neither of those are true, and I just can’t grasp it — these might be your last few weeks on Earth, and that’s how you’re going to spend them? Sitting in your apartment like a sheep, wearing a mask and eating takeout, glued to a computer screen?

If you want a measure of just how feminized our society has become, there you go. Call this misogyny if you must, but it’s an easily observed fact of human nature — indeed, it has been observed, in every time, place, and culture of which we have knowledge — that post-menopausal women go a bit batty. Though a man might know for certain that he dies tomorrow, he can still keep plugging away today, because he’s programmed to find real meaning in his “work” — we are, after all, running our snazzy new mental software over kludgy old caveman hardware.

Women aren’t like that. They have one “job”, just one, and when they can’t do it anymore, they get weird. In much the same way high-end sports cars would cease to exist if middle aged men ceased to exist, so there are entire aspects of culture that don’t make sense in any other way except: These are channels for the energies of post-menopausal, and therefore surplus-to-requirements, women. You could go so far as to say that pretty much everything we call culture — traditions, history, customs — exists for that reason. Women go from being the bearers, to being the custodians, of the tribe’s future.

Severian, “Life’s Back Nine”, Rotten Chestnuts, 2021-05-11.

April 15, 2024

Is “Big Trans” in retreat?

In the latest Weekly Dish, Andrew Sullivan considers just how much things have changed in recent years, especially with the publication of the Cass Report on the true medical situation for children being prescribed puberty blocking or opposite sex hormones … and it really doesn’t match the rhetoric we’ve been hearing from activists over the last few years:

Tribalization does funny things to people. If you’d told me a decade ago that within a few years, Republicans would be against Ukraine defending itself from a Russian invasion, and Democrats would be pulling the Full Churchill to counter the Kremlin, I’d have gently asked what sativa strain you were smoking.

If you’d told me the Democrats would soon be the party most protective of the CIA and the FBI, and that Republicans would regard them as part of an evil “deep state,” ditto. And who would have thought that a president accused in 2017 of having “no real ideology [but] white supremacy” would today be doubling his support with black voters, and tripling it with black men? Who would have bet the Dems would go all-in on Big Pharma when it came to Covid vaccines? And who would have thought Republicans who long carried little copies of the Constitution in their suit pockets would lead a riot to prevent the peaceful transfer of power? You live and learn.

But would anyone have predicted that the Democrats and the left in general would soon favor a vast, completely unregulated, for-profit medical industry that would conduct a vast, new experimental treatment on children with drugs that were off-label and without any clinical trials to prove their effectiveness and safety? In the 2016 presidential race, both Dem contenders railed against Big Pharma, with Bernie going as far as calling the industry “a health hazard for the American people.” Back in 2009, you saw MSM stories like this:

The Food and Drug Administration said adults using prescription testosterone gel must be extra careful not to get any of it on children to avoid causing serious side effects. These include enlargement of the genital organs, aggressive behavior, early aging of the bones, premature growth of pubic hair, and increased sexual drive. Boys and girls are both at risk. The agency ordered its strongest warning on the products — a so-called black box.

Nowadays, it’s deemed a “genocide” if you don’t hand out these potent drugs to children almost on demand. Drugs used to castrate sex offenders and to treat adult prostate cancer have been re-purposed, off-label, to sexually reassign children before they even got through puberty. Big Pharma created lucrative “customers for life” by putting kids on irreversible drugs for a condition that could not be measured or identified by doctors and entirely self-diagnosed by … children.

And what if over 80 percent of the children subject to this experiment were of a marginalized group — gay kids? And the result of these procedures was to cure them of same-sex attraction by converting them to the opposite sex? I simply cannot imagine that any liberal or progressive would hand over gender-nonconforming children, let alone their own children, to the pharmaceutical and medical-industrial complex to be experimented on in this way.

And yet for years now, this has been the absolutely rigid left position on sex reassignments for children with gender dysphoria on the verge of puberty. And for years now, those of us who have expressed concern have been vilified, hounded, canceled and physically attacked for our advocacy. When we argued that children should get counseling and support but wait until they have matured before making irreversible, life-long medical choices they have no way of fully understanding, we were told we were bigots, transphobes and haters.

The reason we were told that children couldn’t wait and mature was that they would kill themselves if they didn’t. This is one of the most malicious lies ever told in pediatric medicine. While there is a higher chance of suicide among children with gender distress than those without, it is still extremely rare. And there is absolutely no solid evidence that treatment reduces suicide rates at all.

Don’t take this from me. The most authoritative and definitive study of the question has just been published in Britain, The Cass Report, by Hilary Cass, one of the most respected pediatricians in the country. It’s 388 pages long, crammed with references, five years in the making, based on serious research and interviews with countless doctors, parents, scientists and, most importantly, children and trans people directly affected. In the UK, its findings have been accepted by both major parties and even some of the groups who helped pioneer and enable this experiment. I urge you to read it — if only the preliminary summary.

It’s a decisive moment in this debate. After weighing all the credible evidence and data, the report concludes that puberty blockers are not reversible and not used to “take time” to consider sex reassignment, but rather irreversible precursors for a lifetime of medication. It says that gender incongruence among kids is perfectly normal and that kids should be left alone to explore their own identities; that early social transitioning is not neutral in affecting long-term outcomes; and that there is no evidence that sex reassignment for children increases or reduces suicides.

How on earth did all the American medical authorities come to support this? The report explains that as well: all the studies that purport to show positive results are plagued by profound limitations: no control group, no randomization, no double-blind studies, no subsequent follow-up with patients, or simply poor quality.

April 9, 2024

April 5, 2024

March 25, 2024

One major change in sexual behaviour since the mid-20th century

David Friedman usually blogs about economics, medieval cooking, or politics. His latest post carefully avoids (almost) all of that:

I didn’t have a convenient graphic to use for this post … but I know not to Google something like this.

My picture of sexual behavior now and in the past is based on a variety of readily observable sources — free online porn for the present, writing, both pornographic and non-pornographic but explicit, for the past. On that imperfect and perhaps misleading evidence the pattern of when oral sex was or was not common in our society in recent centuries is the opposite of what one would, on straightforward economic grounds, expect.

Casanova’s memoirs provide a fascinating picture of eighteenth century Europe, including its sexual behavior. He mentions incest, male homosexuality, lesbianism, which he regards as normal for unmarried girls:

Marton told Nanette that I could not possibly be ignorant of what takes place between young girls sleeping together.

“There is no doubt,” I said, “that everybody knows those trifles …

I do not believe he ever mentions either fellatio or cunnilingus. Neither does Fanny Hill, published in London in 1748, when Casanova was twenty-three.

Frank Harris, writing in the early 20th century, is familiar with cunnilingus, uses it as a routine part of his seduction tactics, but treats it as something sufficiently exotic so that he had to be talked into trying it by a woman unwilling to risk pregnancy. I do not think he ever mentions fellatio.

Modern online porn in contrast treats both fellatio and cunnilingus as normal parts of foreplay, what routinely comes between erotic kissing and vaginal intercourse.

One online article on the history of fellatio that I found dated the change in attitudes to after the 1976 Hite Report, which found a strongly negative attitude among women to performing it. In contrast:

Today, the act is something more like bread before dinner: noteworthy only if it’s absent. (Fifty Shades of Grey and How One Sex Act Went Mainstream)

And from another, present behavior:

Oral sex precedes and often replaces sexual intercourse because it’s perceived to be noncommittal, quick and safe. For some kids it’s a cool thing to do; for others it’s a cheap thrill. Raised in a culture in which speed is valued, kids, not surprisingly, seek instant gratification through oral sex (the girl by instantly pleasing the boy, the boy by sitting back and enjoying the ride). A seemingly facile command over the sexual landscape of one’s partner is achieved without the encumbrances of clothes, coitus and the rest of the messy business. The blow job is, in essence, the new joystick of teen sexuality. (Salon)

Contrasted with:

When I was a teenager, in the bad-taste, disco-fangled ’70s, fellatio was something you graduated into. Rooted in the great American sport of baseball, the sexual metaphors of my generation put fellatio somewhere after home base, way off in the distant plains of the outfield. In fact, skipping all the bases and going directly to fellatio was the sort of home run reserved only for racy, borderline delinquents, who enjoyed a host of licentious and forbidden activities that made them stars in the firmament of teen recklessness.

March 23, 2024

QotD: The SCIENCE was SETTLED in the 1970s

When it comes to Leftie, it’s really hard to sort out what’s intentional from what’s merely wrong, or outdated, or stupid, or some combination of the above. So while there really does seem to be some kind of coordinated push to get us to eat grass and bugs, the red meat thing is, I think, just old misinformation that Leftie can’t admit has been overtaken by events (because, of course, Leftists can never be wrong about anything). And I’ll even kinda sorta give them a pass on that, because I know a lot of medical people who learned the “red meat is bad for you” mantra back in the days and still haven’t gotten over it …

For younger readers, back in the late 70s the nutritional Powers That Be got in bed with the corn lobby. It sounds funny, but they were and are huge, the corn lobby — why do you think we’re still getting barraged with shit about ethanol, even though it’s actually much worse for the environment than plain ol’ dinosaur juice, when you factor in all the “greenhouse emissions” from growing and harvesting it? Anyway, ethanol wasn’t a thing back then … but corn syrup was, and so suddenly, for no reason whatsoever, the PTB decided that fat was bad and carbohydrates were good.

Teh Science (TM) for this was as bogus and politicized as all the other Teh Science (TM) these days, but since we still had a high degree of social and institutional trust back then — living in a country that’s still 85% White will do that — nobody questioned it, and so suddenly everything had to be “fat free”, lest you get high blood pressure and colon cancer and every other damn thing (ever notice how, with Teh Science (TM), everything they decide is bad suddenly correlates with everything that has ever been bad? Funny, that). But since fat is what makes food taste good, they had to find a tasty substitute … and whaddya know, huge vats full of corn syrup just kinda happened to be there. Obesity rates immediately skyrocketed; who’d have thunk it?

… but again, this isn’t a deliberate thing with your average Leftie. You know how they are about Teh Science (TM), even Teh Science (TM) produced by people who thought polyester bellbottoms were a great look, which alone should tell you everything you need to know. They just learned “red meat is bad”, and so, being the helpful sorts they are, decided to boss you around about it. You know, for your own good.

Severian, “Friday Mailbag / Grab Bag”, Rotten Chestnuts, 2021-06-25.

March 22, 2024

Four years later

Kulak hits the highlights of the last four years in government overstretch, civil liberties shrinkage, the rise of tyrants local and national, and the palpably still-growing anger of the victims:

4 years ago, at this exact moment, we were in the “two weeks” that were supposed to flatten the Curve of Covid.

4 years ago you were still a “conspiracy theorist” if you thought it would be anything more than a minor inconvenience that would last less than a month.

Of course if you predicted that this would not last 2 weeks, but over 2 years; that within 2 months anti-lockdown protests would end in storming of state houses and false-flag FBI manufactured kidnapping attempts of Governors; that within 3, riots would burn dozens of American cities; that the election would be inconclusive; that matters would go before the US Supreme Court, again; that a riot/mass entrapment would take place within the halls of congress … And then that this was just the Beginning …

That Big-Pharma would rush a vaccine which may well have been more dangerous than the virus; that Australia and various countries would build concentration camps for the unvaccinated; that nearly all employers would be pressured or mandated to FORCE this vaccine on their employees; that vaccine passports would be implemented to track your biological status; that Canada and several other countries would implement travel restrictions on the unvaccinated and collude with their neighbors to prevent their population escaping; and then that, nearly 2 years from “2 weeks to slow the spread”, Canadians!? would mount one of the most logistically complex protests in human history, in the dead of winter, besieging Ottawa and blockading the US border to all trade in an apocalyptic showdown to break free of lockdowns …

Well … not even Alex Jones predicted all of that, though he got a remarkable amount of it.

Indeed the reverence with which Jones is now treated, a Cassandra-like oracle who predicts the future with seemingly (and memeably) 100% clairvoyance only to be doomed to disbelief. That alone would have been unpredictable, or unbelievable in those waning days of the long 2019, those first 2-3 months when you could imagine 2020 would MERELY be a Trumpianly heated election cycle like 2016, and not a moment Fukuyama’s veil threatened to tear and History pour back into the world.

Oh, and also the bloodiest European war since the death of Stalin broke out.

March 14, 2024

“The dark world of pediatric gender ‘medicine’ in Canada”

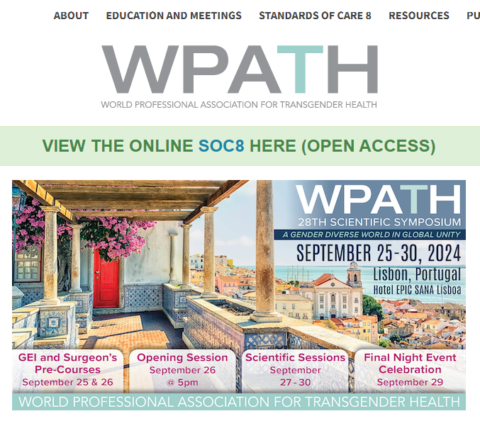

The release of internal documents from the World Professional Association for Transgender Health (WPATH) revealed just how little science went into many or most juvenile gender transitions and how much the process was being driven politically rather than scientifically. Shannon Douglas Boschy digs into how the WPATH’s methods are implemented in Canada:

An undercover investigation at a Quebec gender clinic recently documented that a fourteen-year-old girl was prescribed testosterone for the purpose of medical gender transition within ten minutes of seeing a doctor. She received no other medical or mental health assessment and no information on side-effects. This is status quo in the dark world of pediatric gender “medicine” in Canada.

On March 5th Michael Shellenberger, one of the journalists who broke the Twitter Files in 2022, along with local Ottawa journalist Mia Hughes, released shocking leaks from inside WPATH, the organization that proclaims itself the global scientific and medical authority on gender affirming care. The World Professional Association for Transgender Health is the same organization that the Quebec gender clinic, and Ottawa’s CHEO, cite as their authority for the provision of sex-change interventions for children.

These leaks expose WPATH as nothing more than a self-appointed activist body overseeing and encouraging experimental hormonal and surgical sex-change interventions on children and vulnerable adults. Shellenberger and Hughes reveal that members fully understand that children cannot consent to loss of fertility and of sexual function, nor can they understand the lifetime risks that will result from gender-affirming medicalization, and they ignore these breaches of medical ethics.

The report reveals communication from an “Internal messaging forum, as well as a leaked internal panel discussion, demonstrat(ing) that the world-leading transgender healthcare group is neither scientific nor advocating for ethical medical care. These internal communications reveal that WPATH advocates for many arbitrary medical practices, including hormonal and surgical experimentation on minors and vulnerable adults. Its approach to medicine is consumer-driven and pseudoscientific, and its members appear to be engaged in political activism, not science.”

These findings have profound implications for medical and public education policies in Canada and raise serious concerns about the practices of secret affirmations and social transitions of children in local schools.

These leaks follow on the recent publication of a British Medical Journal study (BMJ Mental Health), covering 25 years of data, dispelling the myth that without gender-affirmation children will kill themselves. The study, comparing over 2,000 patients to a control population, found that after factoring for other mental health issues, there was no convincing evidence that children and youth who are not gender-affirmed were at higher risk of suicide than the general population.

In the last week, a second study was released, this one from the American Urological Association, showing that post-surgical transgender-identified men who underwent vaginoplasty have twice the rate of suicide attempts as before affirmation surgery, and that trans-identified women who underwent phalloplasty showed no change between pre-operative and post-operative rates of suicide.

These and other studies are now thoroughly debunking the emotional blackmail myths promoted by WPATH, which suggest that, in the absence of sex-change interventions, gender-distressed children are at high risk of taking their own lives.

March 13, 2024

QotD: Filthy coal

… coal smoke had dramatic implications for daily life even beyond the ways it reshaped domestic architecture, because in addition to being acrid it’s filthy. Here, once again, [Ruth] Goodman’s time running a household with these technologies pays off, because she can speak from experience:

So, standing in my coal-fired kitchen for the first time, I was feeling confident. Surely, I thought, the Victorian regime would be somewhere halfway between the Tudor and the modern. Dirt was just dirt, after all, and sweeping was just sweeping, even if the style of brushes had changed a little in the course of five hundred years. Washing-up with soap was not so very different from washing-up with liquid detergent, and adding soap and hot water to the old laundry method of bashing the living daylights out of clothes must, I imagined, make it a little easier, dissolving dirt and stains all the more quickly. How wrong could I have been.

Well, it turned out that the methods and technologies necessary for cleaning a coal-burning home were fundamentally different from those for a wood-burning one. Foremost, the volume of work — and the intensity of that work — were much, much greater.

The fundamental problem is that coal soot is greasy. Unlike wood soot, which is easily swept away, it sticks: industrial cities of the Victorian era were famously covered in the residue of coal fires, and with anything but the most efficient of chimney designs (not perfected until the early twentieth century), the same thing also happens to your interior. Imagine the sort of sticky film that settles on everything if you fry on the stove without a sufficient vent hood, then make it black and use it to heat not just your food but your entire house; I’m shuddering just thinking about it. A 1661 pamphlet lamented coal smoke’s “superinducing a sooty Crust or Furr upon all that it lights, spoyling the moveables, tarnishing the Plate, Gildings and Furniture, and corroding the very Iron-bars and hardest Stones with those piercing and acrimonious Spirits which accompany its Sulphure.” To clean up from coal smoke, you need soap.

“Coal needs soap?” you may say, suspiciously. “Did they … not use soap before?” But no, they (mostly) didn’t, a fact that (like the famous “Queen Elizabeth bathed once a month whether she needed it or not” line) has led to the medieval and early modern eras’ entirely undeserved reputation for dirtiness. They didn’t use soap, but that doesn’t mean they didn’t clean; instead, they mostly swept ash, dust, and dirt from their houses with a variety of brushes and brooms (often made of broom) and scoured their dishes with sand. Sand-scouring is very simple: you simply dampen a cloth, dip it in a little sand, and use it to scrub your dish before rinsing the dirty sand away. The process does an excellent job of removing any burnt-on residue, and has the added advantage of removing a micro-layer of your material to reveal a new sterile surface. It’s probably better than soap at cleaning the grain of wood, which is what most serving and eating dishes were made of at the time, and it’s also very effective at removing the poisonous verdigris that can build up on pots made from copper alloys like brass or bronze when they’re exposed to acids like vinegar. Perhaps more importantly, in an era where every joule of energy is labor-intensive to obtain, it works very well with cold water.

The sand can also absorb grease, though a bit of grease can actually be good for wood or iron (I wash my wooden cutting boards and my cast-iron skillet with soap and water,1 but I also regularly oil them). Still, too much grease is unsanitary and, frankly, gross, which premodern people recognized as much as we do, and particularly greasy dishes, like dirty clothes, might also be cleaned with wood ash. Depending on the kind of wood you’ve been burning, your ashes will contain up to 10% potassium hydroxide (KOH), better known as lye, which reacts with your grease to create a soap. (The word potassium actually derives from “pot ash,” the ash from under your pot.) Literally all you have to do to clean this way is dump a handful of ashes and some water into your greasy pot and swoosh it around a bit with a cloth; the conversion to soap is very inefficient (though if you warm it a little over the fire it works better), but if your household runs on wood you’ll never be short of ashes. As wood-burning vanished, though, it made more sense to buy soap produced industrially through essentially the same process (though with slightly more refined ingredients for greater efficiency) and to use it for everything.

Washing greasy dishes with soap rather than ash was a matter of what supplies were available; cleaning your house with soap rather than a brush was an unavoidable fact of coal smoke. Goodman explains that “wood ash also flies up and out into the room, but it is not sticky and tends to fall out of the air and settle quickly. It is easy to dust and sweep away. A brush or broom can deal with the dirt of a wood fire in a fairly quick and simple operation. If you try the same method with coal smuts, you will do little more than smear the stuff about.” This simple fact changed interior decoration for good: gone were the untreated wood trims and elaborate wall-hangings — “[a] tapestry that might have been expected to last generations with a simple routine of brushing could be utterly ruined in just a decade around coal fires” — and anything else that couldn’t withstand regular scrubbing with soap and water. In their place were oil-based paints and wallpaper, both of which persist in our model of “traditional” home decor, as indeed do the blue and white Chinese-inspired glazed ceramics that became popular in the 17th century and are still going strong (at least in my house). They’re beautiful, but they would never have taken off in the era of scouring with sand; it would destroy the finish.

But more important than what and how you were cleaning was the sheer volume of the cleaning. “I believe,” Goodman writes towards the end of the book, “there is vastly more domestic work involved in running a coal home in comparison to running a wood one.” The example of laundry is particularly dramatic, and her account is extensive enough that I’ll just tell you to read the book, but it goes well beyond that:

It is not merely that the smuts and dust of coal are dirty in themselves. Coal smuts weld themselves to all other forms of dirt. Flies and other insects get entrapped in it, as does fluff from clothing and hair from people and animals. To thoroughly clear a room of cobwebs, fluff, dust, hair and mud in a simply furnished wood-burning home is the work of half an hour; to do so in a coal-burning home — and achieve a similar standard of cleanliness — takes twice as long, even when armed with soap, flannels and mops.

And here, really, is why Ruth Goodman is the only person who could have written this book: she may be the only person who has done any substantial amount of domestic labor under both systems who could write. Like, at all. Not that there weren’t intelligent and educated women (and it was women doing all this) in early modern London, but female literacy was typically confined to classes where the women weren’t doing their own housework, and by the time writing about keeping house was commonplace, the labor-intensive regime of coal and soap was so thoroughly established that no one had a basis for comparison.

Jane Psmith, “REVIEW: The Domestic Revolution by Ruth Goodman”, Mr. and Mrs. Psmith’s Bookshelf, 2023-05-22.

1. Yeah, I know they tell you not to do this because it will destroy the seasoning. They’re wrong. Don’t use oven cleaner; anything you’d use to wash your hands in a pinch isn’t going to hurt long-chain polymers chemically bonded to cast iron.

March 11, 2024

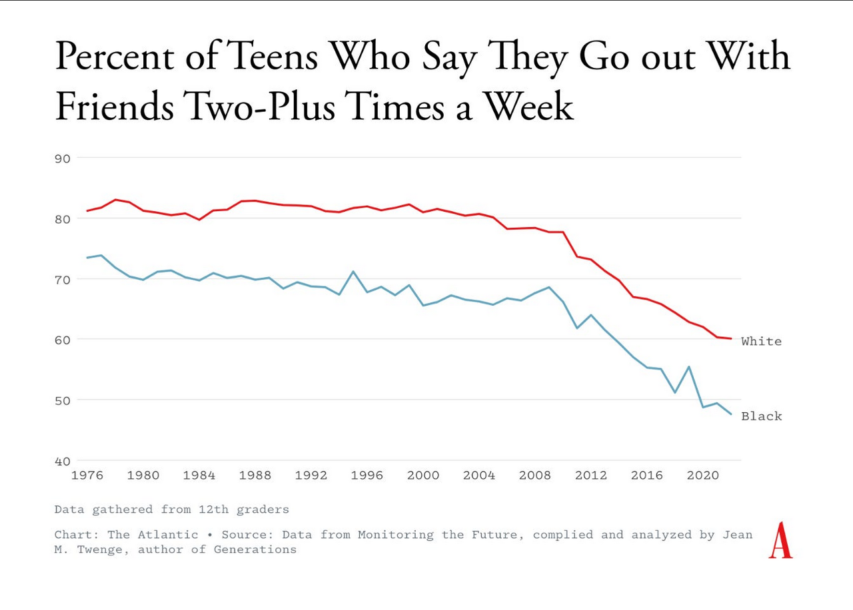

“Is it possible that the new therapy culture and the emphasis on introspection is actually making things worse?”

In Quillette, Brandon McMurtrie asks us to consider why, with more people in therapy than ever before, the overall mental health of the population is declining:

Why has mental health got worse given the prevailing emphasis on self-care and accurately knowing and expressing oneself? And why do people and groups most inclined to focus on their identity appear to be the most distressed, confused, and mentally unwell? Is it possible that the new therapy culture and the emphasis on introspection is actually making things worse?

I am not the first to notice these developments — Abigail Shrier’s new book Bad Therapy has carefully delineated a similar argument. Her arguments are elsewhere supported by research on semantic satiation and ironic uncertainty, the effects of mirror gazing, the effects of meditation, and how all this relates to the constant introspection encouraged by therapy culture and concept creep.

Satiation and Its Effects

Semantic satiation is the uncanny sensation that occurs when a word or sentence is repeated again and again, until it appears to become foreign and nonsensical to the speaker. You may have done this as a child, repeating a word in quick succession until it no longer seems to be recognizable. It’s a highly reliable effect — you can try it now. Repeat a word to yourself quickly, out loud, for an extended period, and really focus on the word and its meaning. Under these circumstances, most people experience semantic satiation.

This well-studied phenomenon — sometimes called “inhibition”, “fatigue”, “lapse of meaning”, “adaptation”, or “stimulus satiation” — applies to objects as well as language. Studies have found that compulsive staring at something can result in dissociation and derealization. Likewise, repeatedly visually checking something can make us uncertain of our perception, which results, paradoxically, in uncertainty and poor memory of the object. This may also occur with facial recognition.

Interestingly, a similar phenomenon can occur in the realm of self-perception. Mirror gazing (staring into one’s own eyes in the mirror) may induce feelings of depersonalization and derealization, causing distortions of self-perception and bodily sensation. This persistent self-inspection can result in a person feeling that they don’t recognize their own face, that they no longer feel real, that their body no longer feels the same as it once did, or that it is not their body at all. Mirror-gazing so reliably produces depersonalization and derealization (and a wide range of other anomalous effects), that it can be used in experimental manipulations to trigger these symptoms for research purposes.

[…]

The Satiation of Gender Identity

The number of people identifying as non-binary or trans has skyrocketed in recent years, and a growing number of schools are now teaching gender theory and discussing it with children — sometimes in kindergarten, more often in primary school, but especially in middle- and high-school (though in other schools it is entirely banned). While this may be beneficial for those already struggling with gender confusion, it may also present an avenue for other children to ruminate and become confused via “identity satiation”.

The kind of gender theory increasingly taught in schools encourages children to spend extended periods of time ruminating on self-concepts that most would not otherwise have struggled with. They are given exercises that encourage them to doubt their own unconscious intuitions about themselves, and to ruminate on questions like “Do I feel like a boy?” and “What does it mean to feel like a boy?” and “I thought I was a boy but what if I am not?”

Such questions are often confusing to answer and difficult to express, even for adults unaffected by gender dysphoria. But asking children to ruminate in this way may lead to confusion and depersonalization-derealization via the mechanisms described above. “Identity satiation” may then lead them to decide they are non-binary or trans, especially when identifying as such is rewarded with social recognition and social support. Many people who subsequently de-transitioned have described this process: “I never thought about my gender or had a problem with being a girl before”.

QotD: The profound asshole-ishness of the “best of the best”

Ever met a pro athlete? How about a fighter pilot, or a trauma surgeon? I’ve met a fair amount of all of them, and unless they’re on their very best behavior they all tend to come off as raging assholes. And they get worse the higher up the success ladder they go — the pro athletes I’ve met were mostly in the minors, and though they were big-league assholes they were nothing compared to the few genuine “you see them every night on Sports Center” guys I met. Same way with fighter pilots — I never met an astronaut, but I had buddies at NASA back in the days who met lots, and they told me that even other fighter jocks consider astronauts to be world-class assholes …

The truth is, they’re not — or, at least, they’re no more so than the rest of the population. It’s just that they have jobs where total, utter, profoundly narcissistic self-confidence is a must. It’s what keeps them alive, in the pilots’ case at least, and it’s what keeps you alive if, God forbid, you should ever need the trauma surgeon. Same way with the sportsballers. I can say with 100% metaphysical certainty that there are better basketball players than Michael Jordan, better hitters than Mike Trout, better passers than Tom Brady, out there. There are undoubtedly lots of them, if by “better” you mean “possessed of more raw physical talent at the neuronal level”. What those guys don’t have, but Jordan, Brady, Trout et al do have, is the mental wherewithal to handle failure.

Everyone knows of someone like Billy Beane, the Moneyball guy. So good at football that he was recruited to replace John Elway (!!) at Stanford, but who chose to play baseball instead … and became one of the all-time busts. He had all the talent in the world, but his head wasn’t on straight. Not to put too fine a point on it, he doubted himself. He got to Double A (or wherever) and faced a pitcher who mystified him. Which made him think “Maybe I’m not as good as I think I am?” … and from that moment, he was toast as a professional athlete. Contrast this to the case of Mike Piazza, the consensus greatest offensive catcher of all time. A 62nd round draftee, only picked up as a favor to a family friend, etc. Beane was a “better” athlete, but Piazza had a better head. Striking out didn’t make him doubt himself; it made him angry, and that’s why Piazza’s in the Hall of Fame and Beane is a legendary bust.

The problem though, for us normal folks, is that the affect in all cases is pretty much the same … and it’s really hard to turn off, which is why so many pro athletes (fighter jocks, surgeons, etc.) who are actually nice guys come off as assholes. It’s hard to turn off … but as it turns out, it’s pretty easy to turn ON, and that’s in effect what Game teaches.

Severian, “Mental Middlemen II: Sex and the City and Self-Confidence”, Rotten Chestnuts, 2021-05-06.

March 10, 2024

March 9, 2024

Salt – mundane, boring … and utterly essential

In the latest Age of Invention newsletter, Anton Howes looks at the importance of salt in history:

There was a product in the seventeenth century that was universally considered a necessity as important as grain and fuel. Controlling the source of this product was one of the first priorities for many a military campaign, and sometimes even a motivation for starting a war. Improvements to the preparation and uses of this product would have increased population size and would have had a general and noticeable impact on people’s living standards. And this product underwent dramatic changes in the seventeenth and eighteenth centuries, becoming an obsession for many inventors and industrialists, while seemingly not featuring in many estimates of historical economic output or growth at all.

The product is salt.

Making salt does not seem, at first glance, all that interesting as an industry. Even ninety years ago, when salt was proportionately a much larger industry in terms of employment, consumption, and economic output, the author of a book on the history of salt-making noted how a friend had advised keeping the word salt out of the title, “for people won’t believe it can ever have been important”.1 The bestselling Salt: A World History by Mark Kurlansky, published over twenty years ago, actively leaned into the idea that salt was boring, becoming so popular because it created such a surprisingly compelling narrative around an article that most people consider commonplace. (Kurlansky, it turns out, is behind essentially all of those one-word titles on the seemingly prosaic: cod, milk, paper, and even oysters).

But salt used to be important in a way that’s almost impossible to fully appreciate today.

Try to consider what life was like just a few hundred years ago, when food and drink alone accounted for 75-85% of the typical household’s spending — compared to just 10-15%, in much of the developed world today, and under 50% in all but a handful of even the very poorest countries. Anything that improved food and drink, even a little bit, was thus a very big deal. This might be said for all sorts of things — sugar, spices, herbs, new cooking methods — but salt was more like a general-purpose technology: something that enhances the natural flavours of all and any foods. Using salt, and using it well, is what makes all the difference to cooking, whether that’s judging the perfect amount for pasta water, or remembering to massage it into the turkey the night before Christmas. As chef Samin Nosrat puts it, “salt has a greater impact on flavour than any other ingredient. Learn to use it well, and food will taste good”. Or to quote the anonymous 1612 author of A Theological and Philosophical Treatise of the Nature and Goodness of Salt, salt is that which “gives all things their own true taste and perfect relish”. Salt is not just salty, like sugar is sweet or lemon is sour. Salt is the universal flavour enhancer, or as our 1612 author put it, “the seasoner of all things”.

Making food taste better was thus an especially big deal for people’s living standards, but I’ve never seen any attempt to chart salt’s historical effects on them. To put it in unsentimental economic terms, better access to salt effectively increased the productivity of agriculture — adding salt improved the eventual value of farmers’ and fishers’ produce — at a time when agriculture made up the vast majority of economic activity and employment. Before 1600, agriculture alone employed about two thirds of the English workforce, not to mention the millers, butchers, bakers, brewers and assorted others who transformed seeds into sustenance. Any improvements to the treatment or processing of food and drink would have been hugely significant — something difficult to fathom when agriculture accounts for barely 1% of economic activity in most developed economies today. (Where are all the innovative bakers in our history books?! They existed, but have been largely forgotten.)

And so far we’ve only mentioned salt’s direct effects on the tongue. It also increased the efficiency of agriculture by making food last longer. Properly salted flesh and fish could last for many months, sometimes even years. Salting reduced food waste — again consider just how much bigger a deal this used to be — and extended the range at which food could be transported, providing a whole host of other advantages. Salted provisions allowed sailors to cross oceans, cities to outlast sieges, and armies to go on longer campaigns. Salt’s preservative properties bordered on the necromantic: “it delivers dead bodies from corruption, and as a second soul enters into them and preserves them … from putrefaction, as the soul did when they were alive”.2

Because of salt’s preservative properties, many believed that salt had a crucial connection with life itself. The fluids associated with life — blood, sweat and tears — are all salty. And nowhere seemed to be more teeming with life than the open ocean. At a time when many believed in the spontaneous generation of many animals from inanimate matter, like mice from wheat or maggots from meat, this seemed a more convincing point. No house was said to generate as many rats as a ship passing over the salty sea, while no ship was said to have more rats than one whose cargo was salt.3 Salt seemed to have a kind of multiplying effect on life: something that could be applied not only to seasoning and preserving food, but to growing it.

Livestock, for example, were often fed salt: in Poland, thanks to the Wieliczka salt mines, great stones of salt lay all through the streets of Krakow and the surrounding villages so that “the cattle, passing to and fro, lick of those salt-stones”.4 Cheshire in north-west England, with salt springs at Nantwich, Middlewich and Northwich, has been known for at least half a millennium for its cheese: salt was an essential dietary supplement for the milch cows, also making it (less famously) one of the major production centres for England’s butter, too. In 1790s Bengal, where the East India Company monopolised salt and thereby suppressed its supply, one of the company’s own officials commented on the major effect this had on the region’s agricultural output: “I know nothing in which the rural economy of this country appears more defective than in the care and breed of cattle destined for tillage. Were the people able to give them a proper quantity of salt, they would … probably acquire greater strength and a larger size.”5 And for anyone keeping pigeons, great lumps of baked salt were placed in dovecotes to attract them and keep them coming back, while the dung of salt-eating pigeons, chickens, and other kept birds was considered an excellent fertiliser.6

1. Edward Hughes, Studies in Administration and Finance 1558 – 1825, with Special Reference to the History of Salt Taxation in England (Manchester University Press, 1934), p.2

2. Anon., Theological and philosophical treatise of the nature and goodness of salt (1612), p.12

3. Blaise de Vigenère (trans. Edward Stephens), A Discovrse of Fire and Salt, discovering many secret mysteries, as well philosophical, as theological (1649), p.161

4. “A relation, concerning the Sal-Gemme-Mines in Poland”, Philosophical Transactions of the Royal Society of London 5, 61 (July 1670), p.2001

5. Quoted in H. R. C. Wright, “Reforms in the Bengal Salt Monopoly, 1786-95”, Studies in Romanticism 1, no. 3 (1962), p.151

6. Gervase Markham, Markhams farwell to husbandry or, The inriching of all sorts of barren and sterill grounds in our kingdome (1620), p.22

March 8, 2024

A fresh look at the PUA “bible”

In UnHerd, Kat Rosenfield considers the original pick-up artist bible, The Game by Neil Strauss, in light of more than a decade of changes in how moderns approach relationships with the opposite sex:

A decade later, I’m struck by the astonishing prescriptiveness of this line: the notion that any sexual encounter preceded by flirtation, negotiation, or indeed any assessment of a suitor’s desirability should be understood as “less-than-ideal” — and that any man who seeks to make himself desirable to an as-yet-uncertain woman is doing something inherently sleazy. Granted, the anti-Game backlash began in the form of reasonable scrutiny of controversial seduction techniques like “negging” (a slightly backhanded compliment deployed for the sake of flirtation).

But since then it has morphed into something much stranger: the idea that anything a man does to impress a woman, from basic grooming to speaking in complete sentences, should be viewed with suspicion. Behind this is the same low-trust mindset that leads women to treat every date as a hunt for the red flags that reveal her suitor as a secret monster. If he compliments you? That’s lovebombing, which means he’s an abuser. If he doesn’t compliment you, that’s withholding, which also means he’s an abuser. Other alleged “red flags” include oversharing, undersharing, paying for the date, not paying for the date, being too eager, being five minutes late, and drinking water — or worse, drinking water through a straw.

Today, the turn against pick-up artistry can be understood at least in part as a reaction against some of its more prominent contemporary practitioners, including men such as Andrew Tate, who makes Mystery look like a catch by comparison. But it is also no doubt an outgrowth of a culture in which male sexuality has effectively been characterised as inherently predatory, while female sexuality is seen as virtually non-existent. The question that seduction manuals once aimed to answer — “how do I, a shy young man, successfully and confidently approach women?” — is now, in itself, a red flag, one likely to provoke anything from squawking indignation to abject horror to bystanders wondering if they ought to call the police. That you are even thinking of approaching women just goes to show what a troglodyte you really are. What do women want? The contemporary answer appears to be: to be left alone, forever, until they die — or to meet someone in a safe and sanitised way, via dating app … although even that option is increasingly positioned as inherently dangerous.

Meanwhile, I was surprised upon revisiting The Game to realise that the strategies contained within the book are not just useful but mostly in keeping with more traditional dating and courtship advice, from “peacocking” (wearing something eye-catching or unusual that can act as a conversation starter), to passing “shit tests” (responding with humour and confidence when a woman teases you). Even the much-derided negging wasn’t originally designed with the goal of insulting or belittling women, but rather to teach men how to talk to them without fawning and drooling all over the place. In the end, the message of The Game is more or less identical to the one in popular women’s dating guides, like The Rules or He’s Just Not That Into You: that confidence is sexy, and naked desperation is a turnoff.

And while this may just be a function of one too many viewings of the BBC’s Pride & Prejudice (featuring Mr Darcy, a man in possession of £10,000 a year and an absolutely legendary negging game), I wonder if the aim of seduction guides is, paradoxically, to restore our confidence in the tension, the mystery, and the playfulness of courtship in the age of the casual hookup. Even as we rightly rejoice in the fact that society no longer stigmatises women for desiring and pursuing sex, there is surely still something to be said for subtlety — and just because we aren’t consigned to the role of the passive damsel, dropping a handkerchief on the ground in the hope that the right man will pick it up, that doesn’t mean every woman wants to be horny on main. It’s not just that announcing your desire through a megaphone can seem uncouth; it’s also a lot less exciting than the dance of lingering glances, double entendres, and simmering chemistry that characterises a mutually-desired seduction in the making. Certain people might deride this brand of sexual encounter as “less-than-ideal” for its political incorrectness, but it’s wildly popular — in novels, in films, and in the fantasies of individual women — for a reason.

Meanwhile, the contemporary dating landscape is one in which the sheer fun of dating, courtship, and, yes, falling into bed together has been largely back-burnered in favour of something at once formal and immensely self-serious. In a world of handwringing over sexual consent — in which a man just talking to a woman at a coffeeshop can trigger an emergency response protocol — the stakes of sex itself come to seem unimaginably high, a breakneck gamble where one wrong move will result in a lifetime of trauma (or, if you’re a guy, a lifetime on a list of shitty men). Add to this the proliferation of dating apps, which makes the entire romantic enterprise feel more like a job search than a playground, and the whole thing begins to seem not just fraught but inherently adversarial — a negotiation between two parties whose interests are completely at odds, who cannot trust each other, and where there’s a very real risk of terrible and irreparable harm.