We know, from experience with software, that secrecy is the enemy of quality — that software bugs, like cockroaches, shun light and flourish in darkness. So, too, with mistakes in the interpretation of scientific data; neither deliberate fraud nor inadvertent error can long survive the skeptical scrutiny of millions. The same remedy we have found in the open-source community applies – unsurprisingly, since we learned it from science in the first place. Abolish the secrecy, let in the sunlight.

Eric S. Raymond, “Open-Sourcing the Global Warming Debate”, Armed and Dangerous, 2009-11-23.

January 27, 2021

QotD: Open-source the data

September 19, 2020

The perils and pitfalls of developing open source software

In The Diff, Byrne Hobart looks at the joys and pains-in-the-ass of open source software development:

“How university open debates and discussions introduced me to open source” by opensourceway is licensed under CC BY-SA 2.0

We’re in a strange in-between state where software is increasingly essential, the most important bits of it are built by dedicated volunteers, and there’s no great way to encourage them to a) keep doing this, and b) stay motivated as the work gets less and less fun. That was my main takeaway from Nadia Eghbal’s Working in Public, published this week.

The book is a tour of the open source phenomenon: who builds projects, how they get made and updated, and why. This is an important topic! Almost all of the software I use to write this newsletter is either built from open source products or relies on them: the text is composed in Emacs, on a computer running Linux; I browse the web using Chrome, which mostly consists of open-source Chromium code; my various i-devices run Unix-based OSes (iOS is not open-source, but there are as many open-source implementations of Unix as you want); etc. All of this software Just Works, and I don’t directly pay for any of it. Clearly there are some incentives at work, or it wouldn’t exist in the first place, but generally the more complex an activity is, the harder it is to coordinate without pricing signals. Somehow, open-source projects don’t fall victim to this.

As Working in Public shows, they do face a demoralizing lifecycle. When a project starts, it’s one coder trying to solve a problem they face, and trying to solve it their way. Sometimes, there’s a good reason their preferred solution doesn’t exist yet, and the project languishes. Sometimes, the project catches on, acquiring new users, new contributors, and new bug reports and feature requests.

[…]

Software projects go through this exact cycle: at first, they’re mostly producing and building on “software capital,” code that executes the underlying logic of the process. Over time, more of the work involves integration and compatibility, and as all the easy edge cases get identified, the remaining bugs are a) disproportionately rare (or they would have been spotted by now) and b) disproportionately hard to fix (because they have to be the result of a rare and thus complicated confluence of circumstances). So, over time, a software project falls into the Baumol Trap, where high productivity in the fun stuff produces more and more un-fun work where productivity gains are hard to come by.

This is depressing for project creators. Early on, they have the triumphant experience of building exactly what they want, and solving their nagging problem. And the result is that they’re cleaning up after a bunch of requests from people who are either annoyed that it doesn’t work for them or have unsolicited feedback on how it ought to work. No wonder Linus Torvalds gets so mad. Running a successful open source project is just Good Will Hunting in reverse, where you start out as a respected genius and end up being a janitor who gets into fights.

Emphasis mine, not in the original article.

H/T to Colby Cosh for sharing the link.

October 8, 2018

QotD: The closed-source software dystopia we barely avoided

Thought experiment: imagine a future in which everybody takes for granted that all software outside a few toy projects in academia will be closed source controlled by managerial elites, computers are unhackable sealed boxes, communications protocols are opaque and locked down, and any use of computer-assisted technology requires layers of permissions that (in effect) mean digital information flow is utterly controlled by those with political and legal master keys. What kind of society do you suppose eventually issues from that?

Remember Trusted Computing and Palladium and crypto-export restrictions? RMS and Linus Torvalds and John Gilmore and I and a few score other hackers aborted that future before it was born, by using our leverage as engineers and mentors of engineers to change the ground of debate. The entire hacker culture at the time was certainly less than 5% of the population, by orders of magnitude.

And we may have mainstreamed open source just in time. In an attempt to defend their failing business model, the MPAA/RIAA axis of evil spent years pushing for digital “rights” management so pervasively baked into personal-computer hardware by regulatory fiat that those would have become unhackable. Large closed-source software producers had no problem with this, as it would have scratched their backs too. In retrospect, I think it was only the creation of a pro-open-source constituency with lots of money and political clout that prevented this.

Did we bend the trajectory of society? Yes. Yes, I think we did. It wasn’t a given that we’d get a future in which any random person could have a website and a blog, you know. It wasn’t even given that we’d have an Internet that anyone could hook up to without permission. And I’m pretty sure that if the political class had understood the implications of what we were actually doing, they’d have insisted on more centralized control. ~For the public good and the children, don’t you know.~

So, yes, sometimes very tiny groups can change society in visibly large ways on a short timescale. I’ve been there when it was done; once or twice I’ve been the instrument of change myself.

Eric S. Raymond, “Engineering history”, Armed and Dangerous, 2010-09-12.

October 21, 2017

Canada’s equivalent to the NSA releases a malware detection tool

At The Register, Simon Sharwood looks at a new security tool (in open source) released by the Communications Security Establishment (CSE, formerly known as CSEC):

Canada’s Communications Security Establishment has open-sourced its own malware detection tool.

The Communications Security Establishment (CSE) is a signals intelligence agency roughly equivalent to the United Kingdom’s GCHQ, the USA’s NSA and Australia’s Signals Directorate. It has both intelligence-gathering and advisory roles.

It also has a tool called “Assemblyline” which it describes as a “scalable distributed file analysis framework” that can “detect and analyse malicious files as they are received.”

[…]

The tool was written in Python and can run on a single PC or in a cluster. CSE claims it can process millions of files a day. “Assemblyline was built using public domain and open-source software; however the majority of the code was developed by CSE.” Nothing in it is commercial technology and the CSE says it is “easily integrated in to existing cyber defence technologies.”

The tool’s been released under the MIT licence and is available here.

The organisation says it released the code because its job is to improve Canadians’ security, and it’s confident Assemblyline will help. The CSE’s head of IT security Scott Jones has also told the Canadian Broadcasting Corporation that the release has a secondary goal of demystifying the organisation.

May 24, 2017

ESR presents Open Adventure

Eric S. Raymond was recently entrusted with the original code for ADVENT, and he’s put it up on GitLab for anyone to access:

Colossal Cave Adventure was the origin of many things; the text adventure game, the dungeon-crawling D&D (computer) game, the MOO, the roguelike genre. Computer gaming as we know it would not exist without ADVENT (as it was known in its original PDP-10 incarnation).

Long ago, you might have played this game. Or maybe you’ve just heard stories about it, or vaguely know that “xyzzy” is a magic word, or have heard people say “You are in a maze of twisty little passages, all alike”.

Though there’s a C port of the original 1977 game in the BSD game package, and the original FORTRAN sources could be found if you knew where to dig, Crowther & Woods’s final version – Adventure 2.5 from 1995 – has never been packaged for modern systems and distributed under an open-source license.

Until now, that is.

With the approval of its authors, I bring you Open Adventure. And with it some thoughts about what it means to be respectful of an important historical artifact when it happens to be software.

This is code that fully deserves to be in any museum of the great artifacts of hacker history. But there’s a very basic question about an artifact like this: should a museum preserve it in a static form as close to the original as possible, or is it more in the right spirit to encourage the folk process to continue improving the code?

Modern version control makes this question easier; you can have it both ways, keeping a pristine archival version in the history and improving it. Anyway, I think the answer to the general question is clear; if heritage code like this is relevant at all, it’s as a living and functional artifact. We respect our history and the hackers of the past best by carrying on their work and their playfulness.

March 1, 2016

BrewDog releases all their beer recipes

Lester Haines on the recent decision by BrewDog to open source their entire beer recipe list:

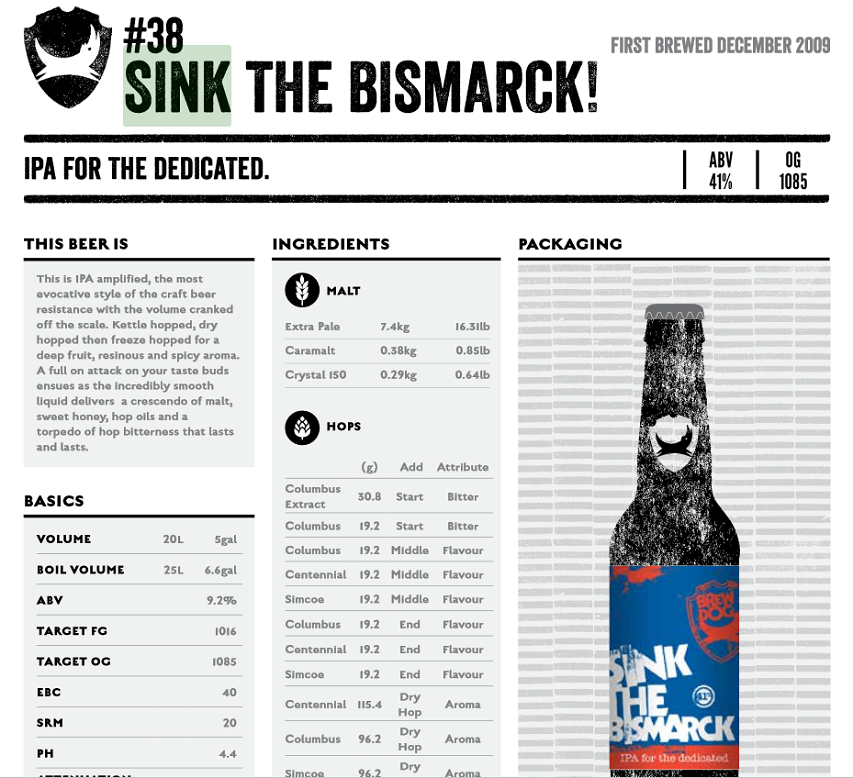

From humble home-brewing origins, James Watt and Martin Dickie have grown BrewDog to an international craft beer operation. Along the way, they’ve claimed the “world’s strongest beer” title twice, firstly with the 41 per cent ABV Sink The Bismarck!, and then with the liver-bashing 55 per cent ABV The End of History.

The recipes for both (albeit with somewhat less lethal ABVs) are available on BrewDog’s “DIY Dog” PDF (see here), along with other tempting tipples such as Tactical Nuclear Penguin and Albino Squid Assassin.

November 17, 2015

September 11, 2015

How about creating a truly open web?

Brewster Kahle on the need to ~blow up~ change the current web and recreate it with true open characteristics built in from the start:

Over the last 25 years, millions of people have poured creativity and knowledge into the World Wide Web. New features have been added and dramatic flaws have emerged based on the original simple design. I would like to suggest we could now build a new Web on top of the existing Web that secures what we want most out of an expressive communication tool without giving up its inclusiveness. I believe we can do something quite counter-intuitive: We can lock the Web open.

One of my heroes, Larry Lessig, famously said “Code is Law.” The way we code the web will determine the way we live online. So we need to bake our values into our code. Freedom of expression needs to be baked into our code. Privacy should be baked into our code. Universal access to all knowledge. But right now, those values are not embedded in the Web.

It turns out that the World Wide Web is quite fragile. But it is huge. At the Internet Archive we collect one billion pages a week. We now know that Web pages only last about 100 days on average before they change or disappear. They blink on and off in their servers.

And the Web is massively accessible – unless you live in China. The Chinese government has blocked the Internet Archive, the New York Times, and other sites from its citizens. And other countries block their citizens’ access as well every once in a while. So the Web is not reliably accessible.

And the Web isn’t private. People, corporations, countries can spy on what you are reading. And they do. We now know, thanks to Edward Snowden, that Wikileaks readers were selected for targeting by the National Security Agency and the UK’s equivalent just because those organizations could identify those Web browsers that visited the site and identify the people likely to be using those browsers. In the library world, we know how important it is to protect reader privacy. Rounding people up for the things that they’ve read has a long and dreadful history. So we need a Web that is better than it is now in order to protect reader privacy.

December 27, 2014

ESR on the origins of open source theory

Eric S. Raymond acknowledges the strong influence of evolutionary psychology on the development of open source theory:

Yesterday I realized, quite a few years after I should have, that I have never identified in public where I got the seed of the idea that I developed into the modern economic theory of open-source software – that is, how open-source “altruism” could be explained as an emergent result of selfish incentives felt by individuals. So here is some credit where credit is due.

Now, in general it should be obvious that I owed a huge debt to thinkers in the classical-liberal tradition, from Adam Smith down to F. A. Hayek and Ayn Rand. The really clueful might also notice some connection to Robert Trivers’s theory of reciprocal altruism under natural selection and Robert Axelrod’s work on tit-for-tat interactions and the evolution of cooperation.

These were all significant; they gave me the conceptual toolkit I could apply successfully once I’d had my initial insight. But there’s a missing piece – where my initial breakthrough insight came from, the moment when I realized I could apply all those tools.

The seed was in the seminal 1992 anthology The Adapted Mind: Evolutionary Psychology and the Generation of Culture. That was full of brilliant work; it laid the foundations of evolutionary psychology and is still worth a read.

(I note as an interesting aside that reading science fiction did an excellent job of preparing me for the central ideas of evolutionary psychology. What we might call “hard adaptationism” – the search for explanations of social behavior in evolution under selection – has been a central theme in SF since the 1940s, well before even the first wave of academic sociobiological thinking in the early 1970s and, I suspect, strongly influencing that wave. It is implicit in, as a leading example, much of Robert Heinlein’s work.)

The specific paper that changed my life was this one: Two Nonhuman Primate Models for the Evolution of Human Food Sharing: Chimpanzees and Callitrichids by W.C. McGrew and Anna T.C. Feistner.

In it, the authors explained food sharing as a hedge against variance. Basically, foods that can be gathered reliably were not shared; high-value food that could only be obtained unreliably was shared.

The authors went on to observe that in human hunter-gatherer cultures a similar pattern obtains: gathered foods (for which the calorie/nutrient value is a smooth function of effort invested) are not typically shared, whereas hunted foods (high variance of outcome in relation to effort) are shared. Reciprocal altruism is a hedge against uncertainty of outcomes.
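The "hedge against variance" argument can be made concrete with a little arithmetic. The sketch below is only an illustration, not anything from the McGrew and Feistner paper: it assumes each hunter succeeds independently with the same probability and that the group splits every kill equally, and the numbers (a 1-in-10 success rate, a 10,000-calorie kill) are made up. Under those assumptions the average intake per person is the same whether or not food is shared, but the variance shrinks in proportion to the group size.

```python
from fractions import Fraction


def mean_and_variance(p, payoff, group_size):
    """Per-person mean and variance of food intake.

    Assumes group_size independent hunters, each succeeding with
    probability p for a kill worth `payoff`, with all kills split equally.
    """
    mean = p * payoff
    # Variance of one hunt is payoff^2 * p * (1 - p); averaging over
    # group_size independent hunts divides it by group_size.
    variance = payoff**2 * p * (1 - p) / group_size
    return mean, variance


# Hypothetical numbers: 1-in-10 success rate, 10,000-calorie kill.
p = Fraction(1, 10)
for n in (1, 5, 10):
    mean, variance = mean_and_variance(p, 10_000, n)
    print(n, mean, variance)
```

A lone hunter and a sharing group of ten both average the same intake here, but the group member's variance is a tenth of the loner's. For gathered foods, where the payoff tracks effort closely, there is little variance to pool away in the first place.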

November 23, 2014

ESR on how to learn hacking

Eric S. Raymond has been asked to write this document for years, and he’s finally given in to the demand:

What Is Hacking?

The “hacking” we’ll be talking about in this document is exploratory programming in an open-source environment. If you think “hacking” has anything to do with computer crime or security breaking and came here to learn that, you can go away now. There’s nothing for you here.

Hacking is a style of programming, and following the recommendations in this document can be an effective way to acquire general-purpose programming skills. This path is not guaranteed to work for everybody; it appears to work best for those who start with an above-average talent for programming and a fair degree of mental flexibility. People who successfully learn this style tend to become generalists with skills that are not strongly tied to a particular application domain or language.

Note that one can be doing hacking without being a hacker. “Hacking”, broadly speaking, is a description of a method and style; “hacker” implies that you hack, and are also attached to a particular culture or historical tradition that uses this method. Properly, “hacker” is an honorific bestowed by other hackers.

Hacking doesn’t have enough formal apparatus to be a full-fledged methodology in the way the term is used in software engineering, but it does have some characteristics that tend to set it apart from other styles of programming.

- Hacking is done on open source. Today, hacking skills are the individual micro-level of what is called “open source development” at the social macrolevel. A programmer working in the hacking style expects and readily uses peer review of source code by others to supplement and amplify his or her individual ability.

- Hacking is lightweight and exploratory. Rigid procedures and elaborate a-priori specifications have no place in hacking; instead, the tendency is try-it-and-find-out with a rapid release tempo.

- Hacking places a high value on modularity and reuse. In the hacking style, you try hard never to write a piece of code that can only be used once. You bias towards making general tools or libraries that can be specialized into what you want by freezing some arguments/variables or supplying a context.

- Hacking favors scrap-and-rebuild over patch-and-extend. An essential part of hacking is ruthlessly throwing away code that has become overcomplicated or crufty, no matter how much time you have invested in it.
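The modularity bullet above, about building general tools and then "freezing some arguments", can be sketched in Python with `functools.partial`. This is only an illustration and does not come from Raymond's document; `render_line` and its parameters are hypothetical names.

```python
from functools import partial


def render_line(text, width, fill=" ", align="left"):
    """General tool (hypothetical): pad text out to a fixed width."""
    if align == "left":
        return text.ljust(width, fill)
    if align == "right":
        return text.rjust(width, fill)
    return text.center(width, fill)


# Specialize the general tool by freezing some of its arguments.
banner = partial(render_line, width=20, fill="*", align="center")
right_column = partial(render_line, width=10, align="right")

print(banner(" hacking "))
print(right_column("42"))
```

The general function is written once; each specialized version is a one-liner that can be thrown away or replaced without touching the tool underneath.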

The hacking style has been closely associated with the technical tradition of the Unix operating system.

Recently it has become evident that hacking blends well with the “agile programming” style. Agile techniques such as pair programming and feature stories adapt readily to hacking and vice-versa. In part this is because the early thought leaders of agile were influenced by the open source community. But there has since been traffic in the other direction as well, with open-source projects increasingly adopting techniques such as test-driven development.

October 24, 2014

Google Design open sources some icons

If you have a need for system icons and don’t want to create your own (or, like me, you have no artistic skills), you might want to look at a recent Google Design set that is now open source:

Today, Google Design are open-sourcing 750 glyphs as part of the Material Design system icons pack. The system icons contain icons commonly used across different apps, such as icons used for media playback, communication, content editing, connectivity, and so on. They’re equally useful when building for the web, Android or iOS.


September 30, 2014

“These bugs were found – and were findable – because of open-source scrutiny”

ESR talks about the visibility problem in software bugs:

The first thing to notice here is that these bugs were found – and were findable – because of open-source scrutiny.

There’s a “things seen versus things unseen” fallacy here that gives bugs like Heartbleed and Shellshock false prominence. We don’t know – and can’t know – how many far worse exploits lurk in proprietary code known only to crackers or the NSA.

What we can project based on other measures of differential defect rates suggests that, however imperfect “many eyeballs” scrutiny is, “few eyeballs” or “no eyeballs” is far worse.

September 19, 2014

Doctorow – “The time has come to create privacy tools for normal people”

In the Guardian, Cory Doctorow says that we need privacy-enhancing technical tools that can be easily used by everyone, not just the highly technical (or highly paranoid) among us:

You don’t need to be a technical expert to understand privacy risks anymore. From the Snowden revelations to the daily parade of internet security horrors around the world – like Syrian and Egyptian checkpoints where your Facebook logins are required in order to weigh your political allegiances (sometimes with fatal consequences) or celebrities having their most intimate photos splashed all over the web.

The time has come to create privacy tools for normal people – people with a normal level of technical competence. That is, all of us, no matter what our level of technical expertise, need privacy. Some privacy measures do require extraordinary technical competence; if you’re Edward Snowden, with the entire NSA bearing down on your communications, you will need to be a real expert to keep your information secure. But the kind of privacy that makes you immune to mass surveillance and attacks-of-opportunity from voyeurs, identity thieves and other bad guys is attainable by anyone.

I’m a volunteer on the advisory board for a nonprofit that’s aiming to do just that: Simply Secure (which launches Thursday at simplysecure.org) collects together some very bright usability and cryptography experts with the aim of revamping the user interface of the internet’s favorite privacy tools, starting with OTR, the extremely secure chat system whose best-known feature is “perfect forward secrecy” which gives each conversation its own unique keys, so a breach of one conversation’s keys can’t be used to snoop on others.

More importantly, Simply Secure’s process for attaining, testing and refining usability is the main product of its work. This process will be documented and published as a set of best practices for other organisations, whether they are for-profits or non-profits, creating a framework that anyone can use to make secure products easier for everyone.
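The "perfect forward secrecy" idea mentioned above, where each conversation gets its own keys, can be sketched with a toy Diffie-Hellman exchange. This is not OTR's actual protocol, which also authenticates the parties and keeps rotating keys within a conversation. The prime, the generator and the function name below are assumptions chosen for illustration, and the parameters are far too small for real use.

```python
import hashlib
import secrets

# Toy parameters for illustration only; not secure.
P = 2**127 - 1  # a Mersenne prime
G = 3


def new_conversation_key():
    """Derive a fresh session key from one-time (ephemeral) secrets."""
    a = secrets.randbelow(P - 2) + 1  # one side's ephemeral secret
    b = secrets.randbelow(P - 2) + 1  # the other side's ephemeral secret
    public_a = pow(G, a, P)  # only these public values cross the wire
    public_b = pow(G, b, P)
    shared_a = pow(public_b, a, P)
    shared_b = pow(public_a, b, P)
    assert shared_a == shared_b  # both sides compute the same value
    # a and b are discarded when this function returns, so the key
    # cannot be rebuilt later from anything that was kept.
    return hashlib.sha256(shared_a.to_bytes(16, "big")).digest()


key_one = new_conversation_key()
key_two = new_conversation_key()
print(key_one != key_two)
```

Because every conversation starts from new random secrets that are thrown away afterwards, learning `key_one` tells an attacker nothing about `key_two`.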

April 23, 2014

LibreSSL website – “This page scientifically designed to annoy web hipsters”

Julian Sanchez linked to this Ars Technica piece on a new fork of OpenSSL:

OpenBSD founder Theo de Raadt has created a fork of OpenSSL, the widely used open source cryptographic software library that contained the notorious Heartbleed security vulnerability.

OpenSSL has suffered from a lack of funding and code contributions despite being used in websites and products by many of the world’s biggest and richest corporations.

The decision to fork OpenSSL is bound to be controversial given that OpenSSL powers hundreds of thousands of Web servers. When asked why he wanted to start over instead of helping to make OpenSSL better, de Raadt said the existing code is too much of a mess.

“Our group removed half of the OpenSSL source tree in a week. It was discarded leftovers,” de Raadt told Ars in an e-mail. “The Open Source model depends [on] people being able to read the code. It depends on clarity. That is not a clear code base, because their community does not appear to care about clarity. Obviously, when such cruft builds up, there is a cultural gap. I did not make this decision… in our larger development group, it made itself.”

The LibreSSL code base is on OpenBSD.org, and the project is supported financially by the OpenBSD Foundation and OpenBSD Project. LibreSSL has a bare bones website that is intentionally unappealing.

“This page scientifically designed to annoy web hipsters,” the site says. “Donate now to stop the Comic Sans and Blink Tags.” In explaining the decision to fork, the site links to a YouTube video of a cover of the Twisted Sister song “We’re not gonna take it.”

April 11, 2014

Open source software and the Heartbleed bug

Some people are claiming that the Heartbleed bug proves that open source software is a failure. ESR quickly addresses that idiotic claim:

I actually chuckled when I read rumor that the few anti-open-source advocates still standing were crowing about the Heartbleed bug, because I’ve seen this movie before after every serious security flap in an open-source tool. The script, which includes a bunch of people indignantly exclaiming that many-eyeballs is useless because bug X lurked in a dusty corner for Y months, is so predictable that I can anticipate a lot of the lines.

The mistake being made here is a classic example of Frederic Bastiat’s “things seen versus things unseen”. Critics of Linus’s Law overweight the bug they can see and underweight the high probability that equivalently positioned closed-source security flaws they can’t see are actually far worse, just so far undiscovered.

That’s how it seems to go whenever we get a hint of the defect rate inside closed-source blobs, anyway. As a very pertinent example, in the last couple months I’ve learned some things about the security-defect density in proprietary firmware on residential and small business Internet routers that would absolutely curl your hair. It’s far, far worse than most people understand out there.

[…]

Ironically enough this will happen precisely because the open-source process is working … while, elsewhere, bugs that are far worse lurk in closed-source router firmware. Things seen vs. things unseen…

Returning to Heartbleed, one thing conspicuously missing from the downshouting against OpenSSL is any pointer to an implementation that is known to have a lower defect rate over time. This is for the very good reason that no such empirically-better implementation exists. What is the defect history on proprietary SSL/TLS blobs out there? We don’t know; the vendors aren’t saying. And we can’t even estimate the quality of their code, because we can’t audit it.

The response to the Heartbleed bug illustrates another huge advantage of open source: how rapidly we can push fixes. The repair for my Linux systems was a push-one-button fix less than two days after the bug hit the news. Proprietary-software customers will be lucky to see a fix within two months, and all too many of them will never see a fix patch.

Update: There are lots of sites offering tools to test whether a given site is vulnerable to the Heartbleed bug, but you need to step carefully there, as there’s a thin line between what’s legal in some countries and what counts as an illegal break-in attempt:

Websites and tools that have sprung up to check whether servers are vulnerable to OpenSSL’s mega-vulnerability Heartbleed have thrown up anomalies in computer crime law on both sides of the Atlantic.

Both the US Computer Fraud and Abuse Act and its UK equivalent the Computer Misuse Act make it an offence to test the security of third-party websites without permission.

Testing to see what version of OpenSSL a site is running, and whether it also supports the vulnerable Heartbeat protocol, would be legal. But doing anything more active, without permission from website owners, would take security researchers onto the wrong side of the law.

And you shouldn’t just rush out and change all your passwords right now (you’ll probably need to do it, but the timing matters):

Heartbleed is a catastrophic bug in widely used OpenSSL that creates a means for attackers to lift passwords, crypto-keys and other sensitive data from the memory of secure server software, 64KB at a time. The mega-vulnerability was patched earlier this week, and software should be updated to use the new version, 1.0.1g. But to fully clean up the problem, admins of at-risk servers should generate new public-private key pairs, destroy their session cookies, and update their SSL certificates before telling users to change every potentially compromised password on the vulnerable systems.
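For anyone wondering how a bug can leak memory "64KB at a time", here is a much-simplified simulation of the mistake. It is not OpenSSL's code; the function name, the fake "memory" layout and the sample secrets are all made up for illustration. The real heartbeat request carries a payload plus a 16-bit length field, which is where the 64KB ceiling comes from, and the vulnerable code trusted that claimed length instead of checking it against the payload it actually received.

```python
# Hypothetical stand-in for unrelated data sitting next to the payload in memory.
SECRETS = b"user=alice;password=hunter2"


def handle_heartbeat(payload, claimed_len, check_length):
    """Echo the heartbeat payload back to the sender (simulation only)."""
    memory = payload + SECRETS  # payload is stored alongside other data
    if check_length and claimed_len > len(payload):
        return b""  # patched behaviour: silently discard the bad request
    # Vulnerable behaviour: trust the length the client claimed.
    return memory[:claimed_len]


print(handle_heartbeat(b"PING", 4, check_length=False))   # honest request
print(handle_heartbeat(b"PING", 64, check_length=False))  # over-read leaks secrets
print(handle_heartbeat(b"PING", 64, check_length=True))   # patched: nothing returned
```

An honest client gets its own four bytes back. A dishonest one asks for more than it sent and receives whatever happened to be stored next to the payload, which is why passwords and keys that were in memory while the bug was live have to be treated as compromised.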