But what about the error correction function of peer review? Surely it’s important to ensure that the literature doesn’t fill up with bullshit? Shouldn’t we want our journals to publish only the most reliable, correct information – data analysis you can set your clock by, conclusions as solid as the Earth under your feet, uncertainties quantified to within the nearest fraction of a covariant Markov Chain Monte Carlo-delineated sigma contour?

Well, about that.

The replication crisis has been festering throughout the academic community for the better part of a decade now. It turns out that a huge part of the scientific literature simply can’t be reproduced. In many cases the works in question are high-impact papers, the sort of work that careers are based on and that leads to million-dollar grants being handed out to laboratories across the world. Indeed, it seems that the most-cited works are also the least likely to be reproduced (there’s a running joke that if something was published in Nature or Science, you know it’s probably wrong). Awkward.

The scientific community has completely failed to draw the obvious conclusion from the replication crisis, which is that peer review doesn’t work at all. Indeed, it may well play a causal role in the replication crisis.

The replication crisis, I should emphasize, is probably not mostly due to deliberate fraud, although there’s certainly some of that. There was a recent scandal involving the connection of amyloid plaques to Alzheimer’s disease which seems to have been entirely fraudulent, and which led to many millions – perhaps billions – of dollars in biomedical research programs being pissed away, to say nothing of the uncountable number of wasted man-hours. There have been many other such scandals, in almost every field you can name, and God alone knows how many are still buried like undiscovered time bombs in the foundations of various sub-fields. Most scientists, however, are not deliberately, consciously deceptive. They try to be honest. But the different models, assumptions, and methods they adopt can lead to wildly divergent results, even when analyzing the same data and testing the same hypothesis. Beyond that, they can also be sloppy. And the sloppiness, compounded across interlinked citation chains in the knowledge network, builds up.

Scientists know quite well that just because something has received the imprimatur of publication in a peer-reviewed journal with a high impact factor doesn’t mean that it’s correct. But while they know this intellectually, it’s very difficult to avoid the operating assumption that if something has passed peer review it’s probably mostly okay, and they’re not inclined to spend valuable time checking everything themselves. After all, they need to publish their own papers – in order to finish their PhD, get that faculty position, or get that next grant – and papers that are just trying to reproduce the results of other papers, that aren’t doing something novel, aren’t very interesting on their own, hence unlikely to be published. So instead of checking carefully yourself, you assume a work is probably reliable, and you use it as an element of your own work, maybe in a small way – taking a number from a table to populate an empty field in your dataset – or maybe in an important way, as a key supporting measurement or fundamental theoretical interpretative framework.

But some of those papers, despite having been peer reviewed, will be wrong, in small ways and large, and those erroneous results will propagate through your own results, possibly leading to your own paper being irretrievably flawed. But then your paper passes peer review, and gets used as the basis for subsequent work. Over time the entire scientific literature comes to resemble a house of cards.

Peer review gives scientists – and the lay public – a false sense of security regarding the soundness of scientific results. It also imposes an additional, and quite unnecessary, barrier to publication. It frequently takes months for a paper to work its way through the review process. A year or more is not unheard of, particularly if a paper is rejected, and the authors must start the whole process anew at a different journal, submitting their work as a grindstone for whatever rusty old axe the new referee is looking to sharpen. Far from ensuring errors are corrected, peer review slows down the error correction process. A bad paper can persist in the literature – being cited by other scientists – for some time, for years, before the refutation finally makes it to print … at which point some (not all) will consider the original paper debunked, and stop citing it (others, not being aware of the debunking, will continue to cite it). But what if the refutation is itself tendentious? The original authors may wish to reply, but their refutation of the refutation must now go through the peer review process as well, and on and on it interminably drags …

As to what is happening behind the scenes, no one – not the public, not other scientists – has any idea. The correspondence between referees and authors is rarely published along with the paper. Whether the review was meticulous or sloppy, whether the referee’s critiques were warranted or absurd, is entirely opaque.

In essence, the peer review process slows down the publication duty cycle, thereby slowing down scientific debate, while taking much of that debate behind closed doors, where its quality cannot be evaluated by anyone but the participants.

John Carter, “DIEing Academic Research Budgets”, Postcards from Barsoom, 2025-03-17.

June 19, 2025

QotD: Peer review and the replication crisis

June 8, 2025

“If the New York Times notices the Buddha, the enlightened one has already left town”

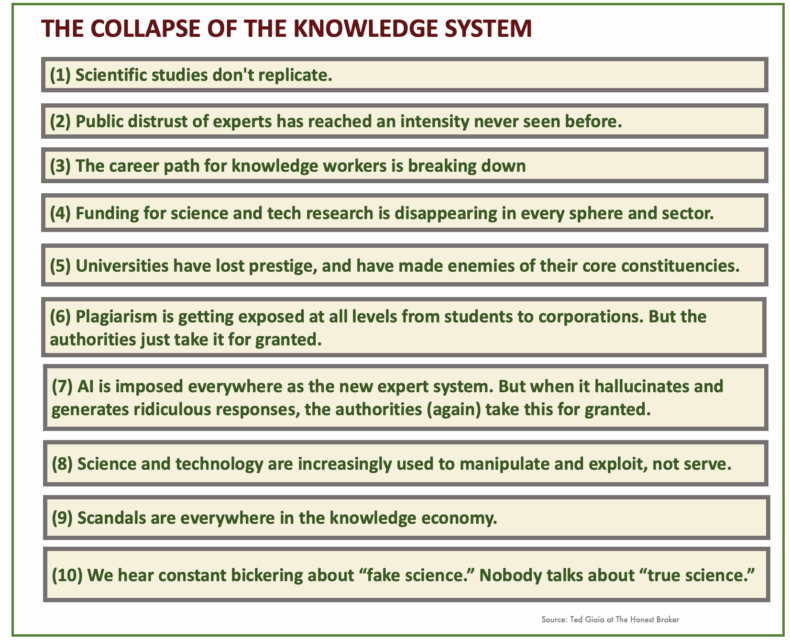

Ted Gioia points out that momentous changes in society are not often noticed until they’ve taken place, and provides ten warning signs of such a change happening right now:

Would you believe me if I told you that the biggest news story of our century is happening right now — but is never mentioned in the press?

That sounds crazy, doesn’t it?

But that is often the case when a bold new worldview appears.

- How long did it take before the Renaissance got mentioned in the town square?

- When did newspapers start covering the Enlightenment?

- Or the collapse in mercantilism?

- Or the rise of globalism?

- Or the birth of Christianity or Islam or some other earthshaking creed?

The biggest changes often happen long before they even get a name. By the time the scribes notice, the world is already reborn.

You can take this to the bank: If the New York Times notices the Buddha, the enlightened one has already left town.

For example, the word Renaissance got introduced two hundred years after the start of the Renaissance. The game was already over.

The same is true of most major cultural movements — they are truly the elephants in the room. And the elites at the epicenter of power are absolutely the last to notice.

Tiberius may run the entire Roman Empire, but he will never hear the Good News.

There’s a general rule here — the bigger the shift, the easier it is to miss.

We are living through a situation like that right now. We are experiencing a total shift — like the magnetic poles reversing. But it doesn’t even have a name — not yet.

So let’s give it one.

Let’s call it: The Collapse of the Knowledge System.

We could also define it as the emergence of a new knowledge system.

In this regard, it resembles other massive shifts in Western history — specifically the rebirth of humanistic thinking in the early Renaissance, or the rise of Romanticism in the nineteenth century.

In these volatile situations, the whole entrenched hierarchy of truth and authority gets totally reversed. The old experts and their systems are discredited, and completely new values take their place. The newcomers bring more than just a new attitude — they turn everything on its head.

That’s happening right now.

The knowledge structure that has dominated everything for our entire lifetime — and for our parents and grandparents — is collapsing. And it’s taking place everywhere, all at once.

If this were just an isolated situation — a problem in universities, or media, or politics — the current hierarchy could possibly survive. But that isn’t the case.

The crisis has spread into every sector of society which relies on clear knowledge and respected authority.

May 26, 2024

“Naked ‘gobbledygook sandwiches’ got past peer review, and the expert reviewers didn’t so much as blink”

Jo Nova on the state of play in the (scientifically disastrous) replication crisis and the ethics-free “churnals” that publish junk science:

Proving that unpaid anonymous review is worth every cent, the 217-year-old Wiley science publisher “peer reviewed” 11,300 papers that were fake, and didn’t even notice. It’s not just a scam, it’s an industry. Naked “gobbledygook sandwiches” got past peer review, and the expert reviewers didn’t so much as blink.

Big Government and Big Money have captured science and strangled it. The more money they pour in, the worse it gets. John Wiley and Sons is a US$2 billion machine, but they got used by criminal gangs to launder fake “science” as something real.

Things are so bad, fake scientists pay professional cheating services who use AI to create papers and torture the words so they look “original”. Thus a paper on “breast cancer” becomes a discovery about “bosom peril” and a “naïve Bayes” classifier became a “gullible Bayes”. An ant colony was labeled an “underground creepy crawly state”.

And what do we make of the flag to clamor ratio? Well, old fashioned scientists might call it “signal to noise”. The nonsense never ends.

A “random forest” is not always the same thing as an “irregular backwoods” or an “arbitrary timberland” — especially if you’re writing a paper on machine learning and decision trees.

The most shocking thing is that no human brain even ran a late-night Friday-eye over the words before they passed the hallowed peer review and entered the sacred halls of scientific literature. Even a wine-soaked third year undergrad on work experience would surely have raised an eyebrow when local average energy became “territorial normal vitality”. And when a random value became an “irregular esteem”. Let me just generate some irregular esteem for you in Python?

If there was such a thing as scientific stand-up comedy, we could get plenty of material, not by asking ChatGPT to be funny, but by asking it to cheat. Where else could you talk about a mean square mistake?

Wiley, a mega publisher of science articles, has admitted that 19 journals are so worthless, thanks to potential fraud, that they have to close them down. And the industry is now developing AI tools to catch the AI fakes (makes you feel all warm inside?)

Fake studies have flooded the publishers of top scientific journals, leading to thousands of retractions and millions of dollars in lost revenue. The biggest hit has come to Wiley, a 217-year-old publisher based in Hoboken, N.J., which Tuesday will announce that it is closing 19 journals, some of which were infected by large-scale research fraud.

In the past two years, Wiley has retracted more than 11,300 papers that appeared compromised, according to a spokesperson, and closed four journals. It isn’t alone: At least two other publishers have retracted hundreds of suspect papers each. Several others have pulled smaller clusters of bad papers.

Although this large-scale fraud represents a small percentage of submissions to journals, it threatens the legitimacy of the nearly $30 billion academic publishing industry and the credibility of science as a whole.

May 16, 2024

The replication crisis and the steady decline in social trust

Theodore Dalrymple on the depressing unreliability — and sometimes outright fraudulence — of far too high a proportion of what gets published in scientific journals:

Until quite recently — I cannot put an exact date on it — I assumed that everything published in scientific journals was, if not true, at least not deliberately untrue. Scientists might make mistakes, but they did not cheat, plagiarise, falsify, or make up their results. For many years as I opened a medical journal, the possibility simply that it contained fraud did not occur to me. Cases such as those of the Piltdown Man, a hoax in which bone fragments found in the Piltdown gravel pit were claimed to be those of the missing link between ape and man, were famous because they were dramatic but above all because they were rare, or assumed to be such.

Such naivety is no longer possible: instances of dishonesty have become much more frequent, or at least much more publicised. Whether the real incidence of scientific fraud has increased is difficult to say. There is probably no way to estimate the incidence of such fraud in the past by which a proper comparison can be made.

There are, of course, good reasons why scientific fraud should have increased. The number of practising scientists has exploded; they are in fierce competition with one another; their careers depend to a large extent on their productivity as measured by publication. The difference between what is ethical and unethical has blurred. They cite themselves, they recycle their work, they pay for publication, they attach their names to pieces of work they have played no part in performing and whose reports they have not even read, and so forth. As new algorithms are developed to measure their performance, they find new ways to play the game or to deceive. And all this is not even counting commercial pressures.

Furthermore, the general level of trust in society has declined. Are our politicians worse than they used to be, as it seems to everyone above a certain age, or is it that we simply know more about them because the channels of communication are so much wider? At any rate, trust in authority of most kinds has declined. Where once we were inclined to say, “It must be true because I read it in a newspaper”, we are now inclined to say, “It must be untrue because I read it in a newspaper”.

Quite often now I look at a blog called Retraction Watch which, since 2010, has been devoted to tracing and encouraging retraction of flawed scientific papers, often flawed for discreditable reasons. Such reasons are various and include research performed on subjects who have not given proper consent. This is not the same as saying that the results of such research are false, however, and raises the question of whether it is ethical to cite results that have been obtained unethically. Whether it is or not, we have all benefited enormously from past research that would now be considered unethical.

One common problem with research is its reproducibility, or lack of it. This is particularly severe in the case of psychology, but it is common in medicine too.

February 7, 2024

A disturbing proportion of scientific publishing is … bullshit

Tim Worstall on a few of the more upsetting details of how much we’ve been able to depend on truth and testability in the scientific community and how badly that’s been undermined in recent years:

The Observer tells us that science itself is becoming polluted by journal mills. Fools — intellectual thieves perhaps — are publishing nonsense in scientific journals, this then pollutes the conclusions reached by people surveying science to see what’s what.

This is true and is a problem. But it’s what people publish as supposedly real science that is the real problem here, not just those obvious cases they’re complaining about:

The startling rise in the publication of sham science papers has its roots in China, where young doctors and scientists seeking promotion were required to have published scientific papers. Shadow organisations – known as “paper mills” – began to supply fabricated work for publication in journals there.

The practice has since spread to India, Iran, Russia, former Soviet Union states and eastern Europe, with paper mills supplying fabricated studies to more and more journals as increasing numbers of young scientists try to boost their careers by claiming false research experience. In some cases, journal editors have been bribed to accept articles, while paper mills have managed to establish their own agents as guest editors who then allow reams of falsified work to be published.

Indeed, an actual and real problem:

The products of paper mills often look like regular articles but are based on templates in which names of genes or diseases are slotted in at random among fictitious tables and figures. Worryingly, these articles can then get incorporated into large databases used by those working on drug discovery.

Others are more bizarre and include research unrelated to a journal’s field, making it clear that no peer review has taken place in relation to that article. An example is a paper on Marxist ideology that appeared in the journal Computational and Mathematical Methods in Medicine. Others are distinctive because of the strange language they use, including references to “bosom peril” rather than breast cancer and “Parkinson’s ailment” rather than Parkinson’s disease.

Quite. But the problem is worse, much, much worse.

Let us turn to something we all can agree is of some importance. Those critical minerals things. We all agree that we’re going to be using more of them in the future. Largely because the whole renewables thing is changing the minerals we use to power the world. We’re — to some extent, perhaps enough, perhaps not enough — moving from using fossil fuels to power the world to using rare earths, silicon, copper and so on to power the world. How much there is, how much useable, of those minerals is important. Because that’s what we’re doing, we’re changing which minerals — from fossil to metallic elements — we use to power the world.

Those estimates of how much there is out there are therefore important. The European Union, for example, has innumerable reports and task forces working on the basis that there’s not that much out there and therefore we’ve got to recycle everything. One of those foundational blocks of the circular economy is that we’ve got to do it anyway. Because there’s simply not enough to be able to power society without the circular economy.

This argument is nads*. The circular economy might be desirable for other reasons. At least in part it’s very sensible too – if it’s cheaper to recycle than to dig up new then of course we should recycle. But that we must recycle, regardless of the cost, because otherwise supply will vanish is that nads*.

But, folk will and do say, if we look at the actual science here we are short of these minerals and metals. Therefore etc. But it’s the science that has become infected. Wrongly infected, infested even.

Here’s the Royal Society of Chemistry and their periodic table. You need to click around a bit to see this but they have hafnium supply risk as “unknown”. That’s at least an advance from their previous insistence that it was at high supply risk. It isn’t, there’s more hafnium out there than we can shake a stick at. At current consumption rates — and assuming no recycling at all which, with hafnium, isn’t all that odd an idea — we’re going to run out sometime around the expected date for the heat death of the universe. No, not run out of the universe’s hafnium, run out of what we’ve got in the lithosphere of our own Earth. To a reasonable and rough measure the entirety of Cornwall is 0.01% hafnium. We happily mine for gold at 0.0001% concentrations and we use less hafnium annually than we do gold.

The RSC also says that gallium and germanium have a high supply risk. Can you guess which bodily part(s) such a claim should be associated with? For gallium we already have a thousand year supply booked to pass through the plants we normally use to extract our gallium for us. For germanium I — although someone competent could be a preference — could build you a plant to supply 2 to 4% of global annual germanium demand/supply. Take about 6 months and cost $10 million even at government contracting rates to do it too. The raw material would be fly ash from coal burning and there’s no shortage of that — hundreds of such plants could be constructed that is.

The idea that humanity is, in anything like the likely timespan of our species, going to run short in absolute terms of Hf, Ga or Ge is just the utmost nads*.

But the American Chemical Society says the same thing:

* As ever, we are polite around here. Therefore we use the English euphemism “nads”, a shortening of “nadgers”, for the real meaning of “bollocks”.

January 18, 2024

QotD: Scientific fraud

In sorting out these feelings, I start from the datum that scientific fraud feels to me like sacrilege. Plausible reports of it make me feel deeply angry and disgusted, with a stronger sense of moral indignation than I get about almost any other sort of misbehavior. I feel like people who commit it have violated a sacred trust.

What is sacred here? What are they profaning?

The answer to that question seemed obvious to me immediately when I first formed the question. But in order to explain it comprehensibly to a reader, I need to establish what I actually mean by “science”. Science is not a set of answers, it’s a way of asking questions. It’s a process of continual self-correction in which we form theories about what is, check them by experiment, and use the result to improve our theories. Implicitly there is no end to this journey; anything we think of as “truth” is merely a theory that has had predictive utility so far but could be falsified at any moment by further evidence.

When I ask myself why I feel scientific fraud is like sacrilege, I rediscover on the level of emotion something I have written from an intellectual angle: Sanity is the process by which you continually adjust your beliefs so they are predictively sound. I could have written “scientific method” rather than “sanity” there, and that is sort of the point. Scientific method is sanity writ large and systematized; sanity is science in the small personal domain of one’s own skull.

Science is sanity is salvation – it’s how we redeem ourselves, individually and collectively, from the state of ignorance and sin into which we were born. “Sin” here has a special interpretation; it’s the whole pile of cognitive biases, instinctive mis-beliefs, and false cultural baggage we’re wired with that obstruct and weigh down our attempts to be rational. But my emotional reaction to this is, I realize, quite like a religious person’s reaction to whatever tribal superstitious definition of “sin” he has internalized.

I feel that scientists have a special duty of sanity that is analogous to a priest’s special duty to be pious and virtuous. They are supposed to lead us out of epistemic sin, set the example, light the way forward. When one of them betrays that trust, it is worse than ordinary stupidity. It damages all of us; it feeds the besetting demons of ignorance and sloppy thinking, and casts discredit on scientists who have remained true to their sacred vocation.

Eric S. Raymond, “Maybe science is my religion, after all”, Armed and Dangerous, 2011-05-18.

January 12, 2024

“… normal people no longer trust experts to any great degree”

Theophilus Chilton explains why the imprimatur of “the experts” is a rapidly diminishing value:

One explanation for the rise of midwittery and academic mediocrity in America directly connects to the “everybody should go to college” mantra that has become a common platitude. During the period of America’s rise to world superpower, going to college was reserved for a small minority of higher IQ Americans who attended under a relatively meritocratic regime. The quality of these graduates, however, was quite high and these were the “White men with slide rules” who built Hoover Dam, put a man on the moon, and could keep Boeing passenger jets from losing their doors halfway through a flight. As the bar has been lowered and the ranks of Gender and Queer Studies programs have been filled, the quality of college students has declined precipitously. One recent study shows that the average IQ of college students has dropped to the point where it is basically on par with the average for the general population as a whole.

Another area where this comes into play is with the replication crisis in science. For those who haven’t heard, the results from an increasingly large number of scientific studies, including many that have been used to have a direct impact on our lives, cannot be reproduced by other workers in the relevant fields. Obviously, this is a problem because being able to replicate other scientists’ results is sort of central to that whole scientific method thing. If you can’t do this, then your results really aren’t any more “scientific” than your Aunt Gertie’s internet searches.

As with other areas of increasing sociotechnical incompetency, some of this is diversity-driven. But not wholly so, by any means. Indeed, I’d say that most of it is due to the simple fact that bad science will always be unable to be consistently replicated. Much of this is because of bad experimental design and other technical matters like that. The rest is due to bad experimental design, etc., caused by overarching ideological drivers that operate on flawed assumptions that create bad experimentation and which lead to things like cherry-picking data to give results that the scientists (or, more often, those funding them) want to publish. After all, “science” carries a lot of moral and intellectual authority in the modern world, and that authority is what is really being purchased.

It’s no secret that Big Science is agenda-driven and definitely reflects Regime priorities. So whenever you see “New study shows the genetic origins of homosexuality” or “Latest data indicates trooning your kid improves their health by 768%,” that’s what is going on. REAL science is not on display. And don’t even get started on global warming, with its preselected, computer-generated “data” sets that have little reflection on actual, observable natural phenomena.

“Butbutbutbut this is all peer-reviewed!! 97% of scientists agree!!” The latter assertion is usually dubious, at best. The former, on the other hand, is irrelevant. Peer-reviewing has stopped being a useful measure for weeding out spurious theories and results and is now merely a way to put a Regime stamp of approval on desired result. But that’s okay because the “we love science” crowd flat out ignores data that contradict their presuppositions anywise, meaning they go from doing science to doing ideology (e.g. rejecting human biodiversity, etc.). This sort of thing was what drove the idiotic responses to COVID-19 a few years ago, and is what is still inducing midwits to gum up the works with outdated “science” that they’re not smart enough to move past.

If you want a succinct definition of “scientism,” it might be this – A belief system in which science is accorded intellectual abilities far beyond what the scientific method is capable of by people whose intellectual abilities are far below being able to understand what the scientific method even is.

September 23, 2023

More on the history field’s “reproducibility crisis”

In the most recent edition of the Age of Invention newsletter, Anton Howes follows up on his earlier post about the history field’s efforts to track down and debunk fake history:

The concern I expressed in the piece is that the field of history doesn’t self-correct quickly enough. Historical myths and false facts can persist for decades, and even when busted they have a habit of surviving. The response from some historians was that they thought I was exaggerating the problem, at least when it came to scholarly history. I wrote that I had not heard of papers being retracted in history, but was informed of a few such cases, including even a peer-reviewed book being dropped by its publisher.

In 2001/2, University of North Carolina Press decided to stop publishing the 1999 book Designs against Charleston: The Trial Record of the Denmark Vesey Slave Conspiracy of 1822 when a paper was published showing hundreds of cases where its editor had either omitted or introduced words to the transcript of the trial. The critic also came to very different conclusions about the conspiracy. In this case, the editor did admit to “unrelenting carelessness”, but maintained that his interpretation of the evidence was still correct. Many other historians agreed, thinking the critique had gone too far and thrown “the baby out with the bath water”.

In another case, the 2000 book Arming America: The Origins of a National Gun Culture — not peer-reviewed, but which won an academic prize — had its prize revoked when found to contain major errors and potential fabrications. This is perhaps the most extreme case I’ve seen, in that the author ultimately resigned from his professorship at Emory University (that same author believes that if it had happened today, now that we’re more used to the dynamics of the internet, things would have gone differently).

It’s somewhat comforting to learn that retraction in history does occasionally happen. And although I complained that scholars today are rarely as delightfully acerbic as they had been in the 1960s and 70s in openly criticising one another, they can still be very forthright. Take James D. Perry in 2020 in the Journal of Strategy and Politics reviewing Nigel Hamilton’s acclaimed trilogy FDR at War. All three of Perry’s reviews are critical, but that of the second book is especially forthright, including a test of the book’s reproducibility:

This work contains numerous examples of poor scholarship. Hamilton repeatedly misrepresents his sources. He fails to quote sources fully, leaving out words that entirely change the meaning of the quoted sentence. He quotes selectively, including sentences from his sources that support his case but ignoring other important sentences that contradict his case. He brackets his own conjectures between quotes from his sources, leaving the false impression that the source supports his conjectures. He invents conversations and emotional reactions for the historical figures in the book. Finally, he fails to provide any source at all for some of his major arguments.

Blimey.

But I think there’s still a problem here of scale. It’s hard to tell if these cases are signs that history on the whole is successfully self-correcting quickly, or are stand-out exceptions. I was positively inundated with other messages — many from amateur historical investigators, but also a fair few academic historians — sharing their own examples of mistakes that had snuck past the careful scholars for decades, or of other zombies that refused to stay dead.

August 31, 2023

The sciences have replication problems … historians face similar issues

In the latest Age of Invention newsletter, Anton Howes considers the history profession’s closest equivalent to the ongoing replication crisis in the sciences:

… I’ve become increasingly worried that science’s replication crises might pale in comparison to what happens all the time in history, which is not just a replication crisis but a reproducibility crisis. Replication is when you can repeat an experiment with new data or new materials and get the same result. Reproducibility is when you use exactly the same evidence as another person and still get the same result — so it has a much, much lower bar for success, which is what makes the lack of it in history all the more worrying.

Historical myths, often based on mere misunderstanding, but occasionally on bias or fraud, spread like wildfire. People just love to share unusual and interesting facts, and history is replete with things that are both unusual and true. So much that is surprising or shocking has happened, that it can take only years or decades of familiarity with a particular niche of history in order to smell a rat. Not only do myths spread rapidly, but they survive — far longer, I suspect, than in scientific fields.

Take the oft-repeated idea that more troops were sent to quash the Luddites in 1812 than to fight Napoleon in the Peninsular War in 1808. Utter nonsense, as I set out in 2017, though it has been cited again and again and again as fact ever since Eric Hobsbawm first misled everyone back in 1964. Before me, only a handful of niche military history experts seem to have noticed and were largely ignored. Despite being busted, it continues to spread. Terry Deary (of Horrible Histories fame), to give just one of many recent examples, repeated the myth in a 2020 book. Historical myths are especially zombie-like. Even when disproven, they just. won’t. die.

[…]

I don’t think this is just me being grumpy and pedantic. I come across examples of mistakes being made and then spreading almost daily. It is utterly pervasive. Last week when chatting to my friend Saloni Dattani, who has lately been writing a piece on the story of the malaria vaccine, I shared my ~~mounting paranoia~~ healthy scepticism of secondary sources and suggested she check up on a few of the references she’d cited just to see. A few days later and Saloni was horrified. When she actually looked closely, many of the neat little anecdotes she’d cited in her draft — like Louis Pasteur viewing some samples under a microscope and having his mind changed on the nature of malaria — turned out to have no actual underlying primary source from the time. It may as well have been fiction. And there was inaccuracy after inaccuracy, often inexplicable: one history of the World Health Organisation’s malaria eradication programme said it had been planned to take 5-7 years, but the sources actually said 10-15; a graph showed cholera pandemics as having killed a million people, with no citation, while the main sources on the topic actually suggest that in 1865-1947 it killed some 12 million people in British India alone.

Now, it’s shockingly easy to make these mistakes — something I still do embarrassingly often, despite being constantly worried about it. When you write a lot, you’re bound to make some errors. You have to pray they’re small ones and try to correct them as swiftly as you can. I’m extremely grateful to the handful of subject-matter experts who will go out of their way to point them out to me. But the sheer pervasiveness of errors also allows unintentionally biased narratives to get repeated and become embedded as certainty, and even perhaps gives cover to people who purposefully make stuff up.

If the lack of replication or reproducibility is a problem in science, in history nobody even thinks about it in such terms. I don’t think I’ve ever heard of anyone systematically looking at the same sources as another historian and seeing if they’d reach the same conclusions. Nor can I think of a history paper ever being retracted or corrected, as they can be in science. At the most, a history journal might host a back-and-forth debate — sometimes delightfully acerbic — for the closely interested to follow. In the 1960s you could find an agricultural historian saying of another that he was “of course entitled to express his views, however bizarre.” But many journals will no longer print those kinds of exchanges, they’re hardly easy for the uninitiated to follow, and there is often a strong incentive to shut up and play nice (unless they happen to be a peer-reviewer, in which case some will feel empowered by the cover of anonymity to be extraordinarily rude).

December 10, 2021

QotD: The media and the replication crisis

Here is the iron law of medical — in fact all scientific — studies in the modern world: most do not replicate. This has always been true of studies that supposedly find some link between doing [thing we enjoy] and cancer. This of course does not stop the media from running with initial study results based on 37 study participants as “fact”. The same is true for studies of new drugs and treatments. Most don’t pan out or are not nearly as efficacious as early studies might indicate. We have seen that over and over during COVID.

Warren Meyer, “A Couple of Thoughts on Medical Studies Given Recent Experience”, Coyote Blog, 2021-08-31.

February 23, 2021

The danger period is when “the coherence of the news breaks” … and it appears to be breaking before our eyes

In the latest Libertarian Enterprise, Sarah Hoyt remembers the breakdown of the Soviet Union and the disturbing parallels we can see today:

Our likelihood of coming out of this a constitutional republic is still high. Why? Well, because societies under stress become more themselves.

I remember when the USSR fell. And out of the ashes Tzar Putin emerged, who is despicable, but not particularly out of keeping with Russian monarchy.

So, yeah, the pull of our culture will be towards the reestablishment of who and what we are and were: a constitutional republic.

But on the way …

Look, I remember when the USSR fell.

The people in the USSR knew they were being treated like mushrooms: kept in the dark and fed on crap. They knew there was no truth in Pravda. But they were used to having certain information, and interpreting it.

And there is something worse than reading the news in totalitarianism. You can get used to interpreting the news, and knowing the shape of the hole of what they’re not reporting.

But once you realize it’s all nonsense, once the coherence of the news breaks — and it’s doing so now, earlier than I expected, with the Times article, with the New York Times admitting the protesters at the Capitol didn’t kill the police officer — once there are holes, but they’re not consistent, or they’re consistent, but then contradicted; once the narrative changes almost by the week, to the point it can’t be ignored, that’s the dangerous period.

I know I joke that by the end of this year I’ll have to apologize to the lizard-people conspiracy theorists. But the problem is that the lizard-people conspiracy theorists can acquire respectability and a strange new respect. Or something even crazier. Heck, a lot of crazier things.

To an extent, the 9/11 troofer conspiracies, which, yes, are crazy and also anti-scientific, were our warning shot. That they flourished, and that to this day a lot of people believe them, means that there was already a sense that the news made no sense, that there were other things going on behind the scenes that we weren’t aware of.

It’s going to get far, far worse than that, as the actual elites, the top of various fields, fall like struck trees in a thunderstorm. There is a good chance that authorities you rely on for your profession, or just for your knowledge, have been compromised. A lot of our research is tainted by China paying to get the results it wants, for instance. And there’s probably worse. You already know most research can’t be reproduced, and that’s not even recent.

As all this stuff comes out, the problem is that people won’t stop believing. Instead they’ll believe in all and everything.

I don’t know how much was reported here as the USSR collapsed, but I remember what I read in European magazines and journals. All of a sudden it was all New Age mysticism and spoon bending and only the good Lord knew what else.

And that’s what we’re going to head into. So, when you find yourself in the middle of an elaborate explanation that someone constructed, well …

First find the facts. Pace Heinlein: again and again, what are the facts? Never mind if your ideology demands they be something else. Establish the facts to the extent you can. Facts and math don’t lie. (Statistics do. So be aware you can lie with them. And any metrics that involve intangibles, like intelligence or performance, much less sociability or micro anything? Forget about it.)

From the facts, deploy Occam’s razor. What is the simplest explanation?

Then remember that humans run off at the mouth, and the more humans in the conspiracy, the more facts are likely to leak out somewhere.

And while we’ve seen a lot of Omerta among leftists, note that they’re all afflicted by evil villain syndrome. Sooner or later, they brag about how clever they were in deceiving us. So, if your conspiracy theory requires perfect silence forever, it’s probably not true.

December 13, 2020

QotD: The statistical “Rule of Silicone Boobs”

If it’s sexy, it’s probably fake.

“Sexy” means “likely to get published in the New York Times and/or get the researcher on a TEDx stage”. Actual sexiness research is not “sexy” because it keeps running into inconvenient results like that rich and high-status men in their forties and skinny women in their early twenties tend to find each other very sexy. The only way to make a result like that “sexy” is to blame it on the patriarchy, and most psychologists aren’t that far gone (yet).

[…]

Anything counterintuitive is also sexy, and (according to Rule 2) less likely to be true. So is anything novel that isn’t based on solid existing research. After all, the Times is in the newspaper business, not the truthspaper one.

Finding robust results is very hard, but getting sexy results published is very easy. Thus, sexy results generally lack robustness. I personally find a certain robustness quite sexy, but that attitude seems to have gone out of fashion since the Renaissance.

Jacob Falkovich, “The Scent of Bad Psychology”, Put a Number On It!, 2018-09-07.

November 4, 2020

The replication crisis in all fields is worse than you imagine

It may sound like a trivial issue, but it absolutely is not: scientific studies that can’t be replicated are worthless, yet our lives are often impacted by these failed studies, especially when politicians are guided by junk science results:

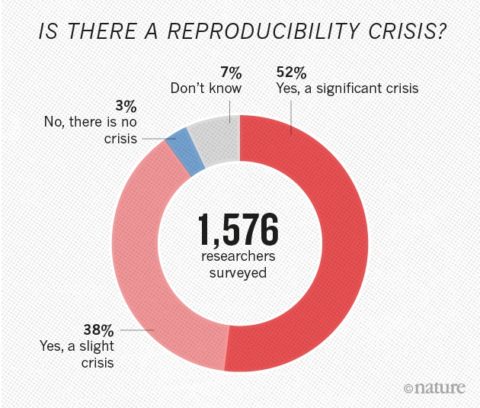

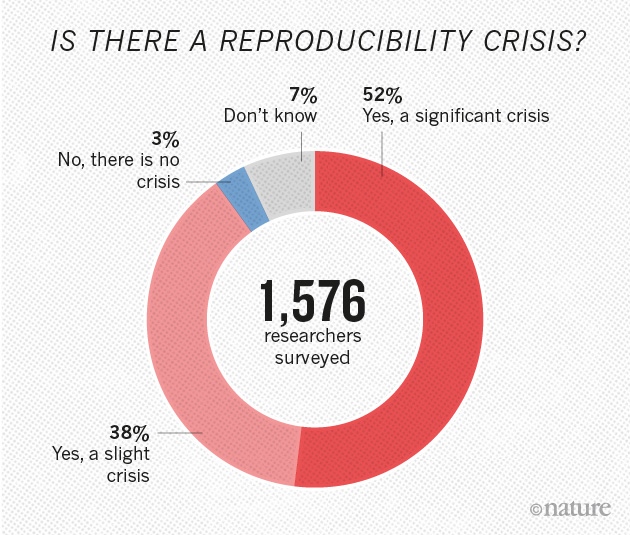

More than 70% of researchers have tried and failed to reproduce another scientist’s experiments, and more than half have failed to reproduce their own experiments. Those are some of the telling figures that emerged from Nature’s survey of 1,576 researchers who took a brief online questionnaire on reproducibility in research.

The data reveal sometimes-contradictory attitudes towards reproducibility. Although 52% of those surveyed agree that there is a significant ‘crisis’ of reproducibility, less than 31% think that failure to reproduce published results means that the result is probably wrong, and most say that they still trust the published literature.

Data on how much of the scientific literature is reproducible are rare and generally bleak. The best-known analyses, from psychology and cancer biology, found rates of around 40% and 10%, respectively. Our survey respondents were more optimistic: 73% said that they think that at least half of the papers in their field can be trusted, with physicists and chemists generally showing the most confidence.

The results capture a confusing snapshot of attitudes around these issues, says Arturo Casadevall, a microbiologist at the Johns Hopkins Bloomberg School of Public Health in Baltimore, Maryland. “At the current time there is no consensus on what reproducibility is or should be.” But just recognizing that is a step forward, he says. “The next step may be identifying what is the problem and to get a consensus.”

Failing to reproduce results is a rite of passage, says Marcus Munafo, a biological psychologist at the University of Bristol, UK, who has a long-standing interest in scientific reproducibility. When he was a student, he says, “I tried to replicate what looked simple from the literature, and wasn’t able to. Then I had a crisis of confidence, and then I learned that my experience wasn’t uncommon.”

The challenge is not to eliminate problems with reproducibility in published work. Being at the cutting edge of science means that sometimes results will not be robust, says Munafo. “We want to be discovering new things but not generating too many false leads.”

February 28, 2018

Psychology’s replication failures – “many casualties of the replication crisis do indeed bear a strong resemblance to voodoo”

In Psychology Today, Lee Jussim says that once upon a time in the benighted, ignorant past, we generally believed in magic. In these more scientific, advanced, de-mystified days, we believe in psychology. He wonders if there’s actually much of a difference between the two:

Are some of the most famous effects in psychology – priming, stereotype threat, implicit bias – based on smoke and mirrors? Does the widespread credibility given such effects in the face of very weak evidence have a weird kinship with supernatural beliefs?

Many findings in psychology are celebrated in part because they were shocking and seemed almost magical. So magical that psychiatrist Scott Alexander argued that many casualties of the replication crisis do indeed bear a strong resemblance to voodoo — the main difference being an appeal to mysterious unobserved unconscious forces rather than mysterious unobserved supernatural ones.

Belief in the efficacy of voodoo itself can be psychologized: Curses work, some say, because believing you are hexed can kill you. There are similar mind-over-matter tales involving implausibly strong effects from placebos and self-affirmations. Priming studies claim that even thinking about the word “retirement” can transfer the weakness of old age into a young body, and make young people walk slower. The idea that people gravitate toward occupations that sound like their names bears a strange resemblance to sympathetic magic: If you name your daughter Suzie, she’s now more likely to wind up selling shells by the seashore, whereas your son Brandon will be a banker.

Supernatural beliefs are a universal feature of human societies. For people in many tribal societies, magic is serious business — a matter of life and death. Sorcerers can make good money by selling their services, while those accused of sorcery might be killed. The same was true in medieval Europe, where many were executed for supposedly using evil magic against their neighbors.

Belief in magic has retreated in modern times. Science has rendered the world less mysterious, technology has given us more effective control over it, and bureaucratic rules make life more predictable. Magic retreated.

Or did it? Any belief as universal as magic may be marvelously adapted to well-worn ruts in the human brain and encouraged by common structures and rhythms of human interaction.

April 30, 2017

[p-hacking] “is one of the many questionable research practices responsible for the replication crisis in the social sciences”

What happens when someone digs into the statistics of highly influential health studies and discovers oddities? We’re in the process of finding out in the case of “rockstar researcher” Brian Wansink, several of whose studies are now under the statistical microscope:

Things began to go bad late last year when Wansink posted some advice for grad students on his blog. The post, which has subsequently been removed (although a cached copy is available), described a grad student who, on Wansink’s instruction, had delved into a data set to look for interesting results. The data came from a study that had sold people coupons for an all-you-can-eat buffet. One group had paid $4 for the coupon, and the other group had paid $8.

The hypothesis had been that people would eat more if they had paid more, but the study had not found that result. That’s not necessarily a bad thing. In fact, publishing null results like these is important — failure to do so leads to publication bias, which can lead to a skewed public record that shows (for example) three successful tests of a hypothesis but not the 18 failed ones. But instead of publishing the null result, Wansink wanted to get something more out of the data.

“When [the grad student] arrived,” Wansink wrote, “I gave her a data set of a self-funded, failed study which had null results… I said, ‘This cost us a lot of time and our own money to collect. There’s got to be something here we can salvage because it’s a cool (rich & unique) data set.’ I had three ideas for potential Plan B, C, & D directions (since Plan A had failed).”

The responses to Wansink’s blog post from other researchers were incredulous, because this kind of data analysis is considered an incredibly bad idea. As this very famous xkcd strip explains, trawling through data, running lots of statistical tests, and looking only for significant results is bound to turn up some false positives. This practice of “p-hacking” — hunting for significant p-values in statistical analyses — is one of the many questionable research practices responsible for the replication crisis in the social sciences.
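The arithmetic behind the xkcd point is easy to check. If each test has a 5% false-positive rate (α = 0.05) and there is no real effect at all, then running k independent tests gives a chance of at least one “significant” result of 1 − (1 − 0.05)^k, which is about 64% for k = 20. Below is a minimal Python sketch of that, not a reconstruction of Wansink’s actual analysis. It assumes two groups drawn from the same normal distribution with known standard deviation 1, k independent outcome measures, 30 subjects per group, and a two-sided z-test; the function names are hypothetical. Real outcome measures are usually correlated, which would shrink the inflation somewhat, so treat the numbers as illustrative.

```python
import math
import random
from statistics import NormalDist, fmean

ALPHA = 0.05
STD_NORMAL = NormalDist()  # standard normal distribution: mean 0, sd 1


def z_test_p_value(a, b):
    """Two-sided z-test for a difference in means.
    Assumes (for simplicity) both groups have a known standard deviation of 1."""
    se = math.sqrt(1 / len(a) + 1 / len(b))
    z = (fmean(a) - fmean(b)) / se
    return 2 * (1 - STD_NORMAL.cdf(abs(z)))


def hunt_for_significance(n_outcomes, n_per_group, rng):
    """Simulate one null study: two hypothetical groups (think $4 vs $8 coupon
    buyers) that do NOT actually differ, measured on n_outcomes independent
    variables. Returns True as soon as ANY outcome is 'significant' at ALPHA."""
    for _ in range(n_outcomes):
        group_a = [rng.gauss(0, 1) for _ in range(n_per_group)]
        group_b = [rng.gauss(0, 1) for _ in range(n_per_group)]
        if z_test_p_value(group_a, group_b) < ALPHA:
            return True
    return False


def false_positive_rate(n_outcomes, n_studies=2000, n_per_group=30, seed=1):
    """Fraction of simulated null studies that 'find' at least one effect."""
    rng = random.Random(seed)
    hits = sum(hunt_for_significance(n_outcomes, n_per_group, rng)
               for _ in range(n_studies))
    return hits / n_studies


if __name__ == "__main__":
    for k in (1, 5, 20):
        analytic = 1 - (1 - ALPHA) ** k
        print(f"{k:2d} outcomes: simulated {false_positive_rate(k):.2f}, "
              f"analytic {analytic:.2f}")
```

Running it should print simulated rates close to the analytic values of 0.05, 0.23 and 0.64. Nothing in the simulated data differs between the groups, so every “hit” is a false positive; digging through more outcomes only raises the odds of turning one up.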

H/T to Kate at Small Dead Animals for the link.