World War Two

Published 5 Apr 2024

What happened to Italian soldiers overseas after the fall of Mussolini? What about the French soldiers left over in Indochina after the Japanese “occupation by invitation”? And, what did the Allies think of the Italian Communist movement and its partisan forces?

April 6, 2024

Italian Communists, the French in Indochina, and the fate of Italy’s army – WW2 – OOTF 34

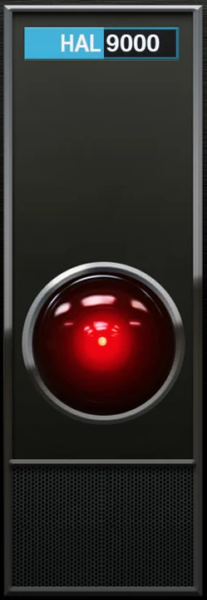

Three AI catastrophe scenarios

David Friedman considers the threat of an artificial intelligence catastrophe and the possible solutions for humanity:

Earlier I quoted Kurzweil’s estimate of about thirty years to human level A.I. Suppose he is correct. Further suppose that Moore’s law continues to hold, that computers continue to get twice as powerful every year or two. In forty years, that makes them something like a hundred times as smart as we are. We are now chimpanzees, perhaps gerbils, and had better hope that our new masters like pets. (Future Imperfect Chapter XIX: Dangerous Company)
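As a rough check on the arithmetic in that passage, here is a minimal Python sketch. It assumes (one reading of the quote, not something it states outright) that the forty-year mark falls ten years after the thirty-year human-level point, and it treats "twice as powerful" as the doubling of a single capability number. The function name `relative_power` is hypothetical.

```python
def relative_power(years_after_parity: float, doubling_period_years: float) -> float:
    """Multiple of human-level capability after repeated doubling."""
    return 2 ** (years_after_parity / doubling_period_years)


# Assumption: parity at year 30, comparison at year 40, so 10 years of doubling.
YEARS_AFTER_PARITY = 40 - 30

# "Every year or two": try both ends of the range and a midpoint.
for period in (1.0, 1.5, 2.0):
    factor = relative_power(YEARS_AFTER_PARITY, period)
    print(f"doubling every {period} years -> about {factor:.0f}x")
```

Under these assumptions the multiple runs from about 32 (doubling every two years) to about 1,024 (doubling every year), with a doubling period of eighteen months landing close to the "something like a hundred" in the quote.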

As that quote from a book published in 2008 demonstrates, I have been concerned with the possible downside of artificial intelligence for quite a while. The creation of large language models producing writing and art that appears to be the work of a human level intelligence got many other people interested. The issue of possible AI catastrophes has now progressed from something that science fiction writers, futurologists, and a few other oddballs worried about to a putative existential threat.

Large language models work by mining a large database of what humans have written, deducing what they should say from what people have said. The result looks as if a human wrote it, but it fits poorly with the takeoff model, in which an AI a little smarter than a human uses its intelligence to make one a little smarter still, and so on up to superhuman. However powerful the hardware that an LLM is running on, it has no superhuman conversation to mine, so better hardware should make it faster but not smarter. And although it can mine a massive body of data on what humans say in order to figure out what it should say, it has no comparable body of data for what humans do when they want to take over the world.

If that is right, the danger of superintelligent AIs is a plausible conjecture for the indefinite future but not, as some now believe, a near certainty in the lifetime of most now alive.

[…]

If AI is a serious, indeed existential, risk, what can be done about it?

I see three approaches:

I. Keep superhuman level AI from being developed.

That might be possible if we had a world government committed to the project but (fortunately) we don’t. Progress in AI does not require enormous resources so there are many actors, firms and governments, that can attempt it. A test of an atomic weapon is hard to hide but a test of an improved AI isn’t. Better AI is likely to be very useful. A smarter AI in private hands might predict stock market movements a little better than a very skilled human, making a lot of money. A smarter AI in military hands could be used to control a tank or a drone, be a soldier that, once trained, could be duplicated many times. That gives many actors a reason to attempt to produce it.

If the issue was building or not building a superhuman AI perhaps everyone who could do it could be persuaded that the project is too dangerous, although experience with the similar issue of Gain of Function research is not encouraging. But at each step the issue is likely to present itself as building or not building an AI a little smarter than the last one, the one you already have. Intelligence, of a computer program or a human, is a continuous variable; there is no obvious line to avoid crossing.

When considering the down side of technologies – Murder Incorporated in a world of strong privacy or some future James Bond villain using nanotechnology to convert the entire world to gray goo – your reaction may be “Stop the train, I want to get off.” In most cases, that is not an option. This particular train is not equipped with brakes. (Future Imperfect, Chapter II)

II. Tame it, make sure that the superhuman AI is on our side.

Some humans, indeed most humans, have moral beliefs that affect their actions, are reluctant to kill or steal from a member of their ingroup. It is not absurd to believe that we could design a human level artificial intelligence with moral constraints and that it could then design a superhuman AI with similar constraints. Human moral beliefs apply to small children, for some even to some animals, so it is not absurd to believe that a superhuman could view humans as part of its ingroup and be reluctant to achieve its objectives in ways that injured them.

Even if we can produce a moral AI there remains the problem of making sure that all AI’s are moral, that there are no psychopaths among them, not even ones who care about their peers but not us, the attitude of most humans to most animals. The best we can do may be to have the friendly AIs defending us make harming us too costly to the unfriendly ones to be worth doing.

III. Keep up with AI by making humans smarter too.

The solution proposed by Raymond Kurzweil is for us to become computers too, at least in part. The technological developments leading to advanced A.I. are likely to be associated with much greater understanding of how our own brains work. That might make it possible to construct much better brain to machine interfaces, move a substantial part of our thinking to silicon. Consider 89352 times 40327 and the answer is obviously 3603298104. Multiplying five figure numbers is not all that useful a skill but if we understand enough about thinking to build computers that think as well as we do, whether by design, evolution, or reverse engineering ourselves, we should understand enough to offload more useful parts of our onboard information processing to external hardware.

Now we can take advantage of Moore’s law too.

A modest version is already happening. I do not have to remember my appointments; my phone can do it for me. I do not have to keep mental track of what I eat; there is an app which will be happy to tell me how many calories I have consumed, how much fat, protein and carbohydrates, and how it compares with what it thinks I should be doing. If I want to keep track of how many steps I have taken this hour, my smart watch will do it for me.

The next step is a direct mind to machine connection, currently being pioneered by Elon Musk’s Neuralink. The extreme version merges into uploading. Over time, more and more of your thinking is done in silicon, less and less in carbon. Eventually your brain, perhaps your body as well, come to play a minor role in your life, vestigial organs kept around mainly out of sentiment.

As our AI becomes superhuman, so do we.

The Fake (and real) History of Potato Chips

Tasting History with Max Miller

Published Jan 2, 2024

The fake and true history of the potato chip and an early 19th century recipe for them. Get the recipe at my new website https://www.tastinghistory.com/ and buy Fake History: 101 Things that Never Happened: https://lnk.to/Xkg1CdFB

QotD: No navy ever has all its ships at sea at the same time

Warships are complicated engineering marvels, requiring extensive work and support to keep operational and effective. A modern escort ship is a floating town, able to generate power to provide life support and hotel services, propulsion, aviation operations and the ability to operate a variety of very complicated electronic systems and weapon systems, and it is built to do this while surviving damage from enemy attack.

This complex world requires attention on a regular basis, both to make sure that the constituent parts still work as planned, and also to update and replace parts with more modern or better alternatives, or to provide planned upgrades. For instance, it is common for new ships entering service to undergo a short refit to add in any extra capability upgrades that may have been rolled out since construction began, and to rectify any defects.

For the purposes of planning how the fleet works, the Royal Navy looks to provide enough ships to meet agreed defence tasks. In simple terms the MOD works out what tasks are required of it, and what military assets are needed to meet them. This can range from providing a constantly available SSBN to deliver the deterrence mission through to deploying the ice patrol ship to Antarctica.

Once these commitments are understood, planners can work out how many ships / planes / tanks are needed to meet this goal. For example, it may be agreed that the RN needs to sustain multiple overseas deployments, and also be able to generate a carrier strike group too.

If, purely hypothetically, the requirement for this is 6 ships, then the next task is to work out how many ships are needed to ensure 6 ships are constantly available. Historically, this has usually been at a 3:1 ratio – one ship is on task or ready to fulfill it, one is in some form of work up or other training ahead of being assigned to the role, and one is just back or in refit.
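The arithmetic behind that rule of thumb can be sketched in a few lines of Python. This is only an illustration of the 3:1 ratio described above, using the hypothetical figure of 6 ships; the function names are made up for the example, and real force planning will weigh many factors that a single ratio leaves out.

```python
def ships_required(ships_on_task: int, ships_per_task: int = 3) -> int:
    """Fleet size needed to keep `ships_on_task` hulls constantly available.

    ships_per_task=3 reflects the 3:1 rule of thumb: one ship on task,
    one working up or training, one just back or in refit.
    """
    return ships_on_task * ships_per_task


def max_share_on_task(ships_per_task: int = 3) -> float:
    """Fraction of the fleet that is on task at any one time under the ratio."""
    return 1 / ships_per_task


fleet = ships_required(6)  # the purely hypothetical requirement of 6 ships
print(f"Fleet needed: {fleet} ships")
print(f"Share on task at any moment: {max_share_on_task():.0%}")
```

With a requirement of 6 ships the sketch gives a fleet of 18, of which roughly a third is on task at any given moment.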

In practical terms this means that the RN never looks to get 100% of its force to sea, but rather to ensure that enough ships are available to meet all the tasks that it is required to do. Consequently there is always going to be a mismatch between the number of ships owned and the number of ships deployed.

Sir Humphrey, “Inoperable or just maintenance”, Thin Pinstriped Line, 2019-10-24.