A man’s women folk, whatever their outward show of respect for his merit and authority, always regard him secretly as an ass, and with something akin to pity. His most gaudy sayings and doings seldom deceive them; they see the actual man within, and know him for a shallow and pathetic fellow. In this fact, perhaps, lies one of the best proofs of feminine intelligence, or, as the common phrase makes it, feminine intuition.

H.L. Mencken, In Defense of Women, 1918.

September 26, 2025

QotD: Men and women

March 5, 2025

QotD: British and French Enlightenments

In 2005, [Gertrude Himmelfarb] published The Roads to Modernity: The British, French, and American Enlightenments. It is a provocative revision of the typical story of the intellectual era of the late eighteenth century that made the modern world. In particular, it explains the source of the fundamental division that still doggedly grips Western political life: that between Left and Right, or progressives and conservatives. From the outset, each side had its own philosophical assumptions and its own view of the human condition. Roads to Modernity shows why one of these sides has generated a steady progeny of historical successes while its rival has consistently lurched from one disaster to the next.

By the time she wrote, a number of historians had accepted that the Enlightenment, once characterized as the “Age of Reason”, came in two versions, the radical and the skeptical. The former was identified with France, the latter with Scotland. Historians of the period also acknowledged that the anti-clericalism that obsessed the French philosophes was not reciprocated in Britain or America. Indeed, in both the latter countries many Enlightenment concepts — human rights, liberty, equality, tolerance, science, progress — complemented rather than opposed church thinking.

Himmelfarb joined this revisionist process and accelerated its pace dramatically. She argued that, central though many Scots were to the movement, there were also so many original English contributors that a more accurate name than the “Scottish Enlightenment” would be the “British Enlightenment”.

Moreover, unlike the French who elevated reason to a primary role in human affairs, British thinkers gave reason a secondary, instrumental role. In Britain it was virtue that trumped all other qualities. This was not personal virtue but the “social virtues” — compassion, benevolence, sympathy — which British philosophers believed naturally, instinctively, and habitually bound people to one another. This amounted to a moral reformation.

In making her case, Himmelfarb included people in the British Enlightenment who until then had been assumed to be part of the Counter-Enlightenment, especially John Wesley and Edmund Burke. She assigned prominent roles to the social movements of Methodism and Evangelical philanthropy. Despite the fact that the American colonists rebelled from Britain to found a republic, Himmelfarb demonstrated how very close they were to the British Enlightenment and how distant from French republicans.

In France, the ideology of reason challenged not only religion and the church, but also all the institutions dependent upon them. Reason was inherently subversive. But British moral philosophy was reformist rather than radical, respectful of both the past and present, even while looking forward to a more enlightened future. It was optimistic and had no quarrel with religion, which was why in both Britain and the United States, the church itself could become a principal source for the spread of enlightened ideas.

In Britain, the elevation of the social virtues derived from both academic philosophy and religious practice. In the eighteenth century, Adam Smith, the professor of moral philosophy at Glasgow University, was more celebrated for his Theory of Moral Sentiments (1759) than for his later thesis about the wealth of nations. He argued that sympathy and benevolence were moral virtues that sprang directly from the human condition. In being virtuous, especially towards those who could not help themselves, man rewarded himself by fulfilling his human nature.

Edmund Burke began public life as a disciple of Smith. He wrote an early pamphlet on scarcity which endorsed Smith’s laissez-faire approach as the best way to serve not only economic activity in general but the lower orders in particular. His Counter-Enlightenment status is usually assigned for his critique of the French Revolution, but Burke was at the same time a supporter of American independence. While his own government was pursuing its military campaign in America, Burke was urging it to respect the liberty of both Americans and Englishmen.

Some historians have been led by this apparent paradox to claim that at different stages of his life there were two different Edmund Burkes, one liberal and the other conservative. Himmelfarb disagreed. She argued that his views were always consistent with the ideas about moral virtue that permeated the whole of the British Enlightenment. Indeed, Burke took this philosophy a step further by making the “sentiments, manners, and moral opinion” of the people the basis not only of social relations but also of politics.

Keith Windschuttle, “Gertrude Himmelfarb and the Enlightenment”, New Criterion, 2020-02.

February 7, 2025

QotD: The Chump Ratio

P.T. Barnum gets a lot of quotes about gullibility attributed to him, because, well, he’d know, wouldn’t he? There’s a sucker born every minute, you’ll never go broke overestimating the public’s stupidity, and so on. One I particularly like is: One in Five.

That’s what you might call the Chump Ratio. In any given crowd, Barnum (or whomever) said, one person in five is a born chump. He’s ready, willing, and able to believe anything you put in front of him, and so long as it’s not skull-fornicatingly obvious fakery — an extremely low bar, as you might imagine — he’s all in. The best part is, chumps don’t know they’re chumps, and they never, ever wise up (poker players have a similar adage: “After a half hour at the table, if you can’t spot the sucker, then you’re the sucker”; it has the same impact on behavior, namely: none whatsoever). You don’t have to do anything to sell the chumps; they’re practically begging you to take their money.

Barnum didn’t say much about these guys, but there’s another ratio that applies to a given crowd, also about one in five: The born skeptic, the killjoy, call them what you will. This is the guy completely unaffected by the lights, the music, the smells of popcorn and cotton candy, the children’s laughter … all he can see at the carny is the tattooed meth head who put everything together overnight with an Allen wrench. He might well show up at your carny — the wife and kids wanted to go — but you’ll never make a dime off him. No show in the world is ever going to sell him, so you don’t need to worry about him.

It’s those other three guys in any given crowd that make you some serious money … or bring the whole thing crashing down on your head. They’re who the show is really for.

It’s pretty easy to sell these folks. After all, they want to be sold. They’re at the carnival, aren’t they? And yet, it’s also pretty easy to screw it up. They’re willing to suspend disbelief — they want to — but the line between “necessary suspension of disbelief” and “an insult to one’s intelligence” is thinner than you think, and lethally easy to cross.

Severian, “Carny World”, Founding Questions, 2021-09-24.

January 25, 2024

The Bathtub Hoax and debunked medieval myths

David Friedman spends a bit of time debunking some bogus but widely believed historical myths:

“Image” by Lauren Knowlton is licensed under CC BY 2.0.

The first is a false story that teaches a true lesson — the U.S. did treat Amerinds unjustly in a variety of contexts, although the massive die off as a result of the spread of Old World diseases was a natural result of contact, not deliberate biological warfare. The second lets moderns feel superior to their ignorant ancestors; most people like feeling superior to someone.

Another example of that, deliberately created by a master, is H.L. Mencken’s bathtub hoax, an entirely fictitious history of the bathtub published in 1917:

The article claimed that the bathtub had been invented by Lord John Russell of England in 1828, and that Cincinnatian Adam Thompson became acquainted with it during business trips there in the 1830s. Thompson allegedly went back to Cincinnati and took the first bath in the United States on December 20, 1842. The invention purportedly aroused great controversy in Cincinnati, with detractors claiming that its expensive nature was undemocratic and local doctors claiming it was dangerous. This debate was said to have spread across the nation, with an ordinance banning bathing between November and March supposedly narrowly failing in Philadelphia and a similar ordinance allegedly being effective in Boston between 1845 and 1862. … Oliver Wendell Holmes Sr. was claimed to have campaigned for the bathtub against remaining medical opposition in Boston; the American Medical Association supposedly granted sanction to the practice in 1850, followed by practitioners of homeopathy in 1853.

According to the article, then-Vice President Millard Fillmore visited the Thompson bathtub in March 1850 and having bathed in it became a proponent of bathtubs. Upon his accession to the presidency in July of that year, Fillmore was said to have ordered the construction of a bathtub in the White House, which allegedly refueled the controversy of providing the president with indulgences not enjoyed by George Washington or Thomas Jefferson. Nevertheless, the effect of the bathtub’s installation was said to have obliterated any remaining opposition, such that it was said that every hotel in New York had a bathtub by 1860. (Wikipedia)

Writing more than thirty years later, Mencken claimed to have been unable to kill the story despite multiple retractions. A Google search for [Millard Fillmore bathtub] demonstrates that it is still alive. Among other hits:

The first bathtub placed in the White House is widely believed to have been installed in 1851 by President Millard Fillmore (1850-53). (The White House Bathrooms & Kitchen)

Medieval

The desire of moderns to feel superior to their ancestors helps explain a variety of false beliefs about the Middle Ages, including the myth, discussed in detail in an earlier post, that medieval cooking was overspiced to hide the taste of spoiled meat.

Other examples:

Medieval witch hunts: Contrary to popular belief, large scale persecution of witches started well after the end of the Middle Ages. The medieval church viewed the belief that Satan could give magical powers to witches, on which the later prosecutions were largely based, as heretical. The Spanish Inquisition, conventionally blamed for witchcraft prosecutions, treated witchcraft accusations as a distraction from the serious business of identifying secret Jews and Muslims, and dealt with such accusations by applying serious standards of evidence to them.

Chastity Belts: Supposedly worn by the ladies of knights off on crusade. The earliest known evidence of the idea of a chastity belt is well after the end of the crusades, a 15th century drawing, and while there is literary evidence for their occasional use after that, no surviving examples are known to be from before the 19th century.

Ius Primae Noctis aka Droit du Seigneur was the supposed right of a medieval lord to sleep with a bride on her wedding night. Versions of the institution are asserted in a variety of sources going back to the Epic of Gilgamesh, but while it is hard to prove that it never existed in the European middle ages, it was clearly never the norm.

The Divine Right of Kings: Various rulers through history have claimed divine sanction for their rule but “The Divine Right of Kings” is a doctrine that originated in the sixteenth and seventeenth centuries with the rise of absolute monarchy — Henry VIII in England, Louis XIV in France. Medieval rulers were absolute in neither theory nor practice. The feudal relation was one of mutual obligation, in its simplest form protection by the superior in exchange for set obligations of support by the inferior. In practice the decentralized control of military power under feudalism presented difficulties for a ruler who wished to overrule the desires of his nobility, as King John discovered.

Some fictional history functions in multiple versions designed to support different causes. The destruction of the Library of Alexandria has been variously blamed on Julius Caesar, Christian mobs rioting against pagans, and the Muslim conquerors of Egypt, the Caliph Umar having supposedly said that anything in the library that was true was already in the Koran and anything not in the Koran was false. There is no good evidence for any of the stories. The library existed in classical antiquity, no longer exists today, but it is not known how it was destroyed and it may have just gradually declined.

October 15, 2023

September 7, 2023

QotD: Techno-pessimism

Unfortunately, by any objective measure, most new things are bad. People are positively brimming with awful ideas. Ninety percent of startups and 70 percent of small businesses fail. Just 56 percent of patent applications are granted, and over 90 percent of those patents never make any money. Each year, 30,000 new consumer products are brought to market, and 95 percent of them fail. Those innovations that do succeed tend to be the result of an iterative process of trial-and-error involving scores of bad ideas that lead to a single good one, which finally triumphs. Even evolution itself follows this pattern: the vast majority of genetic mutations confer no advantage or are actively harmful. Skepticism towards new ideas turns out to be remarkably well-warranted.

The need for skepticism towards change is just as great when the innovation is social or political. For generations, many progressives embraced Marxism and thought its triumph inevitable. Future generations would view us as foolish for resisting it — just like Thoreau and the telegraph. But it turned out that Marxism was a terrible idea, and resisting it an excellent one. It had that in common with virtually every other utopian ideal in the history of social thought. Humans struggle to identify where precisely the arc of history is pointing.

Nicholas Phillips, “The Fallacy of Techno-Optimism”, Quillette, 2019-06-06.

July 10, 2023

QotD: Liberal and conservative views on innovation

Liberals and conservatives don’t just vote for different parties — they are different kinds of people. They differ psychologically in ways that are consistent across geography and culture. For example, liberals measure higher on traits like “openness to new experience” and seek out novelty. Conservatives prefer order and predictability. Their attachment to the status quo is an impediment to the re-ordering of society around new technology. Meanwhile, the technologists of Silicon Valley, while suspicious of government regulation, are still some of the most liberal people in the country. Not all liberals are techno-optimists, but virtually all techno-optimists are liberals.

A debate on the merits of change between these two psychological profiles helps guarantee that change benefits society instead of ruining it. Conservatives act as gatekeepers enforcing quality control on the ideas of progressives, ultimately letting in the good ones (like democracy) and keeping out the bad ones (like Marxism). Conservatives have often been wrong in their opposition to good ideas, but the need to win over a critical mass of them ensures that only the best-supported changes are implemented. Curiously, when the change in question is technological rather than social, this debate process is neutered. Instead, we get “inevitablism” — the insistence that opposition to technological change is illegitimate because it will happen regardless of what we want, as if we were captives of history instead of its shapers.

This attitude is all the more bizarre because it hasn’t always been true. When nuclear technology threatened Armageddon, we did what was necessary to limit it to the best of our ability. It may be that AI or automation causes social Armageddon. No one really knows — but if the pessimists are right, will we still have it in us to respond accordingly? It seems like the command of the optimists to lie back and “trust our history” has the upper hand.

Conservative critics of change have often been comically wrong — just like optimists. That’s ultimately not so interesting, because humans can’t predict the future. More interesting have been the times when the predictions of the critics actually came true. The Luddites were skilled artisans who feared that industrial technology would destroy their way of life and replace their high-status craft with factory drudgery. They were right. Twentieth century moralists feared that the automobile would facilitate dating and casual sex. They were right. They erred not in their predictions, but in their belief that the predicted effects were incompatible with a good society. That requires us to have a debate on the merits — about the meaning of the good life.

Nicholas Phillips, “The Fallacy of Techno-Optimism”, Quillette, 2019-06-06.

March 26, 2023

Plandemic? Manufactured crisis? Mass formation psychosis?

In The Conservative Woman, James Delingpole lets his skeptic flag fly:

“Covid 19 Masks” by baldeaglebluff is licensed under CC BY-SA 2.0

Tell me about your personal experiences of Covid 19. Actually, wait, don’t. I think I may have heard it already, about a million times. You lost all sense of smell or taste – and just how weird was that? It floored you for days. It gave you a funny dry cough, the dryness and ticklishness of which was unprecedented in your entire coughing career. You’ve had flu a couple of times and, boy, when you’ve got real flu do you know it. But this definitely wasn’t flu. It was so completely different from anything you’ve ever known, why you wouldn’t be surprised to learn that it had been bioengineered in a lab with all manner of spike proteins and gain-of-function additives, perhaps even up to and including fragments of the Aids virus …

Yeah, right. Forgive me for treading on the sacred, personal domain of your lived experience. But might I cautiously suggest that none of what you went through necessarily validates lab-leak theory. Rather what it may demonstrate is the power of susceptibility, brainwashing and an overactive imagination. You lived – we all did – through a two-year period in which health-suffering anecdotes became valuable currency. Whereas in the years before the “pandemic”, no one had been much interested in the gory details of your nasty cold, suddenly everyone wanted to compare notes to see whether they’d had it as bad as you – or, preferably, for the sake of oneupmanship, even worse. This in turn created a self-reinforcing mechanism of Covid panic escalation: the more everyone talked about it, the more incontrovertible the “pandemic” became.

Meanwhile, in the real world, hard evidence – as opposed to anecdotal evidence – for this “pandemic” remained stubbornly non-existent. The clincher for me was a landmark article published in January 2021 by Simon Elmer at his website Architects For Social Housing. It was titled “Lies, Damned Lies and Statistics: Manufacturing the Crisis”.

In it Elmer asked the question every journalist should have asked but which almost none did: is this “pandemic” really as serious as all the experts, and government ministers and media outlets and medics are telling us it is? The answer was a very obvious No. As the Office for National Statistics data cited by Elmer clearly showed, 2020 – Year Zero for supposedly the biggest public health threat since “Spanish Flu” a century earlier – was one of the milder years for death in the lives of most people.

Let’s be clear about this point, because something you often hear people on the sceptical side of the argument say is, “Of course, no one is suggesting that Covid didn’t cause a horrific number of deaths.” But that’s exactly what they should be suggesting: because it’s true. Elmer was quoting the Age Standardised Mortality statistics for England and Wales dating back to 1941. What these show is that in every year up to and including 2008, more people died per head of population than in the deadly Covid outbreak year of 2020. Of the previous 79 years, 2020 had the 12th lowest mortality rate.

Covid, in other words, was a pandemic of the imagination, of anecdote, of emotion rather than of measured ill-health and death. Yet even now, when I draw someone’s attention to that ONS data, I find that the most common response I get is one of denial. That is, when presented with the clearest, most untainted (this was before ONS got politicised and began cooking the books), impossible-to-refute evidence that there was NO Covid pandemic in 2020, most people, even intelligent ones, still choose to go with their feelings rather than with the hard data.

This natural tendency many of us have to choose emotive narratives over cool evidence makes us ripe for exploitation by the cynical and unscrupulous. We saw this during the pandemic when the majority fell for the exciting but mendacious story that they were living through a new Great Plague, and that only by observing bizarre rituals – putting strips of cloth over one’s face, dancing round one another in supermarkets, injecting unknown substances into one’s body – could one hope to save oneself and granny. And we’re seeing it now, in a slightly different variant, in which lots of people – even many who ought to know better – are falling for some similarly thrilling but erroneous nonsense about lab-leaked viruses.

It’s such a sexy story that I fell for it myself. In those early days when all the papers were still dutifully trotting out World Health Organisation-approved propaganda about pangolins and bats and the apparently notorious wet market (whatever the hell that is) in Wuhan, I was already well ahead of the game. I knew, I just knew, as all the edgy, fearless seekers of truth did, that it was a lab leak wot done it. If you knew where to dig, there was a clear evidence trail to support it.

We edgy, fearless truth seekers knew all the names and facts. Dodgy Peter Daszak of the EcoHealth Alliance was in it up to the neck; so too, obviously, was the loathsomely chipper and smugly deceitful Anthony Fauci. We knew that all this crazy, Frankenvirus research had initially been conducted in Chapel Hill, North Carolina, but had been outsourced to China after President Obama changed the regulations and it became too much of a hot potato for US-based labs. And let’s not forget Ukraine – all those secret bio-research labs run on behalf of the US Deep State, but then exposed as the Russians unhelpfully overran territory such as Mariupol.

August 29, 2022

“Follow the science!”, “No, not like that!”

Chris Bray recounts his experiences when he “followed the science” over the Wuhan Coronavirus:

It’s happening again, and so is the response. It’s becoming our one persistent cultural cycle.

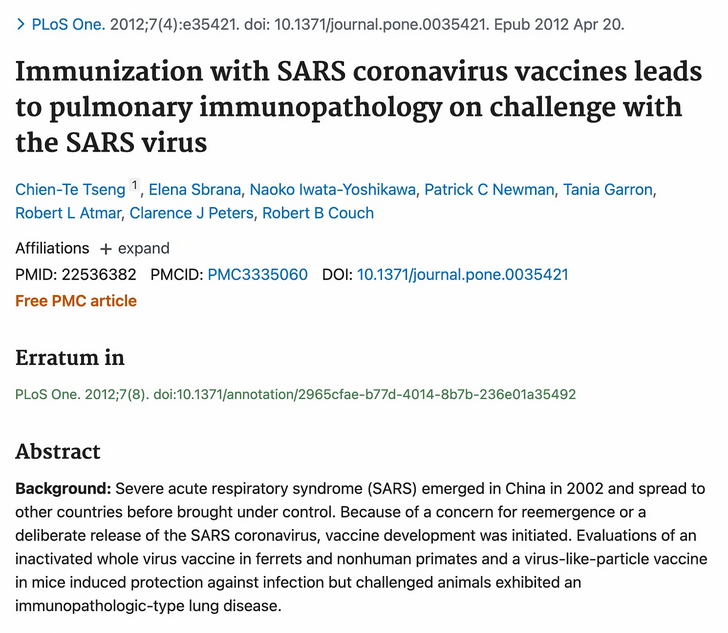

During the first availability of the Covid-19 “vaccines” — which don’t prevent transmission or infection, but we changed the meaning of that word, so shut up shut up shut up — I did what I usually do: I thought about the past to try to make sense of the present. If we’ve instantly produced safe and effective vaccines for SARS-CoV-2, I wondered, why didn’t we do the same for SARS-CoV-1? It took less than five minutes to answer that question:

So scientists did come up with a vaccine for SARS-CoV-1, but when they gave it to animals, it made the animals extremely susceptible to severe illness when they were “challenged” with the virus again — “suggesting hypersensitivity to SARS-CoV components was induced”. And so, the authors of that 2012 paper argued, “Caution in proceeding to application of a SARS-CoV vaccine in humans is indicated.”

Because I believe in science, I followed that advice, and I told my doctor that I was following that advice — and that I wasn’t terribly concerned about Covid-19 anyway, so whatever. I would be cautious about injecting a novel medical product into my body: I would wait, calmly. She assured me that there was no scientific shortcutting at all in the development of the vaccines for SARS-CoV-2, which were absolutely known to be 100% safe and effective, but she also agreed that there was nothing wrong with watching and waiting for a few months.

I meant it. At that point, I hadn’t refused the vaccines — I had just decided that I would wait for a bit to see how they played out once they’d been injected into a few billion human lab rats.

And then the shaming started. I was uninvited from a family event, and ordered to stay away — and then, after a short pause, repeatedly shamed by email as a disgusting selfish pig who made the family sick with my ignorance and selfishness. (Distant family, thankfully.) The public sphere came alive with this message, and Joe Biden let me know that his patience was wearing thin for my kind. Social media was a daily fear bath, and consumed with shaming rituals.

It was exactly that message that turned my skepticism, my preference for watching and waiting, into a flat and permanent refusal. People said they were talking about science — in a vicious flood of hyperemotional shaming language, the hysterical tone and substance of which made it clear that they weren’t talking about science at all. They were talking about their fearfulness and their weakness; they were talking about their cowardice, and about the shame they felt at finding their fear of the air wasn’t shared. The shaming made me contemptuous; it secured my commitment to resist.

So now comes a new flood of shaming messages, assuring people that mere political disagreement is a sure sign of monstrous cruelty and hate.

April 16, 2021

“Students will find in Shakespeare absolutely no moral compass”

Sky Gilbert responds more than adequately to a demand to “Cancel Shakespeare” that also appeared in The Line recently:

This was long thought to be the only portrait of William Shakespeare that had any claim to have been painted from life, until another possible life portrait, the Cobbe portrait, was revealed in 2009. The portrait is known as the “Chandos portrait” after a previous owner, James Brydges, 1st Duke of Chandos. It was the first portrait to be acquired by the National Portrait Gallery in 1856. The artist may be a painter called John Taylor who was an important member of the Painter-Stainers’ Company.

National Portrait Gallery image via Wikimedia Commons.

Allan thinks that Shakespeare’s language is difficult and old-fashioned, and that students today find analyzing the complexities of his old-fashioned rhetoric boring and irrelevant. Yes, Shakespeare essentially writes in another language (early modern English). And reading or even viewing his work can be a tough slog. Not only did he invent at least 1,700 words (some of which are now forgotten), he favoured a befuddling periodic syntax in which the subject does not appear until the end of a sentence.

But a study of Shakespeare’s rhetoric is important in 2021. There is one — and only one — exceedingly relevant idea that can be lifted from Shakespeare’s congested imagery, his complex, sometimes confusing metaphors — one jewel that can be dragged out of his ubiquitous references to Ovid and Greek myth (references which were obviously effortless for him, but for most of us, only confound). And this idea is very relevant today. Especially in the era of “alternate facts” and “fake news.”

This idea is the only one Shakespeare undoubtedly believed. I say this because he returns to it over and over. Trevor McNeely articulated this notion clearly and succinctly when he said that Shakespeare was constantly warning us the human mind “can build a perfectly satisfactory reality on thin air, and never think to question it.” Shakespeare is always speaking — in one way or another — about his suspicion that the bewitching power of rhetoric — indeed the very beauty of poetry itself — is both enchanting and dangerous.

Shakespeare lived at the nexus of a culture war. The Western world was gradually rejecting the ancient rhetorical notion that “truth is anything I can persuade you to believe in poetry” for “truth is whatever can be proved best by logic and science.” Shakespeare was fully capable of persuading us of anything (he often does). But his habit is to subsequently go back and undo what he has just said. He does this so that we might learn to fundamentally question the manipulations of philosophy and rhetoric — to question what were his very own manipulations. Shakespeare loved the beautiful hypnotizing language of poetry, but was also painfully aware that it could be dangerous as hell.

In fact, Shakespeare’s work is very dangerous for all of us. That’s why students should — and must — read it. Undergraduates today hotly debate whether The Merchant of Venice is anti-Semitic, or whether Prospero’s Caliban is a victim of colonial oppression. Education Week reported that “in 2016, students at Yale University petitioned the school to ‘decolonize’ its reading lists, including by removing its Shakespeare requirement.”

It’s true that Shakespeare is perhaps one of the oldest and whitest writers we know. (And sometimes he’s pretty sexist too — Taming of the Shrew, anyone?). But after digging systematically into Shakespeare’s work even the dullest student will discover that for every Kate bowing in obedience to her husband, there is a fierce Lucrece — not only standing up to a man, but permanently and eloquently dressing him down. (And too, the “colonialist” Prospero will prove to be just as flawed as the “indigenous” Caliban.) William Hazlitt said: Shakespeare’s mind “has no particular bias about anything” and Harold Bloom said: “his politics, like his religion, evades me, but I think he was too wary to have any.”

August 15, 2020

QotD: The worth of a human being

A lot of things happened, more than half a century ago; suddenly I’m among the shrinking number who recall this. For today’s Idlepost, I will remember an article I read in a popular science magazine, back then. I’ve forgotten both the title of the publication, and the date of the number. I can, however, say that I was in high school then.

According to this article, the worth of a human being was 98 cents. The authors showed how their figure was arrived at. They had combined current market prices for the materials in an average human frame of 130 pounds. (Details like this I remember.) A sceptic, even then, I recall noting that they excluded hat, mid-season clothing, and shoes, from their total; and that they didn’t mention whether they were citing wholesale or retail values on the flesh and chemicals. Most pointedly, while accompanying my mother to a supermarket, I checked the prices for beef, pork, and broiler chicken, choosing the lowest grades. All were over 10 cents a pound; and so I concluded that the overall price of the meat alone, per human, would exceed their estimate.

Given background inflation rates, I think the total value in 2020 may approach twenty dollars, or even twenty-five. I’d have to recheck chemical prices, to be sure. Though perhaps the total might be reduced, closer to one dollar again, for babies.

David Warren, “Virtual March for Life”, Essays in Idleness, 2020-05-14.

May 7, 2018

Study: climate change skeptics behave in a more environmentally friendly manner than believers

In common with some similar observed phenomena, people who like to signal their climate change beliefs are actually less likely to act in environmentally beneficial ways than declared climate skeptics. At Pacific Standard, Tom Jacobs details the findings of a recent study:

Do our behaviors really reflect our beliefs? New research suggests that, when it comes to climate change, the answer is no. And that goes for both skeptics and believers.

Participants in a year-long study who doubted the scientific consensus on the issue “opposed policy solutions,” but at the same time, they “were most likely to report engaging in individual-level, pro-environmental behaviors,” writes a research team led by University of Michigan psychologist Michael Hall.

Conversely, those who expressed the greatest belief in, and concern about, the warming environment “were most supportive of government climate policies, but least likely to report individual-level actions.”

Sorry, I didn’t have time to recycle — I was busy watching a documentary about the crumbling Antarctic ice shelf.

The study, published in the Journal of Environmental Psychology, followed more than 400 Americans for a full year. On seven occasions — roughly once every eight weeks — participants revealed their climate change beliefs, and their level of support for policies such as gasoline taxes and fuel economy standards.

They also noted how frequently they engaged in four environmentally friendly behaviors: recycling, using public transportation, buying “green” products, and using reusable shopping bags.

The researchers found participants broke down into three groups, which they labeled “skeptical,” “cautiously worried,” and “highly concerned.” While policy preferences of group members tracked with their beliefs, their behaviors largely did not: Skeptics reported using public transportation, buying eco-friendly products, and using reusable bags more often than those in the other two categories.

February 27, 2018

The notion of “uploading” your consciousness

Skeptic author Michael Shermer pours cold water on the dreams and hopes of Transhumanists, Cryonicists, Extropians, and Technological Singularity proponents everywhere:

It’s a myth that people live twice as long today as in centuries past. People lived into their 80s and 90s historically, just not very many of them. What modern science, technology, medicine, and public health have done is enable more of us to reach the upper ceiling of our maximum lifespan, but no one will live beyond ~120 years unless there are major breakthroughs.

We are nowhere near the genetic and cellular breakthroughs needed to break through the upper ceiling, although it is noteworthy that companies like Google’s Calico and individuals like Aubrey de Grey are working on the problem of ageing, which they treat as an engineering problem. Good. But instead of aiming for 200, 500, or 1000 years, try to solve very specific problems like cancer, Alzheimer’s and other debilitating diseases.

Transhumanists, Cryonicists, Extropianists, and Singularity proponents are pro-science and technology and I support their efforts, but extending life through technologies like mind-uploading not only cannot be accomplished anytime soon (centuries at the earliest), it can’t even do what its proponents claim: a copy of your connectome (the analogue to your genome that represents all of your memories) is just that, a copy. It is not you. This leads me to a discussion of…

The nature of the self or soul. The connectome (the scientific version of the soul) consists of all of your statically-stored memories. First, there is no fixed set of memories that represents “me” or the self, as those memories are always changing. If I were copied today, at age 63, my memories of when I was, say, 30, are not the same as they were when I was 50 or 40 or even 30 as those memories were fresh in my mind. And, you are not just your memories (your MEMself). You are also your point-of-view self (POVself), the you looking out through your eyes at the world. There is a continuity from one day to the next despite consciousness being interrupted by sleep (or general anaesthesia), but if we were to copy your connectome now through a sophisticated fMRI machine and upload it into a computer and turn it on, your POVself would not suddenly jump from your brain into the computer. It would just be a copy of you. Religions have the same problem. Your MEMself and POVself would still be dead and so a “soul” in heaven would only be a copy, not you.

Whether or not there is an afterlife, we live in this life. Therefore what we do here and now matters whether or not there is a hereafter. How can we live a meaningful and purposeful life? That’s my final chapter, ending with a perspective that our influence continues on indefinitely into the future no matter how long we live, and our species is immortal in the sense that our genes continue indefinitely into the future, making it all the more likely our species will not go extinct once we colonize the moon and Mars so that we become a multi-planetary species.

February 9, 2018

Defining bias

In Quillette, Bo Winegard explains how to define bias:

Bias is an important concept both inside and outside of academia. Despite this, it is remarkably difficult to define or to measure. And many, perhaps all, studies of it are susceptible to reasonable objections from some framework of normative reasoning or another. Nevertheless, in common discourse the term is easy enough to understand. Bias is a preference or commitment that impels a person away from impartiality. If Sally is a fervid fan of the New York Knicks and uses different criteria for assessing fouls against them than against their opponents, then we would say that she is biased.

There are many kinds of biases, and bias can penetrate the cognitive process from start to finish and anywhere between. It can lead to selective exposure, whereby people preferentially seek material that favors their preferred position, and avoid material that contradicts it; it can lead to motivated skepticism, whereby people are more critical of material that opposes their preferred position than of material that supports it; and it can lead to motivated credulity, whereby people assimilate information that supports their preferred position more easily and rapidly than information that contradicts it. Often, these biases all work together.

So, imagine Sally the average ardent progressive. She probably exposes herself chiefly to progressive magazines, news outlets, and friends; and, quite possibly, she inhabits a workplace surrounded by other progressives (selective exposure). Furthermore, when she is exposed to conservative arguments or articles, she is probably extremely critical of them. That National Review article she read this morning about abortion, for example, was insultingly obtuse and only confirmed her opinion that conservatives are cognitively challenged (motivated skepticism). Compounding this, she is equally ready to praise and absorb arguments and articles in progressive magazines (motivated credulity). Just this afternoon, for example, she read a compelling takedown of the Republican tax cuts in Mother Jones which strengthened her intuition that conservatism is an intellectual and moral dead end. (This example would work equally well with an average ardent conservative.) The result is an inevitably blinkered world view.

The strength of one’s bias is influenced by many factors, but, for simplicity, we can break these factors into three broad categories: clarity, accuracy concerns, and extraneous concerns. Clarity refers to how ambiguous a topic is. The more ambiguous, the lower the clarity and the higher the bias. So, the score of a basketball game has very high clarity, whereas an individual foul call may have very low clarity. Accuracy concerns refer to how desirous an individual is to know the truth. The higher the concern, on average, the lower the bias. If a fervid New York Knicks fan were also a referee in training who really wanted to get foul calls right, then she would probably have lower bias than the average impassioned fan. Last, extraneous concerns refer to any concerns (save accuracy concerns) that motivate a person toward a certain answer. Probably the most powerful of these are group affiliation and status, but there are many others (self-esteem et cetera).

At risk of simplification, we might say that bias can be represented by an equation such that extraneous concerns (E) minus (accuracy concerns (A) plus clarity (C)) equals bias: (E – (A + C) = B).
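
The equation quoted above can be tried out with a minimal Python sketch. The function name `bias_score`, the 0 to 10 scale, and the example scores are all hypothetical and do not come from Winegard's article; only the form B = E - (A + C) does.

```python
def bias_score(extraneous: float, accuracy: float, clarity: float) -> float:
    """Return B = E - (A + C), the form of the equation quoted above."""
    return extraneous - (accuracy + clarity)


# Hypothetical scores on an arbitrary 0-10 scale (the scale is an assumption).
# Both people are fervid fans judging the same ambiguous foul call, so
# extraneous concerns and clarity are held equal; only accuracy concerns differ.
fan = bias_score(extraneous=8, accuracy=2, clarity=1)
referee_in_training = bias_score(extraneous=8, accuracy=6, clarity=1)

print(f"fan: {fan}, referee in training: {referee_in_training}")
```

Under these made-up numbers the referee in training scores lower than the ordinary fan, which matches the quoted claim that higher accuracy concerns mean, on average, lower bias.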

May 1, 2017

“100% certainty is almost always an indication of a cult rather than any sort of actual truth”

Jay Currie looks at the reaction to a Bret Stephens climate article in the New York Times:

On the science side the greatest threats were the inadequacy of the climate models and the advent of the “hiatus”. The models entirely failed to project any circumstances in which temperature ceased to rise when CO2 continued to rise. However, the hiatus created exactly that set of conditions for what is now looking like twenty years. (Right this instant, last year’s El Nino has broken the hiatus. However, rapidly cooling post-El Nino temperatures look set to bring the hiatus back into play in the next six months to a year.)

The economic side is even worse. It turns out that renewable energy – windmills and solar – costs a fortune and is profoundly unreliable. Governments which went all in for renewables (see Ontario) found their energy prices hockey sticking and their popularity plummeting without, as it turns out, making even a slight impression on the rise of CO2 concentrations.

The economics of climate change and its “mitigation” are a shambles. And it is beginning to dawn on assorted politicians that they might have been railroaded with science which was not quite ready for prime time.

Which makes it all the more imperative for the Nuccitelli and DeSmog blogs of this world to redouble their attacks on even mildly sceptical positions. Had the alarmists been less certain, their edifice could have easily withstood a recalibration of the science and a recalculation of the cost/benefits. But they weren’t. They went all in for a position which claimed to know for certain that CO2 was driving world temperature and that there was no other possible cause for an increase or decrease in that temperature.

The problem with that position is that it was premature and very brittle. As lower sensitivity estimates emerge, as other, non-CO2 driven, temperature controls are discovered, consensus climate science becomes more and more embattled. What had looked like a monopoly on political discourse and media comment begins to fray. The advent of Trump and a merry band of climate change skeptics in the regulatory agencies and in Congress has pretty much killed any forward motion for the climate alarmists in the US. And the US is where this battle will be won or lost. However, the sheer cost of so-called “carbon reduction” schemes in the UK, Germany and the rest of Europe has been staggering and has shown next to no actual benefit, so scepticism is rising there too. China has embarked on both an embrace of climate change abatement and the construction of dozens of coal-fired electrical generation plants every year.