April 10, 2014

QotD: Confirmation bias for thee but not for me

The last few days have provided both a good laugh and some food for thought on the important question of confirmation bias — people’s tendency to favor information that confirms their pre-existing views and ignore information that contradicts those views. It’s a subject well worth some reflection.

The laugh came from a familiar source. Without (it seems) a hint of irony, Paul Krugman argued on Monday that everyone is subject to confirmation bias except for people who agree with him. He was responding to this essay Ezra Klein wrote for his newly launched site, Vox.com, which took up the question of confirmation bias and the challenges it poses to democratic politics. Krugman acknowledged the research that Klein cites but then insisted that his own experience suggests it is actually mostly people he disagrees with who tend to ignore evidence and research that contradicts what they want to believe, while people who share his own views are more open-minded, skeptical, and evidence driven. I don’t know when I’ve seen a neater real-world example of an argument that disproves itself. Good times.

Yuval Levin, “Confirmation Bias and Its Limits”, National Review, 2014-04-09

October 15, 2013

Lies we tell to pollsters

David Harsanyi wishes the nonsense we tell pollsters were a bit closer to the truth, at least in some cases:

A recent Rasmussen poll found that one in three Americans would rather win a Nobel Prize than an Oscar, Emmy or Grammy.

Though there’s no way to disprove this peculiar finding, I’m rather confident that it’s complete baloney. The average American probably can’t name more than one Nobel Prize winner — if that. Even if they could, it’s unlikely many would choose a life in physics or “peace” over being a celebrated actor, musician or television star. Put it this way, any man who tells you he wants the life of Nobel Prize-winning Ahmet Uzumcu, Director General of the Organization for the Prohibition of Chemical Weapons, instead of George Clooney is lying. And that includes Ahmet Uzumcu.

Polls might have been precise in forecasting recent elections (though, 2012 pollsters only received an average “C+ grade” in a poll conducted by Pew Research Center; we’re waiting on a poll that tells us what to think about polls that poll polls), but it’s getting difficult to believe much of anything else. Beyond sampling biases or phraseology biases, many recent polls prove that Americans will tell pollsters what they think they think, but not how they intend to act. Part of the problem is social desirability bias — the tendency to give answers that they believe will be viewed favorably by others. That might explain why someone would tell a pollster that he would rather win a Nobel Prize than a Grammy. There is also confirmation bias — the tendency of people to say things that confirm their beliefs or theories. Whatever the case, voters are fooling themselves in various ways. And when it comes to politics, they’re also giving small-government types like myself false hope.

Over the last few months, we seem to have been added to some sort of polling telephone list, as we’ve had dozens of calls from various institutions conducting “important public research” and insisting that we have to take part in their surveys. It’s quite remarkable how angry they get when I say we don’t want to take part. They go from vaguely pleasant at the start of the call to downright authoritarian by the time I hang up the phone … how dare I not want to give them the data they’re asking for? They’ve collectively become more irritating than the calls from “Bob” at “Windows Technical Support”.

January 30, 2013

QotD: Confirmation-Bias Theatre Of The Absurd

Unlike Andrew Coyne and Pierre Karl Péladeau, I am no expert on CRTC television policy. I couldn’t tell you the difference between a “must-carry” Class A license, a Class B carry-at-will, and a class X concealed-carry. But I do know a little about what makes for good journalism. And on that basis, I’d hate to see Sun News get taken off the air for want of revenue.

Sun’s enemies accuse the network’s hosts of being a bunch of haters. And it’s hard to deny the charge. Among the people they hate: Occupy protesters, fake hunger strikers and sanctimonious left-wing activists.

And Omar Khadr. Wow, do they hate Omar Khadr.

We know this because Sun News TV segments tend to go light on actual news, and heavy on middle-aged white guys shouting about people they don’t like. Sometimes, they sit around their Toronto studio interviewing each other. It’s a sort of performance art that might well be dubbed — by the surprisingly large number of left-wing Toronto hipsters who watch the channel ironically — as Confirmation-Bias Theatre Of The Absurd.

Jonathan Kay, “David Suzuki is poster boy for why Canada needs Sun’s brand of journalism”, National Post, 2013-01-29

July 30, 2012

If your source data is flawed, your conclusions are useless

James Delingpole on the recent paper from Anthony Watts and his co-authors:

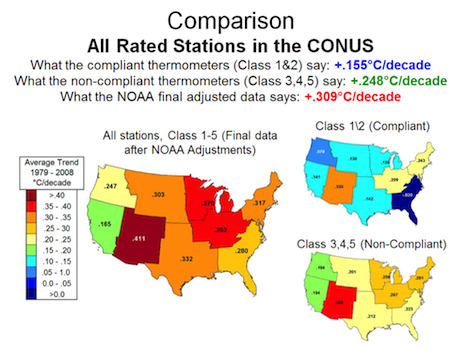

Have a look at this chart. It tells you pretty much all you need to know about the much-anticipated scoop by Anthony Watts of Watts Up With That?

What it means, in a nutshell, is that the National Oceanic and Atmospheric Administration (NOAA), the US government body in charge of America’s temperature record, has systematically exaggerated the extent of late 20th century global warming. In fact, it has doubled it.

Is this a case of deliberate fraud by Warmist scientists hell bent on keeping their funding gravy train rolling? Well, after what we saw in Climategate anything is possible. (I mean it’s not like NOAA is run by hard-left eco activists, is it?) But I think more likely it is a case of confirmation bias. The Warmists who comprise the climate scientist establishment spend so much time communicating with other warmists and so little time paying attention to the views of dissenting scientists such as Henrik Svensmark — or Fred Singer or Richard Lindzen or indeed Anthony Watts — that it simply hasn’t occurred to them that their temperature records need adjusting downwards not upwards.

What Watts has conclusively demonstrated is that most of the weather stations in the US are so poorly sited that their temperature data is unreliable. Around 90 per cent have had their temperature readings skewed by the Urban Heat Island effect. While he has suspected this for some time what he has been unable to do until his latest, landmark paper (co-authored with Evan Jones of New York, Stephen McIntyre of Toronto, Canada, and Dr. John R. Christy from the Department of Atmospheric Science, University of Alabama, Huntsville) is to put precise figures on the degree of distortion involved.

March 10, 2012

May 6, 2011

“Reasoning is a non-violent weapon given to us by evolution to help us get our way”

Remember that old saw about it being impossible to reason someone out of an opinion they were never reasoned into? Ian Leslie looks at a new paper about the function of reasoning:

This is a widespread habit, of course, and one we might notice in ourselves in other contexts. Whether it’s relationships or politics or the workplace, we have a tendency to start off with what we want and then reason backwards towards it; to cloak our true motivations or prejudices in the guise of reason. It’s been shown again and again in studies that we have a very strong ‘confirmation bias’; once we have an idea about the world we like (Obama is un-American, my girlfriend is cheating on me, the world is or isn’t getting warmer) we pick up on evidence we think supports our hypothesis and ruthlessly disregard evidence that undermines it, even without realising we’re doing so.

[. . .]

We tend to think of reason as an abstract, truth-seeking method that gets contaminated by our desires and motivations. But the paper argues it’s the other way around — that reasoning is a non-violent weapon given to us by evolution to help us get our way. Its capacity to help us get to the truth about things is a by-product, albeit a hugely important one. In many ways, reasoning does as much to screw us up as it does to help us. The paper’s authors, Dan Sperber and Hugo Mercier, put it like this:

The evidence reviewed here shows not only that reasoning falls quite short of reliably delivering rational beliefs and rational decisions. It may even be, in a variety of cases, detrimental to rationality. Reasoning can lead to poor outcomes, not because humans are bad at it, but because they systematically strive for arguments that justify their beliefs or their actions. This explains the confirmation bias, motivated reasoning, and reason-based choice, among other things.

H/T to Tim Harford for the link.