At Wired, Brendan I. Koerner talks about the odd circumstances that led to H. Ross Perot being instrumental in saving an iconic piece of computer history:

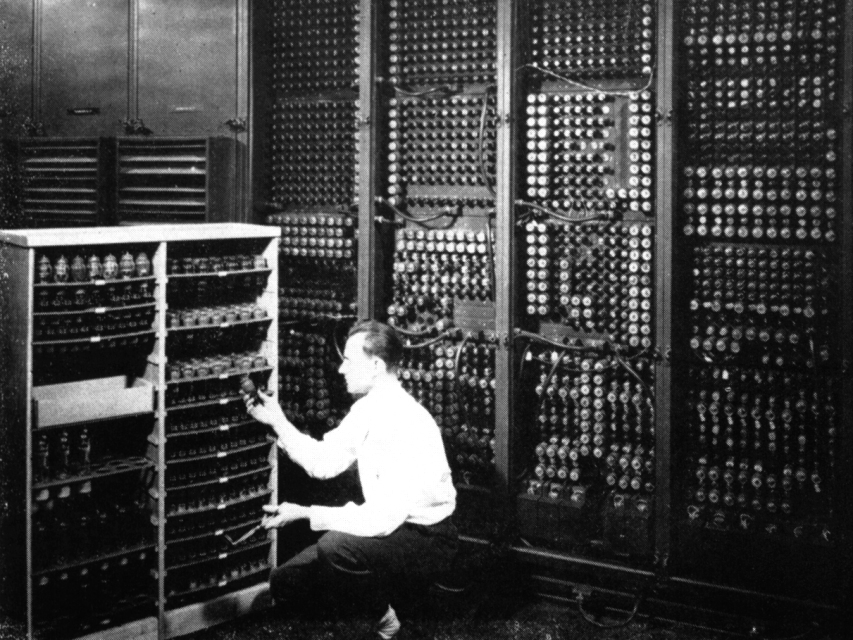

Eccentric billionaires are tough to impress, so their minions must always think big when handed vague assignments. Ross Perot’s staffers did just that in 2006, when their boss declared that he wanted to decorate his Plano, Texas, headquarters with relics from computing history. Aware that a few measly Apple I’s and Altair 8800’s wouldn’t be enough to satisfy a former presidential candidate, Perot’s people decided to acquire a more singular prize: a big chunk of ENIAC, the “Electronic Numerical Integrator And Computer.” The ENIAC was a 27-ton, 1,800-square-foot bundle of vacuum tubes and diodes that was arguably the world’s first true computer. The hardware that Perot’s team diligently unearthed and lovingly refurbished is now accessible to the general public for the first time, back at the same Army base where it almost rotted into oblivion.

ENIAC was conceived in the thick of World War II, as a tool to help artillerymen calculate the trajectories of shells. Though construction began a year before D-Day, the computer wasn’t activated until November 1945, by which time the U.S. Army’s guns had fallen silent. But the military still found plenty of use for ENIAC as the Cold War began — the machine’s 17,468 vacuum tubes were put to work by the developers of the first hydrogen bomb, who needed a way to test the feasibility of their early designs. The scientists at Los Alamos later declared that they could never have achieved success without ENIAC’s awesome computing might: the machine could execute 5,000 instructions per second, a capability that made it a thousand times faster than the electromechanical calculators of the day. (An iPhone 6, by contrast, can zip through 25 billion instructions per second.)

When the Army declared ENIAC obsolete in 1955, however, the historic invention was treated with scant respect: its 40 panels, each of which weighed an average of 858 pounds, were divvied up and strewn about with little care. Some of the hardware landed in the hands of folks who appreciated its significance — the engineer Arthur Burks, for example, donated his panel to the University of Michigan, and the Smithsonian managed to snag a couple of panels for its collection, too. But as Libby Craft, Perot’s director of special projects, found out to her chagrin, much of ENIAC vanished into disorganized warehouses, a bit like the Ark of the Covenant at the end of Raiders of the Lost Ark.

Lost in the bureaucracy