I’d never heard this term before, but it’s an excellent description of the problem:

Lassie was a fictional dog. In all her literary, film, and TV adaptations, the most common recurring plot device was some character getting in trouble (in the print original, two brothers lost in a snowstorm; in popular memory “Little Timmy fell in a well”, though this never actually happened in the movies or TV series) and Lassie running home to bark at other humans to get them to follow her to the rescue.

In software, a “Lassie error” is a diagnostic message that barks “error” while being comprehensively unhelpful about what is actually going on. The term seems to have first surfaced on Twitter in early 2020; there is evidence in the thread of at least two independent inventions, and I would be unsurprised to learn of others.

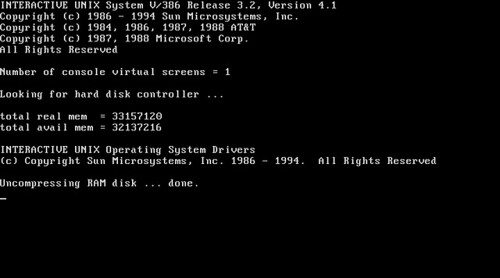

In the Unix world, a particularly notorious Lassie error is what the ancient line-oriented Unix editor “ed” does on a command error. It says “?” and waits for another command, which is especially confusing since ed doesn’t show a command prompt by default. Ken Thompson had an almost unique excuse for extreme terseness, as ed was written in 1973 to run on a computer orders of magnitude less capable than the embedded processor in your keyboard.

Herewith the burden of my rant: You are not Ken Thompson, 1973 is a long time gone, and all the cost gradients around error reporting have changed. If you ever hear this term used about one of your error messages, you have screwed up. You should immediately apologize to the person who used it and correct your mistake.

Part of your responsibility as a software engineer, if you take your craft seriously, is to minimize the costs that your own mistakes or failures to anticipate exceptional conditions inflict on others. Users have enough friction costs when software works perfectly; when it fails, you are piling insult on that injury if your Lassie error leaves them without a clue about how to recover.
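
To make the contrast concrete, here is a minimal sketch in Python of the same function written both ways. Everything in it is hypothetical and for illustration only: `ConfigError`, `load_config_lassie`, and `load_config` are names I made up, and the JSON config file is just an assumed scenario. The first version barks; the second says what it was trying to do, what went wrong, and what you might do about it.

```python
import json


class ConfigError(Exception):
    """Hypothetical exception type, raised when the config file is unusable."""


def load_config_lassie(path):
    # The Lassie version: every possible failure collapses into the same bark.
    try:
        with open(path, encoding="utf-8") as f:
            return json.load(f)
    except Exception:
        raise ConfigError("error") from None


def load_config(path):
    # Same work, but each failure names the file, the cause, and a way out.
    try:
        with open(path, encoding="utf-8") as f:
            return json.load(f)
    except FileNotFoundError:
        raise ConfigError(
            f"cannot read config file {path!r}: file does not exist; "
            "create it or pass a different path"
        ) from None
    except PermissionError:
        raise ConfigError(
            f"cannot read config file {path!r}: permission denied; "
            "check the file's ownership and mode"
        ) from None
    except json.JSONDecodeError as e:
        # JSONDecodeError carries the location of the problem; pass it on.
        raise ConfigError(
            f"config file {path!r} is not valid JSON at line {e.lineno}, "
            f"column {e.colno}: {e.msg}"
        ) from e
```

The second version costs a dozen extra lines. That is the changed cost gradient in miniature: the information was already in hand at the point of failure, and the only question is whether you throw it away before the user sees it.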