August 31, 2023

The Double Agent Saving London From the V-1 – WW2 Documentary Special

World War Two

Published 30 Aug 2023

The Germans are assaulting London with waves of V-1 flying bombs. But Eddie Chapman, a career criminal, serial womaniser, and masterful double agent working for MI5’s Double Cross is fighting a secret battle to beat the bombs. When he’s done with that, he pulls the wool over the Reich’s eyes to help Britain beat the Kriegsmarine. This is Agent Zigzag.

August 19, 2023

One Day in August – Dieppe Anniversary Battlefield Event (Operation Jubilee)

WW2TV

Published 19 Aug 2021

One Day in August – Dieppe Anniversary Battlefield Event (Operation Jubilee) With David O’Keefe, Part 3 – Anniversary Battlefield Event.

David O’Keefe joins us for a third and final show about Operation Jubilee to explain how the plan unravelled and how the nearly 1,000 British, Canadian and American commandos died. We will use aerial footage, HD footage taken in Dieppe last week and maps, photos, and graphics.

In Part 1 David O’Keefe talked about the real reason for the raid on Dieppe in August 1942. In Part 2 David talked about the plan for Operation Jubilee: the intentions of the raid and how it was supposed to unfold.

August 18, 2023

One Day in August – Dieppe – Part 2 – The Plan

WW2TV

Published 17 Jan 2021

Part 2 – The Plan With David O’Keefe

David O’Keefe joins us again. In Part 1 he talked about the real reason for the raid on Dieppe in August 1942. In Part 2 we talk about the plan for Operation Jubilee and David will share his presentation about the intentions of the raid and how it was supposed to unfold.

A final show sometime in the summer will come live from Dieppe to explain how the plan unravelled and how the nearly 1,000 British, Canadian and American commandos died.

August 17, 2023

Dieppe – One Day in August – Ian Fleming, Enigma and the Deadly Raid – Part 1

WW2TV

Published 29 Nov 2020

In less than six hours in August 1942, nearly 1,000 British, Canadian and American commandos died in the French port of Dieppe in an operation that for decades seemed to have no real purpose. Was it a dry-run for D-Day, or perhaps a gesture by the Allies to placate Stalin’s impatience for a second front in the west?

Canadian historian David O’Keefe uses hitherto classified intelligence archives to prove that this catastrophic and apparently futile raid was in fact a mission, set up by Ian Fleming of British Naval Intelligence as part of a “pinch” policy designed to capture material relating to the four-rotor Enigma machine that would permit codebreakers like Alan Turing at Bletchley Park to turn the tide of the Second World War.

In this first show we will look at how the raid has been written about in previous books, the research David undertook and, just as importantly, why he did it. In a future show, we will look at filming in Dieppe itself and explain the sequence of events.

June 4, 2023

The Allies are Driving for Rome – WW2 – Week 249 – June 3, 1944

World War Two

Published 3 Jun 2023

The Allies head north in Italy after the fall of Monte Cassino last week; the Japanese head south in China in a new phase of their offensive; and the Soviets and the Western Allies make ever more concrete plans for their huge offensives, to go off very soon.

April 23, 2023

The Biggest Offensive in Japanese History – WW2 – Week 243 – April 22, 1944

World War Two

Published 22 Apr 2023

Japan launches Operation Ichigo in China, their largest offensive of the war … or ever, but over in India things are not going well for the Japanese at Imphal and Kohima. The Allies also launch attacks on the Japanese at Hollandia, while over in the Crimea, the German defenses at Sevastopol are cracking under Soviet pressure.

November 12, 2022

Long distance communication in the pre-modern era

In the latest Age of Invention newsletter, Anton Howes considers why the telegraph took so long to be invented and describes some of the precursors that filled that niche over the centuries:

… I’ve also long wondered the same about telegraphs — not the electric ones, but all the other long-distance signalling systems that used mechanical arms, waved flags, and flashed lights, which suddenly only began to really take off in the eighteenth century, and especially in the 1790s.

What makes the non-electric telegraph all the more interesting is that in its most basic forms it actually was used all over the world since ancient times. Yet the more sophisticated versions kept on being invented and then forgotten. It’s an interesting case because it shows just how many of the budding systems of the 1790s really were long behind their time — many had actually already been invented before.

The oldest and most widely-used telegraph system for transmitting over very long distances was akin to Gondor’s lighting of the beacons, capable only of communicating a single, pre-agreed message (with flames often more visible at night, and smoke during the day). Such chains of beacons were known to the Mari kingdom of modern-day Syria in the eighteenth century BC, and to the Neo-Assyrian emperor Ashurbanipal in the seventh century BC. They feature in the Old Testament and the works of Herodotus, Aeschylus, and Thucydides, with archaeological finds hinting at even more. They remained popular well beyond the middle ages, for example being used in England in 1588 to warn of the arrival of the Spanish Armada. And they were seemingly invented independently all over the world. Throughout the sixteenth century, Spanish conquistadors again and again reported simple smoke signals being used by the peoples they invaded throughout the Americas.

But what we’re really interested in here are systems that could transmit more complex messages, some of which may have already been in use by as early as the fifth century BC. During the Peloponnesian War, a garrison at Plataea apparently managed to confuse the torch signals of the attacking Thebans by waving torches of their own — strongly suggesting that the Thebans were doing more than just sending single pre-agreed messages.

About a century later, Aeneas Tacticus also wrote of how ordinary fire signals could be augmented by using identical water clocks — essentially just pots with taps at the bottom — which would lose their water at the same rate and would have different messages assigned to different water levels. By waving torches to signal when to start and stop the water clocks (Ready? Yes. Now start … stop!), the communicator could choose from a variety of messages rather than being limited to one. A very similar system was reportedly used by the Carthaginians during their conquests of Sicily, to send messages all the way back to North Africa requesting different kinds of supplies and reinforcement, choosing from a range of predetermined signals like “transports”, “warships”, “money”.
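The water-clock scheme can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the drain rate and the spacing between level marks are invented numbers, and the three messages are borrowed from the Carthaginian examples quoted above. Both stations hold identical pots, so the time between the "start" and "stop" torch signals is all that needs to travel.

```python
# Illustrative model of paired water clocks. All numbers are hypothetical.
MESSAGES = ["transports", "warships", "money"]  # one message per level mark
LEVEL_STEP_CM = 2.0          # assumed spacing between marks on the pot
DRAIN_RATE_CM_PER_S = 0.1    # assumed drain rate, identical at both stations


def sender_drain_time(message):
    """Seconds the sender lets the water run before signalling 'stop'."""
    mark = MESSAGES.index(message) + 1
    return mark * LEVEL_STEP_CM / DRAIN_RATE_CM_PER_S


def receiver_read(elapsed_seconds):
    """Message at the mark nearest to the receiver's own water level."""
    level_drop = elapsed_seconds * DRAIN_RATE_CM_PER_S
    mark = round(level_drop / LEVEL_STEP_CM)
    if not 1 <= mark <= len(MESSAGES):
        raise ValueError("water level does not match any agreed message")
    return MESSAGES[mark - 1]


if __name__ == "__main__":
    seconds = sender_drain_time("warships")
    print(seconds, receiver_read(seconds))
```

Rounding to the nearest mark reflects the practical point that the marks must be spaced widely enough to absorb small timing errors between the two stations.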

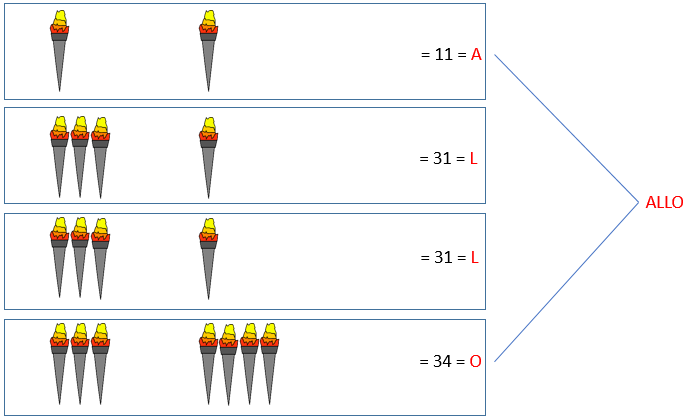

Diagram of a fire signal using the Polybius cipher.

Created by Jonathan Martineau via Wikimedia Commons.

By the second century BC, a new method had appeared. We only know about it via Polybius, who claimed to have improved on an even older method that he attributed to a Cleoxenus and a Democleitus. The system that Polybius described allowed for the spelling out of more specific, detailed messages. It used ten torches, with five on the left and five on the right. The number of torches raised on the left indicated which row to consult on a pre-agreed tablet of letters, while the number of torches raised on the right indicated the column. The method used a lot of torches, which would have to be quite spread out to remain distinct over very long distances. So it must have been quite labour-intensive. But, crucially, it allowed for messages to be spelled out letter by letter, and quickly.
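As a rough sketch of the row-and-column idea, the Python below maps each letter to a pair (torches on the left, torches on the right). The tablet is an assumption made for illustration: it uses the Latin alphabet with J folded into I to fit a 5×5 grid, whereas Polybius's own tablet would have held Greek letters.

```python
# Hypothetical 5x5 tablet (Latin letters, J merged with I); not Polybius's
# original Greek layout.
TABLET = ["ABCDE", "FGHIK", "LMNOP", "QRSTU", "VWXYZ"]


def polybius_encode(message):
    """Return (left_torches, right_torches) pairs, one per letter."""
    signals = []
    for ch in message.upper().replace("J", "I"):
        for row, letters in enumerate(TABLET, start=1):
            col = letters.find(ch)
            if col != -1:
                signals.append((row, col + 1))
                break  # characters not on the tablet are simply skipped
    return signals


def polybius_decode(signals):
    """Look each (row, column) pair up on the shared tablet."""
    return "".join(TABLET[left - 1][right - 1] for left, right in signals)


if __name__ == "__main__":
    pairs = polybius_encode("help")
    print(pairs)                   # [(2, 3), (1, 5), (3, 1), (3, 5)]
    print(polybius_decode(pairs))  # HELP
```

Each letter costs at most ten torches but only one display, which is why the method could be quick despite being labour-intensive.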

Three centuries later, the Romans were seemingly still using a much faster and simpler version of Polybius’s system, almost verging on a Morse-like code. The signalling area now had a left, right, and middle. But instead of signalling a letter by showing a certain number of torches in each field all at once, the senders waved the torches a certain number of times — up to eight times in each field, thereby dividing the alphabet into three chunks. One wave on the left thus signalled an A, twice on the left a B, once in the middle an I, twice in the middle a K, and so on.
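The wave-counting variant can be sketched the same way. The passage only gives four mappings (A, B, I, K), so the rest of the table here is an assumption: a 23-letter classical Latin alphabet without J, U, or W, split into consecutive chunks of up to eight letters for the left, middle, and right fields.

```python
# Assumed 23-letter classical Latin alphabet; only A, B, I and K are
# confirmed by the description above.
ALPHABET = "ABCDEFGHIKLMNOPQRSTVXYZ"
FIELDS = ("left", "middle", "right")
CHUNK = 8  # at most eight waves in any one field


def wave_encode(message):
    """Return (field, number_of_waves) pairs, one per letter."""
    signals = []
    for ch in message.upper():
        index = ALPHABET.find(ch)
        if index == -1:
            continue  # skip anything outside the assumed alphabet
        field, waves = divmod(index, CHUNK)
        signals.append((FIELDS[field], waves + 1))
    return signals


def wave_decode(signals):
    """Invert wave_encode using the same assumed alphabet."""
    return "".join(
        ALPHABET[FIELDS.index(field) * CHUNK + waves - 1]
        for field, waves in signals
    )


if __name__ == "__main__":
    print(wave_encode("ABIK"))
    # [('left', 1), ('left', 2), ('middle', 1), ('middle', 2)]
```

Compared with the ten-torch display, this needs only one torch per field, trading equipment for a little more time per letter.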

By the height of the Roman Empire, fire signals had thus been adapted to rapidly transmit complex messages over long distances. But in the centuries that followed, these more sophisticated techniques seem to have disappeared. The technology appears to have regressed.

July 28, 2022

The Posh Brits Betraying Their Country – WW2 – Spies & Ties 20

World War Two

Published 27 Jul 2022

Being allies does little to discourage Moscow from recruiting double agents in the British establishment. The most famous of them all, Kim Philby and the Cambridge Five, penetrate deep into MI5, MI6, and Bletchley Park. With friends like the NKVD, who needs enemies?

June 1, 2022

How To Kill A U-Boat – WW2 Special

World War Two

Published 31 May 2022

How to kill a U-Boat? The threat of the elusive and nearly undetectable submarines had been on the mind of every Allied naval planner since the Great War. As the Kriegsmarine once more unleashed its wolfpacks to the high seas, it became a race against time to find a way to stop the deadly stalkers from beneath the surface.

May 29, 2022

Black May, Nazi Subs Defeated – WW2 – 196 – May 28, 1943

World War Two

Published 28 May 2022

German Grand Admiral Karl Dönitz orders the U-Boats to leave the Atlantic this week; the losses lately have just been too great for their patrols to continue there. There is active fighting in China, the Aleutians, and the Kuban, and there are special weapons tests in the skies over Germany.

May 25, 2022

The Spy Game That Killed Yamamoto – WW2 – Spies & Ties 17

World War Two

Published 24 May 2022

We’ve already seen the power of signals intelligence. Churchill loves being fed information from MI6’s Ultra. Now it brings a vengeance for his American allies. They manage to bag the scourge of Pearl Harbor, C-in-C of the Imperial Japanese Navy, Admiral Isoroku Yamamoto.

April 24, 2022

Ladies and Gentlemen, We Got Him – Yamamoto – WW2 – 191 – April 23, 1943

World War Two

Published 23 Apr 2022

The mastermind of Pearl Harbor meets his fate this week in the Solomons, as do a great many Italian airmen and sailors in the Mediterranean in the Palm Sunday Massacre trying to supply the desperate Axis forces in Tunisia.

April 20, 2022

The Unbreakable ROCKEX: Canada’s answer to the Enigma machine

Polyus Studios

Published 30 Dec 2021

Well before digital encryption and VPNs there was Rockex, Canada’s unbreakable cipher machine that rivaled the German Enigma in its day. Although completely hidden from the public, Canada has played a noticeable role in the history of espionage. During the Cold War Canadian cipher machines worked alongside those of her allies to keep communications secure and helped to preserve the peace.

Check out cryptomuseum.com for more information about Rockex and to find the source of most of the pictures of the device I used.

March 20, 2022

Kharkov Falls Once Again – WW2 – 186 – March 19, 1943

World War Two

Published 19 Mar 2022

The British are attacking the Mareth Line in North Africa while the Americans hit the Axis flank, but the Allies are withdrawing in Burma. It’s the Germans who are pulling back in the USSR, though, and there is another attempt from within German command to assassinate Adolf Hitler.

March 6, 2022

Another Naval Disaster for Japan – WW2 – 184 – March 5, 1943

World War Two

Published 5 Mar 2022

The Japanese again fail to reinforce New Guinea, losing many transport ships, and their forces there are ever more isolated. In Tunisia the Axis lose a bunch of new Tiger tanks, but in the Soviet Union it is the Axis forces that are on the offensive as Erich von Manstein’s offensive continues.
